Current commit

9163a54a

159 files changed, with 11675 insertions and 4573 deletions.

Changed files, with each file's change count in parentheses:

- README.md (21)
- docs/Background-Jupyter.md (9)
- docs/Getting-Started-with-Balance-Ball.md (17)
- docs/Learning-Environment-Design-Brains.md (2)
- docs/Learning-Environment-Examples.md (12)
- docs/Limitations-and-Common-Issues.md (52)
- docs/ML-Agents-Overview.md (6)
- docs/Readme.md (3)
- docs/Using-Docker.md (32)
- docs/images/ml-agents-ODD.png (215)
- docs/images/wall.png (865)
- python/Basics.ipynb (54)
- python/tests/test_bc.py (4)
- python/tests/test_ppo.py (4)
- python/tests/test_unityagents.py (2)
- python/tests/test_unitytrainers.py (2)
- python/trainer_config.yaml (69)
- python/unityagents/environment.py (10)
- python/unitytrainers/models.py (13)
- python/unitytrainers/ppo/models.py (6)
- python/unitytrainers/ppo/trainer.py (6)
- unity-environment/Assets/ML-Agents/Examples/3DBall/Scripts/Ball3DDecision.cs (29)
- unity-environment/Assets/ML-Agents/Examples/Banana/BananaImitation.unity (942)
- unity-environment/Assets/ML-Agents/Examples/Banana/BananaRL.unity (964)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/BANANA.prefab (4)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/StudentAgent.prefab (36)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/TeacherAgent.prefab (40)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/BADBANANA.prefab (4)
- unity-environment/Assets/ML-Agents/Examples/Banana/Scripts/BananaAgent.cs (74)
- unity-environment/Assets/ML-Agents/Examples/Basic/Scripts/BasicDecision.cs (22)
- unity-environment/Assets/ML-Agents/Examples/Crawler/Crawler.unity (219)
- unity-environment/Assets/ML-Agents/Examples/Crawler/TFModels/crawler.bytes (964)
- unity-environment/Assets/ML-Agents/Examples/Hallway/Prefabs/HallwayArea.prefab (41)
- unity-environment/Assets/ML-Agents/Examples/Hallway/Scenes/Hallway.unity (884)
- unity-environment/Assets/ML-Agents/Examples/Hallway/Scripts/HallwayAgent.cs (198)
- unity-environment/Assets/ML-Agents/Examples/Hallway/TFModels/Hallway.bytes (927)
- unity-environment/Assets/ML-Agents/Examples/Hallway/TFModels/Hallway.bytes.meta (5)
- unity-environment/Assets/ML-Agents/Examples/PushBlock/Materials/groundPushblock.mat (2)
- unity-environment/Assets/ML-Agents/Examples/PushBlock/Prefabs/PushBlockArea.prefab (108)
- unity-environment/Assets/ML-Agents/Examples/PushBlock/Scenes/PushBlock.unity (180)
- unity-environment/Assets/ML-Agents/Examples/PushBlock/Scripts/PushAgentBasic.cs (150)
- unity-environment/Assets/ML-Agents/Examples/PushBlock/TFModels/PushBlock.bytes (994)
- unity-environment/Assets/ML-Agents/Examples/Reacher/Materials/Materials/checker1.mat (2)
- unity-environment/Assets/ML-Agents/Examples/Soccer/Prefabs/SoccerBall/Prefabs/SoccerBall.prefab (4)
- unity-environment/Assets/ML-Agents/Examples/Soccer/Scenes/SoccerTwos.unity (67)
- unity-environment/Assets/ML-Agents/Examples/Soccer/Scripts/AgentSoccer.cs (147)
- unity-environment/Assets/ML-Agents/Examples/Template/Scripts/TemplateDecision.cs (19)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Scripts/RandomDecision.cs (31)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/SuccessGround.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/Obstacle.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/Goal.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/FailGround.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/Block.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/Ball.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/MaterialsGrid/pitMaterial.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/MaterialsGrid/goalMaterial.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/MaterialsGrid/agentMaterial.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/MaterialsBall/Materials/logo2.mat (2)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Materials/MaterialsBall/Materials/logo1.mat (2)
- unity-environment/Assets/ML-Agents/Scripts/Brain.cs (2)
- unity-environment/Assets/ML-Agents/Scripts/Decision.cs (71)
- unity-environment/Assets/ML-Agents/Scripts/ExternalCommunicator.cs (4)
- unity-environment/Assets/ML-Agents/Scripts/Monitor.cs (160)
- unity-environment/ProjectSettings/TagManager.asset (6)
- docs/Feature-On-Demand-Decisions.md (39)
- docs/Migrating-v0.3.md (30)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/RLAgent.prefab (1001)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/RLArea.prefab (1001)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/RLArea.prefab.meta (8)
- unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/TeachingArea.prefab (1001)
- unity-environment/Assets/ML-Agents/Examples/Banana/TFModels/BananaRL.bytes (1001)
- unity-environment/Assets/ML-Agents/Examples/Banana/TFModels/BananaRL.bytes.meta (7)
- unity-environment/Assets/ML-Agents/Examples/WallJump.meta (9)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Scripts/RayPerception.cs (80)
- unity-environment/Assets/ML-Agents/Examples/SharedAssets/Scripts/RayPerception.cs.meta (11)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Material.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Material/spawnVolumeMaterial.mat (77)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Material/spawnVolumeMaterial.mat.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Material/wallMaterial.mat (77)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Material/wallMaterial.mat.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Prefabs.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Prefabs/WallJumpArea.prefab (700)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Prefabs/WallJumpArea.prefab.meta (9)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scenes.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scenes/WallJump.unity (1001)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scenes/WallJump.unity.meta (9)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scripts.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scripts/WallJumpAcademy.cs (28)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scripts/WallJumpAcademy.cs.meta (13)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scripts/WallJumpAgent.cs (327)
- unity-environment/Assets/ML-Agents/Examples/WallJump/Scripts/WallJumpAgent.cs.meta (13)
- unity-environment/Assets/ML-Agents/Examples/WallJump/TFModels.meta (10)
- unity-environment/Assets/ML-Agents/Examples/WallJump/TFModels/WallJump.bytes (1001)
- unity-environment/Assets/ML-Agents/Examples/WallJump/TFModels/WallJump.bytes.meta (9)

unity-environment/Assets/ML-Agents/Examples/Banana/BananaImitation.unity (942)

The diff for this file is too large to display.

unity-environment/Assets/ML-Agents/Examples/Banana/BananaRL.unity (964)

The diff for this file is too large to display.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Collections.Generic;

public float[] Decide(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory)
public float[] Decide(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory)
return new float[1]{ 1f };

return new float[1] { 1f };
public List<float> MakeMemory(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory)
public List<float> MakeMemory(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory)

}
}
```

unity-environment/Assets/ML-Agents/Examples/Crawler/TFModels/crawler.bytes (964)

The diff for this file is too large to display.

unity-environment/Assets/ML-Agents/Examples/Hallway/Scenes/Hallway.unity (884)

The diff for this file is too large to display.

unity-environment/Assets/ML-Agents/Examples/Hallway/TFModels/Hallway.bytes (927)

The diff for this file is too large to display.

```yaml
fileFormatVersion: 2
guid: 8aa65be485d2a408291f32ff56a9074e
guid: 207e5dbbeeca8431d8f57fd1c96280af
timeCreated: 1520904091
licenseType: Free
externalObjects: {}
userData:
assetBundleName:
assetBundleVariant:
```

unity-environment/Assets/ML-Agents/Examples/PushBlock/TFModels/PushBlock.bytes (994)

The diff for this file is too large to display.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Collections.Generic;
public float[] Decide(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory)
public float[] Decide(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory)

public List<float> MakeMemory(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory)
public List<float> MakeMemory(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory)

}
}
```

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Collections.Generic;
/// Generic functions for Decision Interface
/// <summary>
/// Interface for implementing the behavior of an Agent that uses a Heuristic
/// Brain. The behavior of an Agent in this case is fully decided using the
/// implementation of these methods and no training or inference takes place.
/// Currently, the Heuristic Brain does not support text observations and actions.
/// </summary>
/// \brief Implement this method to define the logic of decision making
/// for the CoreBrainHeuristic
/** Given the information about the agent, return a vector of actions.
* @param state The state of the agent
* @param observation The cameras the agent uses
* @param reward The reward the agent had at the previous step
* @param done Whether or not the agent is done
* @param memory The memories stored from the previous step with MakeMemory()
* @return The vector of actions the agent will take at the next step
*/
float[] Decide(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory);
/// <summary>
/// Defines the decision-making logic of the agent. Given the information
/// about the agent, returns a vector of actions.
/// </summary>
/// <returns>Vector action vector.</returns>
/// <param name="vectorObs">The vector observations of the agent.</param>
/// <param name="visualObs">The cameras the agent uses for visual observations.</param>
/// <param name="reward">The reward the agent received at the previous step.</param>
/// <param name="done">Whether or not the agent is done.</param>
/// <param name="memory">
/// The memories stored from the previous step with
/// <see cref="MakeMemory(List{float}, List{Texture2D}, float, bool, List{float})"/>
/// </param>
float[] Decide(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory);
/// \brief Implement this method to define the logic of memory making for
/// the CoreBrainHeuristic
/** Given the information about the agent, return the new memory vector for the agent.
* @param state The state of the agent
* @param observation The cameras the agent uses
* @param reward The reward the agent had at the previous step
* @param done Weather or not the agent is done
* @param memory The memories stored from the previous step with MakeMemory()
* @return The vector of memories the agent will use at the next step
*/
List<float> MakeMemory(List<float> state, List<Texture2D> observation, float reward, bool done, List<float> memory);
}
/// <summary>
/// Defines the logic for creating the memory vector for the Agent.
/// </summary>
/// <returns>The vector of memories the agent will use at the next step.</returns>
/// <param name="vectorObs">The vector observations of the agent.</param>
/// <param name="visualObs">The cameras the agent uses for visual observations.</param>
/// <param name="reward">The reward the agent received at the previous step.</param>
/// <param name="done">Whether or not the agent is done.</param>
/// <param name="memory">
/// The memories stored from the previous call to this method.
/// </param>
List<float> MakeMemory(
    List<float> vectorObs,
    List<Texture2D> visualObs,
    float reward,
    bool done,
    List<float> memory);
}
```
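The `Decision` interface above is C#, but its contract is small enough to mirror in Python to show what a Heuristic Brain expects from an implementation: one method that returns the action vector and one that returns the next memory vector, both taking the same five inputs. This is a hypothetical analogue, not part of ML-Agents; the class names `Decision` and `ConstantDecision`, the snake_case method names, and the use of `Any` in place of `Texture2D` are all assumptions. `ConstantDecision` returns the same one-element action as the `new float[1] { 1f }` line in the hunk shown earlier and keeps no memory.

```python
from abc import ABC, abstractmethod
from typing import Any, List


class Decision(ABC):
    """Hypothetical Python mirror of the C# Decision interface (v0.3 parameter names)."""

    @abstractmethod
    def decide(self, vector_obs: List[float], visual_obs: List[Any],
               reward: float, done: bool, memory: List[float]) -> List[float]:
        """Return the vector action the agent takes at the next step."""

    @abstractmethod
    def make_memory(self, vector_obs: List[float], visual_obs: List[Any],
                    reward: float, done: bool, memory: List[float]) -> List[float]:
        """Return the memory vector the agent will use at the next step."""


class ConstantDecision(Decision):
    """Heuristic that ignores its inputs and always emits the same action."""

    def decide(self, vector_obs, visual_obs, reward, done, memory):
        # One continuous action with value 1.0, as in the C# hunk.
        return [1.0]

    def make_memory(self, vector_obs, visual_obs, reward, done, memory):
        # Stateless heuristic: nothing is carried over between steps.
        return []
```

Keeping the signature identical for both methods means a Heuristic Brain can call them back to back with the same arguments each step.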

|||

|

|||

# On Demand Decision Making |

|||

|

|||

## Description |

|||

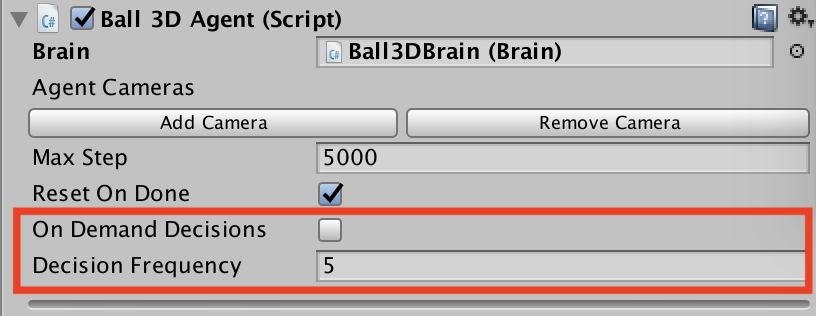

On demand decision making allows agents to request decisions from their |

|||

brains only when needed instead of receiving decisions at a fixed |

|||

frequency. This is useful when the agents commit to an action for a |

|||

variable number of steps or when the agents cannot make decisions |

|||

at the same time. This typically the case for turn based games, games |

|||

where agents must react to events or games where agents can take |

|||

actions of variable duration. |

|||

|

|||

## How to use |

|||

|

|||

To enable or disable on demand decision making, use the checkbox called |

|||

`On Demand Decisions` in the Agent Inspector. |

|||

|

|||

<p align="center"> |

|||

<img src="images/ml-agents-ODD.png" |

|||

alt="On Demand Decision" |

|||

width="500" border="10" /> |

|||

</p> |

|||

|

|||

* If `On Demand Decisions` is not checked, the Agent will request a new |

|||

decision every `Decision Frequency` steps and |

|||

perform an action every step. In the example above, |

|||

`CollectObservations()` will be called every 5 steps and |

|||

`AgentAction()` will be called at every step. This means that the |

|||

Agent will reuse the decision the Brain has given it. |

|||

|

|||

* If `On Demand Decisions` is checked, the Agent controls when to receive |

|||

decisions, and take actions. To do so, the Agent may leverage one or two methods: |

|||

* `RequestDecision()` Signals that the Agent is requesting a decision. |

|||

This causes the Agent to collect its observations and ask the Brain for a |

|||

decision at the next step of the simulation. Note that when an Agent |

|||

requests a decision, it also request an action. |

|||

This is to ensure that all decisions lead to an action during training. |

|||

* `RequestAction()` Signals that the Agent is requesting an action. The |

|||

action provided to the Agent in this case is the same action that was |

|||

provided the last time it requested a decision. |
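The two scheduling modes described above can be modeled in a few lines. This is a simplified sketch, not ML-Agents code: the function `schedule` and its parameters `decision_requests` and `action_requests` are hypothetical, steps are counted from 0, and each request is resolved within the same step, ignoring the one-step delay before the Brain's decision arrives.

```python
from typing import Iterable, List, Tuple


def schedule(steps: int,
             on_demand: bool,
             decision_frequency: int = 5,
             decision_requests: Iterable[int] = (),
             action_requests: Iterable[int] = ()) -> Tuple[List[int], List[int]]:
    """Return (steps where CollectObservations() runs, steps where AgentAction() runs)."""
    decisions = set(decision_requests)
    actions = set(action_requests)
    observe_steps: List[int] = []
    act_steps: List[int] = []
    for t in range(steps):
        if on_demand:
            # Only explicit requests trigger work; RequestDecision() implies an action.
            wants_decision = t in decisions
            wants_action = wants_decision or t in actions
        else:
            # Fixed schedule: decide every `decision_frequency` steps, act every step.
            wants_decision = t % decision_frequency == 0
            wants_action = True
        if wants_decision:
            observe_steps.append(t)
        if wants_action:
            # Between decisions the agent re-applies its last decision.
            act_steps.append(t)
    return observe_steps, act_steps
```

With on-demand decisions off and a frequency of 5, `schedule(10, on_demand=False)` collects observations at steps 0 and 5 and acts at every step. With on-demand decisions on, `schedule(10, on_demand=True, decision_requests=[2, 7], action_requests=[3])` observes only at steps 2 and 7 and acts at steps 2, 3, and 7.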

# Migrating to ML-Agents v0.3

ML-Agents v0.3 introduces a large number of new features and improvements that change both the training process and the Unity API in ways that make it incompatible with environments made using older versions. This page highlights those changes for users familiar with v0.1 or v0.2 to ensure a smooth transition.

## Important

* ML-Agents is no longer compatible with Python 2.

## Python Training

* The training script `ppo.py` and the `PPO.ipynb` Python notebook have been replaced with a single `learn.py` script as the launching point for training with ML-Agents. For more information on using `learn.py`, see [here]().
* Hyperparameters for training brains are now stored in the `trainer_config.yaml` file. For more information on using this file, see [here]().

## Unity API

* Modifications to an Agent's rewards must now be done using either `AddReward()` or `SetReward()`.
* Setting an Agent to done now requires the use of the `Done()` method.
* `CollectStates()` has been replaced by `CollectObservations()`, which no longer returns a list of floats.
* To collect observations, call `AddVectorObs()` within `CollectObservations()`. Note that you can call `AddVectorObs()` with floats, integers, lists and arrays of floats, Vector3s, and Quaternions.
* `AgentStep()` has been replaced by `AgentAction()`.
* `WaitTime()` has been removed.
* The `Frame Skip` field of the Academy is replaced by the Agent's `Decision Frequency` field, enabling agents to make decisions at different frequencies.
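As their names suggest, `AddReward()` accumulates onto the Agent's reward while `SetReward()` overwrites it, and `AddVectorObs()` appends entries to the vector observation instead of `CollectObservations()` returning a list. The Python model below illustrates those semantics; it is a hypothetical sketch, not the Unity API. The class `AgentSketch` and its snake_case methods are invented for illustration, and plain tuples stand in for Vector3 (three components) and Quaternion (four components).

```python
from typing import List, Sequence, Union

Number = Union[int, float]


class AgentSketch:
    """Hypothetical model of the v0.3 reward and observation calls."""

    def __init__(self) -> None:
        self.reward = 0.0
        self.vector_obs: List[float] = []

    def add_reward(self, increment: float) -> None:
        # AddReward(): add to whatever reward has been accumulated so far.
        self.reward += increment

    def set_reward(self, value: float) -> None:
        # SetReward(): discard the accumulated reward and use this value.
        self.reward = value

    def add_vector_obs(self, obs: Union[Number, Sequence[Number]]) -> None:
        # Scalars contribute one entry; sequences (lists, arrays, and the
        # tuples standing in for Vector3/Quaternion here) contribute one
        # entry per component, in order.
        if isinstance(obs, (int, float)):
            self.vector_obs.append(float(obs))
        else:
            self.vector_obs.extend(float(x) for x in obs)
```

For example, calling `add_reward(0.25)` and `add_reward(0.5)` leaves a reward of 0.75, and a following `set_reward(-1.0)` replaces it with -1.0.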

## Semantics

In order to more closely align with the terminology used in the Reinforcement Learning field, and to be more descriptive, we have changed the names of some of the concepts used in ML-Agents. The changes are highlighted in the table below.

| Old - v0.2 and earlier | New - v0.3 and later |
| --- | --- |
| State | Vector Observation |
| Observation | Visual Observation |
| Action | Vector Action |
| N/A | Text Observation |
| N/A | Text Action |

unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/RLAgent.prefab (1001)

The diff for this file is too large to display.

unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/RLArea.prefab (1001)

The diff for this file is too large to display.

```yaml
fileFormatVersion: 2
guid: 38400a68c4ea54b52998e34ee238d1a7
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 100100000
  userData:
  assetBundleName:
  assetBundleVariant:
```

unity-environment/Assets/ML-Agents/Examples/Banana/Prefabs/TeachingArea.prefab (1001)

The diff for this file is too large to display.

unity-environment/Assets/ML-Agents/Examples/Banana/TFModels/BananaRL.bytes (1001)

The diff for this file is too large to display.

```yaml
fileFormatVersion: 2
guid: f60ba855bdc5f42689de283a9a572667
TextScriptImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
```

```yaml
fileFormatVersion: 2
guid: e61aed11f93544227801dfd529bf41c6
folderAsset: yes
timeCreated: 1520964896
licenseType: Free
DefaultImporter:
  userData:
  assetBundleName:
  assetBundleVariant:
```

```csharp
using System.Collections.Generic;
using UnityEngine;

/// <summary>
/// Ray perception component. Attach this to agents to enable "local perception"
/// via the use of ray casts directed outward from the agent.
/// </summary>
public class RayPerception : MonoBehaviour
{
    List<float> perceptionBuffer = new List<float>();
    Vector3 endPosition;
    RaycastHit hit;

    /// <summary>
    /// Creates perception vector to be used as part of an observation of an agent.
    /// </summary>
    /// <returns>The partial vector observation corresponding to the set of rays</returns>
    /// <param name="rayDistance">Radius of rays</param>
    /// <param name="rayAngles">Angles of rays (starting from (1,0) on unit circle).</param>
    /// <param name="detectableObjects">List of tags which correspond to object types agent can see</param>
    /// <param name="startOffset">Starting height offset of ray from center of agent.</param>
    /// <param name="endOffset">Ending height offset of ray from center of agent.</param>
    public List<float> Perceive(float rayDistance,
        float[] rayAngles, string[] detectableObjects,
        float startOffset, float endOffset)
    {
        perceptionBuffer.Clear();
        // For each ray, a sublist stores categorical information on the detected
        // object along with the object's distance.
        foreach (float angle in rayAngles)
        {
            endPosition = transform.TransformDirection(
                PolarToCartesian(rayDistance, angle));
            endPosition.y = endOffset;
            if (Application.isEditor)
            {
                Debug.DrawRay(transform.position + new Vector3(0f, startOffset, 0f),
                    endPosition, Color.black, 0.01f, true);
            }
            float[] subList = new float[detectableObjects.Length + 2];
            if (Physics.SphereCast(transform.position +
                new Vector3(0f, startOffset, 0f), 0.5f,
                endPosition, out hit, rayDistance))
            {
                for (int i = 0; i < detectableObjects.Length; i++)
                {
                    if (hit.collider.gameObject.CompareTag(detectableObjects[i]))
                    {
                        subList[i] = 1;
                        subList[detectableObjects.Length + 1] = hit.distance / rayDistance;
                        break;
                    }
                }
            }
            else
            {
                subList[detectableObjects.Length] = 1f;
            }
            perceptionBuffer.AddRange(subList);
        }
        return perceptionBuffer;
    }

    /// <summary>
    /// Converts polar coordinate to cartesian coordinate.
    /// </summary>
    public static Vector3 PolarToCartesian(float radius, float angle)
    {
        float x = radius * Mathf.Cos(DegreeToRadian(angle));
        float z = radius * Mathf.Sin(DegreeToRadian(angle));
        return new Vector3(x, 0f, z);
    }

    /// <summary>
    /// Converts degrees to radians.
    /// </summary>
    public static float DegreeToRadian(float degree)
    {
        return degree * Mathf.PI / 180f;
    }
}
```
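`Perceive()` builds, for every ray, a sublist of `detectableObjects.Length + 2` floats: one one-hot slot per detectable tag, a flag that is set when the ray hits nothing, and the hit distance divided by `rayDistance`. A hit on an object whose tag is not in the list leaves that ray's sublist all zeros. The Python sketch below reproduces the encoding so it can be checked outside Unity; the `cast` callback is a hypothetical stand-in for `Physics.SphereCast`, and the function names are illustrative, not part of ML-Agents.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

# (tag of the object hit, hit distance), or None when the ray hits nothing.
Hit = Optional[Tuple[str, float]]


def polar_to_cartesian(radius: float, angle_deg: float) -> Tuple[float, float, float]:
    """Same math as RayPerception.PolarToCartesian: angle 0 points along +x, y stays 0."""
    rad = math.radians(angle_deg)
    return (radius * math.cos(rad), 0.0, radius * math.sin(rad))


def perceive(ray_distance: float,
             ray_angles: Sequence[float],
             detectable_objects: Sequence[str],
             cast: Callable[[float], Hit]) -> List[float]:
    """Concatenate one sublist of len(detectable_objects) + 2 floats per ray."""
    n = len(detectable_objects)
    buffer: List[float] = []
    for angle in ray_angles:
        sub = [0.0] * (n + 2)
        hit = cast(angle)  # hypothetical stand-in for Physics.SphereCast
        if hit is not None:
            tag, distance = hit
            for i, name in enumerate(detectable_objects):
                if tag == name:
                    sub[i] = 1.0                          # one-hot object type
                    sub[n + 1] = distance / ray_distance  # normalized distance
                    break
        else:
            sub[n] = 1.0  # "nothing hit" flag
        buffer.extend(sub)
    return buffer
```

With three detectable tags, each ray contributes five floats, so the observation size grows as `len(ray_angles) * (len(detectable_objects) + 2)`.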

```yaml
fileFormatVersion: 2
guid: bb172294dbbcc408286b156a2c4b553c
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:
```

```yaml
fileFormatVersion: 2
guid: 3002d747534d24598b059f75c43b8d45
folderAsset: yes
timeCreated: 1517448702
licenseType: Free
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
```

```yaml
%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: spawnVolumeMaterial
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords: _ALPHABLEND_ON
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: 3000
  stringTagMap:
    RenderType: Transparent
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 10
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 2
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 5
    - _UVSec: 0
    - _ZWrite: 0
    m_Colors:
    - _Color: {r: 0, g: 0.83448315, b: 1, a: 0.303}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}
```

```yaml
fileFormatVersion: 2
guid: ecd59def9213741058b969f699d10e8e
timeCreated: 1506376733
licenseType: Pro
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 2100000
  userData:
  assetBundleName:
  assetBundleVariant:
```

```yaml
%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: wallMaterial
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords: _ALPHABLEND_ON
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: 3000
  stringTagMap:
    RenderType: Transparent
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 10
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 2
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 5
    - _UVSec: 0
    - _ZWrite: 0
    m_Colors:
    - _Color: {r: 0.56228375, g: 0.76044035, b: 0.9558824, a: 0.603}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}
```

```yaml
fileFormatVersion: 2
guid: a0c2c8b2ac71342e1bd714d7178198e3
timeCreated: 1506376733
licenseType: Pro
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 2100000
  userData:
  assetBundleName:
  assetBundleVariant:
```

```yaml
fileFormatVersion: 2
guid: 22e282f4b1d48436b91d6ad8a8903e1c
folderAsset: yes
timeCreated: 1517535133
licenseType: Free
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
```

|

|||

%YAML 1.1 |

|||

%TAG !u! tag:unity3d.com,2011: |

|||

--- !u!1001 &100100000 |

|||

Prefab: |

|||

m_ObjectHideFlags: 1 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: [] |

|||

m_RemovedComponents: [] |

|||

m_ParentPrefab: {fileID: 0} |

|||

m_RootGameObject: {fileID: 1280098394364104} |

|||

m_IsPrefabParent: 1 |

|||

--- !u!1 &1195095783991828 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_PrefabParentObject: {fileID: 0} |

|||

m_PrefabInternal: {fileID: 100100000} |

|||

serializedVersion: 5 |

|||

m_Component: |

|||

- component: {fileID: 4651390251185036} |

|||

- component: {fileID: 33846302425286506} |

|||

- component: {fileID: 65193133000831296} |

|||

- component: {fileID: 23298506819960420} |

|||

- component: {fileID: 54678503543725326} |

|||

- component: {fileID: 114925928594762506} |

|||

- component: {fileID: 114092229367912210} |

|||

m_Layer: 0 |

|||

m_Name: Agent |

|||

m_TagString: agent |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!1 &1243905751985214 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_PrefabParentObject: {fileID: 0} |

|||

m_PrefabInternal: {fileID: 100100000} |

|||

serializedVersion: 5 |

|||

m_Component: |

|||

- component: {fileID: 4245926775170606} |

|||

- component: {fileID: 33016986498506672} |

|||

- component: {fileID: 65082856895024712} |

|||

- component: {fileID: 23546212824591690} |

|||

m_Layer: 0 |

|||

m_Name: Wall |

|||

m_TagString: wall |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!1 &1280098394364104 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_PrefabParentObject: {fileID: 0} |

|||

m_PrefabInternal: {fileID: 100100000} |

|||

serializedVersion: 5 |

|||

m_Component: |

|||

- component: {fileID: 4768003208014390} |

|||

m_Layer: 0 |

|||

m_Name: WallJumpArea |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!1 &1395477826315484
GameObject:
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  serializedVersion: 5
  m_Component:
  - component: {fileID: 4294902888415044}
  - component: {fileID: 33528566080995282}
  - component: {fileID: 65551840025329434}
  - component: {fileID: 23354960268522594}
  - component: {fileID: 54027918861229180}
  m_Layer: 0
  m_Name: shortBlock
  m_TagString: block
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!1 &1535176706844624
GameObject:
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  serializedVersion: 5
  m_Component:
  - component: {fileID: 4064361527677764}
  - component: {fileID: 33890127227328306}
  - component: {fileID: 65857793473814344}
  - component: {fileID: 23318234009360618}
  m_Layer: 0
  m_Name: SpawnVolume
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!1 &1707364840842826
GameObject:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  serializedVersion: 5
  m_Component:
  - component: {fileID: 4930287780304116}
  - component: {fileID: 33507625006194266}
  - component: {fileID: 65060909118748988}
  - component: {fileID: 23872068720866504}
  m_Layer: 0
  m_Name: Cube
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!1 &1964440537870194
GameObject:
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  serializedVersion: 5
  m_Component:
  - component: {fileID: 4192801639223200}
  - component: {fileID: 33252015425015410}
  - component: {fileID: 65412457053290128}
  - component: {fileID: 23001074490764582}
  m_Layer: 0
  m_Name: Ground
  m_TagString: walkableSurface
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!1 &1982078136115924
GameObject:
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  serializedVersion: 5
  m_Component:
  - component: {fileID: 4011541840118462}
  - component: {fileID: 33618033993823702}
  - component: {fileID: 65431820516000586}
  - component: {fileID: 23621829541977726}
  m_Layer: 0
  m_Name: Goal
  m_TagString: goal
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &4011541840118462
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1982078136115924}
  m_LocalRotation: {x: -0, y: -0, z: -0, w: 1}
  m_LocalPosition: {x: 6.7, y: 0.4, z: 3.3}
  m_LocalScale: {x: 4, y: 0.32738775, z: 4}
  m_Children: []
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 2
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4064361527677764
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1535176706844624}
  m_LocalRotation: {x: -0, y: -0, z: -0, w: 1}
  m_LocalPosition: {x: 0, y: 1.05, z: -6.5}
  m_LocalScale: {x: 16.2, y: 1, z: 7}
  m_Children: []
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 5
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4192801639223200
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1964440537870194}
  m_LocalRotation: {x: -0, y: -0, z: -0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: -4}
  m_LocalScale: {x: 20, y: 1, z: 20}
  m_Children: []
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 1
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4245926775170606
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1243905751985214}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: -1.53}
  m_LocalScale: {x: 20, y: 0, z: 1.5}
  m_Children: []
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 4
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4294902888415044
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1395477826315484}
  m_LocalRotation: {x: -0, y: -0, z: -0, w: 1}
  m_LocalPosition: {x: 1.51, y: 2.05, z: -3.86}
  m_LocalScale: {x: 3, y: 2, z: 3}
  m_Children: []
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 3
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4651390251185036
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_LocalRotation: {x: -0, y: 0.96758014, z: -0, w: 0.25256422}
  m_LocalPosition: {x: -8.2, y: 1, z: -12.08}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 4930287780304116}
  m_Father: {fileID: 4768003208014390}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4768003208014390
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1280098394364104}
  m_LocalRotation: {x: -0, y: -0, z: -0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 4651390251185036}
  - {fileID: 4192801639223200}
  - {fileID: 4011541840118462}
  - {fileID: 4294902888415044}
  - {fileID: 4245926775170606}
  - {fileID: 4064361527677764}
  m_Father: {fileID: 0}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!4 &4930287780304116
Transform:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1707364840842826}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: -0, y: 0, z: 0.49}
  m_LocalScale: {x: 0.1941064, y: 0.19410636, z: 0.19410636}
  m_Children: []
  m_Father: {fileID: 4651390251185036}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!23 &23001074490764582
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1964440537870194}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: 2c19bff363d1644b0818652340f120d5, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23298506819960420
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: 260483cdfc6b14e26823a02f23bd8baa, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23318234009360618
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1535176706844624}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: ecd59def9213741058b969f699d10e8e, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23354960268522594
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1395477826315484}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: f4abcb290251940948a31b349a6f9995, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23546212824591690
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1243905751985214}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: a0c2c8b2ac71342e1bd714d7178198e3, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23621829541977726
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1982078136115924}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 2100000, guid: e810187ce86f44ba1a373ca07a86ea81, type: 2}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!23 &23872068720866504
MeshRenderer:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1707364840842826}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_Materials:
  - {fileID: 10303, guid: 0000000000000000f000000000000000, type: 0}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 1
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!33 &33016986498506672
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1243905751985214}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33252015425015410
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1964440537870194}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33507625006194266
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1707364840842826}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33528566080995282
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1395477826315484}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33618033993823702
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1982078136115924}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33846302425286506
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!33 &33890127227328306
MeshFilter:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1535176706844624}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}
--- !u!54 &54027918861229180
Rigidbody:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1395477826315484}
  serializedVersion: 2
  m_Mass: 10
  m_Drag: 1
  m_AngularDrag: 0.05
  m_UseGravity: 1
  m_IsKinematic: 0
  m_Interpolate: 0
  m_Constraints: 116
  m_CollisionDetection: 0
--- !u!54 &54678503543725326
Rigidbody:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  serializedVersion: 2
  m_Mass: 25
  m_Drag: 2
  m_AngularDrag: 0.05
  m_UseGravity: 1
  m_IsKinematic: 0
  m_Interpolate: 0
  m_Constraints: 80
  m_CollisionDetection: 0
--- !u!65 &65060909118748988
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1707364840842826}
  m_Material: {fileID: 0}
  m_IsTrigger: 0
  m_Enabled: 0
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65082856895024712
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1243905751985214}
  m_Material: {fileID: 13400000, guid: 2053f160e462a428ab794446c043b144, type: 2}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65193133000831296
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_Material: {fileID: 0}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65412457053290128
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1964440537870194}
  m_Material: {fileID: 0}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65431820516000586
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1982078136115924}
  m_Material: {fileID: 0}
  m_IsTrigger: 1
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 50, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65551840025329434
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1395477826315484}
  m_Material: {fileID: 13400000, guid: 2053f160e462a428ab794446c043b144, type: 2}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!65 &65857793473814344
BoxCollider:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1535176706844624}
  m_Material: {fileID: 0}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!114 &114092229367912210
MonoBehaviour:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: bb172294dbbcc408286b156a2c4b553c, type: 3}
  m_Name:
  m_EditorClassIdentifier:
--- !u!114 &114925928594762506
MonoBehaviour:
  m_ObjectHideFlags: 1
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 100100000}
  m_GameObject: {fileID: 1195095783991828}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 676fca959b8ee45539773905ca71afa1, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  brain: {fileID: 0}
  agentParameters:
    agentCameras: []
    maxStep: 2000
    resetOnDone: 1
    onDemandDecision: 0
    numberOfActionsBetweenDecisions: 5
  noWallBrain: {fileID: 0}
  smallWallBrain: {fileID: 0}
  bigWallBrain: {fileID: 0}
  ground: {fileID: 1964440537870194}
  spawnArea: {fileID: 1535176706844624}
  goal: {fileID: 1982078136115924}
  shortBlock: {fileID: 1395477826315484}
  wall: {fileID: 1243905751985214}
  jumpingTime: 0
  jumpTime: 0.2
  fallingForce: 111
  hitGroundColliders:
  - {fileID: 0}
  - {fileID: 0}
  - {fileID: 0}

fileFormatVersion: 2
guid: 54e3af627216447f790531de496099f0
timeCreated: 1520541093
licenseType: Free
NativeFormatImporter:
  mainObjectFileID: 100100000
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: 1d53e87fe54dd4178b88cc1a23b11731
folderAsset: yes
timeCreated: 1517446674
licenseType: Free
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/WallJump/Scenes/WallJump.unity

The file diff is too large to display.

fileFormatVersion: 2
guid: 56024e8d040d344709949bc88128944d
timeCreated: 1506808980
licenseType: Pro
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: 344123e9bd87b48fdbebb4202a771d96
folderAsset: yes
timeCreated: 1517445791
licenseType: Free
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class WallJumpAcademy : Academy {

    [Header("Specific to WallJump")]
    public float agentRunSpeed;
    public float agentJumpHeight;
    // When a goal is scored, the ground will use this material for a few seconds.
    public Material goalScoredMaterial;
    // When the agent fails, the ground will use this material for a few seconds.
    public Material failMaterial;

    [HideInInspector]
    // Use ~3 to make things less floaty.
    public float gravityMultiplier = 2.5f;
    [HideInInspector]
    public float agentJumpVelocity = 777;
    [HideInInspector]
    public float agentJumpVelocityMaxChange = 10;

    // Use this for initialization
    public override void InitializeAcademy()
    {
        Physics.gravity *= gravityMultiplier;
    }
}

fileFormatVersion: 2
guid: 50b93afe82bc647b581a706891913e7f
timeCreated: 1517447911
licenseType: Free
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

// Put this script on your blue cube.

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using System.Linq;

public class WallJumpAgent : Agent
{
    // Depending on this value, the wall will have a different height.
    int configuration;
    // Brain to use when no wall is present
    public Brain noWallBrain;
    // Brain to use when a jumpable wall is present
    public Brain smallWallBrain;
    // Brain to use when a wall requiring a block to jump over is present
    public Brain bigWallBrain;

    public GameObject ground;
    public GameObject spawnArea;
    Bounds spawnAreaBounds;

    public GameObject goal;
    public GameObject shortBlock;
    public GameObject wall;
    Rigidbody shortBlockRB;
    Rigidbody agentRB;
    Material groundMaterial;
    Renderer groundRenderer;
    WallJumpAcademy academy;
    RayPerception rayPer;

    public float jumpingTime;
    public float jumpTime;
    // This is a downward force applied when falling to make jumps look
    // less floaty.
    public float fallingForce;
    // Buffer that receives the colliders found by the ground check.
    public Collider[] hitGroundColliders = new Collider[3];
    Vector3 jumpTargetPos;
    Vector3 jumpStartingPos;

    string[] detectableObjects;

    public override void InitializeAgent()
    {
        academy = FindObjectOfType<WallJumpAcademy>();
        rayPer = GetComponent<RayPerception>();
        configuration = Random.Range(0, 5);
        detectableObjects = new string[] { "wall", "goal", "block" };

        agentRB = GetComponent<Rigidbody>();
        shortBlockRB = shortBlock.GetComponent<Rigidbody>();
        spawnAreaBounds = spawnArea.GetComponent<Collider>().bounds;
        groundRenderer = ground.GetComponent<Renderer>();
        groundMaterial = groundRenderer.material;

        spawnArea.SetActive(false);
    }

    // Begin the jump sequence
    public void Jump()
    {
        jumpingTime = 0.2f;
        jumpStartingPos = agentRB.position;
    }

    /// <summary>
    /// Performs the ground check.
    /// </summary>
    /// <returns><c>true</c>, if the agent is on the ground,
    /// <c>false</c> otherwise.</returns>
    /// <param name="boxWidth">The width of the box used to perform
    /// the ground check.</param>
    public bool DoGroundCheck(float boxWidth)
    {
        // Reuse the preallocated buffer instead of allocating a new array on
        // every call; OverlapBoxNonAlloc returns how many slots it filled, so
        // only those entries are inspected.
        int numHits = Physics.OverlapBoxNonAlloc(
            gameObject.transform.position + new Vector3(0, -0.05f, 0),
            new Vector3(boxWidth / 2f, 0.5f, boxWidth / 2f),
            hitGroundColliders,
            gameObject.transform.rotation);
        bool grounded = false;
        for (int i = 0; i < numHits; i++)
        {
            Collider col = hitGroundColliders[i];
            if (col != null && col.transform != this.transform &&
                (col.CompareTag("walkableSurface") ||
                 col.CompareTag("block") ||
                 col.CompareTag("wall")))
            {
                grounded = true; // then we're grounded
                break;
            }
        }
        return grounded;
    }

    /// <summary>
    /// Moves a rigidbody towards a position smoothly.
    /// </summary>
    /// <param name="targetPos">Target position.</param>
    /// <param name="rb">The rigidbody to be moved.</param>
    /// <param name="targetVel">The velocity to target during the
    /// motion.</param>
    /// <param name="maxVel">The maximum change in velocity applied
    /// per call.</param>
    void MoveTowards(
        Vector3 targetPos, Rigidbody rb, float targetVel, float maxVel)
    {
        Vector3 moveToPos = targetPos - rb.worldCenterOfMass;
        Vector3 velocityTarget = moveToPos * targetVel * Time.fixedDeltaTime;
        if (float.IsNaN(velocityTarget.x) == false)
        {
            rb.velocity = Vector3.MoveTowards(
                rb.velocity, velocityTarget, maxVel);
        }
    }

    public override void CollectObservations()
    {
        float rayDistance = 20f;
        float[] rayAngles = { 0f, 45f, 90f, 135f, 180f, 110f, 70f };
        AddVectorObs(rayPer.Perceive(
            rayDistance, rayAngles, detectableObjects, 0f, 0f));
        AddVectorObs(rayPer.Perceive(
            rayDistance, rayAngles, detectableObjects, 2.5f, 2.5f));
        Vector3 agentPos = agentRB.position - ground.transform.position;

        AddVectorObs(agentPos / 20f);
        AddVectorObs(DoGroundCheck(0.4f) ? 1 : 0);
    }

    /// <summary>
    /// Gets a random spawn position in the spawn area.
    /// </summary>
    /// <returns>The random spawn position.</returns>
    public Vector3 GetRandomSpawnPos()
    {
        Vector3 randomSpawnPos = Vector3.zero;
        float randomPosX = Random.Range(-spawnAreaBounds.extents.x,
                                        spawnAreaBounds.extents.x);
        float randomPosZ = Random.Range(-spawnAreaBounds.extents.z,
                                        spawnAreaBounds.extents.z);

        randomSpawnPos = spawnArea.transform.position +
            new Vector3(randomPosX, 0.45f, randomPosZ);
        return randomSpawnPos;
    }

    /// <summary>
    /// Changes the color of the ground for a moment.
    /// </summary>
    /// <returns>The Enumerator to be used in a Coroutine</returns>
    /// <param name="mat">The material to be swapped in.</param>
    /// <param name="time">How long, in seconds, the material remains.</param>
    IEnumerator GoalScoredSwapGroundMaterial(Material mat, float time)
    {
        groundRenderer.material = mat;
        yield return new WaitForSeconds(time); // wait for `time` seconds
        groundRenderer.material = groundMaterial;
    }

    public void MoveAgent(float[] act)
    {