
# Using Recurrent Neural Networks in ML-Agents

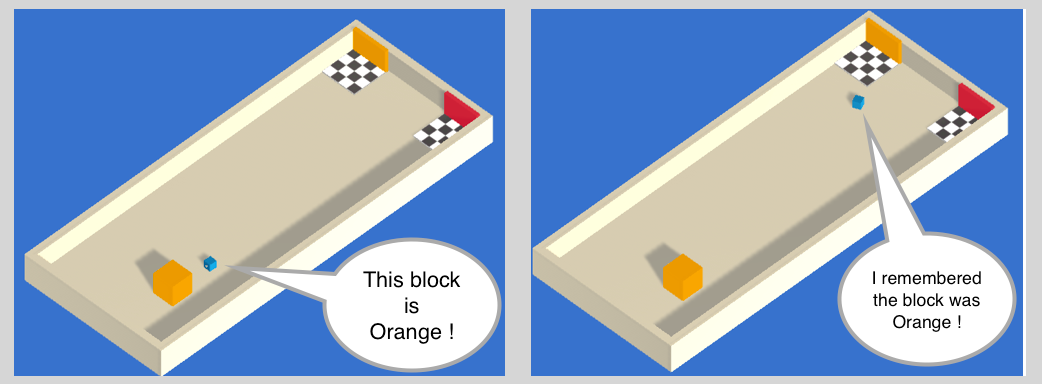

## What are memories for?

Have you ever entered a room to get something and immediately forgotten what you were looking for? Don't let that happen to your agents.

It is now possible to give memories to your agents. When training, the agents will be able to store a vector of floats to be used the next time they need to make a decision.

Deciding what the agents should remember in order to solve a task is not easy to do by hand, but our training algorithms can learn what is important to remember using an LSTM (Long Short-Term Memory) network.
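To illustrate what "a vector of floats used the next time the agent makes a decision" means, here is a minimal sketch of a single LSTM step in plain Python. It is not ML-Agents' implementation: the memory layout (cell state followed by hidden state, each half of the memory vector), the gate ordering, and the helper names `init_weights` and `lstm_step` are assumptions made for illustration.

```python
import math
import random
from typing import Dict, List, Tuple

Weights = Dict[str, List]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def init_weights(input_size: int, hidden: int, seed: int = 0) -> Weights:
    """Hypothetical helper: random weights for the four gates
    (input, forget, output, candidate), each with `hidden` rows."""
    rng = random.Random(seed)
    cols = input_size + hidden
    return {
        "W": [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(4 * hidden)],
        "b": [0.0] * (4 * hidden),
    }


def lstm_step(x: List[float], memory: List[float], weights: Weights) -> Tuple[List[float], List[float]]:
    """One LSTM step. Assumes memory = cell state c followed by hidden state h."""
    hidden = len(memory) // 2
    c, h = memory[:hidden], memory[hidden:]
    z = x + h  # list concatenation: current input followed by previous hidden state
    pre = [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(weights["W"], weights["b"])]
    i = [sigmoid(v) for v in pre[0:hidden]]               # input gate
    f = [sigmoid(v) for v in pre[hidden:2 * hidden]]      # forget gate
    o = [sigmoid(v) for v in pre[2 * hidden:3 * hidden]]  # output gate
    g = [math.tanh(v) for v in pre[3 * hidden:]]          # candidate values
    new_c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    new_h = [oj * math.tanh(cj) for oj, cj in zip(o, new_c)]
    return new_h, new_c + new_h  # output, and the memory to feed back at the next decision


if __name__ == "__main__":
    hidden = 4
    weights = init_weights(input_size=3, hidden=hidden)
    memory = [0.0] * (2 * hidden)  # memories start at zero
    for obs in ([1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]):
        out, memory = lstm_step(obs, memory, weights)
        print([round(v, 3) for v in out])
```

Only the first observation is non-zero, yet the later outputs are non-zero too: that information reaches them solely through `memory`, which is exactly what an agent without memories would lose.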

## How to use

When configuring the trainer parameters in the `trainer_config.yaml` file, add the following parameters to the Brain you want to use:

```yaml
use_recurrent: true
sequence_length: 64
memory_size: 256
```

- `use_recurrent` is a flag that notifies the trainer that you want to use a Recurrent Neural Network.
- `sequence_length` defines how long the sequences of experiences must be while training. To use an LSTM, training requires sequences of experiences instead of single experiences.
- `memory_size` corresponds to the size of the memory the agent must keep. If this number is too small, the agent will not be able to remember much; if it is too large, the neural network will take longer to train.
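To make `sequence_length` concrete, here is a sketch of how one agent's stream of experiences could be cut into fixed-length training sequences. The function name `split_into_sequences` is hypothetical, and padding the last, shorter sequence at its end is an assumption made for illustration; the trainer's own padding convention may differ.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_into_sequences(experiences: Sequence[T], sequence_length: int, pad: T) -> List[List[T]]:
    """Cut a trajectory into consecutive chunks of `sequence_length` experiences.
    The final chunk is padded with `pad` so every sequence has the same length."""
    if sequence_length <= 0:
        raise ValueError("sequence_length must be positive")
    sequences: List[List[T]] = []
    for start in range(0, len(experiences), sequence_length):
        chunk = list(experiences[start:start + sequence_length])
        chunk.extend([pad] * (sequence_length - len(chunk)))  # only the last, short chunk gets padding
        sequences.append(chunk)
    return sequences


print(split_into_sequences(list(range(10)), 4, -1))
# [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, -1, -1]]
```

Under this scheme, with `sequence_length: 64` a 150-step episode would yield three sequences, the last containing 22 real experiences followed by padding.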

## Limitations

- LSTM does not work well with a continuous vector action space. Please use a discrete vector action space for better results.
- Since the memories must be sent back and forth between Python and Unity, using too large a `memory_size` will slow down training.
- Adding a recurrent layer increases the complexity of the neural network; it is recommended to decrease `num_layers` when using recurrent.
- `memory_size` must be divisible by 4.
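The limitations above can be checked before training starts. This is a sketch, assuming the trainer settings for one Brain have already been loaded into a Python dict; `validate_recurrent_config` and `memory_bytes_per_step` are hypothetical helpers, and the byte estimate assumes the memories are 32-bit floats.

```python
from typing import Dict, List


def validate_recurrent_config(config: Dict[str, object]) -> List[str]:
    """Return a list of problems found in a (hypothetical) recurrent trainer config dict."""
    problems: List[str] = []
    if not config.get("use_recurrent", False):
        return problems  # nothing to check when recurrence is off
    memory_size = config.get("memory_size")
    sequence_length = config.get("sequence_length")
    if not isinstance(memory_size, int) or memory_size <= 0:
        problems.append("memory_size must be a positive integer")
    elif memory_size % 4 != 0:
        problems.append(f"memory_size must be divisible by 4 (got {memory_size})")
    if not isinstance(sequence_length, int) or sequence_length <= 0:
        problems.append("sequence_length must be a positive integer")
    return problems


def memory_bytes_per_step(memory_size: int, num_agents: int, bytes_per_float: int = 4) -> int:
    """Approximate bytes of memory sent in one direction per decision step
    (assumes each memory entry is a 32-bit float by default)."""
    return memory_size * num_agents * bytes_per_float


print(validate_recurrent_config({"use_recurrent": True, "sequence_length": 64, "memory_size": 250}))
# ['memory_size must be divisible by 4 (got 250)']
```

For example, with the `memory_size: 256` used above and 10 agents, `memory_bytes_per_step(256, 10)` gives 10240 bytes each way per decision, a cost that grows linearly with `memory_size`.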