当前提交

c3644f56

共有 124 个文件被更改,包括 14228 次插入 和 2604 次删除

-

2.gitignore

-

92README.md

-

353docs/Getting-Started-with-Balance-Ball.md

-

61docs/Readme.md

-

2docs/Training-on-Amazon-Web-Service.md

-

8docs/Limitations-and-Common-Issues.md

-

4docs/Python-API.md

-

10docs/Using-TensorFlow-Sharp-in-Unity.md

-

2docs/Feature-Broadcasting.md

-

2docs/Feature-Monitor.md

-

73python/trainer_config.yaml

-

4python/unityagents/environment.py

-

38python/unitytrainers/models.py

-

27python/unitytrainers/ppo/models.py

-

25python/unitytrainers/ppo/trainer.py

-

2python/unitytrainers/trainer_controller.py

-

19unity-environment/Assets/ML-Agents/Examples/3DBall/Scripts/Ball3DDecision.cs

-

132unity-environment/Assets/ML-Agents/Examples/Crawler/Crawler.unity

-

14unity-environment/Assets/ML-Agents/Examples/Hallway/Scripts/HallwayAcademy.cs

-

8unity-environment/Assets/ML-Agents/Examples/Hallway/Scripts/HallwayAgent.cs

-

2unity-environment/Assets/ML-Agents/Scripts/Academy.cs

-

2unity-environment/Assets/ML-Agents/Scripts/Agent.cs

-

547docs/images/mlagents-BuildWindow.png

-

511docs/images/mlagents-TensorBoard.png

-

345docs/images/brain.png

-

377docs/images/learning_environment_basic.png

-

16docs/Background-Jupyter.md

-

183docs/Background-Machine-Learning.md

-

37docs/Background-TensorFlow.md

-

18docs/Background-Unity.md

-

20docs/Contribution-Guidelines.md

-

3docs/Glossary.md

-

3docs/Installation-Docker.md

-

61docs/Installation.md

-

25docs/Learning-Environment-Best-Practices.md

-

398docs/Learning-Environment-Create-New.md

-

42docs/Learning-Environment-Design-Academy.md

-

277docs/Learning-Environment-Design-Agents.md

-

52docs/Learning-Environment-Design-Brains.md

-

89docs/Learning-Environment-Design.md

-

186docs/Learning-Environment-Examples.md

-

403docs/ML-Agents-Overview.md

-

68docs/Training-Curriculum-Learning.md

-

3docs/Training-Imitation-Learning.md

-

4docs/Training-ML-Agents.md

-

118docs/Training-PPO.md

-

5docs/Using-Tensorboard.md

-

1001docs/dox-ml-agents.conf

-

5docs/doxygen/Readme.md

-

1001docs/doxygen/doxygenbase.css

-

14docs/doxygen/footer.html

-

56docs/doxygen/header.html

-

18docs/doxygen/logo.png

-

146docs/doxygen/navtree.css

-

15docs/doxygen/splitbar.png

-

410docs/doxygen/unity.css

-

219docs/images/academy.png

-

58docs/images/basic.png

-

119docs/images/broadcast.png

-

110docs/images/internal_brain.png

-

744docs/images/learning_environment.png

-

469docs/images/learning_environment_example.png

-

1001docs/images/mlagents-3DBall.png

-

712docs/images/mlagents-3DBallHierarchy.png

-

144docs/images/mlagents-NewProject.png

-

183docs/images/mlagents-NewTutAcademy.png

-

810docs/images/mlagents-NewTutAssignBrain.png

-

186docs/images/mlagents-NewTutBlock.png

-

184docs/images/mlagents-NewTutBrain.png

-

209docs/images/mlagents-NewTutFloor.png

-

54docs/images/mlagents-NewTutHierarchy.png

-

192docs/images/mlagents-NewTutSphere.png

-

591docs/images/mlagents-NewTutSplash.png

-

580docs/images/mlagents-Open3DBall.png

-

1001docs/images/mlagents-Scene.png

-

79docs/images/mlagents-SetExternalBrain.png

-

64docs/images/normalization.png

-

129docs/images/player_brain.png

-

111docs/images/rl_cycle.png

-

79docs/images/scene-hierarchy.png

-

15docs/images/splitbar.png

-

71docs/Agents-Editor-Interface.md

-

127docs/Making-a-new-Unity-Environment.md

-

33docs/Organizing-the-Scene.md

-

43docs/Unity-Agents-Overview.md

-

23docs/best-practices.md

-

55docs/installation.md

-

114docs/best-practices-ppo.md

-

87docs/curriculum.md

-

29docs/Instantiating-Destroying-Agents.md

-

174docs/Example-Environments.md

-

343images/academy.png

-

241images/player_brain.png

-

58python/README.md

-

52unity-environment/README.md

-

0/docs/Limitations-and-Common-Issues.md

-

0/docs/Python-API.md

-

0/docs/Using-TensorFlow-Sharp-in-Unity.md

|

|||

<img src="images/unity-wide.png" align="middle" width="3000"/> |

|||

<img src="docs/images/unity-wide.png" align="middle" width="3000"/> |

|||

# Unity ML - Agents (Beta) |

|||

# Unity ML-Agents (Beta) |

|||

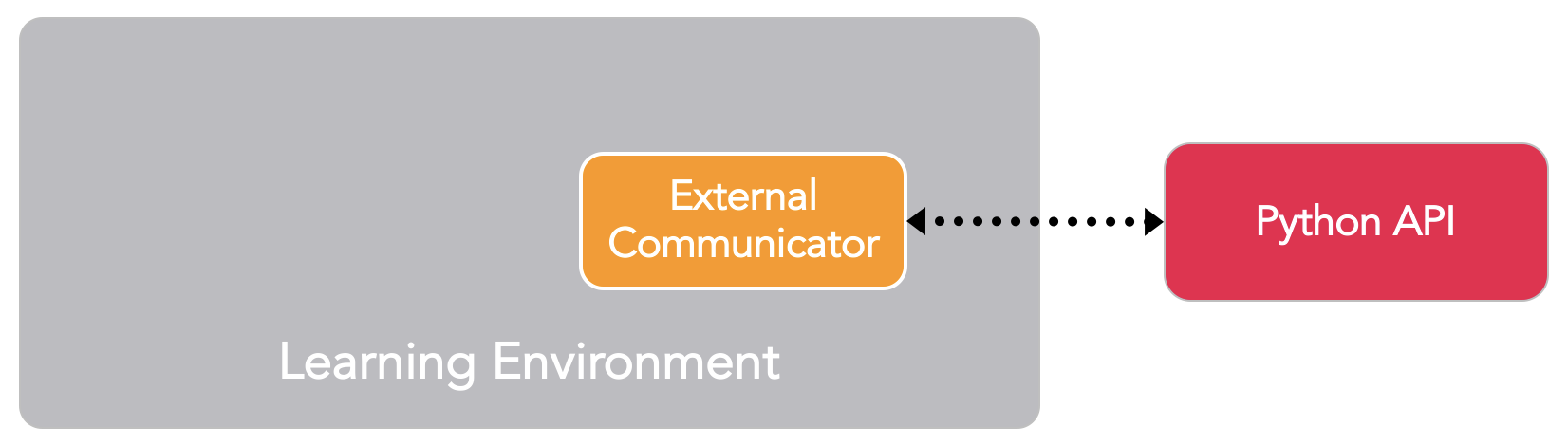

**Unity Machine Learning Agents** allows researchers and developers to |

|||

create games and simulations using the Unity Editor which serve as |

|||

environments where intelligent agents can be trained using |

|||

reinforcement learning, neuroevolution, or other machine learning |

|||

methods through a simple-to-use Python API. For more information, see |

|||

the [documentation page](docs). |

|||

|

|||

For a walkthrough on how to train an agent in one of the provided |

|||

example environments, start |

|||

[here](docs/Getting-Started-with-Balance-Ball.md). |

|||

**Unity Machine Learning Agents** (ML-Agents) is an open-source Unity plugin |

|||

that enables games and simulations to serve as environments for training |

|||

intelligent agents. Agents can be trained using reinforcement learning, |

|||

imitation learning, neuroevolution, or other machine learning methods through |

|||

a simple-to-use Python API. We also provide implementations (based on |

|||

TensorFlow) of state-of-the-art algorithms to enable game developers |

|||

and hobbyists to easily train intelligent agents for 2D, 3D and VR/AR games. |

|||

These trained agents can be used for multiple purposes, including |

|||

controlling NPC behavior (in a variety of settings such as multi-agent and |

|||

adversarial), automated testing of game builds and evaluating different game |

|||

design decisions pre-release. ML-Agents is mutually beneficial for both game |

|||

developers and AI researchers as it provides a central platform where advances |

|||

in AI can be evaluated on Unity’s rich environments and then made accessible |

|||

to the wider research and game developer communities. |

|||

* Multiple observations (cameras) |

|||

* Flexible Multi-agent support |

|||

* Flexible single-agent and multi-agent support |

|||

* Multiple visual observations (cameras) |

|||

* Python (2 and 3) control interface |

|||

* Visualizing network outputs in environment |

|||

* Tensorflow Sharp Agent Embedding _[Experimental]_ |

|||

* Built-in support for Imitation Learning (coming soon) |

|||

* Visualizing network outputs within the environment |

|||

* Python control interface |

|||

* TensorFlow Sharp Agent Embedding _[Experimental]_ |

|||

## Creating an Environment |

|||

## Documentation and References |

|||

The _Agents SDK_, including example environment scenes is located in |

|||

`unity-environment` folder. For requirements, instructions, and other |

|||

information, see the contained Readme and the relevant |

|||

[documentation](docs/Making-a-new-Unity-Environment.md). |

|||

For more information on ML-Agents, in addition to installation, and usage |

|||

instructions, see our [documentation home](docs). |

|||

## Training your Agents |

|||

We have also published a series of blog posts that are relevant for ML-Agents: |

|||

- Overviewing reinforcement learning concepts |

|||

([multi-armed bandit](https://blogs.unity3d.com/2017/06/26/unity-ai-themed-blog-entries/) |

|||

and [Q-learning](https://blogs.unity3d.com/2017/08/22/unity-ai-reinforcement-learning-with-q-learning/)) |

|||

- [Using Machine Learning Agents in a real game: a beginner’s guide](https://blogs.unity3d.com/2017/12/11/using-machine-learning-agents-in-a-real-game-a-beginners-guide/) |

|||

- [Post]() announcing the winners of our |

|||

[first ML-Agents Challenge](https://connect.unity.com/challenges/ml-agents-1) |

|||

- [Post](https://blogs.unity3d.com/2018/01/23/designing-safer-cities-through-simulations/) |

|||

overviewing how Unity can be leveraged as a simulator to design safer cities. |

|||

|

|||

In addition to our own documentation, here are some additional, relevant articles: |

|||

- [Unity AI - Unity 3D Artificial Intelligence](https://www.youtube.com/watch?v=bqsfkGbBU6k) |

|||

- [A Game Developer Learns Machine Learning](https://mikecann.co.uk/machine-learning/a-game-developer-learns-machine-learning-intent/) |

|||

- [Unity3D Machine Learning – Setting up the environment & TensorFlow for AgentML on Windows 10](https://unity3d.college/2017/10/25/machine-learning-in-unity3d-setting-up-the-environment-tensorflow-for-agentml-on-windows-10/) |

|||

- [Explore Unity Technologies ML-Agents Exclusively on Intel Architecture](https://software.intel.com/en-us/articles/explore-unity-technologies-ml-agents-exclusively-on-intel-architecture) |

|||

Once you've built a Unity Environment, example Reinforcement Learning |

|||

algorithms and the Python API are available in the `python` |

|||

folder. For requirements, instructions, and other information, see the |

|||

contained Readme and the relevant |

|||

[documentation](docs/Unity-Agents---Python-API.md). |

|||

## Community and Feedback |

|||

|

|||

ML-Agents is an open-source project and we encourage and welcome contributions. |

|||

If you wish to contribute, be sure to review our |

|||

[contribution guidelines](docs/Contribution-Guidelines.md) and |

|||

[code of conduct](CODE_OF_CONDUCT.md). |

|||

|

|||

You can connect with us and the broader community |

|||

through Unity Connect and GitHub: |

|||

* Join our |

|||

[Unity Machine Learning Channel](https://connect.unity.com/messages/c/035fba4f88400000) |

|||

to connect with others using ML-Agents and Unity developers enthusiastic |

|||

about machine learning. We use that channel to surface updates |

|||

regarding ML-Agents (and, more broadly, machine learning in games). |

|||

* If you run into any problems using ML-Agents, |

|||

[submit an issue](https://github.com/Unity-Technologies/ml-agents/issues) and |

|||

make sure to include as much detail as possible. |

|||

|

|||

For any other questions or feedback, connect directly with the ML-Agents |

|||

team at ml-agents@unity3d.com. |

|||

|

|||

## License |

|||

|

|||

[Apache License 2.0](LICENSE) |

|||

|

|||

# Getting Started with the Balance Ball Example |

|||

# Getting Started with the 3D Balance Ball Example |

|||

|

|||

This tutorial walks through the end-to-end process of opening an ML-Agents |

|||

example environment in Unity, building the Unity executable, training an agent |

|||

in it, and finally embedding the trained model into the Unity environment. |

|||

This tutorial will walk through the end-to-end process of installing Unity Agents, building an example environment, training an agent in it, and finally embedding the trained model into the Unity environment. |

|||

ML-Agents includes a number of [example environments](Learning-Environment-Examples.md) |

|||

which you can examine to help understand the different ways in which ML-Agents |

|||

can be used. These environments can also serve as templates for new |

|||

environments or as ways to test new ML algorithms. After reading this tutorial, |

|||

you should be able to explore and build the example environments. |

|||

Unity ML Agents contains a number of example environments which can be used as templates for new environments, or as ways to test a new ML algorithm to ensure it is functioning correctly. |

|||

|

|||

In this walkthrough we will be using the **3D Balance Ball** environment. The environment contains a number of platforms and balls. Platforms can act to keep the ball up by rotating either horizontally or vertically. Each platform is an agent which is rewarded the longer it can keep a ball balanced on it, and provided a negative reward for dropping the ball. The goal of the training process is to have the platforms learn to never drop the ball. |

|||

This walkthrough uses the **3D Balance Ball** environment. 3D Balance Ball |

|||

contains a number of platforms and balls (which are all copies of each other). |

|||

Each platform tries to keep its ball from falling by rotating either |

|||

horizontally or vertically. In this environment, a platform is an **agent** |

|||

that receives a reward for every step that it balances the ball. An agent is |

|||

also penalized with a negative reward for dropping the ball. The goal of the |

|||

training process is to have the platforms learn to never drop the ball. |

|||

In order to install and set-up the Python and Unity environments, see the instructions [here](installation.md). |

|||

In order to install and set up ML-Agents, the Python dependencies and Unity, |

|||

see the [installation instructions](Installation.md). |

|||

|

|||

## Understanding a Unity Environment (Balance Ball) |

|||

|

|||

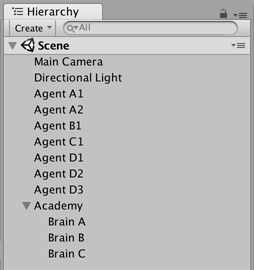

An agent is an autonomous actor that observes and interacts with an |

|||

_environment_. In the context of Unity, an environment is a scene containing |

|||

an Academy and one or more Brain and Agent objects, and, of course, the other |

|||

entities that an agent interacts with. |

|||

|

|||

|

|||

|

|||

**Note:** In Unity, the base object of everything in a scene is the |

|||

_GameObject_. The GameObject is essentially a container for everything else, |

|||

including behaviors, graphics, physics, etc. To see the components that make |

|||

up a GameObject, select the GameObject in the Scene window, and open the |

|||

Inspector window. The Inspector shows every component on a GameObject. |

|||

|

|||

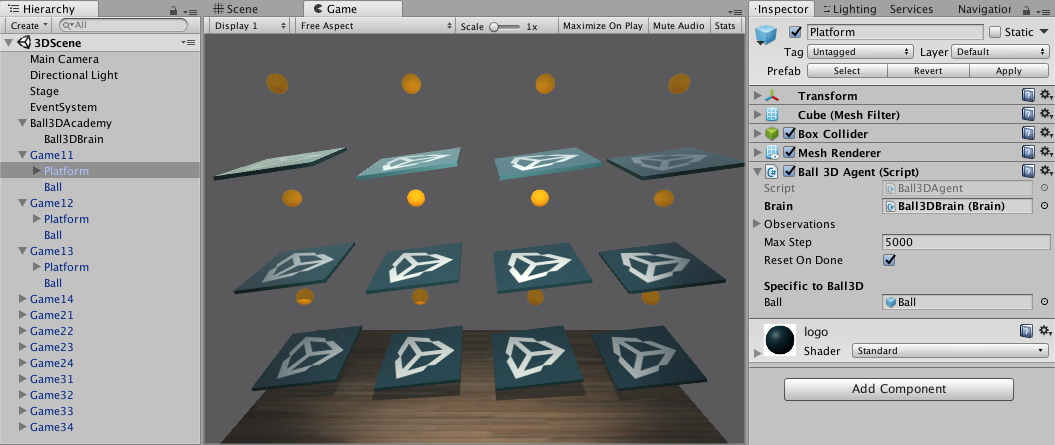

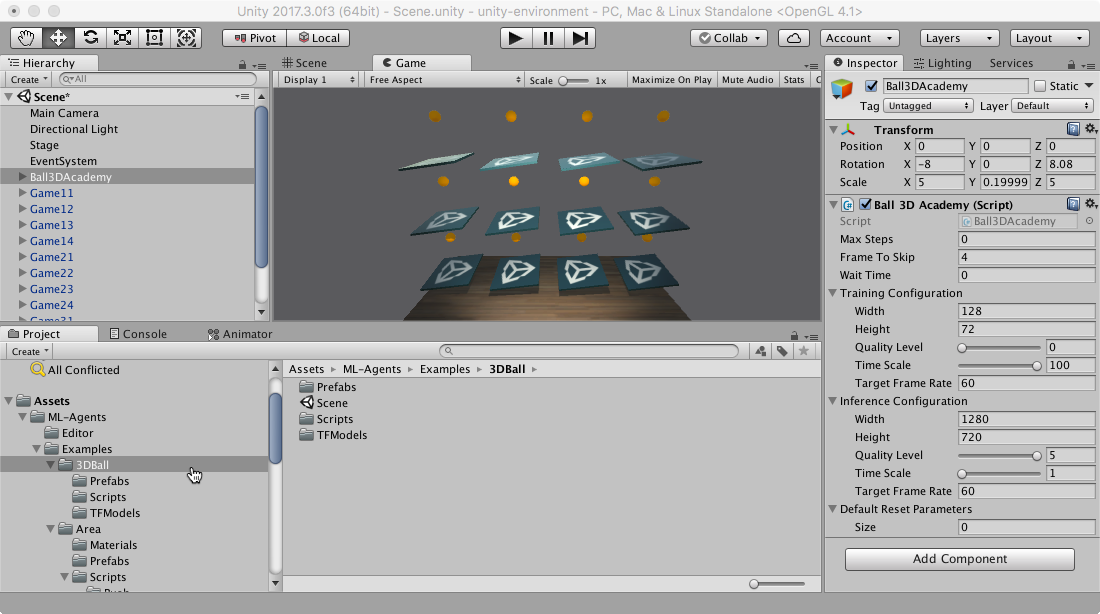

The first thing you may notice after opening the 3D Balance Ball scene is that |

|||

it contains not one, but several platforms. Each platform in the scene is an |

|||

independent agent, but they all share the same brain. Balance Ball does this |

|||

to speed up training since all twelve agents contribute to training in parallel. |

|||

|

|||

### Academy |

|||

|

|||

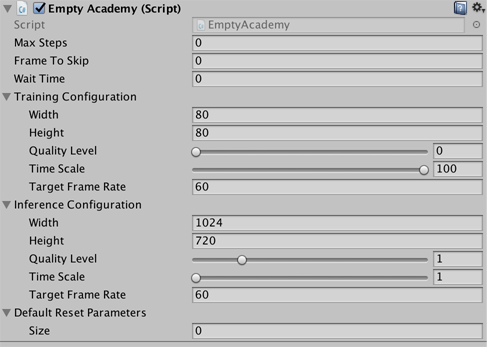

The Academy object for the scene is placed on the Ball3DAcademy GameObject. |

|||

When you look at an Academy component in the inspector, you can see several |

|||

properties that control how the environment works. For example, the |

|||

**Training** and **Inference Configuration** properties set the graphics and |

|||

timescale properties for the Unity application. The Academy uses the |

|||

**Training Configuration** during training and the **Inference Configuration** |

|||

when not training. (*Inference* means that the agent is using a trained model |

|||

or heuristics or direct control — in other words, whenever **not** training.) |

|||

Typically, you set low graphics quality and a high time scale for the |

|||

**Training configuration** and a high graphics quality and the timescale to |

|||

`1.0` for the **Inference Configuration** . |

|||

|

|||

**Note:** if you want to observe the environment during training, you can |

|||

adjust the **Inference Configuration** settings to use a larger window and a |

|||

timescale closer to 1:1. Be sure to set these parameters back when training in |

|||

earnest; otherwise, training can take a very long time. |

|||

|

|||

Another aspect of an environment to look at is the Academy implementation. |

|||

Since the base Academy class is abstract, you must always define a subclass. |

|||

There are three functions you can implement, though they are all optional: |

|||

|

|||

* Academy.InitializeAcademy() — Called once when the environment is launched. |

|||

* Academy.AcademyStep() — Called at every simulation step before |

|||

Agent.AgentStep() (and after the agents collect their state observations). |

|||

* Academy.AcademyReset() — Called when the Academy starts or restarts the |

|||

simulation (including the first time). |

|||

|

|||

The 3D Balance Ball environment does not use these functions — each agent |

|||

resets itself when needed — but many environments do use these functions to |

|||

control the environment around the agents. |

|||

|

|||

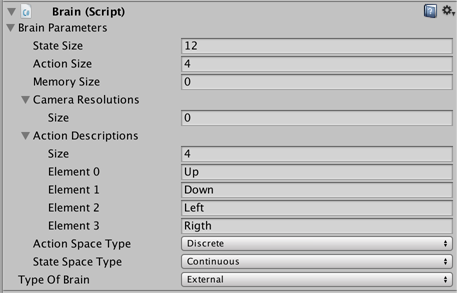

### Brain |

|||

|

|||

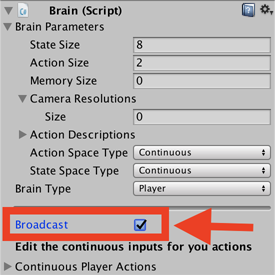

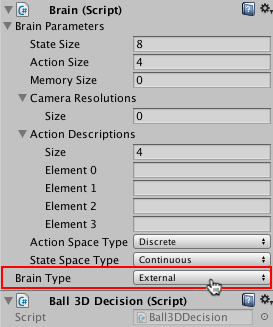

The Ball3DBrain GameObject in the scene, which contains a Brain component, |

|||

is a child of the Academy object. (All Brain objects in a scene must be |

|||

children of the Academy.) All the agents in the 3D Balance Ball environment |

|||

use the same Brain instance. A Brain doesn't save any state about an agent, |

|||

it just routes the agent's collected state observations to the decision making |

|||

process and returns the chosen action to the agent. Thus, all agents can share |

|||

the same brain, but act independently. The Brain settings tell you quite a bit |

|||

about how an agent works. |

|||

|

|||

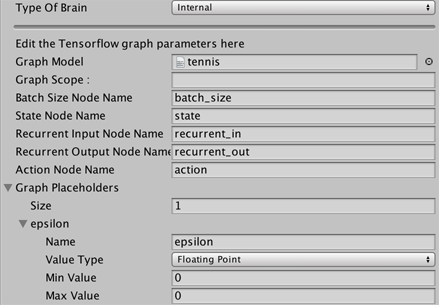

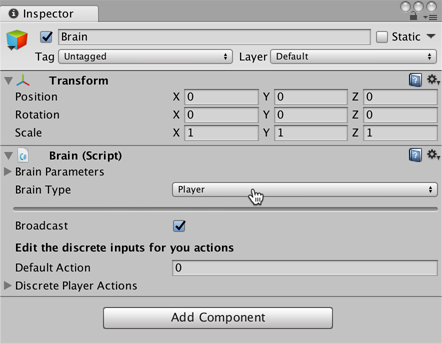

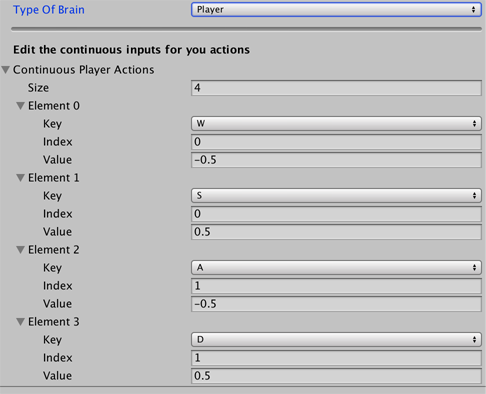

The **Brain Type** determines how an agent makes its decisions. The |

|||

**External** and **Internal** types work together — use **External** when |

|||

training your agents; use **Internal** when using the trained model. |

|||

The **Heuristic** brain allows you to hand-code the agent's logic by extending |

|||

the Decision class. Finally, the **Player** brain lets you map keyboard |

|||

commands to actions, which can be useful when testing your agents and |

|||

environment. If none of these types of brains do what you need, you can |

|||

implement your own CoreBrain to create your own type. |

|||

|

|||

In this tutorial, you will set the **Brain Type** to **External** for training; |

|||

when you embed the trained model in the Unity application, you will change the |

|||

**Brain Type** to **Internal**. |

|||

|

|||

**State Observation Space** |

|||

|

|||

Before making a decision, an agent collects its observation about its state |

|||

in the world. ML-Agents classifies observations into two types: **Continuous** |

|||

and **Discrete**. The **Continuous** state space collects observations in a |

|||

vector of floating point numbers. The **Discrete** state space is an index |

|||

into a table of states. Most of the example environments use a continuous |

|||

state space. |

|||

|

|||

The Brain instance used in the 3D Balance Ball example uses the **Continuous** |

|||

state space with a **State Size** of 8. This means that the feature vector |

|||

containing the agent's observations contains eight elements: the `x` and `z` |

|||

components of the platform's rotation and the `x`, `y`, and `z` components of |

|||

the ball's relative position and velocity. (The state values are defined in |

|||

the agent's `CollectState()` function.) |

|||

|

|||

**Action Space** |

|||

|

|||

An agent is given instructions from the brain in the form of *actions*. Like |

|||

states, ML-Agents classifies actions into two types: the **Continuous** action |

|||

space is a vector of numbers that can vary continuously. What each element of |

|||

the vector means is defined by the agent logic (the PPO training process just |

|||

learns what values are better given particular state observations based on the |

|||

rewards received when it tries different values). For example, an element might |

|||

represent a force or torque applied to a Rigidbody in the agent. The |

|||

**Discrete** action space defines its actions as a table. A specific action |

|||

given to the agent is an index into this table. |

|||

|

|||

The 3D Balance Ball example is programmed to use both types of action space. |

|||

You can try training with both settings to observe whether there is a |

|||

difference. (Set the `Action Size` to 4 when using the discrete action space |

|||

and 2 when using continuous.) |

|||

|

|||

### Agent |

|||

|

|||

The Agent is the actor that observes and takes actions in the environment. |

|||

In the 3D Balance Ball environment, the Agent components are placed on the |

|||

twelve Platform GameObjects. The base Agent object has a few properties that |

|||

affect its behavior: |

|||

|

|||

* **Brain** — Every agent must have a Brain. The brain determines how an agent |

|||

makes decisions. All the agents in the 3D Balance Ball scene share the same |

|||

brain. |

|||

* **Observations** — Defines any Camera objects used by the agent to observe |

|||

its environment. 3D Balance Ball does not use camera observations. |

|||

* **Max Step** — Defines how many simulation steps can occur before the agent |

|||

decides it is done. In 3D Balance Ball, an agent restarts after 5000 steps. |

|||

* **Reset On Done** — Defines whether an agent starts over when it is finished. |

|||

3D Balance Ball sets this true so that the agent restarts after reaching the |

|||

**Max Step** count or after dropping the ball. |

|||

|

|||

Perhaps the more interesting aspect of an agent is the Agent subclass |

|||

implementation. When you create an agent, you must extend the base Agent class. |

|||

The Ball3DAgent subclass defines the following methods: |

|||

|

|||

* Agent.AgentReset() — Called when the Agent resets, including at the beginning |

|||

of a session. The Ball3DAgent class uses the reset function to reset the |

|||

platform and ball. The function randomizes the reset values so that the |

|||

training generalizes to more than a specific starting position and platform |

|||

attitude. |

|||

* Agent.CollectState() — Called every simulation step. Responsible for |

|||

collecting the agent's observations of the environment. Since the Brain |

|||

instance assigned to the agent is set to the continuous state space with a |

|||

state size of 8, the `CollectState()` function returns a vector (technically |

|||

a List<float> object) containing 8 elements. |

|||

* Agent.AgentStep() — Called every simulation step (unless the brain's |

|||

`Frame Skip` property is > 0). Receives the action chosen by the brain. The |

|||

Ball3DAgent example handles both the continuous and the discrete action space |

|||

types. There isn't actually much difference between the two state types in |

|||

this environment — both action spaces result in a small change in platform |

|||

rotation at each step. The `AgentStep()` function assigns a reward to the |

|||

agent; in this example, an agent receives a small positive reward for each |

|||

step it keeps the ball on the platform and a larger, negative reward for |

|||

dropping the ball. An agent is also marked as done when it drops the ball |

|||

so that it will reset with a new ball for the next simulation step. |

|||

## Building Unity Environment |

|||

Launch the Unity Editor, and log in, if necessary. |

|||

## Building the Environment |

|||

1. Open the `unity-environment` folder using the Unity editor. *(If this is not first time running Unity, you'll be able to skip most of these immediate steps, choose directly from the list of recently opened projects)* |

|||

- On the initial dialog, choose `Open` on the top options |

|||

- On the file dialog, choose `unity-environment` and click `Open` *(It is safe to ignore any warning message about non-matching editor installation)* |

|||

- Once the project is open, on the `Project` panel (bottom of the tool), navigate to the folder `Assets/ML-Agents/Examples/3DBall/` |

|||

- Double-click the `Scene` icon (Unity logo) to load all environment assets |

|||

2. Go to `Edit -> Project Settings -> Player` |

|||

- Ensure that `Resolution and Presentation -> Run in Background` is Checked. |

|||

- Ensure that `Resolution and Presentation -> Display Resolution Dialog` is set to Disabled. |

|||

3. Expand the `Ball3DAcademy` GameObject and locate its child object `Ball3DBrain` within the Scene hierarchy in the editor. Ensure Type of Brain for this object is set to `External`. |

|||

4. *File -> Build Settings* |

|||

5. Choose your target platform: |

|||

- (optional) Select “Development Build” to log debug messages. |

|||

6. Click *Build*: |

|||

- Save environment binary to the `python` sub-directory of the cloned repository *(you may need to click on the down arrow on the file chooser to be able to select that folder)* |

|||

The first step is to open the Unity scene containing the 3D Balance Ball |

|||

environment: |

|||

## Training the Brain with Reinforcement Learning |

|||

1. Launch Unity. |

|||

2. On the Projects dialog, choose the **Open** option at the top of the window. |

|||

3. Using the file dialog that opens, locate the `unity-environment` folder |

|||

within the ML-Agents project and click **Open**. |

|||

4. In the `Project` window, navigate to the folder |

|||

`Assets/ML-Agents/Examples/3DBall/`. |

|||

5. Double-click the `Scene` file to load the scene containing the Balance |

|||

Ball environment. |

|||

### Testing Python API |

|||

|

|||

To launch jupyter, run in the command line: |

|||

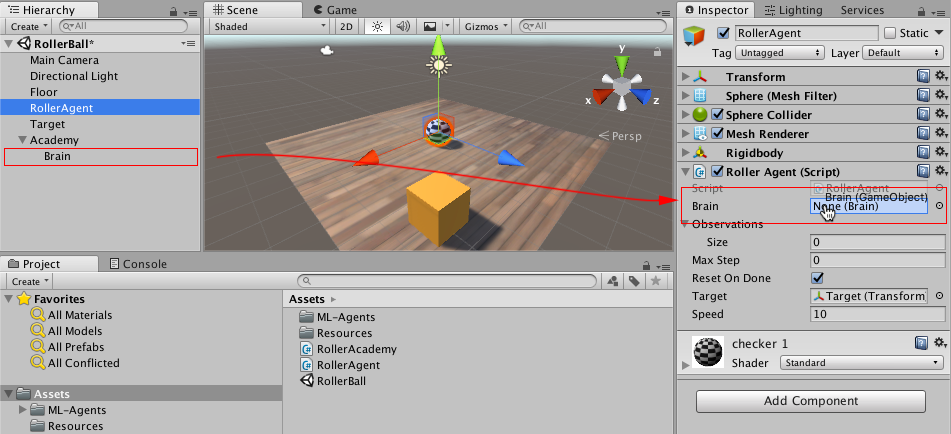

Since we are going to build this environment to conduct training, we need to |

|||

set the brain used by the agents to **External**. This allows the agents to |

|||

communicate with the external training process when making their decisions. |

|||

`jupyter notebook` |

|||

1. In the **Scene** window, click the triangle icon next to the Ball3DAcademy |

|||

object. |

|||

2. Select its child object `Ball3DBrain`. |

|||

3. In the Inspector window, set **Brain Type** to `External`. |

|||

Then navigate to `localhost:8888` to access the notebooks. If you're new to jupyter, check out the [quick start guide](https://jupyter-notebook-beginner-guide.readthedocs.io/en/latest/execute.html) before you continue. |

|||

|

|||

To ensure that your environment and the Python API work as expected, you can use the `python/Basics` Jupyter notebook. This notebook contains a simple walkthrough of the functionality of the API. Within `Basics`, be sure to set `env_name` to the name of the environment file you built earlier. |

|||

Next, we want the set up scene to to play correctly when the training process |

|||

launches our environment executable. This means: |

|||

* The environment application runs in the background |

|||

* No dialogs require interaction |

|||

* The correct scene loads automatically |

|||

|

|||

1. Open Player Settings (menu: **Edit** > **Project Settings** > **Player**). |

|||

2. Under **Resolution and Presentation**: |

|||

- Ensure that **Run in Background** is Checked. |

|||

- Ensure that **Display Resolution Dialog** is set to Disabled. |

|||

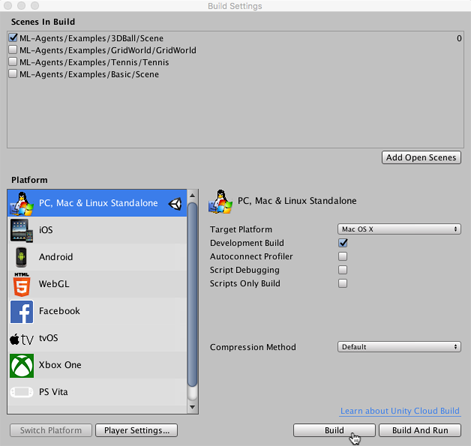

3. Open the Build Settings window (menu:**File** > **Build Settings**). |

|||

4. Choose your target platform. |

|||

- (optional) Select “Development Build” to |

|||

[log debug messages](https://docs.unity3d.com/Manual/LogFiles.html). |

|||

5. If any scenes are shown in the **Scenes in Build** list, make sure that |

|||

the 3DBall Scene is the only one checked. (If the list is empty, than only the |

|||

current scene is included in the build). |

|||

6. Click *Build*: |

|||

a. In the File dialog, navigate to the `python` folder in your ML-Agents |

|||

directory. |

|||

b. Assign a file name and click **Save**. |

|||

|

|||

|

|||

|

|||

## Training the Brain with Reinforcement Learning |

|||

|

|||

Now that we have a Unity executable containing the simulation environment, we |

|||

can perform the training. |

|||

In order to train an agent to correctly balance the ball, we will use a Reinforcement Learning algorithm called Proximal Policy Optimization (PPO). This is a method that has been shown to be safe, efficient, and more general purpose than many other RL algorithms, as such we have chosen it as the example algorithm for use with ML Agents. For more information on PPO, OpenAI has a recent [blog post](https://blog.openai.com/openai-baselines-ppo/) explaining it. |

|||

|

|||

In order to train an agent to correctly balance the ball, we will use a |

|||

Reinforcement Learning algorithm called Proximal Policy Optimization (PPO). |

|||

This is a method that has been shown to be safe, efficient, and more general |

|||

purpose than many other RL algorithms, as such we have chosen it as the |

|||

example algorithm for use with ML-Agents. For more information on PPO, |

|||

OpenAI has a recent [blog post](https://blog.openai.com/openai-baselines-ppo/) |

|||

explaining it. |

|||

3. (optional) Set `run_path` directory to your choice. |

|||

4. Run all cells of notebook with the exception of the last one under "Export the trained Tensorflow graph." |

|||

3. (optional) In order to get the best results quickly, set `max_steps` to |

|||

50000, set `buffer_size` to 5000, and set `batch_size` to 512. For this |

|||

exercise, this will train the model in approximately ~5-10 minutes. |

|||

4. (optional) Set `run_path` directory to your choice. When using TensorBoard |

|||

to observe the training statistics, it helps to set this to a sequential value |

|||

for each training run. In other words, "BalanceBall1" for the first run, |

|||

"BalanceBall2" or the second, and so on. If you don't, the summaries for |

|||

every training run are saved to the same directory and will all be included |

|||

on the same graph. |

|||

5. Run all cells of notebook with the exception of the last one under "Export |

|||

the trained Tensorflow graph." |

|||

In order to observe the training process in more detail, you can use Tensorboard. |

|||

In your command line, enter into `python` directory and then run : |

|||

In order to observe the training process in more detail, you can use |

|||

TensorBoard. In your command line, enter into `python` directory and then run : |

|||

From Tensorboard, you will see the summary statistics of six variables: |

|||

* Cumulative Reward - The mean cumulative episode reward over all agents. Should increase during a successful training session. |

|||

* Value Loss - The mean loss of the value function update. Correlates to how well the model is able to predict the value of each state. This should decrease during a succesful training session. |

|||

* Policy Loss - The mean loss of the policy function update. Correlates to how much the policy (process for deciding actions) is changing. The magnitude of this should decrease during a succesful training session. |

|||

* Episode Length - The mean length of each episode in the environment for all agents. |

|||

* Value Estimates - The mean value estimate for all states visited by the agent. Should increase during a successful training session. |

|||

* Policy Entropy - How random the decisions of the model are. Should slowly decrease during a successful training process. If it decreases too quickly, the `beta` hyperparameter should be increased. |

|||

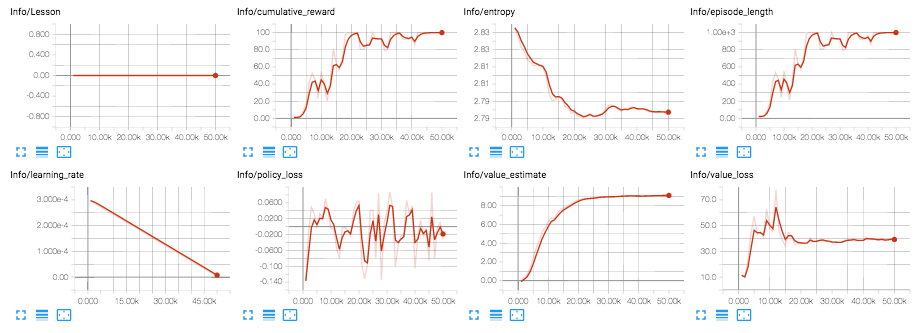

From TensorBoard, you will see the summary statistics: |

|||

## Embedding Trained Brain into Unity Environment _[Experimental]_ |

|||

Once the training process displays an average reward of ~75 or greater, and there has been a recently saved model (denoted by the `Saved Model` message) you can choose to stop the training process by stopping the cell execution. Once this is done, you now have a trained TensorFlow model. You must now convert the saved model to a Unity-ready format which can be embedded directly into the Unity project by following the steps below. |

|||

* Lesson - only interesting when performing |

|||

[curriculum training](Training-Curriculum-Learning.md). |

|||

This is not used in the 3d Balance Ball environment. |

|||

* Cumulative Reward - The mean cumulative episode reward over all agents. |

|||

Should increase during a successful training session. |

|||

* Entropy - How random the decisions of the model are. Should slowly decrease |

|||

during a successful training process. If it decreases too quickly, the `beta` |

|||

hyperparameter should be increased. |

|||

* Episode Length - The mean length of each episode in the environment for all |

|||

agents. |

|||

* Learning Rate - How large a step the training algorithm takes as it searches |

|||

for the optimal policy. Should decrease over time. |

|||

* Policy Loss - The mean loss of the policy function update. Correlates to how |

|||

much the policy (process for deciding actions) is changing. The magnitude of |

|||

this should decrease during a successful training session. |

|||

* Value Estimate - The mean value estimate for all states visited by the agent. |

|||

Should increase during a successful training session. |

|||

* Value Loss - The mean loss of the value function update. Correlates to how |

|||

well the model is able to predict the value of each state. This should decrease |

|||

during a successful training session. |

|||

|

|||

|

|||

|

|||

## Embedding the Trained Brain into the Unity Environment _[Experimental]_ |

|||

|

|||

Once the training process completes, and the training process saves the model |

|||

(denoted by the `Saved Model` message) you can add it to the Unity project and |

|||

use it with agents having an **Internal** brain type. |

|||

Because TensorFlowSharp support is still experimental, it is disabled by default. In order to enable it, you must follow these steps. Please note that the `Internal` Brain mode will only be available once completing these steps. |

|||

1. Make sure you are using Unity 2017.1 or newer. |

|||

2. Make sure the TensorFlowSharp plugin is in your `Assets` folder. A Plugins folder which includes TF# can be downloaded [here](https://s3.amazonaws.com/unity-agents/0.2/TFSharpPlugin.unitypackage). Double click and import it once downloaded. |

|||

3. Go to `Edit` -> `Project Settings` -> `Player` |

|||

4. For each of the platforms you target (**`PC, Mac and Linux Standalone`**, **`iOS`** or **`Android`**): |

|||

1. Go into `Other Settings`. |

|||

2. Select `Scripting Runtime Version` to `Experimental (.NET 4.6 Equivalent)` |

|||

3. In `Scripting Defined Symbols`, add the flag `ENABLE_TENSORFLOW` |

|||

Because TensorFlowSharp support is still experimental, it is disabled by |

|||

default. In order to enable it, you must follow these steps. Please note that |

|||

the `Internal` Brain mode will only be available once completing these steps. |

|||

|

|||

1. Make sure the TensorFlowSharp plugin is in your `Assets` folder. A Plugins |

|||

folder which includes TF# can be downloaded |

|||

[here](https://s3.amazonaws.com/unity-agents/0.2/TFSharpPlugin.unitypackage). |

|||

Double click and import it once downloaded. You can see if this was |

|||

successfully installed by checking the TensorFlow files in the Project tab |

|||

under `Assets` -> `ML-Agents` -> `Plugins` -> `Computer` |

|||

2. Go to `Edit` -> `Project Settings` -> `Player` |

|||

3. For each of the platforms you target |

|||

(**`PC, Mac and Linux Standalone`**, **`iOS`** or **`Android`**): |

|||

1. Go into `Other Settings`. |

|||

2. Select `Scripting Runtime Version` to |

|||

`Experimental (.NET 4.6 Equivalent)` |

|||

3. In `Scripting Defined Symbols`, add the flag `ENABLE_TENSORFLOW`. |

|||

After typing in, press Enter. |

|||

4. Go to `File` -> `Save Project` |

|||

1. Run the final cell of the notebook under "Export the trained TensorFlow graph" to produce an `<env_name >.bytes` file. |

|||

2. Move `<env_name>.bytes` from `python/models/ppo/` into `unity-environment/Assets/ML-Agents/Examples/3DBall/TFModels/`. |

|||

1. Run the final cell of the notebook under "Export the trained TensorFlow |

|||

graph" to produce an `<env_name >.bytes` file. |

|||

2. Move `<env_name>.bytes` from `python/models/ppo/` into |

|||

`unity-environment/Assets/ML-Agents/Examples/3DBall/TFModels/`. |

|||

6. Drag the `<env_name>.bytes` file from the Project window of the Editor to the `Graph Model` placeholder in the `3DBallBrain` inspector window. |

|||

6. Drag the `<env_name>.bytes` file from the Project window of the Editor |

|||

to the `Graph Model` placeholder in the `3DBallBrain` inspector window. |

|||

If you followed these steps correctly, you should now see the trained model being used to control the behavior of the balance ball within the Editor itself. From here you can re-build the Unity binary, and run it standalone with your agent's new learned behavior built right in. |

|||

If you followed these steps correctly, you should now see the trained model |

|||

being used to control the behavior of the balance ball within the Editor |

|||

itself. From here you can re-build the Unity binary, and run it standalone |

|||

with your agent's new learned behavior built right in. |

|||

|

|||

# Unity ML Agents Documentation |

|||

|

|||

## About |

|||

* [Unity ML Agents Overview](Unity-Agents-Overview.md) |

|||

* [Example Environments](Example-Environments.md) |

|||

# Unity ML-Agents Documentation |

|||

## Tutorials |

|||

* [Installation & Set-up](installation.md) |

|||

## Getting Started |

|||

* [ML-Agents Overview](ML-Agents-Overview.md) |

|||

* [Background: Unity](Background-Unity.md) |

|||

* [Background: Machine Learning](Background-Machine-Learning.md) |

|||

* [Background: TensorFlow](Background-TensorFlow.md) |

|||

* [Installation & Set-up](Installation.md) |

|||

* [Background: Jupyter Notebooks](Background-Jupyter.md) |

|||

* [Docker Set-up (Experimental)](Using-Docker.md) |

|||

* [Making a new Unity Environment](Making-a-new-Unity-Environment.md) |

|||

* [How to use the Python API](Unity-Agents---Python-API.md) |

|||

* [Example Environments](Learning-Environment-Examples.md) |

|||

## Features |

|||

* [Agents SDK Inspector Descriptions](Agents-Editor-Interface.md) |

|||

* [Scene Organization](Organizing-the-Scene.md) |

|||

* [Curriculum Learning](curriculum.md) |

|||

* [Broadcast](broadcast.md) |

|||

* [Monitor](monitor.md) |

|||

## Creating Learning Environments |

|||

* [Making a new Learning Environment](Learning-Environment-Create-New.md) |

|||

* [Designing a Learning Environment](Learning-Environment-Design.md) |

|||

* [Agents](Learning-Environment-Design-Agents.md) |

|||

* [Academy](Learning-Environment-Design-Academy.md) |

|||

* [Brains](Learning-Environment-Design-Brains.md) |

|||

* [Learning Environment Best Practices](Learning-Environment-Best-Practices.md) |

|||

* [TensorFlowSharp in Unity (Experimental)](Using-TensorFlow-Sharp-in-Unity.md) |

|||

|

|||

## Training |

|||

* [Training ML-Agents](Training-ML-Agents.md) |

|||

* [Training with Proximal Policy Optimization](Training-PPO.md) |

|||

* [Training with Curriculum Learning](Training-Curriculum-Learning.md) |

|||

* [Training with Imitation Learning](Training-Imitation-Learning.md) |

|||

* [TensorflowSharp in Unity [Experimental]](Using-TensorFlow-Sharp-in-Unity-(Experimental).md) |

|||

* [Instanciating and Destroying agents](Instantiating-Destroying-Agents.md) |

|||

|

|||

## Best Practices |

|||

* [Best practices when creating an Environment](best-practices.md) |

|||

* [Best practices when training using PPO](best-practices-ppo.md) |

|||

* [Using TensorBoard to Observe Training](Using-Tensorboard.md) |

|||

* [Limitations & Common Issues](Limitations-&-Common-Issues.md) |

|||

* [ML-Agents Glossary](Glossary.md) |

|||

* [Limitations & Common Issues](Limitations-and-Common-Issues.md) |

|||

|

|||

## C# API and Components |

|||

* Academy |

|||

* Brain |

|||

* Agent |

|||

* CoreBrain |

|||

* Decision |

|||

* Monitor |

|||

|

|||

## Python API |

|||

* [How to use the Python API](Python-API.md) |

|||

|

|||

|

|||

# Jupyter |

|||

|

|||

**Work In Progress** |

|||

|

|||

[Jupyter](https://jupyter.org) is a fantastic tool for writing code with |

|||

embedded visualizations. We provide several such notebooks for testing your |

|||

Python installation and training behaviors. For a walkthrough of how to use |

|||

Jupyter, see |

|||

[Running the Jupyter Notebook](http://jupyter-notebook-beginner-guide.readthedocs.io/en/latest/execute.html) |

|||

in the _Jupyter/IPython Quick Start Guide_. |

|||

|

|||

To launch Jupyter, run in the command line: |

|||

|

|||

`jupyter notebook` |

|||

|

|||

Then navigate to `localhost:8888` to access the notebooks. |

|||

|

|||

# Background: Machine Learning |

|||

|

|||

**Work In Progress** |

|||

|

|||

We will not attempt to provide a thorough treatment of machine learning |

|||

as there are fantastic resources online. However, given that a number |

|||

of users of ML-Agents might not have a formal machine learning background, |

|||

this section provides an overview of terminology to facilitate the |

|||

understanding of ML-Agents. |

|||

|

|||

Machine learning, a branch of artificial intelligence, focuses on learning patterns |

|||

from data. The three main classes of machine learning algorithms include: |

|||

unsupervised learning, supervised learning and reinforcement learning. |

|||

Each class of algorithm learns from a different type of data. The following paragraphs |

|||

provide an overview for each of these classes of machine learning, as well as introductory examples. |

|||

|

|||

## Unsupervised Learning |

|||

|

|||

The goal of unsupervised learning is to group or cluster similar items in a |

|||

data set. For example, consider the players of a game. We may want to group |

|||

the players depending on how engaged they are with the game. This would enable |

|||

us to target different groups (e.g. for highly-engaged players we might |

|||

invite them to be beta testers for new features, while for unengaged players |

|||

we might email them helpful tutorials). Say that we wish to split our players |

|||

into two groups. We would first define basic attributes of the players, such |

|||

as the number of hours played, total money spent on in-app purchases and |

|||

number of levels completed. We can then feed this data set (three attributes |

|||

for every player) to an unsupervised learning algorithm where we specify the |

|||

number of groups to be two. The algorithm would then split the data set of |

|||

players into two groups where the players within each group would be similar |

|||

to each other. Given the attributes we used to describe each player, in this |

|||

case, the output would be a split of all the players into two groups, where |

|||

one group would semantically represent the engaged players and the second |

|||

group would semantically represent the unengaged players. |

|||

|

|||

With unsupervised learning, we did not provide specific examples of which |

|||

players are considered engaged and which are considered unengaged. We just |

|||

defined the appropriate attributes and relied on the algorithm to uncover |

|||

the two groups on its own. This type of data set is typically called an |

|||

unlabeled data set as it is lacking these direct labels. Consequently, |

|||

unsupervised learning can be helpful in situations where these labels can be |

|||

expensive or hard to produce. In the next paragraph, we overview supervised |

|||

learning algorithms which accept input labels in addition to attributes. |

|||

|

|||

## Supervised Learning |

|||

|

|||

In supervised learning, we do not want to just group similar items but directly |

|||

learn a mapping from each item to the group (or class) that it belongs to. |

|||

Returning to our earlier example of |

|||

clustering players, let's say we now wish to predict which of our players are |

|||

about to churn (that is stop playing the game for the next 30 days). We |

|||

can look into our historical records and create a data set that |

|||

contains attributes of our players in addition to a label indicating whether |

|||

they have churned or not. Note that the player attributes we use for this |

|||

churn prediction task may be different from the ones we used for our earlier |

|||

clustering task. We can then feed this data set (attributes **and** label for |

|||

each player) into a supervised learning algorithm which would learn a mapping |

|||

from the player attributes to a label indicating whether that player |

|||

will churn or not. The intuition is that the supervised learning algorithm |

|||

will learn which values of these attributes typically correspond to players |

|||

who have churned and not churned (for example, it may learn that players |

|||

who spend very little and play for very short periods will most likely churn). |

|||

Now given this learned model, we can provide it the attributes of a |

|||

new player (one that recently started playing the game) and it would output |

|||

a _predicted_ label for that player. This prediction is the algorithms |

|||

expectation of whether the player will churn or not. |

|||

We can now use these predictions to target the players |

|||

who are expected to churn and entice them to continue playing the game. |

|||

|

|||

As you may have noticed, for both supervised and unsupervised learning, there |

|||

are two tasks that need to be performed: attribute selection and model |

|||

selection. Attribute selection (also called feature selection) pertains to |

|||

selecting how we wish to represent the entity of interest, in this case, the |

|||

player. Model selection, on the other hand, pertains to selecting the |

|||

algorithm (and its parameters) that perform the task well. Both of these |

|||

tasks are active areas of machine learning research and, in practice, require |

|||

several iterations to achieve good performance. |

|||

|

|||

We now switch to reinforcement learning, the third class of |

|||

machine learning algorithms, and arguably the one most relevant for ML-Agents. |

|||

|

|||

## Reinforcement Learning |

|||

|

|||

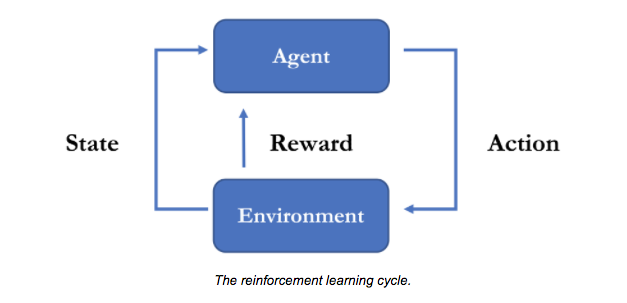

Reinforcement learning can be viewed as a form of learning for sequential |

|||

decision making that is commonly associated with controlling robots (but is, |

|||

in fact, much more general). Consider an autonomous firefighting robot that is |

|||

tasked with navigating into an area, finding the fire and neutralizing it. At |

|||

any given moment, the robot perceives the environment through its sensors (e.g. |

|||

camera, heat, touch), processes this information and produces an action (e.g. |

|||

move to the left, rotate the water hose, turn on the water). In other words, |

|||

it is continuously making decisions about how to interact in this environment |

|||

given its view of the world (i.e. sensors input) and objective (i.e. |

|||

neutralizing the fire). Teaching a robot to be a successful firefighting |

|||

machine is precisely what reinforcement learning is designed to do. |

|||

|

|||

More specifically, the goal of reinforcement learning is to learn a **policy**, |

|||

which is essentially a mapping from **observations** to **actions**. An |

|||

observation is what the robot can measure from its **environment** (in this |

|||

case, all its sensory inputs) and an action, in its most raw form, is a change |

|||

to the configuration of the robot (e.g. position of its base, position of |

|||

its water hose and whether the hose is on or off). |

|||

|

|||

The last remaining piece |

|||

of the reinforcement learning task is the **reward signal**. When training a |

|||

robot to be a mean firefighting machine, we provide it with rewards (positive |

|||

and negative) indicating how well it is doing on completing the task. |

|||

Note that the robot does not _know_ how to put out fires before it is trained. |

|||

It learns the objective because it receives a large positive reward when it puts |

|||

out the fire and a small negative reward for every passing second. The fact that |

|||

rewards are sparse (i.e. may not be provided at every step, but only when a |

|||

robot arrives at a success or failure situation), is a defining characteristic of |

|||

reinforcement learning and precisely why learning good policies can be difficult |

|||

(and/or time-consuming) for complex environments. |

|||

|

|||

<p align="center"> |

|||

<img src="images/rl_cycle.png" alt="The reinforcement learning cycle."/> |

|||

</p> |

|||

|

|||

[Learning a policy](https://blogs.unity3d.com/2017/08/22/unity-ai-reinforcement-learning-with-q-learning/) |

|||

usually requires many trials and iterative |

|||

policy updates. More specifically, the robot is placed in several |

|||

fire situations and over time learns an optimal policy which allows it |

|||

to put our fires more effectively. Obviously, we cannot expect to train a |

|||

robot repeatedly in the real world, particularly when fires are involved. This |

|||

is precisely why the use of |

|||

[Unity as a simulator](https://blogs.unity3d.com/2018/01/23/designing-safer-cities-through-simulations/) |

|||

serves as the perfect training grounds for learning such behaviors. |

|||

While our discussion of reinforcement learning has centered around robots, |

|||

there are strong parallels between robots and characters in a game. In fact, |

|||

in many ways, one can view a non-playable character (NPC) as a virtual |

|||

robot, with its own observations about the environment, its own set of actions |

|||

and a specific objective. Thus it is natural to explore how we can |

|||

train behaviors within Unity using reinforcement learning. This is precisely |

|||

what ML-Agents offers. The video linked below includes a reinforcement |

|||

learning demo showcasing training character behaviors using ML-Agents. |

|||

|

|||

<p align="center"> |

|||

<a href="http://www.youtube.com/watch?feature=player_embedded&v=fiQsmdwEGT8" target="_blank"> |

|||

<img src="http://img.youtube.com/vi/fiQsmdwEGT8/0.jpg" alt="RL Demo" width="400" border="10" /> |

|||

</a> |

|||

</p> |

|||

|

|||

Similar to both unsupervised and supervised learning, reinforcement learning |

|||

also involves two tasks: attribute selection and model selection. |

|||

Attribute selection is defining the set of observations for the robot |

|||

that best help it complete its objective, while model selection is defining |

|||

the form of the policy (mapping from observations to actions) and its |

|||

parameters. In practice, training behaviors is an iterative process that may |

|||

require changing the attribute and model choices. |

|||

|

|||

## Training and Inference |

|||

|

|||

One common aspect of all three branches of machine learning is that they |

|||

all involve a **training phase** and an **inference phase**. While the |

|||

details of the training and inference phases are different for each of the |

|||

three, at a high-level, the training phase involves building a model |

|||

using the provided data, while the inference phase involves applying this |

|||

model to new, previously unseen, data. More specifically: |

|||

* For our unsupervised learning |

|||

example, the training phase learns the optimal two clusters based |

|||

on the data describing existing players, while the inference phase assigns a |

|||

new player to one of these two clusters. |

|||

* For our supervised learning example, the |

|||

training phase learns the mapping from player attributes to player label |

|||

(whether they churned or not), and the inference phase predicts whether |

|||

a new player will churn or not based on that learned mapping. |

|||

* For our reinforcement learning example, the training phase learns the |

|||

optimal policy through guided trials, and in the inference phase, the agent |

|||

observes and tales actions in the wild using its learned policy. |

|||

|

|||

To briefly summarize: all three classes of algorithms involve training |

|||

and inference phases in addition to attribute and model selections. What |

|||

ultimately separates them is the type of data available to learn from. In |

|||

unsupervised learning our data set was a collection of attributes, in |

|||

supervised learning our data set was a collection of attribute-label pairs, |

|||

and, lastly, in reinforcement learning our data set was a collection of |

|||

observation-action-reward tuples. |

|||

|

|||

## Deep Learning |

|||

|

|||

To be completed. |

|||

|

|||

Link to TensorFlow background page. |

|||

|

|||

# Background: TensorFlow |

|||

|

|||

**Work In Progress** |

|||

|

|||

## TensorFlow |

|||

|

|||

[TensorFlow](https://www.tensorflow.org/) is a deep learning library. |

|||

|

|||

Link to Arthur's content? |

|||

|

|||

A few words about TensorFlow and why/how it is relevant would be nice. |

|||

|

|||

TensorFlow is used for training the machine learning models in ML-Agents. |

|||

Unless you are implementing new algorithms, the use of TensorFlow |

|||

is mostly abstracted away and behind the scenes. |

|||

|

|||

## TensorBoard |

|||

|

|||

One component of training models with TensorFlow is setting the |

|||

values of certain model attributes (called _hyperparameters_). Finding the |

|||

right values of these hyperparameters can require a few iterations. |

|||

Consequently, we leverage a visualization tool within TensorFlow called |

|||

[TensorBoard](https://www.tensorflow.org/programmers_guide/summaries_and_tensorboard). |

|||

It allows the visualization of certain agent attributes (e.g. reward) |

|||

throughout training which can be helpful in both building |

|||

intuitions for the different hyperparameters and setting the optimal values for |

|||

your Unity environment. We provide more details on setting the hyperparameters |

|||

in later parts of the documentation, but, in the meantime, if you are |

|||

unfamiliar with TensorBoard we recommend this |

|||

[tutorial](https://github.com/dandelionmane/tf-dev-summit-tensorboard-tutorial). |

|||

|

|||

## TensorFlowSharp |

|||

|

|||

Third-party used in Internal Brain mode. |

|||

|

|||

|

|||

|

|||

|

|||

# Background: Unity |

|||

|

|||

If you are not familiar with the [Unity Engine](https://unity3d.com/unity), |

|||

we highly recommend the |

|||

[Unity Manual](https://docs.unity3d.com/Manual/index.html) and |

|||

[Tutorials page](https://unity3d.com/learn/tutorials). The |

|||

[Roll-a-ball tutorial](https://unity3d.com/learn/tutorials/s/roll-ball-tutorial) |

|||

is sufficient to learn all the basic concepts of Unity to get started with |

|||

ML-Agents: |

|||

* [Editor](https://docs.unity3d.com/Manual/UsingTheEditor.html) |

|||

* [Interface](https://docs.unity3d.com/Manual/LearningtheInterface.html) |

|||

* [Scene](https://docs.unity3d.com/Manual/CreatingScenes.html) |

|||

* [GameObjects](https://docs.unity3d.com/Manual/GameObjects.html) |

|||

* [Rigidbody](https://docs.unity3d.com/ScriptReference/Rigidbody.html) |

|||

* [Camera](https://docs.unity3d.com/Manual/Cameras.html) |

|||

* [Scripting](https://docs.unity3d.com/Manual/ScriptingSection.html) |

|||

* [Ordering of event functions](https://docs.unity3d.com/Manual/ExecutionOrder.html) |

|||

(e.g. FixedUpdate, Update) |

|||

|

|||

# Contribution Guidelines |

|||

|

|||

Reference code of conduct. |

|||

|

|||

## GitHub Workflow |

|||

|

|||

## Environments |

|||

|

|||

We are also actively open to adding community contributed environments as |

|||

examples, as long as they are small, simple, demonstrate a unique feature of |

|||

the platform, and provide a unique non-trivial challenge to modern |

|||

machine learning algorithms. Feel free to submit these environments with a |

|||

Pull-Request explaining the nature of the environment and task. |

|||

|

|||

TODO: above paragraph needs expansion. |

|||

|

|||

## Algorithms |

|||

|

|||

## Style Guide |

|||

|

|||

|

|||

# ML-Agents Glossary |

|||

|

|||

**Work In Progress** |

|||

|

|||

# Docker Set-up _[Experimental]_ |

|||

|

|||

**Work In Progress** |

|||

|

|||

# Installation & Set-up |

|||

|

|||

To install and use ML-Agents, you need install Unity, clone this repository |

|||

and install Python with additional dependencies. Each of the subsections |

|||

below overviews each step, in addition to an experimental Docker set-up. |

|||

|

|||

## Install **Unity 2017.1** or Later |

|||

|

|||

[Download](https://store.unity.com/download) and install Unity. |

|||

|

|||

## Clone the ml-agents Repository |

|||

|

|||

Once installed, you will want to clone the ML-Agents GitHub repository. |

|||

|

|||

git clone git@github.com:Unity-Technologies/ml-agents.git |

|||

|

|||

The `unity-environment` directory in this repository contains the Unity Assets |

|||

to add to your projects. The `python` directory contains the training code. |

|||

Both directories are located at the root of the repository. |

|||

|

|||

## Install Python |

|||

|

|||

In order to use ML-Agents, you need Python (2 or 3; 64 bit required) along with |

|||

the dependencies listed in the [requirements file](../python/requirements.txt). |

|||

Some of the primary dependencies include: |

|||

- [TensorFlow](Background-TensorFlow.md) |

|||

- [Jupyter](Background-Jupyter.md) |

|||

|

|||

### Windows Users |

|||

|

|||

If you are a Windows user who is new to Python and TensorFlow, follow |

|||

[this guide](https://unity3d.college/2017/10/25/machine-learning-in-unity3d-setting-up-the-environment-tensorflow-for-agentml-on-windows-10/) |

|||

to set up your Python environment. |

|||

|

|||

### Mac and Unix Users |

|||

|

|||

If your Python environment doesn't include `pip`, see these |

|||

[instructions](https://packaging.python.org/guides/installing-using-linux-tools/#installing-pip-setuptools-wheel-with-linux-package-managers) |

|||

on installing it. |

|||

|

|||

To install dependencies, go into the `python` subdirectory of the repository, |

|||

and run (depending on your Python version) from the command line: |

|||

|

|||

pip install . |

|||

|

|||

or |

|||

|

|||

pip3 install . |

|||

|

|||

## Docker-based Installation _[Experimental]_ |

|||

|

|||

If you'd like to use Docker for ML-Agents, please follow |

|||

[this guide](Using-Docker.md). |

|||

|

|||

## Help |

|||

|

|||

If you run into any problems installing ML-Agents, |

|||

[submit an issue](https://github.com/Unity-Technologies/ml-agents/issues) and |

|||

make sure to cite relevant information on OS, Python version, and exact error |

|||

message (whenever possible). |

|||

|

|||

|

|||

# Environment Design Best Practices |

|||

|

|||

## General |

|||

* It is often helpful to start with the simplest version of the problem, to ensure the agent can learn it. From there increase |

|||

complexity over time. This can either be done manually, or via Curriculum Learning, where a set of lessons which progressively increase in difficulty are presented to the agent ([learn more here](Training-Curriculum-Learning.md)). |

|||

* When possible, it is often helpful to ensure that you can complete the task by using a Player Brain to control the agent. |

|||

|

|||

## Rewards |

|||

* The magnitude of any given reward should typically not be greater than 1.0 in order to ensure a more stable learning process. |

|||

* Positive rewards are often more helpful to shaping the desired behavior of an agent than negative rewards. |

|||

* For locomotion tasks, a small positive reward (+0.1) for forward velocity is typically used. |

|||

* If you want the agent to finish a task quickly, it is often helpful to provide a small penalty every step (-0.05) that the agent does not complete the task. In this case completion of the task should also coincide with the end of the episode. |

|||

* Overly-large negative rewards can cause undesirable behavior where an agent learns to avoid any behavior which might produce the negative reward, even if it is also behavior which can eventually lead to a positive reward. |

|||

|

|||

## States |

|||

* States should include all variables relevant to allowing the agent to take the optimally informed decision. |

|||

* Categorical state variables such as type of object (Sword, Shield, Bow) should be encoded in one-hot fashion (ie `3` -> `0, 0, 1`). |

|||

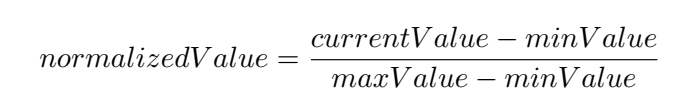

* Besides encoding non-numeric values, all inputs should be normalized to be in the range 0 to +1 (or -1 to 1). For example rotation information on GameObjects should be recorded as `state.Add(transform.rotation.eulerAngles.y/180.0f-1.0f);` rather than `state.Add(transform.rotation.y);`. See the equation below for one approach of normaliztaion. |

|||

* Positional information of relevant GameObjects should be encoded in relative coordinates wherever possible. This is often relative to the agent position. |

|||

|

|||

|

|||

|

|||

## Actions |

|||

* When using continuous control, action values should be clipped to an appropriate range. |

|||

* Be sure to set the action-space-size to the number of used actions, and not greater, as doing the latter can interfere with the efficency of the training process. |

|||

|

|||

# Making a new Learning Environment |

|||

|

|||

This tutorial walks through the process of creating a Unity Environment. A Unity Environment is an application built using the Unity Engine which can be used to train Reinforcement Learning agents. |

|||

|

|||

|

|||

|

|||

In this example, we will train a ball to roll to a randomly placed cube. The ball also learns to avoid falling off the platform. |

|||

|

|||

## Overview |

|||

|

|||

Using ML-Agents in a Unity project involves the following basic steps: |

|||

|

|||

1. Create an environment for your agents to live in. An environment can range from a simple physical simulation containing a few objects to an entire game or ecosystem. |

|||

2. Implement an Academy subclass and add it to a GameObject in the Unity scene containing the environment. This GameObject will serve as the parent for any Brain objects in the scene. Your Academy class can implement a few optional methods to update the scene independently of any agents. For example, you can add, move, or delete agents and other entities in the environment. |

|||

3. Add one or more Brain objects to the scene as children of the Academy. |

|||

4. Implement your Agent subclasses. An Agent subclass defines the code an agent uses to observe its environment, to carry out assigned actions, and to calculate the rewards used for reinforcement training. You can also implement optional methods to reset the agent when it has finished or failed its task. |

|||

5. Add your Agent subclasses to appropriate GameObjects, typically, the object in the scene that represents the agent in the simulation. Each Agent object must be assigned a Brain object. |

|||

6. If training, set the Brain type to External and [run the training process](Training-PPO.md). |

|||

|

|||

|

|||

**Note:** If you are unfamiliar with Unity, refer to [Learning the interface](https://docs.unity3d.com/Manual/LearningtheInterface.html) in the Unity Manual if an Editor task isn't explained sufficiently in this tutorial. |

|||

|

|||

If you haven't already, follow the [installation instructions](Installation.md). |

|||

|

|||

## Set Up the Unity Project |

|||

|

|||

The first task to accomplish is simply creating a new Unity project and importing the ML-Agents assets into it: |

|||

|

|||

1. Launch the Unity Editor and create a new project named "RollerBall". |

|||

|

|||

2. In a file system window, navigate to the folder containing your cloned ML-Agents repository. |

|||

|

|||

3. Drag the `ML-Agents` folder from `unity-environments/Assets` to the Unity Editor Project window. |

|||

|

|||

Your Unity **Project** window should contain the following assets: |

|||

|

|||

|

|||

|

|||

## Create the Environment: |

|||

|

|||

Next, we will create a very simple scene to act as our ML-Agents environment. The "physical" components of the environment include a Plane to act as the floor for the agent to move around on, a Cube to act as the goal or target for the agent to seek, and a Sphere to represent the agent itself. |

|||

|

|||

**Create the floor plane:** |

|||

|

|||

1. Right click in Hierarchy window, select 3D Object > Plane. |

|||

2. Name the GameObject "Floor." |

|||

3. Select Plane to view its properties in the Inspector window. |

|||

4. Set Transform to Position = (0,0,0), Rotation = (0,0,0), Scale = (1,1,1). |

|||

5. On the Plane's Mesh Renderer, expand the Materials property and change the default-material to *floor*. |

|||

|

|||

(To set a new material, click the small circle icon next to the current material name. This opens the **Object Picker** dialog so that you can choose the a different material from the list of all materials currently in the project.) |

|||

|

|||

|

|||

|

|||

**Add the Target Cube** |

|||

|

|||

1. Right click in Hierarchy window, select 3D Object > Cube. |

|||

2. Name the GameObject "Target" |

|||

3. Select Target to view its properties in the Inspector window. |

|||

4. Set Transform to Position = (3,0.5,3), Rotation = (0,0,0), Scale = (1,1,1). |

|||

5. On the Cube's Mesh Renderer, expand the Materials property and change the default-material to *block*. |

|||

|

|||

|

|||

|

|||

**Add the Agent Sphere** |

|||

|

|||

1. Right click in Hierarchy window, select 3D Object > Sphere. |

|||

2. Name the GameObject "RollerAgent" |

|||

3. Select Target to view its properties in the Inspector window. |

|||

4. Set Transform to Position = (0,0.5,0), Rotation = (0,0,0), Scale = (1,1,1). |

|||

5. On the Sphere's Mesh Renderer, expand the Materials property and change the default-material to *checker 1*. |

|||

6. Click **Add Component**. |

|||

7. Add the Physics/Rigidbody component to the Sphere. (Adding a Rigidbody ) |

|||

|

|||

|

|||

|

|||

Note that we will create an Agent subclass to add to this GameObject as a component later in the tutorial. |

|||

|

|||

**Add Empty GameObjects to Hold the Academy and Brain** |

|||

|

|||

1. Right click in Hierarchy window, select Create Empty. |

|||

2. Name the GameObject "Academy" |

|||

3. Right-click on the Academy GameObject and select Create Empty. |

|||

4. Name this child of the Academy, "Brain". |

|||

|

|||

|

|||

|

|||

You can adjust the camera angles to give a better view of the scene at runtime. The next steps will be to create and add the ML-Agent components. |

|||

|

|||

## Implement an Academy |

|||

|

|||

The Academy object coordinates the ML-Agents in the scene and drives the decision-making portion of the simulation loop. Every ML-Agent scene needs one Academy instance. Since the base Academy classis abstract, you must make your own subclass even if you don't need to use any of the methods for a particular environment. |

|||

|

|||

First, add a New Script component to the Academy GameObject created earlier: |

|||

|

|||

1. Select the Academy GameObject to view it in the Inspector window. |

|||

2. Click **Add Component**. |

|||

3. Click **New Script** in the list of components (at the bottom). |

|||

4. Name the script "RollerAcademy". |

|||

5. Click **Create and Add**. |

|||

|

|||

Next, edit the new `RollerAcademy` script: |

|||

|

|||

1. In the Unity Project window, double-click the `RollerAcademy` script to open it in your code editor. (By default new scripts are placed directly in the **Assets** folder.) |

|||

2. In the editor, change the base class from `MonoBehaviour` to `Academy`. |

|||

3. Delete the `Start()` and `Update()` methods that were added by default. |

|||

|

|||

In such a basic scene, we don't need the Academy to initialize, reset, or otherwise control any objects in the environment so we have the simplest possible Academy implementation: |

|||

|

|||

public class RollerAcademy : Academy { } |

|||

|

|||

The default settings for the Academy properties are also fine for this environment, so we don't need to change anything for the RollerAcademy component in the Inspector window. |

|||

|

|||

|

|||

|

|||