
Curiosity Driven Exploration & Pyramids Environments (#739)


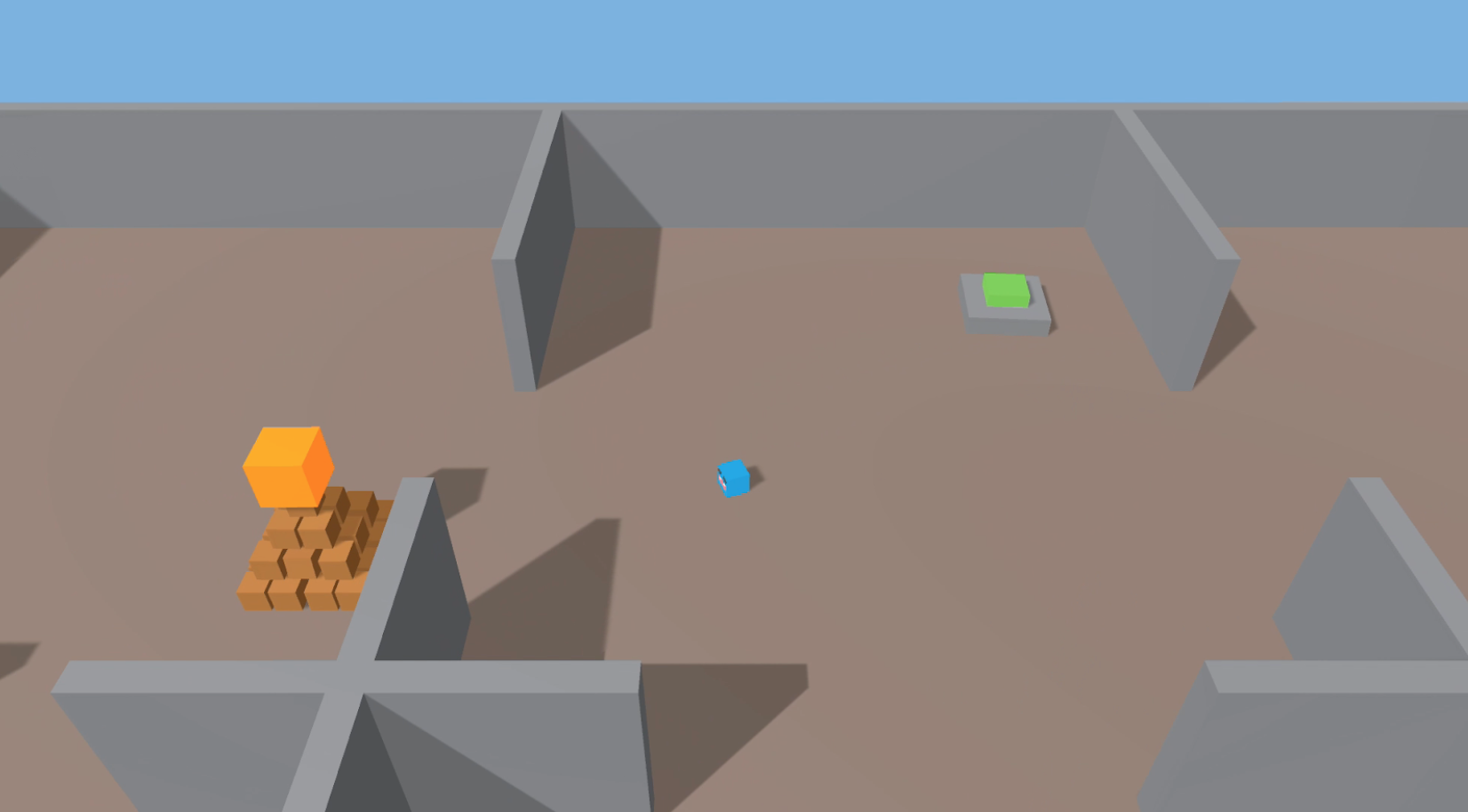

* Adds implementation of Curiosity-driven Exploration by Self-supervised Prediction (https://arxiv.org/abs/1705.05363) to PPO trainer.
* To enable, set use_curiosity flag to true in hyperparameter file.
* Includes refactor of unitytrainers model code to accommodate new feature.
* Adds new Pyramids environment (w/ documentation). Environment contains sparse reward, and can only be solved using PPO+Curiosity.

/develop-generalizationTraining-TrainerController
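As a rough illustration of the idea behind the cited paper (not the code this commit adds to the PPO trainer), the curiosity signal can be sketched as an intrinsic reward proportional to the forward model's prediction error in feature space, which is then added to the environment's extrinsic reward. The function names, the `strength` parameter, and the plain-list feature vectors below are all assumptions made for this sketch.

```python
def intrinsic_reward(predicted_next, actual_next, strength=0.01):
    """Scaled half squared error between predicted and actual next-state features.

    Follows the form r_i = (strength / 2) * ||predicted - actual||^2 from the paper.
    `strength` is a hypothetical name for the scaling factor.
    """
    if len(predicted_next) != len(actual_next):
        raise ValueError("feature vectors must have the same length")
    squared_error = sum((p - a) ** 2 for p, a in zip(predicted_next, actual_next))
    return strength * 0.5 * squared_error


def total_reward(extrinsic, predicted_next, actual_next, strength=0.01):
    """Reward used for the policy update: extrinsic plus curiosity bonus."""
    return extrinsic + intrinsic_reward(predicted_next, actual_next, strength)


# A perfectly predicted transition earns no bonus; a surprising one does.
print(intrinsic_reward([1.0, 2.0], [1.0, 2.0]))
print(total_reward(0.0, [0.0, 0.0], [3.0, 4.0], strength=0.1))
```

In a sparse-reward environment such as Pyramids, the extrinsic term is near zero for most steps, so the bonus is what drives the agent toward transitions it cannot yet predict.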

Current commit

c17937ef

59 files changed, with 7466 insertions and 119 deletions

15 docs/Learning-Environment-Examples.md
5 docs/Training-ML-Agents.md
16 docs/Training-PPO.md
5 docs/Using-Tensorboard.md
34 python/trainer_config.yaml
2 python/unitytrainers/bc/models.py
179 python/unitytrainers/models.py
112 python/unitytrainers/ppo/models.py
160 python/unitytrainers/ppo/trainer.py
3 python/unitytrainers/trainer_controller.py
10 unity-environment/Assets/ML-Agents/Scripts/Agent.cs
4 unity-environment/ProjectSettings/TagManager.asset
996 docs/images/pyramids.png
8 unity-environment/Assets/ML-Agents/Examples/Pyramids.meta
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Brick.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Brick.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Gold.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Gold.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/agent.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/agent.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/black.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/black.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/ground.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/ground.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/red.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/red.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/wall.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/wall.mat.meta
76 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/white.mat
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/white.mat.meta
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs.meta
1001 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/AreaPB.prefab
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/AreaPB.prefab.meta
1001 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/BrickPyramid.prefab
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/BrickPyramid.prefab.meta
1001 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/StonePyramid.prefab
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/StonePyramid.prefab.meta
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes.meta
1001 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes/Pyramids.unity
7 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes/Pyramids.unity.meta
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts.meta
18 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAcademy.cs
11 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAcademy.cs.meta
117 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAgent.cs
11 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAgent.cs.meta
55 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidArea.cs
11 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidArea.cs.meta
47 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidSwitch.cs
11 unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidSwitch.cs.meta
8 unity-environment/Assets/ML-Agents/Examples/Pyramids/TFModels.meta
1001 unity-environment/Assets/ML-Agents/Examples/Pyramids/TFModels/Pyramids.bytes
7 unity-environment/Assets/ML-Agents/Examples/Pyramids/TFModels/Pyramids.bytes.meta

unity-environment/Assets/ML-Agents/Examples/Pyramids.meta

fileFormatVersion: 2
guid: d970a35d94c53437b9ebc56130744a23
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials.meta

fileFormatVersion: 2
guid: e21d506a8dc40465eae48bae17b75e49
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Brick.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: Brick
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.5660378, g: 0.37924042, b: 0.18956926, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Brick.mat.meta

fileFormatVersion: 2
guid: 3061b37049eb04ddfa822f606369919d
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 2100000
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Gold.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: Gold
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords: _EMISSION
  m_LightmapFlags: 1
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0.5
    - _GlossyReflections: 1
    - _Metallic: 0.926
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.5660378, g: 0.5660378, b: 0.5660378, a: 1}
    - _EmissionColor: {r: 1, g: 0.505618, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/Gold.mat.meta

fileFormatVersion: 2
guid: 860625a788df041aeb5c35413765e9df
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 2100000
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/agent.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: agent
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0.5
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.24345203, g: 0.4278206, b: 0.503, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/agent.mat.meta

fileFormatVersion: 2
guid: 7ec5b27785bab4f37a9ae5faa93c92b7
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/black.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: black
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.19852942, g: 0.19852942, b: 0.19852942, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/black.mat.meta

fileFormatVersion: 2
guid: 45735b9be79ab49b887c5f09cbb914b9
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/ground.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: ground
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0.2
    - _GlossyReflections: 1
    - _Metallic: 0.2
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.49056602, g: 0.4321629, b: 0.3910644, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/ground.mat.meta

fileFormatVersion: 2
guid: 7f92b7019e6e5485fa6201e1db7c5658
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/red.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: red
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.72794116, g: 0.35326555, b: 0.35326555, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/red.mat.meta

fileFormatVersion: 2
guid: fee4f54a87e3a4da494cc22082809bb4
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/wall.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: wall
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0.5
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 0.44705883, g: 0.4509804, b: 0.4627451, a: 0}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/wall.mat.meta

fileFormatVersion: 2
guid: 816cdc1b97eae4fe1bd0e092e5f7ed04
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/white.mat

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!21 &2100000
Material:
  serializedVersion: 6
  m_ObjectHideFlags: 0
  m_PrefabParentObject: {fileID: 0}
  m_PrefabInternal: {fileID: 0}
  m_Name: white
  m_Shader: {fileID: 46, guid: 0000000000000000f000000000000000, type: 0}
  m_ShaderKeywords:
  m_LightmapFlags: 4
  m_EnableInstancingVariants: 0
  m_DoubleSidedGI: 0
  m_CustomRenderQueue: -1
  stringTagMap: {}
  disabledShaderPasses: []
  m_SavedProperties:
    serializedVersion: 3
    m_TexEnvs:
    - _BumpMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailAlbedoMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailMask:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _DetailNormalMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _EmissionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MainTex:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _MetallicGlossMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _OcclusionMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    - _ParallaxMap:
        m_Texture: {fileID: 0}
        m_Scale: {x: 1, y: 1}
        m_Offset: {x: 0, y: 0}
    m_Floats:
    - _BumpScale: 1
    - _Cutoff: 0.5
    - _DetailNormalMapScale: 1
    - _DstBlend: 0
    - _GlossMapScale: 1
    - _Glossiness: 0
    - _GlossyReflections: 1
    - _Metallic: 0
    - _Mode: 0
    - _OcclusionStrength: 1
    - _Parallax: 0.02
    - _SmoothnessTextureChannel: 0
    - _SpecularHighlights: 1
    - _SrcBlend: 1
    - _UVSec: 0
    - _ZWrite: 1
    m_Colors:
    - _Color: {r: 1, g: 1, b: 1, a: 1}
    - _EmissionColor: {r: 0, g: 0, b: 0, a: 1}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Materials/white.mat.meta

fileFormatVersion: 2
guid: 83456930795894bb5b51f4e8a620bc8b
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs.meta

fileFormatVersion: 2
guid: 3ce93d04f41114481ac56aefa2c93bb2
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/AreaPB.prefab

File diff suppressed because it is too large to display.

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/AreaPB.prefab.meta

fileFormatVersion: 2
guid: bd804431e808a492bb5658bcd296e58e
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 100100000
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/BrickPyramid.prefab

File diff suppressed because it is too large to display.

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/BrickPyramid.prefab.meta

fileFormatVersion: 2
guid: 8be2b3870e2cd4ad8bbf080059b2a132
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 100100000
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/StonePyramid.prefab

File diff suppressed because it is too large to display.

unity-environment/Assets/ML-Agents/Examples/Pyramids/Prefabs/StonePyramid.prefab.meta

fileFormatVersion: 2
guid: 41512dd84b60643ceb3855fcf9d7d318
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 100100000
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes.meta

fileFormatVersion: 2
guid: f391d733a889c4e2f92d1cfdf0912976
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes/Pyramids.unity

File diff suppressed because it is too large to display.

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scenes/Pyramids.unity.meta

fileFormatVersion: 2
guid: 35afbc150a44b4aa69ca04685486b5c4
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts.meta

fileFormatVersion: 2
guid: 4c6b273d7fcab4956958a9049c2a850c
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAcademy.cs

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.UI;

public class PyramidAcademy : Academy
{

    public override void AcademyReset()
    {

    }

    public override void AcademyStep()
    {

    }
}

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAcademy.cs.meta

fileFormatVersion: 2
guid: dba8df9c8b16946dc88d331a301d0ab3
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAgent.cs

using System;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using UnityEngine;
using Random = UnityEngine.Random;

public class PyramidAgent : Agent
{
    public GameObject area;
    private PyramidArea myArea;
    private Rigidbody agentRb;
    private RayPerception rayPer;
    private PyramidSwitch switchLogic;
    public GameObject areaSwitch;
    public bool useVectorObs;

    public override void InitializeAgent()
    {
        base.InitializeAgent();
        agentRb = GetComponent<Rigidbody>();
        myArea = area.GetComponent<PyramidArea>();
        rayPer = GetComponent<RayPerception>();
        switchLogic = areaSwitch.GetComponent<PyramidSwitch>();
    }

    public override void CollectObservations()
    {
        if (useVectorObs)
        {
            const float rayDistance = 35f;
            float[] rayAngles = {20f, 90f, 160f, 45f, 135f, 70f, 110f};
            float[] rayAngles1 = {25f, 95f, 165f, 50f, 140f, 75f, 115f};
            float[] rayAngles2 = {15f, 85f, 155f, 40f, 130f, 65f, 105f};

            string[] detectableObjects = {"block", "wall", "goal", "switchOff", "switchOn", "stone"};
            AddVectorObs(rayPer.Perceive(rayDistance, rayAngles, detectableObjects, 0f, 0f));
            AddVectorObs(rayPer.Perceive(rayDistance, rayAngles1, detectableObjects, 0f, 5f));
            AddVectorObs(rayPer.Perceive(rayDistance, rayAngles2, detectableObjects, 0f, 10f));
            AddVectorObs(switchLogic.GetState());
            AddVectorObs(transform.InverseTransformDirection(agentRb.velocity));
        }
    }

    public void MoveAgent(float[] act)
    {
        var dirToGo = Vector3.zero;
        var rotateDir = Vector3.zero;

        if (brain.brainParameters.vectorActionSpaceType == SpaceType.continuous)
        {
            dirToGo = transform.forward * Mathf.Clamp(act[0], -1f, 1f);
            rotateDir = transform.up * Mathf.Clamp(act[1], -1f, 1f);
        }
        else
        {
            var action = Mathf.FloorToInt(act[0]);
            switch (action)
            {
                case 0:
                    dirToGo = transform.forward * 1f;
                    break;
                case 1:
                    dirToGo = transform.forward * -1f;
                    break;
                case 2:
                    rotateDir = transform.up * 1f;
                    break;
                case 3:
                    rotateDir = transform.up * -1f;
                    break;
            }
        }
        transform.Rotate(rotateDir, Time.deltaTime * 200f);
        agentRb.AddForce(dirToGo * 2f, ForceMode.VelocityChange);
    }

    public override void AgentAction(float[] vectorAction, string textAction)
    {
        AddReward(-1f / agentParameters.maxStep);
        MoveAgent(vectorAction);
    }

    public override void AgentReset()
    {
        var enumerable = Enumerable.Range(0, 9).OrderBy(x => Guid.NewGuid()).Take(9);
        var items = enumerable.ToArray();

        myArea.CleanPyramidArea();

        agentRb.velocity = Vector3.zero;
        myArea.PlaceObject(gameObject, items[0]);
        transform.rotation = Quaternion.Euler(new Vector3(0f, Random.Range(0, 360)));

        switchLogic.ResetSwitch(items[1], items[2]);
        myArea.CreateStonePyramid(1, items[3]);
        myArea.CreateStonePyramid(1, items[4]);
        myArea.CreateStonePyramid(1, items[5]);
        myArea.CreateStonePyramid(1, items[6]);
        myArea.CreateStonePyramid(1, items[7]);
        myArea.CreateStonePyramid(1, items[8]);
    }

    private void OnCollisionEnter(Collision collision)
    {
        if (collision.gameObject.CompareTag("goal"))
        {
            SetReward(2f);
            Done();
        }
    }

    public override void AgentOnDone()
    {

    }
}
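The `AgentReset` method above orders the indices 0 to 8 by freshly generated GUIDs, which amounts to drawing a random permutation of the nine spawn areas and handing out distinct indices to the agent, the switch, and six stone pyramids. A minimal Python sketch of that layout step follows; the function name, the dictionary keys, and the interpretation of the two indices passed to `ResetSwitch` are assumptions made for illustration, since `PyramidSwitch` is not shown here.

```python
import random

NUM_SPAWN_AREAS = 9  # mirrors Enumerable.Range(0, 9) in AgentReset


def assign_spawn_areas(rng=random):
    """Draw a random permutation of spawn-area indices and split it by role."""
    # random.sample over the full range yields a permutation without repeats.
    items = rng.sample(range(NUM_SPAWN_AREAS), NUM_SPAWN_AREAS)
    return {
        "agent": items[0],
        # Two indices go to ResetSwitch; what each one means is assumed unknown here.
        "switch_args": (items[1], items[2]),
        "stone_pyramids": items[3:9],
    }


layout = assign_spawn_areas(random.Random(0))
print(layout)
```

Because every role draws from the same permutation, no two of these objects are assigned the same spawn area in a given episode.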

unity-environment/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAgent.cs.meta

fileFormatVersion: 2
guid: b8db44472779248d3be46895c4d562d5
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class PyramidArea : Area
{
    public GameObject pyramid;
    public GameObject stonePyramid;
    public GameObject[] spawnAreas;
    public int numPyra;
    public float range;

    public void CreatePyramid(int numObjects, int spawnAreaIndex)
    {
        CreateObject(numObjects, pyramid, spawnAreaIndex);
    }

    public void CreateStonePyramid(int numObjects, int spawnAreaIndex)
    {
        CreateObject(numObjects, stonePyramid, spawnAreaIndex);
    }

    private void CreateObject(int numObjects, GameObject desiredObject, int spawnAreaIndex)
    {
        for (var i = 0; i < numObjects; i++)
        {
            var newObject = Instantiate(desiredObject, Vector3.zero,
                Quaternion.Euler(0f, 0f, 0f), transform);
            PlaceObject(newObject, spawnAreaIndex);
        }
    }

    public void PlaceObject(GameObject objectToPlace, int spawnAreaIndex)
    {
        var spawnTransform = spawnAreas[spawnAreaIndex].transform;
        var xRange = spawnTransform.localScale.x / 2.1f;
        var zRange = spawnTransform.localScale.z / 2.1f;

        objectToPlace.transform.position = new Vector3(Random.Range(-xRange, xRange), 2f, Random.Range(-zRange, zRange))
                                           + spawnTransform.position;
    }

    public void CleanPyramidArea()
    {
        foreach (Transform child in transform) if (child.CompareTag("pyramid"))
        {
            Destroy(child.gameObject);
        }
    }

    public override void ResetArea()
    {

    }
}

fileFormatVersion: 2
guid: e048de15d0b8a4643a75c2b09981792e
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class PyramidSwitch : MonoBehaviour
{
    public Material onMaterial;
    public Material offMaterial;
    public GameObject myButton;
    private bool state;
    private GameObject area;
    private PyramidArea areaComponent;
    private int pyramidIndex;

    public bool GetState()
    {
        return state;
    }

    private void Start()
    {
        area = gameObject.transform.parent.gameObject;
        areaComponent = area.GetComponent<PyramidArea>();
    }

    public void ResetSwitch(int spawnAreaIndex, int pyramidSpawnIndex)
    {
        areaComponent.PlaceObject(gameObject, spawnAreaIndex);
        state = false;
        pyramidIndex = pyramidSpawnIndex;
        tag = "switchOff";
        transform.rotation = Quaternion.Euler(0f, 0f, 0f);
        myButton.GetComponent<Renderer>().material = offMaterial;
    }

    private void OnCollisionEnter(Collision other)
    {
        if (other.gameObject.CompareTag("agent") && state == false)
        {
            myButton.GetComponent<Renderer>().material = onMaterial;
            state = true;
            areaComponent.CreatePyramid(1, pyramidIndex);
            tag = "switchOn";
        }
    }

}

fileFormatVersion: 2
guid: abd01d977612744528db278c446e9a11
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: c577914cc4ace45baa8c4dd54778ae00
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

1001

unity-environment/Assets/ML-Agents/Examples/Pyramids/TFModels/Pyramids.bytes

File diff suppressed because it is too large to display


fileFormatVersion: 2
guid: 97f59608051e548d9a79803894260d13
TextScriptImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
