Current commit

b4f52c88

51 files changed, with 2887 insertions and 5855 deletions.

Changed files (number of changed lines, insertions plus deletions):

- .yamato/gym-interface-test.yml (2)
- .yamato/protobuf-generation-test.yml (4)
- .yamato/python-ll-api-test.yml (2)
- .yamato/standalone-build-test.yml (5)
- .yamato/training-int-tests.yml (11)
- README.md (2)
- com.unity.ml-agents/CHANGELOG.md (4)
- com.unity.ml-agents/Runtime/Academy.cs (24)
- com.unity.ml-agents/Runtime/Agent.cs (6)
- com.unity.ml-agents/Runtime/DecisionRequester.cs (34)
- com.unity.ml-agents/Runtime/SideChannels/IncomingMessage.cs (44)
- com.unity.ml-agents/Runtime/Timer.cs (69)
- com.unity.ml-agents/Tests/Editor/MLAgentsEditModeTest.cs (47)
- com.unity.ml-agents/Tests/Editor/PublicAPI/PublicApiValidation.cs (102)
- com.unity.ml-agents/Tests/Editor/SideChannelTests.cs (26)
- com.unity.ml-agents/Tests/Editor/TimerTest.cs (2)
- com.unity.ml-agents/package.json (16)
- docs/Getting-Started.md (3)
- docs/ML-Agents-Overview.md (10)
- docs/Migrating.md (1)
- docs/Training-ML-Agents.md (2)

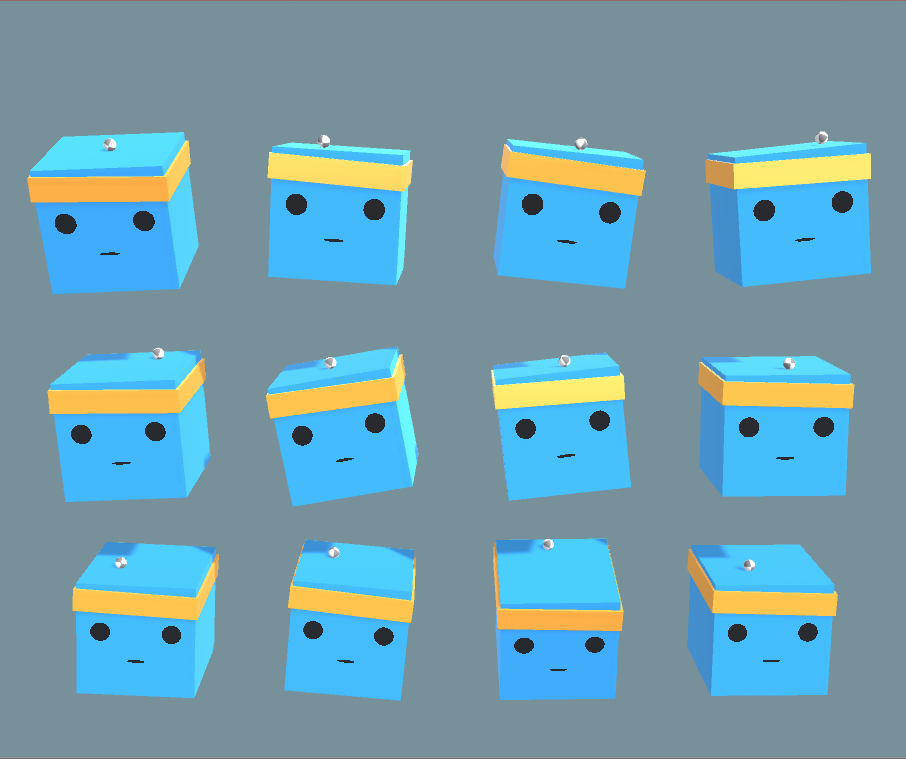

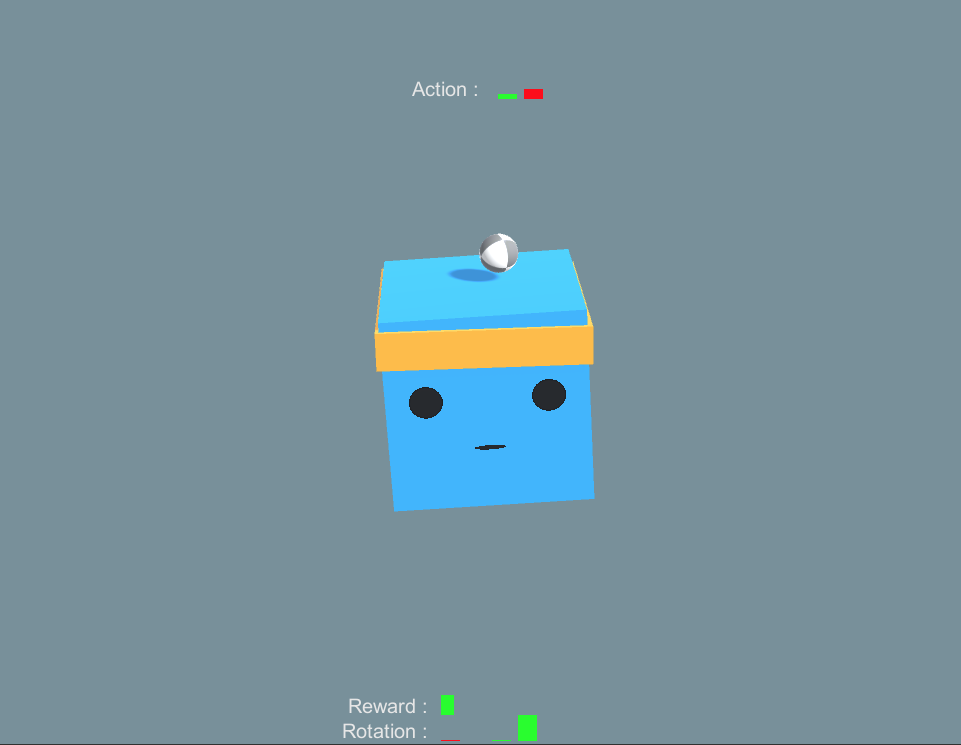

- docs/images/3dball_big.png (999)
- docs/images/3dball_small.png (852)
- docs/images/curriculum.png (974)
- docs/images/ml-agents-LSTM.png (999)
- docs/images/monitor.png (181)
- ml-agents-envs/mlagents_envs/environment.py (2)
- ml-agents-envs/mlagents_envs/side_channel/incoming_message.py (38)
- ml-agents-envs/mlagents_envs/tests/test_side_channel.py (20)
- ml-agents-envs/mlagents_envs/tests/test_timers.py (7)
- ml-agents-envs/mlagents_envs/timers.py (39)
- ml-agents/mlagents/trainers/agent_processor.py (1)
- ml-agents/mlagents/trainers/demo_loader.py (14)
- ml-agents/mlagents/trainers/env_manager.py (1)
- ml-agents/mlagents/trainers/learn.py (12)
- ml-agents/mlagents/trainers/tests/test_demo_loader.py (24)
- ml-agents/tests/yamato/scripts/run_llapi.py (2)
- ml-agents/tests/yamato/standalone_build_tests.py (2)
- ml-agents/tests/yamato/training_int_tests.py (13)
- ml-agents/tests/yamato/yamato_utils.py (37)
- utils/make_readme_table.py (1)
- com.unity.ml-agents/Tests/Runtime/RuntimeAPITest.cs (129)
- com.unity.ml-agents/Tests/Runtime/RuntimeAPITest.cs.meta (11)
- com.unity.ml-agents/Tests/Runtime/Unity.ML-Agents.Runtime.Tests.asmdef (25)
- com.unity.ml-agents/Tests/Runtime/Unity.ML-Agents.Runtime.Tests.asmdef.meta (7)
- com.unity.ml-agents/Tests/Runtime/SerializeTestScene.unity (429)
- com.unity.ml-agents/Tests/Runtime/SerializeTestScene.unity.meta (7)
- docs/images/banana.png (1001)
- docs/images/running-a-pretrained-model.gif (1001)
- docs/images/3dballhard.png (497)
- docs/images/bananaimitation.png (1001)

com.unity.ml-agents/package.json

Before:

{
  "name": "com.unity.ml-agents",
  "displayName":"ML Agents",
  "version": "0.15.0-preview",
  "unity": "2018.4",
  "description": "Add interactivity to your game with Machine Learning Agents trained using Deep Reinforcement Learning.",
  "dependencies": {
    "com.unity.barracuda": "0.6.1-preview"
  }
}

After:

{
  "name": "com.unity.ml-agents",
  "displayName": "ML Agents",
  "version": "0.15.1-preview",
  "unity": "2018.4",
  "description": "Add interactivity to your game with Machine Learning Agents trained using Deep Reinforcement Learning.",
  "dependencies": {
    "com.unity.barracuda": "0.6.1-preview"
  }
}

docs/images/3dball_big.png (999 changed lines): the file diff is too large to display.

docs/images/ml-agents-LSTM.png (999 changed lines): the file diff is too large to display.

com.unity.ml-agents/Tests/Runtime/RuntimeAPITest.cs

#if UNITY_INCLUDE_TESTS
using System.Collections;
using System.Collections.Generic;
using MLAgents;
using MLAgents.Policies;
using MLAgents.Sensors;
using NUnit.Framework;
using UnityEngine;
using UnityEngine.TestTools;

namespace Tests
{
    public class PublicApiAgent : Agent
    {
        public int numHeuristicCalls;

        public override float[] Heuristic()
        {
            numHeuristicCalls++;
            return base.Heuristic();
        }
    }

    // Simple SensorComponent that sets up a StackingSensor
    public class StackingComponent : SensorComponent
    {
        public SensorComponent wrappedComponent;
        public int numStacks;

        public override ISensor CreateSensor()
        {
            var wrappedSensor = wrappedComponent.CreateSensor();
            return new StackingSensor(wrappedSensor, numStacks);
        }

        public override int[] GetObservationShape()
        {
            int[] shape = (int[]) wrappedComponent.GetObservationShape().Clone();
            for (var i = 0; i < shape.Length; i++)
            {
                shape[i] *= numStacks;
            }

            return shape;
        }
    }

    public class RuntimeApiTest
    {
        [SetUp]
        public static void Setup()
        {
            Academy.Instance.AutomaticSteppingEnabled = false;
        }

        [UnityTest]
        public IEnumerator RuntimeApiTestWithEnumeratorPasses()
        {
            var gameObject = new GameObject();

            var behaviorParams = gameObject.AddComponent<BehaviorParameters>();
            behaviorParams.brainParameters.vectorObservationSize = 3;
            behaviorParams.brainParameters.numStackedVectorObservations = 2;
            behaviorParams.brainParameters.vectorActionDescriptions = new[] { "TestActionA", "TestActionB" };
            behaviorParams.brainParameters.vectorActionSize = new[] { 2, 2 };
            behaviorParams.brainParameters.vectorActionSpaceType = SpaceType.Discrete;
            behaviorParams.behaviorName = "TestBehavior";
            behaviorParams.TeamId = 42;
            behaviorParams.useChildSensors = true;

            // Can't actually create an Agent with InferenceOnly and no model, so change back
            behaviorParams.behaviorType = BehaviorType.Default;

            var sensorComponent = gameObject.AddComponent<RayPerceptionSensorComponent3D>();
            sensorComponent.sensorName = "ray3d";
            sensorComponent.detectableTags = new List<string> { "Player", "Respawn" };
            sensorComponent.raysPerDirection = 3;

            // Make a StackingSensor that wraps the RayPerceptionSensorComponent3D
            // This isn't necessarily practical, just to ensure that it can be done
            var wrappingSensorComponent = gameObject.AddComponent<StackingComponent>();
            wrappingSensorComponent.wrappedComponent = sensorComponent;
            wrappingSensorComponent.numStacks = 3;

            // ISensor isn't set up yet.
            Assert.IsNull(sensorComponent.raySensor);

            // Make sure we can set the behavior type correctly after the agent is initialized
            // (this creates a new policy).
            behaviorParams.behaviorType = BehaviorType.HeuristicOnly;

            // Agent needs to be added after everything else is setup.
            var agent = gameObject.AddComponent<PublicApiAgent>();

            // DecisionRequester has to be added after Agent.
            var decisionRequester = gameObject.AddComponent<DecisionRequester>();
            decisionRequester.DecisionPeriod = 2;
            decisionRequester.TakeActionsBetweenDecisions = true;

            // Initialization should set up the sensors
            Assert.IsNotNull(sensorComponent.raySensor);

            // Let's change the inference device
            var otherDevice = behaviorParams.inferenceDevice == InferenceDevice.CPU ? InferenceDevice.GPU : InferenceDevice.CPU;
            agent.SetModel(behaviorParams.behaviorName, behaviorParams.model, otherDevice);

            agent.AddReward(1.0f);

            // skip a frame.
            yield return null;

            Academy.Instance.EnvironmentStep();

            var actions = agent.GetAction();
            // default Heuristic implementation should return zero actions.
            Assert.AreEqual(new[] {0.0f, 0.0f}, actions);
            Assert.AreEqual(1, agent.numHeuristicCalls);

            Academy.Instance.EnvironmentStep();
            Assert.AreEqual(1, agent.numHeuristicCalls);

            Academy.Instance.EnvironmentStep();
            Assert.AreEqual(2, agent.numHeuristicCalls);
        }
    }
}
#endif

com.unity.ml-agents/Tests/Runtime/RuntimeAPITest.cs.meta

fileFormatVersion: 2
guid: 17878576e4ed14b09875e37394e5ad90
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:

com.unity.ml-agents/Tests/Runtime/Unity.ML-Agents.Runtime.Tests.asmdef

{
    "name": "Tests",
    "references": [
        "Unity.ML-Agents",
        "Barracuda",
        "Unity.ML-Agents.CommunicatorObjects",
        "Unity.ML-Agents.Editor"
    ],
    "optionalUnityReferences": [
        "TestAssemblies"
    ],
    "includePlatforms": [],
    "excludePlatforms": [],
    "allowUnsafeCode": false,
    "overrideReferences": true,
    "precompiledReferences": [
        "System.IO.Abstractions.dll",
        "System.IO.Abstractions.TestingHelpers.dll",
        "Google.Protobuf.dll"
    ],
    "autoReferenced": false,
    "defineConstraints": [
        "UNITY_INCLUDE_TESTS"
    ]
}

com.unity.ml-agents/Tests/Runtime/Unity.ML-Agents.Runtime.Tests.asmdef.meta

fileFormatVersion: 2
guid: d29014db7ebcd4cf4a14f537fbf02110
AssemblyDefinitionImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

com.unity.ml-agents/Tests/Runtime/SerializeTestScene.unity

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!29 &1
OcclusionCullingSettings:
  m_ObjectHideFlags: 0
  serializedVersion: 2
  m_OcclusionBakeSettings:
    smallestOccluder: 5
    smallestHole: 0.25
    backfaceThreshold: 100
  m_SceneGUID: 00000000000000000000000000000000
  m_OcclusionCullingData: {fileID: 0}
--- !u!104 &2
RenderSettings:
  m_ObjectHideFlags: 0
  serializedVersion: 9
  m_Fog: 0
  m_FogColor: {r: 0.5, g: 0.5, b: 0.5, a: 1}
  m_FogMode: 3
  m_FogDensity: 0.01
  m_LinearFogStart: 0
  m_LinearFogEnd: 300
  m_AmbientSkyColor: {r: 0.212, g: 0.227, b: 0.259, a: 1}
  m_AmbientEquatorColor: {r: 0.114, g: 0.125, b: 0.133, a: 1}
  m_AmbientGroundColor: {r: 0.047, g: 0.043, b: 0.035, a: 1}
  m_AmbientIntensity: 1
  m_AmbientMode: 0
  m_SubtractiveShadowColor: {r: 0.42, g: 0.478, b: 0.627, a: 1}
  m_SkyboxMaterial: {fileID: 10304, guid: 0000000000000000f000000000000000, type: 0}
  m_HaloStrength: 0.5
  m_FlareStrength: 1
  m_FlareFadeSpeed: 3
  m_HaloTexture: {fileID: 0}
  m_SpotCookie: {fileID: 10001, guid: 0000000000000000e000000000000000, type: 0}
  m_DefaultReflectionMode: 0
  m_DefaultReflectionResolution: 128
  m_ReflectionBounces: 1
  m_ReflectionIntensity: 1
  m_CustomReflection: {fileID: 0}
  m_Sun: {fileID: 0}
  m_IndirectSpecularColor: {r: 0.44657898, g: 0.49641287, b: 0.5748173, a: 1}
  m_UseRadianceAmbientProbe: 0

--- !u!157 &3
LightmapSettings:
  m_ObjectHideFlags: 0
  serializedVersion: 11
  m_GIWorkflowMode: 0
  m_GISettings:
    serializedVersion: 2
    m_BounceScale: 1
    m_IndirectOutputScale: 1
    m_AlbedoBoost: 1
    m_EnvironmentLightingMode: 0
    m_EnableBakedLightmaps: 1
    m_EnableRealtimeLightmaps: 1
  m_LightmapEditorSettings:
    serializedVersion: 10
    m_Resolution: 2
    m_BakeResolution: 40
    m_AtlasSize: 1024
    m_AO: 0
    m_AOMaxDistance: 1
    m_CompAOExponent: 1
    m_CompAOExponentDirect: 0
    m_Padding: 2
    m_LightmapParameters: {fileID: 0}
    m_LightmapsBakeMode: 1
    m_TextureCompression: 1
    m_FinalGather: 0
    m_FinalGatherFiltering: 1
    m_FinalGatherRayCount: 256
    m_ReflectionCompression: 2
    m_MixedBakeMode: 2
    m_BakeBackend: 1
    m_PVRSampling: 1
    m_PVRDirectSampleCount: 32
    m_PVRSampleCount: 500
    m_PVRBounces: 2
    m_PVRFilterTypeDirect: 0
    m_PVRFilterTypeIndirect: 0
    m_PVRFilterTypeAO: 0
    m_PVRFilteringMode: 1
    m_PVRCulling: 1
    m_PVRFilteringGaussRadiusDirect: 1
    m_PVRFilteringGaussRadiusIndirect: 5
    m_PVRFilteringGaussRadiusAO: 2
    m_PVRFilteringAtrousPositionSigmaDirect: 0.5
    m_PVRFilteringAtrousPositionSigmaIndirect: 2
    m_PVRFilteringAtrousPositionSigmaAO: 1
    m_ShowResolutionOverlay: 1
  m_LightingDataAsset: {fileID: 0}
  m_UseShadowmask: 1

--- !u!196 &4
NavMeshSettings:
  serializedVersion: 2
  m_ObjectHideFlags: 0
  m_BuildSettings:
    serializedVersion: 2
    agentTypeID: 0
    agentRadius: 0.5
    agentHeight: 2
    agentSlope: 45
    agentClimb: 0.4
    ledgeDropHeight: 0
    maxJumpAcrossDistance: 0
    minRegionArea: 2
    manualCellSize: 0
    cellSize: 0.16666667
    manualTileSize: 0
    tileSize: 256
    accuratePlacement: 0
    debug:
      m_Flags: 0
  m_NavMeshData: {fileID: 0}

--- !u!1 &106586301
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 106586304}
  - component: {fileID: 106586303}
  - component: {fileID: 106586302}
  m_Layer: 0
  m_Name: Agent
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!114 &106586302
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 106586301}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: c3d607733e457478885f15ee89725709, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  agentParameters:
    maxStep: 5000
  hasUpgradedFromAgentParameters: 1
  maxStep: 5000
--- !u!114 &106586303
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 106586301}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 5d1c4e0b1822b495aa52bc52839ecb30, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  m_BrainParameters:
    vectorObservationSize: 1
    numStackedVectorObservations: 1
    vectorActionSize: 01000000
    vectorActionDescriptions: []
    vectorActionSpaceType: 0
  m_Model: {fileID: 0}
  m_InferenceDevice: 0
  m_BehaviorType: 0
  m_BehaviorName: My Behavior
  m_TeamID: 0
  m_UseChildSensors: 1
--- !u!4 &106586304
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 106586301}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 1471486645}
  m_Father: {fileID: 0}
  m_RootOrder: 2
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}

--- !u!1 &185701317
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 185701319}
  - component: {fileID: 185701318}
  m_Layer: 0
  m_Name: Directional Light
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!108 &185701318
Light:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 185701317}
  m_Enabled: 1
  serializedVersion: 8
  m_Type: 1
  m_Color: {r: 1, g: 0.95686275, b: 0.8392157, a: 1}
  m_Intensity: 1
  m_Range: 10
  m_SpotAngle: 30
  m_CookieSize: 10
  m_Shadows:
    m_Type: 2
    m_Resolution: -1
    m_CustomResolution: -1
    m_Strength: 1
    m_Bias: 0.05
    m_NormalBias: 0.4
    m_NearPlane: 0.2
  m_Cookie: {fileID: 0}
  m_DrawHalo: 0
  m_Flare: {fileID: 0}
  m_RenderMode: 0
  m_CullingMask:
    serializedVersion: 2
    m_Bits: 4294967295
  m_Lightmapping: 4
  m_LightShadowCasterMode: 0
  m_AreaSize: {x: 1, y: 1}
  m_BounceIntensity: 1
  m_ColorTemperature: 6570
  m_UseColorTemperature: 0
  m_ShadowRadius: 0
  m_ShadowAngle: 0
--- !u!4 &185701319
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 185701317}
  m_LocalRotation: {x: 0.40821788, y: -0.23456968, z: 0.10938163, w: 0.8754261}
  m_LocalPosition: {x: 0, y: 3, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 0}
  m_RootOrder: 1
  m_LocalEulerAnglesHint: {x: 50, y: -30, z: 0}

--- !u!1 &804630118
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 804630121}
  - component: {fileID: 804630120}
  - component: {fileID: 804630119}
  m_Layer: 0
  m_Name: Main Camera
  m_TagString: MainCamera
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!81 &804630119
AudioListener:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 804630118}
  m_Enabled: 1
--- !u!20 &804630120
Camera:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 804630118}
  m_Enabled: 1
  serializedVersion: 2
  m_ClearFlags: 1
  m_BackGroundColor: {r: 0.19215687, g: 0.3019608, b: 0.4745098, a: 0}
  m_projectionMatrixMode: 1
  m_SensorSize: {x: 36, y: 24}
  m_LensShift: {x: 0, y: 0}
  m_GateFitMode: 2
  m_FocalLength: 50
  m_NormalizedViewPortRect:
    serializedVersion: 2
    x: 0
    y: 0
    width: 1
    height: 1
  near clip plane: 0.3
  far clip plane: 1000
  field of view: 60
  orthographic: 0
  orthographic size: 5
  m_Depth: -1
  m_CullingMask:
    serializedVersion: 2
    m_Bits: 4294967295
  m_RenderingPath: -1
  m_TargetTexture: {fileID: 0}
  m_TargetDisplay: 0
  m_TargetEye: 3
  m_HDR: 1
  m_AllowMSAA: 1
  m_AllowDynamicResolution: 0
  m_ForceIntoRT: 0
  m_OcclusionCulling: 1
  m_StereoConvergence: 10
  m_StereoSeparation: 0.022
--- !u!4 &804630121
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 804630118}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 1, z: -10}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 0}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}

--- !u!1 &1471486644
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 1471486645}
  - component: {fileID: 1471486648}
  - component: {fileID: 1471486647}
  - component: {fileID: 1471486646}
  m_Layer: 0
  m_Name: Cube
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &1471486645
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 1471486644}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 106586304}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!65 &1471486646
BoxCollider:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 1471486644}
  m_Material: {fileID: 0}
  m_IsTrigger: 0
  m_Enabled: 1
  serializedVersion: 2
  m_Size: {x: 1, y: 1, z: 1}
  m_Center: {x: 0, y: 0, z: 0}
--- !u!23 &1471486647
MeshRenderer:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 1471486644}
  m_Enabled: 1
  m_CastShadows: 1
  m_ReceiveShadows: 1
  m_DynamicOccludee: 1
  m_MotionVectors: 1
  m_LightProbeUsage: 1
  m_ReflectionProbeUsage: 1
  m_RenderingLayerMask: 1
  m_RendererPriority: 0
  m_Materials:
  - {fileID: 10303, guid: 0000000000000000f000000000000000, type: 0}
  m_StaticBatchInfo:
    firstSubMesh: 0
    subMeshCount: 0
  m_StaticBatchRoot: {fileID: 0}
  m_ProbeAnchor: {fileID: 0}
  m_LightProbeVolumeOverride: {fileID: 0}
  m_ScaleInLightmap: 1
  m_PreserveUVs: 0
  m_IgnoreNormalsForChartDetection: 0
  m_ImportantGI: 0
  m_StitchLightmapSeams: 0
  m_SelectedEditorRenderState: 3
  m_MinimumChartSize: 4
  m_AutoUVMaxDistance: 0.5
  m_AutoUVMaxAngle: 89
  m_LightmapParameters: {fileID: 0}
  m_SortingLayerID: 0
  m_SortingLayer: 0
  m_SortingOrder: 0
--- !u!33 &1471486648
MeshFilter:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 1471486644}
  m_Mesh: {fileID: 10202, guid: 0000000000000000e000000000000000, type: 0}

com.unity.ml-agents/Tests/Runtime/SerializeTestScene.unity.meta

fileFormatVersion: 2
guid: 60783bd849bd242eeb66243542762b23
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

docs/images/banana.png (1001 changed lines): the file diff is too large to display.

docs/images/running-a-pretrained-model.gif (1001 changed lines): the file diff is too large to display.

docs/images/3dballhard.png (497 changed lines): the file diff is too large to display.

docs/images/bananaimitation.png (1001 changed lines): the file diff is too large to display.
