Merge branch 'develop-hybrid-actions-singleton' into develop-hybrid-actions-csharp

/MLA-1734-demo-provider

Current commit

a7d04be6

105 files changed, with 6632 insertions and 293 deletions

-

10README.md

-

5com.unity.ml-agents.extensions/Documentation~/com.unity.ml-agents.extensions.md

-

7com.unity.ml-agents.extensions/Tests/Editor/Sensors/RigidBodySensorTests.cs

-

2com.unity.ml-agents/Documentation~/com.unity.ml-agents.md

-

4com.unity.ml-agents/Runtime/Academy.cs

-

2com.unity.ml-agents/Runtime/Actuators/IActionReceiver.cs

-

2com.unity.ml-agents/Runtime/Actuators/IDiscreteActionMask.cs

-

26com.unity.ml-agents/Runtime/Agent.cs

-

2com.unity.ml-agents/Runtime/Demonstrations/DemonstrationRecorder.cs

-

2com.unity.ml-agents/Runtime/DiscreteActionMasker.cs

-

2com.unity.ml-agents/Runtime/SensorHelper.cs

-

4docs/Installation-Anaconda-Windows.md

-

6docs/Installation.md

-

21docs/Learning-Environment-Examples.md

-

2docs/Training-on-Amazon-Web-Service.md

-

4docs/Unity-Inference-Engine.md

-

78ml-agents-envs/mlagents_envs/base_env.py

-

26ml-agents/mlagents/trainers/agent_processor.py

-

12ml-agents/mlagents/trainers/env_manager.py

-

12ml-agents/mlagents/trainers/policy/policy.py

-

22ml-agents/mlagents/trainers/policy/tf_policy.py

-

10ml-agents/mlagents/trainers/policy/torch_policy.py

-

6ml-agents/mlagents/trainers/ppo/optimizer_tf.py

-

3ml-agents/mlagents/trainers/simple_env_manager.py

-

7ml-agents/mlagents/trainers/subprocess_env_manager.py

-

20ml-agents/mlagents/trainers/tests/mock_brain.py

-

4ml-agents/mlagents/trainers/tests/tensorflow/test_simple_rl.py

-

2ml-agents/mlagents/trainers/tests/tensorflow/test_tf_policy.py

-

27ml-agents/mlagents/trainers/tests/test_agent_processor.py

-

4ml-agents/mlagents/trainers/tests/test_trajectory.py

-

2ml-agents/mlagents/trainers/tests/torch/test_distributions.py

-

82ml-agents/mlagents/trainers/tests/torch/test_hybrid.py

-

10ml-agents/mlagents/trainers/tests/torch/test_policy.py

-

11ml-agents/mlagents/trainers/tests/torch/test_ppo.py

-

4ml-agents/mlagents/trainers/tests/torch/test_simple_rl.py

-

4ml-agents/mlagents/trainers/tests/torch/test_utils.py

-

14ml-agents/mlagents/trainers/torch/action_flattener.py

-

44ml-agents/mlagents/trainers/torch/action_log_probs.py

-

84ml-agents/mlagents/trainers/torch/action_model.py

-

21ml-agents/mlagents/trainers/torch/agent_action.py

-

12ml-agents/mlagents/trainers/torch/components/bc/module.py

-

2ml-agents/mlagents/trainers/torch/distributions.py

-

25ml-agents/mlagents/trainers/trajectory.py

-

1utils/make_readme_table.py

-

8Project/Assets/ML-Agents/Examples/Match3.meta

-

67com.unity.ml-agents.extensions/Documentation~/Match3.md

-

3com.unity.ml-agents.extensions/Runtime/Match3.meta

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3.meta

-

75config/ppo/Match3.yaml

-

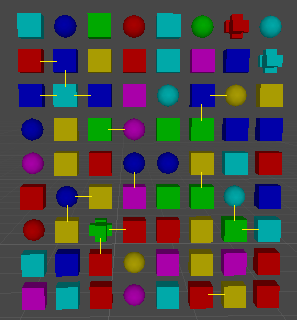

77docs/images/match3.png

-

8Project/Assets/ML-Agents/Examples/Match3/Prefabs.meta

-

174Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3Heuristic.prefab

-

7Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3Heuristic.prefab.meta

-

170Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VectorObs.prefab

-

7Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VectorObs.prefab.meta

-

170Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VisualObs.prefab

-

7Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VisualObs.prefab.meta

-

8Project/Assets/ML-Agents/Examples/Match3/Scenes.meta

-

1001Project/Assets/ML-Agents/Examples/Match3/Scenes/Match3.unity

-

7Project/Assets/ML-Agents/Examples/Match3/Scenes/Match3.unity.meta

-

8Project/Assets/ML-Agents/Examples/Match3/Scripts.meta

-

373Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Agent.cs

-

3Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Agent.cs.meta

-

272Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Board.cs

-

11Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Board.cs.meta

-

102Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Drawer.cs

-

3Project/Assets/ML-Agents/Examples/Match3/Scripts/Match3Drawer.cs.meta

-

8Project/Assets/ML-Agents/Examples/Match3/TFModels.meta

-

1001Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VectorObs.onnx

-

14Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VectorObs.onnx.meta

-

1001Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VisualObs.nn

-

11Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VisualObs.nn.meta

-

233com.unity.ml-agents.extensions/Runtime/Match3/AbstractBoard.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/AbstractBoard.cs.meta

-

120com.unity.ml-agents.extensions/Runtime/Match3/Match3Actuator.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/Match3Actuator.cs.meta

-

49com.unity.ml-agents.extensions/Runtime/Match3/Match3ActuatorComponent.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/Match3ActuatorComponent.cs.meta

-

297com.unity.ml-agents.extensions/Runtime/Match3/Match3Sensor.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/Match3Sensor.cs.meta

-

43com.unity.ml-agents.extensions/Runtime/Match3/Match3SensorComponent.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/Match3SensorComponent.cs.meta

-

260com.unity.ml-agents.extensions/Runtime/Match3/Move.cs

-

3com.unity.ml-agents.extensions/Runtime/Match3/Move.cs.meta

-

152com.unity.ml-agents.extensions/Tests/Editor/Match3/AbstractBoardTests.cs

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3/AbstractBoardTests.cs.meta

-

115com.unity.ml-agents.extensions/Tests/Editor/Match3/Match3ActuatorTests.cs

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3/Match3ActuatorTests.cs.meta

-

314com.unity.ml-agents.extensions/Tests/Editor/Match3/Match3SensorTests.cs

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3/Match3SensorTests.cs.meta

-

60com.unity.ml-agents.extensions/Tests/Editor/Match3/MoveTests.cs

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3/MoveTests.cs.meta

-

3com.unity.ml-agents.extensions/Tests/Editor/Match3/match3obs0.png

Project/Assets/ML-Agents/Examples/Match3.meta

fileFormatVersion: 2
guid: 85094c6352d9e43c497a54fef35e4d76
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

com.unity.ml-agents.extensions/Documentation~/Match3.md

# Match-3 Game Support

We provide some utilities to integrate ML-Agents with Match-3 games.

## AbstractBoard class

The `AbstractBoard` is the bridge between ML-Agents and your game. It allows ML-Agents to
* ask your game what the "color" of a cell is
* ask whether the cell is a "special" piece type or not
* ask your game whether a move is allowed
* request that your game make a move

These are handled by implementing the `GetCellType()`, `GetSpecialType()`, `IsMoveValid()`, and `MakeMove()` abstract methods.

The `AbstractBoard` also tracks the number of rows, columns, and potential piece types that the board can have.

#### `public abstract int GetCellType(int row, int col)`
Returns the "color" of the piece at the given row and column.
This should be between 0 and `NumCellTypes - 1` (inclusive).
The actual order of the values doesn't matter.

#### `public abstract int GetSpecialType(int row, int col)`
Returns the special type of the piece at the given row and column.
This should be between 0 and `NumSpecialTypes` (inclusive).
The actual order of the values doesn't matter.
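The two ranges above differ on purpose. With `NumCellTypes = 3`, a color is one of 0, 1, 2. With `NumSpecialTypes = 2`, a special type is one of 0, 1, 2, which is three values because the upper bound is inclusive. The Python sketch below enforces both ranges. `ToyBoard` and its methods are hypothetical stand-ins for an `AbstractBoard` subclass, not the ML-Agents API. Treating special type 0 as "ordinary piece" is an assumption.

```python
from typing import List


class ToyBoard:
    """Hypothetical stand-in for an AbstractBoard subclass; not the ML-Agents API."""

    def __init__(self, cells: List[List[int]], specials: List[List[int]],
                 num_cell_types: int, num_special_types: int) -> None:
        self.rows = len(cells)
        self.cols = len(cells[0])
        self.num_cell_types = num_cell_types
        self.num_special_types = num_special_types
        self.cells = cells
        self.specials = specials

    def get_cell_type(self, row: int, col: int) -> int:
        value = self.cells[row][col]
        # Colors must lie in 0 .. num_cell_types - 1 (inclusive).
        if not 0 <= value < self.num_cell_types:
            raise ValueError(f"cell type {value} out of range")
        return value

    def get_special_type(self, row: int, col: int) -> int:
        value = self.specials[row][col]
        # Special types must lie in 0 .. num_special_types (inclusive);
        # this sketch assumes 0 means "ordinary piece".
        if not 0 <= value <= self.num_special_types:
            raise ValueError(f"special type {value} out of range")
        return value


board = ToyBoard(cells=[[0, 1], [2, 0]], specials=[[0, 0], [2, 1]],
                 num_cell_types=3, num_special_types=2)
print(board.get_cell_type(1, 0), board.get_special_type(1, 0))  # 2 2
```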

#### `public abstract bool IsMoveValid(Move m)`
Checks whether the particular `Move` is valid for the game.
The actual results will depend on the rules of the game, but we provide the `SimpleIsMoveValid()` method
that handles basic match-3 rules with no special or immovable pieces.
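The following Python sketch shows what "basic match-3 rules" could mean. It assumes that swapping two orthogonally adjacent cells is valid exactly when, after the swap, one of the two swapped cells sits in a horizontal or vertical run of three or more cells of the same color. The function names are hypothetical, and the real `SimpleIsMoveValid()` may differ in its details.

```python
from typing import List, Tuple

Grid = List[List[int]]


def _run_length(grid: Grid, row: int, col: int, d_row: int, d_col: int) -> int:
    """Count consecutive cells with the same color as (row, col), stepping by (d_row, d_col)."""
    rows, cols = len(grid), len(grid[0])
    color = grid[row][col]
    count = 0
    r, c = row + d_row, col + d_col
    while 0 <= r < rows and 0 <= c < cols and grid[r][c] == color:
        count += 1
        r += d_row
        c += d_col
    return count


def _in_match(grid: Grid, row: int, col: int) -> bool:
    """True if (row, col) is part of a horizontal or vertical run of 3 or more."""
    horizontal = 1 + _run_length(grid, row, col, 0, -1) + _run_length(grid, row, col, 0, 1)
    vertical = 1 + _run_length(grid, row, col, -1, 0) + _run_length(grid, row, col, 1, 0)
    return horizontal >= 3 or vertical >= 3


def simple_is_move_valid(grid: Grid, a: Tuple[int, int], b: Tuple[int, int]) -> bool:
    """Swap two adjacent cells on a copy of the grid and report whether a match results."""
    (r1, c1), (r2, c2) = a, b
    if abs(r1 - r2) + abs(c1 - c2) != 1:
        return False  # only orthogonally adjacent cells can be swapped
    swapped = [list(row) for row in grid]
    swapped[r1][c1], swapped[r2][c2] = swapped[r2][c2], swapped[r1][c1]
    return _in_match(swapped, r1, c1) or _in_match(swapped, r2, c2)


grid = [
    [0, 0, 1],
    [2, 1, 0],
    [1, 2, 2],
]
print(simple_is_move_valid(grid, (0, 2), (1, 2)))  # True: top row becomes 0 0 0
print(simple_is_move_valid(grid, (1, 0), (1, 1)))  # False: no run of three
```

The grid is copied before swapping, so checking a move never changes the real board.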

#### `public abstract bool MakeMove(Move m)`
Instructs the game to make the given move. Returns true if the move was made.
Note that during training, a move that was marked as invalid may occasionally still be
requested. If this happens, it is safe to do nothing and request another move.
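One way to honor the "do nothing and request another move" advice is for the move method to check validity itself and return false without touching the board. This Python sketch models moves as plain integer indices. `GuardedBoard` and `first_successful_move` are hypothetical names used only for illustration.

```python
from typing import Iterable, List, Optional, Set


class GuardedBoard:
    """Hypothetical board whose make_move() is a no-op for invalid moves."""

    def __init__(self, valid_moves: Set[int]) -> None:
        self.valid_moves = set(valid_moves)
        self.applied: List[int] = []

    def is_move_valid(self, move_index: int) -> bool:
        return move_index in self.valid_moves

    def make_move(self, move_index: int) -> bool:
        if not self.is_move_valid(move_index):
            # A masked move slipped through: leave the board untouched and report it.
            return False
        self.applied.append(move_index)
        return True


def first_successful_move(board: GuardedBoard, requests: Iterable[int]) -> Optional[int]:
    """Offer successive requested moves until the board accepts one."""
    for move_index in requests:
        if board.make_move(move_index):
            return move_index
    return None


board = GuardedBoard(valid_moves={2, 5})
print(first_successful_move(board, [7, 1, 5, 2]))  # 5
print(board.applied)  # [5]
```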

## Move struct
The `Move` struct encapsulates a swap of two adjacent cells. You can get the number of potential moves
for a board of a given size with `Move.NumPotentialMoves(NumRows, NumColumns)`. There are two helper
functions to create a new `Move`:
* `public static Move FromMoveIndex(int moveIndex, int maxRows, int maxCols)` can be used to
  iterate over all potential moves for the board by looping over indices from 0 up to (but not including) `Move.NumPotentialMoves(NumRows, NumColumns)`.
* `public static Move FromPositionAndDirection(int row, int col, Direction dir, int maxRows, int maxCols)` creates
  a `Move` from a row, column, and direction (and board size).
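Assuming only orthogonal neighbors count as adjacent, a board with R rows and C columns has R(C - 1) horizontally adjacent pairs and (R - 1)C vertically adjacent pairs. The 9 x 8 board used by the example prefabs therefore has 9 * 7 + 8 * 8 = 127 potential moves. The Python sketch below shows one possible index layout: horizontal swaps first, then vertical swaps, each numbered row by row. `ToyMove`, the direction names and the layout are all assumptions, not necessarily how `Move.cs` orders moves.

```python
from typing import NamedTuple


class ToyMove(NamedTuple):
    row: int
    col: int
    direction: str  # "right": swap with (row, col + 1); "down": swap with (row + 1, col)


def num_potential_moves(rows: int, cols: int) -> int:
    # Horizontally adjacent pairs plus vertically adjacent pairs.
    return rows * (cols - 1) + (rows - 1) * cols


def from_move_index(move_index: int, rows: int, cols: int) -> ToyMove:
    if not 0 <= move_index < num_potential_moves(rows, cols):
        raise ValueError(f"move index {move_index} is out of range")
    num_horizontal = rows * (cols - 1)
    if move_index < num_horizontal:
        # First block: horizontal swaps, numbered row by row.
        row, col = divmod(move_index, cols - 1)
        return ToyMove(row, col, "right")
    # Second block: vertical swaps, numbered row by row.
    row, col = divmod(move_index - num_horizontal, cols)
    return ToyMove(row, col, "down")


def to_move_index(move: ToyMove, rows: int, cols: int) -> int:
    """Inverse of from_move_index."""
    if move.direction == "right":
        return move.row * (cols - 1) + move.col
    return rows * (cols - 1) + move.row * cols + move.col


# Iterate over every potential move of a 9 x 8 board and check the round trip.
for index in range(num_potential_moves(9, 8)):
    assert to_move_index(from_move_index(index, 9, 8), 9, 8) == index
print(num_potential_moves(9, 8))  # 127
```

A flat index like this maps naturally onto a single discrete action branch, which is why a "from index" constructor is convenient.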

## `Match3Sensor` and `Match3SensorComponent` classes
The `Match3Sensor` generates observations about the state using the `AbstractBoard` interface. You can
choose whether to use vector or "visual" observations; in theory, visual observations should perform
better because they are 2-dimensional like the board, but we need to experiment more on this.

A `Match3SensorComponent` generates a `Match3Sensor` at runtime, and should be added to the same GameObject
as your `Agent` implementation. You do not need to write any additional code to use them.
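The difference between the two observation styles can be illustrated in Python. The sketch assumes each cell's color is one-hot encoded. The "vector" form flattens the board into a single list, while the "visual" form keeps a rows x cols x channels grid. The function names are hypothetical. The real `Match3Sensor` layout may differ, for example by also encoding special types.

```python
from typing import List


def vector_observation(cells: List[List[int]], num_cell_types: int) -> List[float]:
    """Flatten the board row by row into one one-hot block of length num_cell_types per cell."""
    obs: List[float] = []
    for row in cells:
        for cell_type in row:
            block = [0.0] * num_cell_types
            block[cell_type] = 1.0
            obs.extend(block)
    return obs


def visual_observation(cells: List[List[int]], num_cell_types: int) -> List[List[List[float]]]:
    """Keep the same one-hot data as a rows x cols x channels grid, like a 2-D image."""
    return [
        [[1.0 if cell_type == t else 0.0 for t in range(num_cell_types)] for cell_type in row]
        for row in cells
    ]


cells = [[0, 2], [1, 0]]
print(len(vector_observation(cells, 3)))  # 12 = 2 rows * 2 cols * 3 types
print(visual_observation(cells, 3)[1][0])  # [0.0, 1.0, 0.0]
```

Both forms carry the same information. The visual form keeps neighboring cells next to each other, which is the 2-D structure the text suggests may help.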

## `Match3Actuator` and `Match3ActuatorComponent` classes
The `Match3Actuator` converts actions from training or inference into a `Move` that is sent to `AbstractBoard.MakeMove()`.
It also checks `AbstractBoard.IsMoveValid()` for each potential move and uses this to set the action mask for the Agent.

A `Match3ActuatorComponent` generates a `Match3Actuator` at runtime, and should be added to the same GameObject
as your `Agent` implementation. You do not need to write any additional code to use them.
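The masking step can be sketched in Python, assuming one discrete action per potential move index. The sketch asks a validity callback about every index and marks the invalid ones as masked, so a policy sampling only unmasked actions never picks them. The function names and the "True means masked" convention are assumptions for illustration.

```python
import random
from typing import Callable, List


def num_potential_moves(rows: int, cols: int) -> int:
    # Horizontally adjacent pairs plus vertically adjacent pairs.
    return rows * (cols - 1) + (rows - 1) * cols


def build_action_mask(rows: int, cols: int, is_move_valid: Callable[[int], bool]) -> List[bool]:
    """One flag per potential move index; True means "masked out, never choose this"."""
    return [not is_move_valid(i) for i in range(num_potential_moves(rows, cols))]


def sample_unmasked(mask: List[bool], rng: random.Random) -> int:
    """Pick a random move index among those that are not masked."""
    allowed = [i for i, masked in enumerate(mask) if not masked]
    if not allowed:
        raise ValueError("every move is masked")
    return rng.choice(allowed)


# A 2 x 2 board has 4 potential moves; pretend only moves 0 and 3 are valid.
mask = build_action_mask(2, 2, lambda index: index in (0, 3))
print(mask)  # [False, True, True, False]
print(sample_unmasked(mask, random.Random(0)) in (0, 3))  # True
```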

## Setting up a match-3 simulation
* Implement the `AbstractBoard` methods to integrate with your game.
* Give the `Agent` rewards when it does what you want it to (match multiple pieces in a row, clear pieces of a certain
  type, etc.).
* Add the `Agent`, `AbstractBoard` implementation, `Match3SensorComponent`, and `Match3ActuatorComponent` to the same
  `GameObject`.
* Call `Agent.RequestDecision()` when you're ready for the `Agent` to make a move on the next `Academy` step. During
  the next `Academy` step, the `MakeMove()` method on the board will be called.

com.unity.ml-agents.extensions/Runtime/Match3.meta

fileFormatVersion: 2
guid: 569f8fa2b7dd477c9b71f09e9d633832
timeCreated: 1600465975

com.unity.ml-agents.extensions/Tests/Editor/Match3.meta

fileFormatVersion: 2
guid: 77b0212dde404f7c8ce9aac13bd550b8
timeCreated: 1601332716

config/ppo/Match3.yaml

behaviors:
  Match3VectorObs:
    trainer_type: ppo
    hyperparameters:
      batch_size: 64
      buffer_size: 12000
      learning_rate: 0.0003
      beta: 0.001
      epsilon: 0.2
      lambd: 0.99
      num_epoch: 3
      learning_rate_schedule: constant
    network_settings:
      normalize: true
      hidden_units: 128
      num_layers: 2
      vis_encode_type: match3
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 5000000
    time_horizon: 1000
    summary_freq: 10000
    threaded: true
  Match3VisualObs:
    trainer_type: ppo
    hyperparameters:
      batch_size: 64
      buffer_size: 12000
      learning_rate: 0.0003
      beta: 0.001
      epsilon: 0.2
      lambd: 0.99
      num_epoch: 3
      learning_rate_schedule: constant
    network_settings:
      normalize: true
      hidden_units: 128
      num_layers: 2
      vis_encode_type: match3
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 5000000
    time_horizon: 1000
    summary_freq: 10000
    threaded: true
  Match3SimpleHeuristic:
    # Settings can be very simple since we don't care about actually training the model
    trainer_type: ppo
    hyperparameters:
      batch_size: 64
      buffer_size: 128
    network_settings:
      hidden_units: 4
      num_layers: 1
    max_steps: 5000000
    summary_freq: 10000
    threaded: true
  Match3GreedyHeuristic:
    # Settings can be very simple since we don't care about actually training the model
    trainer_type: ppo
    hyperparameters:
      batch_size: 64
      buffer_size: 128
    network_settings:
      hidden_units: 4
      num_layers: 1
    max_steps: 5000000
    summary_freq: 10000
    threaded: true

Project/Assets/ML-Agents/Examples/Match3/Prefabs.meta

fileFormatVersion: 2
guid: 8519802844d8d4233b4c6f6758ab8322
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3Heuristic.prefab

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!1 &3508723250470608007
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 3508723250470608008}
  - component: {fileID: 3508723250470608010}
  - component: {fileID: 3508723250470608012}
  - component: {fileID: 3508723250470608011}
  - component: {fileID: 3508723250470608009}
  - component: {fileID: 3508723250470608013}
  - component: {fileID: 3508723250470608014}
  m_Layer: 0
  m_Name: Match3 Agent
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &3508723250470608008
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 3508723250774301920}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!114 &3508723250470608010
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 5d1c4e0b1822b495aa52bc52839ecb30, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  m_BrainParameters:
    VectorObservationSize: 0
    NumStackedVectorObservations: 1
    VectorActionSize:
    VectorActionDescriptions: []
    VectorActionSpaceType: 0
  m_Model: {fileID: 11400000, guid: c34da50737a3c4a50918002b20b2b927, type: 3}
  m_InferenceDevice: 0
  m_BehaviorType: 0
  m_BehaviorName: Match3SmartHeuristic
  TeamId: 0
  m_UseChildSensors: 1
  m_UseChildActuators: 1
  m_ObservableAttributeHandling: 0
--- !u!114 &3508723250470608012
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: d982f0cd92214bd2b689be838fa40c44, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  agentParameters:
    maxStep: 0
  hasUpgradedFromAgentParameters: 1
  MaxStep: 0
  Board: {fileID: 0}
  MoveTime: 0.25
  MaxMoves: 500
  UseSmartHeuristic: 1
--- !u!114 &3508723250470608011
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: abebb7ad4a5547d7a3b04373784ff195, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  DebugEdgeIndex: -1
--- !u!114 &3508723250470608009
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 6d852a063770348b68caa91b8e7642a5, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  Rows: 9
  Columns: 8
  NumCellTypes: 6
  NumSpecialTypes: 2
  RandomSeed: -1
  BasicCellPoints: 1
  SpecialCell1Points: 2
  SpecialCell2Points: 3
--- !u!114 &3508723250470608013
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 08e4b0da54cb4d56bfcbae22dd49ab8d, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ForceHeuristic: 1
--- !u!114 &3508723250470608014
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250470608007}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 530d2f105aa145bd8a00e021bdd925fd, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ObservationType: 0
--- !u!1 &3508723250774301855
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 3508723250774301920}
  m_Layer: 0
  m_Name: Match3Heuristic
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &3508723250774301920
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3508723250774301855}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 3508723250470608008}
  m_Father: {fileID: 0}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3Heuristic.prefab.meta

fileFormatVersion: 2
guid: 2fafdcd0587684641b03b11f04454f1b
PrefabImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VectorObs.prefab

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!1 &2118285883905619929
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 2118285883905619878}
  m_Layer: 0
  m_Name: Match3VectorObs
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &2118285883905619878
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285883905619929}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 2118285884327540686}
  m_Father: {fileID: 0}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!1 &2118285884327540673
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 2118285884327540686}
  - component: {fileID: 2118285884327540684}
  - component: {fileID: 2118285884327540682}
  - component: {fileID: 2118285884327540685}
  - component: {fileID: 2118285884327540687}
  - component: {fileID: 2118285884327540683}
  - component: {fileID: 2118285884327540680}
  m_Layer: 0
  m_Name: Match3 Agent
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &2118285884327540686
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 2118285883905619878}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!114 &2118285884327540684
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 5d1c4e0b1822b495aa52bc52839ecb30, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  m_BrainParameters:
    VectorObservationSize: 0
    NumStackedVectorObservations: 1
    VectorActionSize:
    VectorActionDescriptions: []
    VectorActionSpaceType: 0
  m_Model: {fileID: 11400000, guid: 9e89b8e81974148d3b7213530d00589d, type: 3}
  m_InferenceDevice: 0
  m_BehaviorType: 0
  m_BehaviorName: Match3VectorObs
  TeamId: 0
  m_UseChildSensors: 1
  m_UseChildActuators: 1
  m_ObservableAttributeHandling: 0
--- !u!114 &2118285884327540682
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: d982f0cd92214bd2b689be838fa40c44, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  agentParameters:
    maxStep: 0
  hasUpgradedFromAgentParameters: 1
  MaxStep: 0
  Board: {fileID: 0}
  MoveTime: 0.25
  MaxMoves: 500
--- !u!114 &2118285884327540685
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: abebb7ad4a5547d7a3b04373784ff195, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  DebugEdgeIndex: -1
--- !u!114 &2118285884327540687
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 6d852a063770348b68caa91b8e7642a5, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  Rows: 9
  Columns: 8
  NumCellTypes: 6
  NumSpecialTypes: 2
  RandomSeed: -1
--- !u!114 &2118285884327540683
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 08e4b0da54cb4d56bfcbae22dd49ab8d, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ForceRandom: 0
--- !u!114 &2118285884327540680
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 2118285884327540673}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 530d2f105aa145bd8a00e021bdd925fd, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ObservationType: 0

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VectorObs.prefab.meta

fileFormatVersion: 2
guid: 6944ca02359f5427aa13c8551236a824
PrefabImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VisualObs.prefab

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!1 &3019509691567202678
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 3019509691567202569}
  m_Layer: 0
  m_Name: Match3VisualObs
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &3019509691567202569
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509691567202678}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children:
  - {fileID: 3019509692332007777}
  m_Father: {fileID: 0}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!1 &3019509692332007790
GameObject:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  serializedVersion: 6
  m_Component:
  - component: {fileID: 3019509692332007777}
  - component: {fileID: 3019509692332007779}
  - component: {fileID: 3019509692332007781}
  - component: {fileID: 3019509692332007778}
  - component: {fileID: 3019509692332007776}
  - component: {fileID: 3019509692332007780}
  - component: {fileID: 3019509692332007783}
  m_Layer: 0
  m_Name: Match3 Agent
  m_TagString: Untagged
  m_Icon: {fileID: 0}
  m_NavMeshLayer: 0
  m_StaticEditorFlags: 0
  m_IsActive: 1
--- !u!4 &3019509692332007777
Transform:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_LocalRotation: {x: 0, y: 0, z: 0, w: 1}
  m_LocalPosition: {x: 0, y: 0, z: 0}
  m_LocalScale: {x: 1, y: 1, z: 1}
  m_Children: []
  m_Father: {fileID: 3019509691567202569}
  m_RootOrder: 0
  m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0}
--- !u!114 &3019509692332007779
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 5d1c4e0b1822b495aa52bc52839ecb30, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  m_BrainParameters:
    VectorObservationSize: 0
    NumStackedVectorObservations: 1
    VectorActionSize:
    VectorActionDescriptions: []
    VectorActionSpaceType: 0
  m_Model: {fileID: 11400000, guid: 48d14da88fea74d0693c691c6e3f2e34, type: 3}
  m_InferenceDevice: 0
  m_BehaviorType: 0
  m_BehaviorName: Match3VisualObs
  TeamId: 0
  m_UseChildSensors: 1
  m_UseChildActuators: 1
  m_ObservableAttributeHandling: 0
--- !u!114 &3019509692332007781
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: d982f0cd92214bd2b689be838fa40c44, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  agentParameters:
    maxStep: 0
  hasUpgradedFromAgentParameters: 1
  MaxStep: 0
  Board: {fileID: 0}
  MoveTime: 0.25
  MaxMoves: 500
--- !u!114 &3019509692332007778
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: abebb7ad4a5547d7a3b04373784ff195, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  DebugEdgeIndex: -1
--- !u!114 &3019509692332007776
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 6d852a063770348b68caa91b8e7642a5, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  Rows: 9
  Columns: 8
  NumCellTypes: 6
  NumSpecialTypes: 2
  RandomSeed: -1
--- !u!114 &3019509692332007780
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 08e4b0da54cb4d56bfcbae22dd49ab8d, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ForceRandom: 0
--- !u!114 &3019509692332007783
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 3019509692332007790}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 530d2f105aa145bd8a00e021bdd925fd, type: 3}
  m_Name:
  m_EditorClassIdentifier:
  ObservationType: 2

Project/Assets/ML-Agents/Examples/Match3/Prefabs/Match3VisualObs.prefab.meta

fileFormatVersion: 2
guid: aaa471bd5e2014848a66917476671aed
PrefabImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: e033fb0df67684ebf961ed115870ff10
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

Project/Assets/ML-Agents/Examples/Match3/Scenes/Match3.unity
The file diff is too large to display.

fileFormatVersion: 2
guid: 2e09c5458f1494f9dad9cd6d09dff964
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: be7a27f4291944d3dba4f696e1af4209
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

using System;
using UnityEngine;
using Unity.MLAgents;
using Unity.MLAgents.Actuators;
using Unity.MLAgents.Extensions.Match3;

namespace Unity.MLAgentsExamples
{

    /// <summary>
    /// State of the "game" when showing all steps of the simulation. This is only used outside of training.
    /// The state diagram is
    ///
    ///  | <------------------------------------------ ^
    ///  |                                             |
    ///  v                                             |
    /// +--------+      +-------+      +-----+      +------+
    /// |Find    | ---> |Clear  | ---> |Drop | ---> |Fill  |
    /// |Matches |      |Matched|      |     |      |Empty |
    /// +--------+      +-------+      +-----+      +------+
    ///
    ///  |     ^
    ///  |     |
    ///  v     |
    ///
    /// +--------+
    /// |Wait for|
    /// |Move    |
    /// +--------+
    ///
    /// The state advances every "MoveTime" seconds.
    /// </summary>
    enum State
    {
        /// <summary>
        /// Guard value, should never happen.
        /// </summary>
        Invalid = -1,

        /// <summary>
        /// Look for matches. If there are matches, the next state is ClearMatched, otherwise WaitForMove.
        /// </summary>
        FindMatches = 0,

        /// <summary>
        /// Remove matched cells and replace them with a placeholder value.
        /// </summary>
        ClearMatched = 1,

        /// <summary>
        /// Move cells "down" to fill empty space.
        /// </summary>
        Drop = 2,

        /// <summary>
        /// Replace empty cells with new random values.
        /// </summary>
        FillEmpty = 3,

        /// <summary>
        /// Request a move from the Agent.
        /// </summary>
        WaitForMove = 4,
    }

    public enum HeuristicQuality
    {
        /// <summary>
        /// The heuristic will pick any valid move at random.
        /// </summary>
        RandomValidMove,

        /// <summary>
        /// The heuristic will pick the move that scores the most points.
        /// This only looks at the immediate move, and doesn't consider where cells will fall.
        /// </summary>
        Greedy
    }

    public class Match3Agent : Agent
    {
        [HideInInspector]
        public Match3Board Board;

        public float MoveTime = 1.0f;
        public int MaxMoves = 500;

        public HeuristicQuality HeuristicQuality = HeuristicQuality.RandomValidMove;

        State m_CurrentState = State.WaitForMove;
        float m_TimeUntilMove;
        private int m_MovesMade;

        private System.Random m_Random;
        private const float k_RewardMultiplier = 0.01f;

        void Awake()
        {
            Board = GetComponent<Match3Board>();
            var seed = Board.RandomSeed == -1 ? gameObject.GetInstanceID() : Board.RandomSeed + 1;
            m_Random = new System.Random(seed);
        }

        public override void OnEpisodeBegin()
        {
            base.OnEpisodeBegin();

            Board.InitSettled();
            m_CurrentState = State.FindMatches;
            m_TimeUntilMove = MoveTime;
            m_MovesMade = 0;
        }

        private void FixedUpdate()
        {
            if (Academy.Instance.IsCommunicatorOn)
            {
                FastUpdate();
            }
            else
            {
                AnimatedUpdate();
            }

            // We can't use the normal MaxSteps system to decide when to end an episode,
            // since different agents will make moves at different frequencies (depending on the number of
            // chained moves). So track a number of moves per Agent and manually interrupt the episode.
            if (m_MovesMade >= MaxMoves)
            {
                EpisodeInterrupted();
            }
        }

        void FastUpdate()
        {
            while (true)
            {
                var hasMatched = Board.MarkMatchedCells();
                if (!hasMatched)
                {
                    break;
                }
                var pointsEarned = Board.ClearMatchedCells();
                AddReward(k_RewardMultiplier * pointsEarned);
                Board.DropCells();
                Board.FillFromAbove();
            }

            while (!HasValidMoves())
            {
                // Shuffle the board until we have a valid move.
                Board.InitSettled();
            }
            RequestDecision();
            m_MovesMade++;
        }

        void AnimatedUpdate()
        {
            m_TimeUntilMove -= Time.deltaTime;
            if (m_TimeUntilMove > 0.0f)
            {
                return;
            }

            m_TimeUntilMove = MoveTime;

            var nextState = State.Invalid;
            switch (m_CurrentState)
            {
                case State.FindMatches:
                    var hasMatched = Board.MarkMatchedCells();
                    nextState = hasMatched ? State.ClearMatched : State.WaitForMove;
                    if (nextState == State.WaitForMove)
                    {
                        m_MovesMade++;
                    }
                    break;
                case State.ClearMatched:
                    var pointsEarned = Board.ClearMatchedCells();
                    AddReward(k_RewardMultiplier * pointsEarned);
                    nextState = State.Drop;
                    break;
                case State.Drop:
                    Board.DropCells();
                    nextState = State.FillEmpty;
                    break;
                case State.FillEmpty:
                    Board.FillFromAbove();
                    nextState = State.FindMatches;
                    break;
                case State.WaitForMove:
                    while (true)
                    {
                        // Shuffle the board until we have a valid move.
                        bool hasMoves = HasValidMoves();
                        if (hasMoves)
                        {
                            break;
                        }
                        Board.InitSettled();
                    }
                    RequestDecision();

                    nextState = State.FindMatches;
                    break;
                default:
                    throw new ArgumentOutOfRangeException();
            }

            m_CurrentState = nextState;
        }

        bool HasValidMoves()
        {
            foreach (var move in Board.ValidMoves())
            {
                return true;
            }

            return false;
        }

        public override void Heuristic(in ActionBuffers actionsOut)
        {
            var discreteActions = actionsOut.DiscreteActions;
            discreteActions[0] = GreedyMove();
        }

        int GreedyMove()
        {
            var pointsByType = new[] { Board.BasicCellPoints, Board.SpecialCell1Points, Board.SpecialCell2Points };

            var bestMoveIndex = 0;
            var bestMovePoints = -1;
            var numMovesAtCurrentScore = 0;

            foreach (var move in Board.ValidMoves())
            {
                var movePoints = HeuristicQuality == HeuristicQuality.Greedy ? EvalMovePoints(move, pointsByType) : 1;
                if (movePoints < bestMovePoints)
                {
                    // Worse, skip
                    continue;
                }

                if (movePoints > bestMovePoints)
                {
                    // Better, keep
                    bestMovePoints = movePoints;
                    bestMoveIndex = move.MoveIndex;
                    numMovesAtCurrentScore = 1;
                }
                else
                {
                    // Tied for best - use reservoir sampling to make sure we select from equal moves uniformly.
                    // See https://en.wikipedia.org/wiki/Reservoir_sampling#Simple_algorithm
                    numMovesAtCurrentScore++;
                    var randVal = m_Random.Next(0, numMovesAtCurrentScore);
                    if (randVal == 0)
                    {
                        // Keep the new one
                        bestMoveIndex = move.MoveIndex;
                    }
                }
            }

            return bestMoveIndex;
        }

        int EvalMovePoints(Move move, int[] pointsByType)
        {
            // Counts the expected points for making the move.
            var moveVal = Board.GetCellType(move.Row, move.Column);
            var moveSpecial = Board.GetSpecialType(move.Row, move.Column);
            var (otherRow, otherCol) = move.OtherCell();
            var oppositeVal = Board.GetCellType(otherRow, otherCol);
            var oppositeSpecial = Board.GetSpecialType(otherRow, otherCol);

            int movePoints = EvalHalfMove(
                otherRow, otherCol, moveVal, moveSpecial, move.Direction, pointsByType
            );
            int otherPoints = EvalHalfMove(
                move.Row, move.Column, oppositeVal, oppositeSpecial, move.OtherDirection(), pointsByType
            );
            return movePoints + otherPoints;
        }

        int EvalHalfMove(int newRow, int newCol, int newValue, int newSpecial, Direction incomingDirection, int[] pointsByType)
        {
            // This is essentially a duplicate of AbstractBoard.CheckHalfMove but also counts the points for the move.
            int matchedLeft = 0, matchedRight = 0, matchedUp = 0, matchedDown = 0;
            int scoreLeft = 0, scoreRight = 0, scoreUp = 0, scoreDown = 0;

            if (incomingDirection != Direction.Right)
            {
                for (var c = newCol - 1; c >= 0; c--)
                {
                    if (Board.GetCellType(newRow, c) == newValue)
                    {
                        matchedLeft++;
                        scoreLeft += pointsByType[Board.GetSpecialType(newRow, c)];
                    }
                    else
                        break;
                }
            }

            if (incomingDirection != Direction.Left)
            {
                for (var c = newCol + 1; c < Board.Columns; c++)
                {
                    if (Board.GetCellType(newRow, c) == newValue)
                    {
                        matchedRight++;
                        scoreRight += pointsByType[Board.GetSpecialType(newRow, c)];
                    }
                    else
                        break;
                }
            }

            if (incomingDirection != Direction.Down)
            {
                for (var r = newRow + 1; r < Board.Rows; r++)
                {
                    if (Board.GetCellType(r, newCol) == newValue)
                    {
                        matchedUp++;
                        scoreUp += pointsByType[Board.GetSpecialType(r, newCol)];
                    }
                    else
                        break;
                }
            }

            if (incomingDirection != Direction.Up)
            {
                for (var r = newRow - 1; r >= 0; r--)
                {
                    if (Board.GetCellType(r, newCol) == newValue)
                    {
                        matchedDown++;
                        scoreDown += pointsByType[Board.GetSpecialType(r, newCol)];
                    }
                    else
                        break;
                }
            }

            if ((matchedUp + matchedDown >= 2) || (matchedLeft + matchedRight >= 2))
            {
                // It's a match. Start from counting the piece being moved
                var totalScore = pointsByType[newSpecial];
                if (matchedUp + matchedDown >= 2)
                {
                    totalScore += scoreUp + scoreDown;
                }

                if (matchedLeft + matchedRight >= 2)
                {
                    totalScore += scoreLeft + scoreRight;
                }
                return totalScore;
            }

            return 0;
        }
    }
}
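Each call to `AnimatedUpdate` above advances `m_CurrentState` by one step of the diagram in the `State` summary. The sketch below isolates those transitions in a pure function and steps through one cascade. It is a minimal plain-C# sketch, not the agent itself: the integer constants stand in for the `State` enum values, `hasMatched` stands in for `Board.MarkMatchedCells()`, and the sequence of match results is made up.

```csharp
using System;
using System.Collections.Generic;

// Local constants mirroring the values of Match3Agent's State enum.
const int FindMatches = 0, ClearMatched = 1, Drop = 2, FillEmpty = 3, WaitForMove = 4;
string[] stateNames = { "FindMatches", "ClearMatched", "Drop", "FillEmpty", "WaitForMove" };

// Transition rules of AnimatedUpdate's switch with the board calls removed.
int NextState(int state, bool hasMatched)
{
    switch (state)
    {
        case FindMatches: return hasMatched ? ClearMatched : WaitForMove;
        case ClearMatched: return Drop;
        case Drop: return FillEmpty;
        case FillEmpty: return FindMatches;
        case WaitForMove: return FindMatches; // after RequestDecision()
        default: throw new ArgumentOutOfRangeException(nameof(state));
    }
}

// Hypothetical run: one cascade of matches, then the board settles.
bool[] matchResults = { true, false };
int nextResult = 0;
int current = FindMatches;
var trace = new List<string> { stateNames[current] };
for (int step = 0; step < 6; step++)
{
    // Only FindMatches consults the board; other states ignore the flag.
    bool matched = current == FindMatches && matchResults[nextResult++];
    current = NextState(current, matched);
    trace.Add(stateNames[current]);
}
Console.WriteLine(string.Join(" -> ", trace));
// FindMatches -> ClearMatched -> Drop -> FillEmpty -> FindMatches -> WaitForMove -> FindMatches
```

In `FastUpdate`, the same cascade runs to completion inside a single `FixedUpdate` call. This is why the agent counts its own moves in `m_MovesMade` instead of relying on `MaxSteps`.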

fileFormatVersion: 2
guid: d982f0cd92214bd2b689be838fa40c44
timeCreated: 1598221207
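`GreedyMove` in `Match3Agent` above breaks ties between equally scored moves with single-item reservoir sampling. The k-th tied move replaces the current pick with probability 1/k and then survives each later tie j with probability (j-1)/j. As a result, each of n tied moves ends up selected with probability 1/n, without storing the candidates.

The sketch below reproduces that loop over a plain array of hypothetical scores, which stand in for `EvalMovePoints` results, and checks the distribution empirically. It starts from `int.MinValue` rather than `GreedyMove`'s `-1`, an assumption that only matters if scores could be negative.

```csharp
using System;
using System.Collections.Generic;

// Index of a highest-scoring entry, chosen uniformly among ties in one pass
// (same control flow as GreedyMove; returns 0 for an empty list, as GreedyMove does).
int PickBest(IReadOnlyList<int> scores, Random rng)
{
    int bestIndex = 0, bestScore = int.MinValue, numTied = 0;
    for (int i = 0; i < scores.Count; i++)
    {
        if (scores[i] < bestScore)
        {
            continue; // worse: skip
        }
        if (scores[i] > bestScore)
        {
            // Strictly better: restart the reservoir with this entry.
            bestScore = scores[i];
            bestIndex = i;
            numTied = 1;
        }
        else
        {
            // Tied: the k-th tie replaces the current pick with probability 1/k.
            numTied++;
            if (rng.Next(0, numTied) == 0)
            {
                bestIndex = i;
            }
        }
    }
    return bestIndex;
}

int[] moveScores = { 3, 5, 1, 5, 5 }; // hypothetical move scores
var random = new Random(1234);
var timesPicked = new int[moveScores.Length];
for (int trial = 0; trial < 30000; trial++)
{
    timesPicked[PickBest(moveScores, random)]++;
}
Console.WriteLine(string.Join(", ", timesPicked));
```

Indices 0 and 2 are never chosen. Indices 1, 3 and 4 each land close to 10,000 of the 30,000 trials.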

using Unity.MLAgents.Extensions.Match3;
using UnityEngine;

namespace Unity.MLAgentsExamples
{

    public class Match3Board : AbstractBoard
    {
        public int RandomSeed = -1;

        public const int k_EmptyCell = -1;
        [Tooltip("Points earned for clearing a basic cell (cube)")]
        public int BasicCellPoints = 1;

        [Tooltip("Points earned for clearing a special cell (sphere)")]
        public int SpecialCell1Points = 2;

        [Tooltip("Points earned for clearing an extra special cell (plus)")]
        public int SpecialCell2Points = 3;

        (int, int)[,] m_Cells;
        bool[,] m_Matched;

        System.Random m_Random;

        void Awake()
        {
            m_Cells = new (int, int)[Columns, Rows];
            m_Matched = new bool[Columns, Rows];

            m_Random = new System.Random(RandomSeed == -1 ? gameObject.GetInstanceID() : RandomSeed);

            InitRandom();
        }

        public override bool MakeMove(Move move)
        {
            if (!IsMoveValid(move))
            {
                return false;
            }
            var originalValue = m_Cells[move.Column, move.Row];
            var (otherRow, otherCol) = move.OtherCell();
            var destinationValue = m_Cells[otherCol, otherRow];

            m_Cells[move.Column, move.Row] = destinationValue;
            m_Cells[otherCol, otherRow] = originalValue;
            return true;
        }

        public override int GetCellType(int row, int col)
        {
            return m_Cells[col, row].Item1;
        }

        public override int GetSpecialType(int row, int col)
        {
            return m_Cells[col, row].Item2;
        }

        public override bool IsMoveValid(Move m)
        {
            if (m_Cells == null)
            {
                return false;
            }

            return SimpleIsMoveValid(m);
        }

        public bool MarkMatchedCells(int[,] cells = null)
        {
            // Note: the optional `cells` argument is not used; matching always reads m_Cells.
            ClearMarked();
            bool madeMatch = false;
            for (var i = 0; i < Rows; i++)
            {
                for (var j = 0; j < Columns; j++)
                {
                    // Check vertically
                    var matchedRows = 0;
                    for (var iOffset = i; iOffset < Rows; iOffset++)
                    {
                        if (m_Cells[j, i].Item1 != m_Cells[j, iOffset].Item1)
                        {
                            break;
                        }

                        matchedRows++;
                    }

                    if (matchedRows >= 3)
                    {
                        madeMatch = true;
                        for (var k = 0; k < matchedRows; k++)
                        {
                            m_Matched[j, i + k] = true;
                        }
                    }

                    // Check horizontally
                    var matchedCols = 0;
                    for (var jOffset = j; jOffset < Columns; jOffset++)
                    {
                        if (m_Cells[j, i].Item1 != m_Cells[jOffset, i].Item1)
                        {
                            break;
                        }

                        matchedCols++;
                    }

                    if (matchedCols >= 3)
                    {
                        madeMatch = true;
                        for (var k = 0; k < matchedCols; k++)
                        {
                            m_Matched[j + k, i] = true;
                        }
                    }
                }
            }

            return madeMatch;
        }

        /// <summary>
        /// Sets cells that are matched to the empty cell, and returns the score earned.
        /// </summary>
        /// <returns>The total points for all cleared cells, weighted by their special type.</returns>
        public int ClearMatchedCells()
        {
            var pointsByType = new[] { BasicCellPoints, SpecialCell1Points, SpecialCell2Points };
            int pointsEarned = 0;
            for (var i = 0; i < Rows; i++)
            {
                for (var j = 0; j < Columns; j++)
                {
                    if (m_Matched[j, i])
                    {
                        var specialType = GetSpecialType(i, j);
                        pointsEarned += pointsByType[specialType];
                        m_Cells[j, i] = (k_EmptyCell, 0);
                    }
                }
            }

            ClearMarked(); // TODO clear here or at start of matching?
            return pointsEarned;
        }

        public bool DropCells()
        {
            var madeChanges = false;
            // Gravity is applied in the negative row direction
            for (var j = 0; j < Columns; j++)
            {
                var writeIndex = 0;
                for (var readIndex = 0; readIndex < Rows; readIndex++)
                {
                    m_Cells[j, writeIndex] = m_Cells[j, readIndex];
                    if (m_Cells[j, readIndex].Item1 != k_EmptyCell)
                    {
                        writeIndex++;
                    }
                }

                // Fill in empties at the end
                for (; writeIndex < Rows; writeIndex++)
                {
                    madeChanges = true;
                    m_Cells[j, writeIndex] = (k_EmptyCell, 0);
                }
            }

            return madeChanges;
        }

        public bool FillFromAbove()
        {
            bool madeChanges = false;
            for (var i = 0; i < Rows; i++)
            {
                for (var j = 0; j < Columns; j++)
                {
                    if (m_Cells[j, i].Item1 == k_EmptyCell)
                    {
                        madeChanges = true;
                        m_Cells[j, i] = (GetRandomCellType(), GetRandomSpecialType());
                    }
                }
            }

            return madeChanges;
        }

        public (int, int)[,] Cells
        {
            get { return m_Cells; }
        }

        public bool[,] Matched
        {
            get { return m_Matched; }
        }

        // Initialize the board to random values.
        public void InitRandom()
        {
            for (var i = 0; i < Rows; i++)
            {
                for (var j = 0; j < Columns; j++)
                {
                    m_Cells[j, i] = (GetRandomCellType(), GetRandomSpecialType());
                }
            }
        }

        public void InitSettled()
        {
            InitRandom();
            while (true)
            {
                var anyMatched = MarkMatchedCells();
                if (!anyMatched)
                {
                    return;
                }
                ClearMatchedCells();
                DropCells();
                FillFromAbove();
            }
        }

        void ClearMarked()
        {
            for (var i = 0; i < Rows; i++)
            {
                for (var j = 0; j < Columns; j++)
                {
                    m_Matched[j, i] = false;
                }
            }
        }

        int GetRandomCellType()
        {
            return m_Random.Next(0, NumCellTypes);
        }

        int GetRandomSpecialType()
        {
            // 1 in N chance to get a type-2 special
            // 2 in N chance to get a type-1 special
            // otherwise 0 (boring)
            var N = 10;
            var val = m_Random.Next(0, N);
            if (val == 0)
            {
                return 2;
            }

            if (val <= 2)
            {
                return 1;
            }

            return 0;
        }
    }
}
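`MarkMatchedCells` in `Match3Board` above works from every cell. It counts how far the same cell type continues in the increasing-row and increasing-column directions, and marks the run when it is at least three long. The following is a plain-C# sketch of that scan on a small hand-made grid.

This grid is indexed `[row, col]`, whereas the board stores cells as `[col, row]` and compares only `Item1`, the cell type. The grid values are hypothetical.

```csharp
using System;

// Marks cells in horizontal or vertical runs of >= 3 equal values, scanning
// forward from every cell like MarkMatchedCells. Indexed [row, col].
(bool[,] matched, bool madeMatch) MarkMatches(int[,] grid)
{
    int rows = grid.GetLength(0), cols = grid.GetLength(1);
    var matched = new bool[rows, cols];
    bool madeMatch = false;
    for (int i = 0; i < rows; i++)
    {
        for (int j = 0; j < cols; j++)
        {
            // Vertical run starting at (i, j).
            int runRows = 0;
            while (i + runRows < rows && grid[i + runRows, j] == grid[i, j]) runRows++;
            if (runRows >= 3)
            {
                madeMatch = true;
                for (int k = 0; k < runRows; k++) matched[i + k, j] = true;
            }

            // Horizontal run starting at (i, j).
            int runCols = 0;
            while (j + runCols < cols && grid[i, j + runCols] == grid[i, j]) runCols++;
            if (runCols >= 3)
            {
                madeMatch = true;
                for (int k = 0; k < runCols; k++) matched[i, j + k] = true;
            }
        }
    }
    return (matched, madeMatch);
}

// Hypothetical 3x4 grid: row 0 has three 0s, column 3 has three 1s.
int[,] cellTypes =
{
    { 0, 0, 0, 1 },
    { 2, 1, 3, 1 },
    { 3, 2, 0, 1 },
};
var (marks, anyMatch) = MarkMatches(cellTypes);
int markedCount = 0;
foreach (bool isMarked in marks)
{
    if (isMarked) markedCount++;
}
Console.WriteLine($"madeMatch={anyMatch}, marked cells={markedCount}"); // madeMatch=True, marked cells=6
```

Overlapping runs, such as an L or T shape, are simply marked twice. This is harmless because the marks are booleans.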

fileFormatVersion: 2
guid: 6d852a063770348b68caa91b8e7642a5
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:
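`DropCells` in `Match3Board` above compacts each column with a read index and a write index. Every non-empty cell is copied down to the next free slot, preserving order, and the slots left over at the top are set to `k_EmptyCell`. The following is a minimal sketch of the same loop for a single column, written as a plain `int[]` (a hypothetical simplification of the board's `(type, special)` tuples).

```csharp
using System;

const int EmptyCell = -1; // mirrors Match3Board.k_EmptyCell

// One column of DropCells: shift non-empty cells toward index 0 ("gravity is
// applied in the negative row direction"), keeping their order, then fill the
// remaining slots with EmptyCell. Returns true if the column contained any empties.
bool DropColumn(int[] column)
{
    int writeIndex = 0;
    for (int readIndex = 0; readIndex < column.Length; readIndex++)
    {
        // writeIndex <= readIndex, so this copy never overwrites an unread cell.
        column[writeIndex] = column[readIndex];
        if (column[readIndex] != EmptyCell)
        {
            writeIndex++;
        }
    }

    bool madeChanges = writeIndex < column.Length;
    for (; writeIndex < column.Length; writeIndex++)
    {
        column[writeIndex] = EmptyCell;
    }
    return madeChanges;
}

int[] columnCells = { 4, EmptyCell, 2, EmptyCell, 5 }; // hypothetical column, row 0 first
bool changed = DropColumn(columnCells);
Console.WriteLine($"{string.Join(", ", columnCells)} (changed: {changed})"); // 4, 2, 5, -1, -1 (changed: True)
```

`FillFromAbove` then replaces exactly those trailing `EmptyCell` slots with freshly drawn random cells.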

using UnityEngine;
using Unity.MLAgents.Extensions.Match3;

namespace Unity.MLAgentsExamples
{
    public class Match3Drawer : MonoBehaviour
    {
        public int DebugMoveIndex = -1;

        static Color[] s_Colors = new[]
        {
            Color.red,
            Color.green,
            Color.blue,
            Color.cyan,
            Color.magenta,
            Color.yellow,
            Color.gray,
            Color.black,
        };

        private static Color s_EmptyColor = new Color(0.5f, 0.5f, 0.5f, .25f);

        void OnDrawGizmos()
        {
            // TODO replace Gizmos for drawing the game state with proper GameObjects and animations.
            var cubeSize = .5f;
            var cubeSpacing = .75f;
            var matchedWireframeSize = .5f * (cubeSize + cubeSpacing);

            var board = GetComponent<Match3Board>();
            if (board == null)
            {
                return;
            }

            for (var i = 0; i < board.Rows; i++)
            {
                for (var j = 0; j < board.Columns; j++)
                {
                    var value = board.Cells != null ? board.GetCellType(i, j) : Match3Board.k_EmptyCell;
                    if (value >= 0 && value < s_Colors.Length)
                    {
                        Gizmos.color = s_Colors[value];
                    }
                    else
                    {
                        Gizmos.color = s_EmptyColor;
                    }

                    var pos = new Vector3(j, i, 0);
                    pos *= cubeSpacing;

                    var specialType = board.Cells != null ? board.GetSpecialType(i, j) : 0;
                    if (specialType == 2)
                    {
                        Gizmos.DrawCube(transform.TransformPoint(pos), cubeSize * new Vector3(1f, .5f, .5f));
                        Gizmos.DrawCube(transform.TransformPoint(pos), cubeSize * new Vector3(.5f, 1f, .5f));
                        Gizmos.DrawCube(transform.TransformPoint(pos), cubeSize * new Vector3(.5f, .5f, 1f));
                    }
                    else if (specialType == 1)
                    {
                        Gizmos.DrawSphere(transform.TransformPoint(pos), .5f * cubeSize);
                    }
                    else
                    {
                        Gizmos.DrawCube(transform.TransformPoint(pos), cubeSize * Vector3.one);
                    }

                    Gizmos.color = Color.yellow;
                    if (board.Matched != null && board.Matched[j, i])
                    {
                        Gizmos.DrawWireCube(transform.TransformPoint(pos), matchedWireframeSize * Vector3.one);
                    }
                }
            }

            // Draw valid moves
            foreach (var move in board.AllMoves())
            {
                if (DebugMoveIndex >= 0 && move.MoveIndex != DebugMoveIndex)
                {
                    continue;
                }

                if (!board.IsMoveValid(move))
                {
                    continue;
                }

                var (otherRow, otherCol) = move.OtherCell();
                var pos = new Vector3(move.Column, move.Row, 0) * cubeSpacing;
                var otherPos = new Vector3(otherCol, otherRow, 0) * cubeSpacing;

                var oneQuarter = Vector3.Lerp(pos, otherPos, .25f);
                var threeQuarters = Vector3.Lerp(pos, otherPos, .75f);
                Gizmos.DrawLine(transform.TransformPoint(oneQuarter), transform.TransformPoint(threeQuarters));
            }
        }
    }
}
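`Match3Drawer` above renders special type 2 as a plus (three crossed boxes), type 1 as a sphere, and type 0 as a plain cube. How often each shape appears is decided by `GetRandomSpecialType` in `Match3Board`, which maps a uniform draw from `m_Random.Next(0, 10)` onto the three types.

Enumerating all ten equally likely draws gives the exact odds. Combined with the default point values of the `Tooltip` fields (1, 2 and 3), it also gives the expected points of a freshly filled cell. A small sketch:

```csharp
using System;
using System.Globalization;

// Same thresholds as GetRandomSpecialType with N = 10.
int SpecialTypeFor(int val) => val == 0 ? 2 : val <= 2 ? 1 : 0;

const int N = 10;
int[] pointsByType = { 1, 2, 3 }; // defaults of BasicCellPoints, SpecialCell1Points, SpecialCell2Points
var countsByType = new int[3];
for (int draw = 0; draw < N; draw++)
{
    countsByType[SpecialTypeFor(draw)]++;
}

// Each draw is equally likely, so the expectation is a count-weighted average.
double expectedPoints = 0;
for (int t = 0; t < countsByType.Length; t++)
{
    expectedPoints += (double)countsByType[t] / N * pointsByType[t];
}
Console.WriteLine($"type0={countsByType[0]}, type1={countsByType[1]}, type2={countsByType[2]}, " +
                  $"expected points={expectedPoints.ToString("F2", CultureInfo.InvariantCulture)}");
// type0=7, type1=2, type2=1, expected points=1.40
```

Changing any of the three point fields in the Inspector changes the expectation, but not the 7:2:1 odds.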

fileFormatVersion: 2
guid: abebb7ad4a5547d7a3b04373784ff195
timeCreated: 1598221188

fileFormatVersion: 2
guid: 504c8f923fdf448e795936f2900a5fd4
folderAsset: yes
DefaultImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VectorObs.onnx
The file diff is too large to display.

fileFormatVersion: 2
guid: 9e89b8e81974148d3b7213530d00589d
ScriptedImporter:
  fileIDToRecycleName:
    11400000: main obj
    11400002: model data
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
  script: {fileID: 11500000, guid: 683b6cb6d0a474744822c888b46772c9, type: 3}
  optimizeModel: 1
  forceArbitraryBatchSize: 1
  treatErrorsAsWarnings: 0

Project/Assets/ML-Agents/Examples/Match3/TFModels/Match3VisualObs.nn
The file diff is too large to display.

fileFormatVersion: 2
guid: 48d14da88fea74d0693c691c6e3f2e34
ScriptedImporter:
  fileIDToRecycleName:
    11400000: main obj
    11400002: model data
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:
  script: {fileID: 11500000, guid: 19ed1486aa27d4903b34839f37b8f69f, type: 3}
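`Match3Agent.HasValidMoves` returns inside the first iteration of its `foreach`, so it behaves like calling LINQ's `Enumerable.Any()` on `Board.ValidMoves()`. `ValidMoves()` in `AbstractBoard` below is an iterator method built with `yield return`, so `IsMoveValid` is only evaluated until the first valid move is found.

The sketch below demonstrates that short-circuit with a hypothetical stand-in iterator that counts validity checks. The number of moves and the set of valid moves are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

int validityChecks = 0;

// Stand-in for AbstractBoard.ValidMoves(): lazily yields the indices of valid moves.
IEnumerable<int> ValidMoves(int numMoves, Func<int, bool> isMoveValid)
{
    for (int i = 0; i < numMoves; i++)
    {
        validityChecks++;
        if (isMoveValid(i))
        {
            yield return i;
        }
    }
}

// Hypothetical board with 100 potential moves, of which only 3 and 50 are valid.
Func<int, bool> isValid = move => move == 3 || move == 50;

bool hasValidMoves = ValidMoves(100, isValid).Any(); // stops at the first valid move
int checksForAny = validityChecks;
int numValid = ValidMoves(100, isValid).Count();     // walks all 100 moves
Console.WriteLine($"Any: {hasValidMoves} after {checksForAny} checks; Count: {numValid} after {validityChecks - checksForAny} more");
// Any: True after 4 checks; Count: 2 after 100 more
```

Replacing the `foreach` in `HasValidMoves` with `Board.ValidMoves().Any()` would therefore not change how much work it does.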

using System;
using System.Collections.Generic;
using UnityEngine;

namespace Unity.MLAgents.Extensions.Match3
{
    public abstract class AbstractBoard : MonoBehaviour
    {
        /// <summary>
        /// Number of rows on the board
        /// </summary>
        public int Rows;

        /// <summary>
        /// Number of columns on the board
        /// </summary>
        public int Columns;

        /// <summary>
        /// Maximum number of different types of cells (colors, pieces, etc.).
        /// </summary>
        public int NumCellTypes;

        /// <summary>
        /// Maximum number of special types. This can be zero, in which case
        /// all cells of the same type are assumed to be equivalent.
        /// </summary>
        public int NumSpecialTypes;

        /// <summary>
        /// Returns the "color" of the piece at the given row and column.
        /// This should be between 0 and NumCellTypes-1 (inclusive).
        /// The actual order of the values doesn't matter.
        /// </summary>
        /// <param name="row">Row index of the cell.</param>
        /// <param name="col">Column index of the cell.</param>
        /// <returns>The cell type at (row, col).</returns>
        public abstract int GetCellType(int row, int col);

        /// <summary>
        /// Returns the special type of the piece at the given row and column.
        /// This should be between 0 and NumSpecialTypes (inclusive).
        /// The actual order of the values doesn't matter.
        /// </summary>
        /// <param name="row">Row index of the cell.</param>
        /// <param name="col">Column index of the cell.</param>
        /// <returns>The special type at (row, col).</returns>
        public abstract int GetSpecialType(int row, int col);

        /// <summary>
        /// Check whether the particular Move is valid for the game.
        /// The actual results will depend on the rules of the game, but we provide SimpleIsMoveValid()
        /// that handles basic match3 rules with no special or immovable pieces.
        /// </summary>
        /// <param name="m">The move to check.</param>
        /// <returns>True if the move is valid.</returns>
        public abstract bool IsMoveValid(Move m);

        /// <summary>
        /// Instruct the game to make the given move. Returns true if the move was made.
        /// Note that during training, a move that was marked as invalid may occasionally still be
        /// requested. If this happens, it is safe to do nothing and request another move.
        /// </summary>
        /// <param name="m">The move to make.</param>
        /// <returns>True if the move was made.</returns>
        public abstract bool MakeMove(Move m);

        /// <summary>
        /// Return the total number of moves possible for the board.
        /// </summary>
        /// <returns>The number of potential moves for a board with these dimensions.</returns>
        public int NumMoves()
        {
            return Move.NumPotentialMoves(Rows, Columns);
        }

        /// <summary>
        /// An optional callback for when all moves are invalid. Ideally, the game state should
        /// be changed before this happens, but this is a way to get notified if not.
        /// </summary>
        public Action OnNoValidMovesAction;

        /// <summary>
        /// Iterate through all Moves on the board.
        /// </summary>
        /// <returns>A lazily evaluated sequence of every potential Move.</returns>
        public IEnumerable<Move> AllMoves()
        {
            var currentMove = Move.FromMoveIndex(0, Rows, Columns);
            for (var i = 0; i < NumMoves(); i++)
            {
                yield return currentMove;
                currentMove.Next(Rows, Columns);
            }
        }

        /// <summary>
        /// Iterate through all valid Moves on the board.
        /// </summary>
        /// <returns>A lazily evaluated sequence of the Moves for which IsMoveValid() returns true.</returns>
        public IEnumerable<Move> ValidMoves()
        {
            var currentMove = Move.FromMoveIndex(0, Rows, Columns);
            for (var i = 0; i < NumMoves(); i++)
            {
                if (IsMoveValid(currentMove))
                {
                    yield return currentMove;
                }