
Grid Sensor (#4399)


Co-authored-by: Chris Elion <chris.elion@unity3d.com>

/MLA-1734-demo-provider

Current commit

a117c932

50 files changed, with 7711 insertions and 24 deletions

8 com.unity.ml-agents.extensions/CHANGELOG.md
1 com.unity.ml-agents.extensions/Tests/Editor/Unity.ML-Agents.Extensions.EditorTests.asmdef
60 ml-agents-envs/mlagents_envs/rpc_utils.py
48 ml-agents-envs/mlagents_envs/tests/test_rpc_utils.py
1001 Project/Assets/ML-Agents/Examples/FoodCollector/Prefabs/GridFoodCollectorArea.prefab
7 Project/Assets/ML-Agents/Examples/FoodCollector/Prefabs/GridFoodCollectorArea.prefab.meta
949 Project/Assets/ML-Agents/Examples/FoodCollector/Scenes/GridFoodCollector.unity
7 Project/Assets/ML-Agents/Examples/FoodCollector/Scenes/GridFoodCollector.unity.meta
1001 Project/Assets/ML-Agents/Examples/FoodCollector/TFModels/GridFoodCollector.nn
11 Project/Assets/ML-Agents/Examples/FoodCollector/TFModels/GridFoodCollector.nn.meta
223 com.unity.ml-agents.extensions/Documentation~/Grid-Sensor.md
115 com.unity.ml-agents.extensions/Runtime/Sensors/CountingGridSensor.cs
11 com.unity.ml-agents.extensions/Runtime/Sensors/CountingGridSensor.cs.meta
876 com.unity.ml-agents.extensions/Runtime/Sensors/GridSensor.cs
11 com.unity.ml-agents.extensions/Runtime/Sensors/GridSensor.cs.meta
396 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelHotPerceiveTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelHotPerceiveTests.cs.meta
93 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelHotShapeTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelHotShapeTests.cs.meta
395 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelPerceiveTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelPerceiveTests.cs.meta
70 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelShapeTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/ChannelShapeTests.cs.meta
147 com.unity.ml-agents.extensions/Tests/Editor/Sensors/CountingGridSensorPerceiveTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/CountingGridSensorPerceiveTests.cs.meta
41 com.unity.ml-agents.extensions/Tests/Editor/Sensors/CountingGridSensorShapeTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/CountingGridSensorShapeTests.cs.meta
113 com.unity.ml-agents.extensions/Tests/Editor/Sensors/GridObservationPerceiveTests.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/GridObservationPerceiveTests.cs.meta
161 com.unity.ml-agents.extensions/Tests/Editor/Sensors/GridSensorTestUtils.cs
11 com.unity.ml-agents.extensions/Tests/Editor/Sensors/GridSensorTestUtils.cs.meta
8 com.unity.ml-agents.extensions/Tests/Utils.meta
26 config/ppo/GridFoodCollector.yaml
28 config/sac/GridFoodCollector.yaml
1001 com.unity.ml-agents.extensions/Documentation~/images/gridobs-vs-vectorobs.gif
20 com.unity.ml-agents.extensions/Documentation~/images/gridsensor-example-camera.png
94 com.unity.ml-agents.extensions/Documentation~/images/gridsensor-example-gridsensor.png
67 com.unity.ml-agents.extensions/Documentation~/images/gridsensor-example-raycast.png
79 com.unity.ml-agents.extensions/Documentation~/images/gridsensor-example.png
504 com.unity.ml-agents.extensions/Documentation~/images/persp_ortho_proj.png
8 com.unity.ml-agents.extensions/Tests/Utils/GridObsTestComponents.meta
9 com.unity.ml-agents.extensions/Tests/Utils/GridObsTestComponents/GridSensorDummyData.cs
11 com.unity.ml-agents.extensions/Tests/Utils/GridObsTestComponents/GridSensorDummyData.cs.meta
14 com.unity.ml-agents.extensions/Tests/Utils/GridObsTestComponents/SimpleTestGridSensor.cs
11 com.unity.ml-agents.extensions/Tests/Utils/GridObsTestComponents/SimpleTestGridSensor.cs.meta
15 com.unity.ml-agents.extensions/Tests/Utils/Unity.ML-Agents.Extensions.TestUtils.asmdef
7 com.unity.ml-agents.extensions/Tests/Utils/Unity.ML-Agents.Extensions.TestUtils.asmdef.meta

1001

Project/Assets/ML-Agents/Examples/FoodCollector/Prefabs/GridFoodCollectorArea.prefab

File diff is too large to display


fileFormatVersion: 2
guid: b5339e4b990ade14f992aadf3bf8591b
PrefabImporter:
  externalObjects: {}
  userData:
  assetBundleName:
  assetBundleVariant:

|||

%YAML 1.1 |

|||

%TAG !u! tag:unity3d.com,2011: |

|||

--- !u!29 &1 |

|||

OcclusionCullingSettings: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_OcclusionBakeSettings: |

|||

smallestOccluder: 5 |

|||

smallestHole: 0.25 |

|||

backfaceThreshold: 100 |

|||

m_SceneGUID: 00000000000000000000000000000000 |

|||

m_OcclusionCullingData: {fileID: 0} |

|||

--- !u!104 &2 |

|||

RenderSettings: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 9 |

|||

m_Fog: 0 |

|||

m_FogColor: {r: 0.5, g: 0.5, b: 0.5, a: 1} |

|||

m_FogMode: 3 |

|||

m_FogDensity: 0.01 |

|||

m_LinearFogStart: 0 |

|||

m_LinearFogEnd: 300 |

|||

m_AmbientSkyColor: {r: 0.8, g: 0.8, b: 0.8, a: 1} |

|||

m_AmbientEquatorColor: {r: 0.6965513, g: 0, b: 1, a: 1} |

|||

m_AmbientGroundColor: {r: 1, g: 0.45977026, b: 0, a: 1} |

|||

m_AmbientIntensity: 1 |

|||

m_AmbientMode: 3 |

|||

m_SubtractiveShadowColor: {r: 0.42, g: 0.478, b: 0.627, a: 1} |

|||

m_SkyboxMaterial: {fileID: 10304, guid: 0000000000000000f000000000000000, type: 0} |

|||

m_HaloStrength: 0.5 |

|||

m_FlareStrength: 1 |

|||

m_FlareFadeSpeed: 3 |

|||

m_HaloTexture: {fileID: 0} |

|||

m_SpotCookie: {fileID: 10001, guid: 0000000000000000e000000000000000, type: 0} |

|||

m_DefaultReflectionMode: 0 |

|||

m_DefaultReflectionResolution: 128 |

|||

m_ReflectionBounces: 1 |

|||

m_ReflectionIntensity: 1 |

|||

m_CustomReflection: {fileID: 0} |

|||

m_Sun: {fileID: 0} |

|||

m_IndirectSpecularColor: {r: 0.44971228, g: 0.49977815, b: 0.57563734, a: 1} |

|||

m_UseRadianceAmbientProbe: 0 |

|||

--- !u!157 &3 |

|||

LightmapSettings: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 11 |

|||

m_GIWorkflowMode: 0 |

|||

m_GISettings: |

|||

serializedVersion: 2 |

|||

m_BounceScale: 1 |

|||

m_IndirectOutputScale: 1 |

|||

m_AlbedoBoost: 1 |

|||

m_EnvironmentLightingMode: 0 |

|||

m_EnableBakedLightmaps: 1 |

|||

m_EnableRealtimeLightmaps: 1 |

|||

m_LightmapEditorSettings: |

|||

serializedVersion: 10 |

|||

m_Resolution: 2 |

|||

m_BakeResolution: 40 |

|||

m_AtlasSize: 1024 |

|||

m_AO: 0 |

|||

m_AOMaxDistance: 1 |

|||

m_CompAOExponent: 1 |

|||

m_CompAOExponentDirect: 0 |

|||

m_Padding: 2 |

|||

m_LightmapParameters: {fileID: 15204, guid: 0000000000000000f000000000000000, |

|||

type: 0} |

|||

m_LightmapsBakeMode: 1 |

|||

m_TextureCompression: 1 |

|||

m_FinalGather: 0 |

|||

m_FinalGatherFiltering: 1 |

|||

m_FinalGatherRayCount: 256 |

|||

m_ReflectionCompression: 2 |

|||

m_MixedBakeMode: 2 |

|||

m_BakeBackend: 0 |

|||

m_PVRSampling: 1 |

|||

m_PVRDirectSampleCount: 32 |

|||

m_PVRSampleCount: 500 |

|||

m_PVRBounces: 2 |

|||

m_PVRFilterTypeDirect: 0 |

|||

m_PVRFilterTypeIndirect: 0 |

|||

m_PVRFilterTypeAO: 0 |

|||

m_PVRFilteringMode: 2 |

|||

m_PVRCulling: 1 |

|||

m_PVRFilteringGaussRadiusDirect: 1 |

|||

m_PVRFilteringGaussRadiusIndirect: 5 |

|||

m_PVRFilteringGaussRadiusAO: 2 |

|||

m_PVRFilteringAtrousPositionSigmaDirect: 0.5 |

|||

m_PVRFilteringAtrousPositionSigmaIndirect: 2 |

|||

m_PVRFilteringAtrousPositionSigmaAO: 1 |

|||

m_ShowResolutionOverlay: 1 |

|||

m_LightingDataAsset: {fileID: 112000002, guid: 03723c7f910c3423aa1974f1b9ce8392, |

|||

type: 2} |

|||

m_UseShadowmask: 1 |

|||

--- !u!196 &4 |

|||

NavMeshSettings: |

|||

serializedVersion: 2 |

|||

m_ObjectHideFlags: 0 |

|||

m_BuildSettings: |

|||

serializedVersion: 2 |

|||

agentTypeID: 0 |

|||

agentRadius: 0.5 |

|||

agentHeight: 2 |

|||

agentSlope: 45 |

|||

agentClimb: 0.4 |

|||

ledgeDropHeight: 0 |

|||

maxJumpAcrossDistance: 0 |

|||

minRegionArea: 2 |

|||

manualCellSize: 0 |

|||

cellSize: 0.16666667 |

|||

manualTileSize: 0 |

|||

tileSize: 256 |

|||

accuratePlacement: 0 |

|||

debug: |

|||

m_Flags: 0 |

|||

m_NavMeshData: {fileID: 0} |

|||

--- !u!1001 &190823800 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_Name |

|||

value: GridFoodCollectorArea |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 6 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

--- !u!1 &273651478 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 273651479} |

|||

- component: {fileID: 273651481} |

|||

- component: {fileID: 273651480} |

|||

m_Layer: 5 |

|||

m_Name: Text |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!224 &273651479 |

|||

RectTransform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 273651478} |

|||

m_LocalRotation: {x: -0, y: -0, z: -0, w: 1} |

|||

m_LocalPosition: {x: 0, y: 0, z: 0} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: [] |

|||

m_Father: {fileID: 1799584681} |

|||

m_RootOrder: 0 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

m_AnchorMin: {x: 0, y: 0} |

|||

m_AnchorMax: {x: 1, y: 1} |

|||

m_AnchoredPosition: {x: 0, y: 0} |

|||

m_SizeDelta: {x: 0, y: 0} |

|||

m_Pivot: {x: 0.5, y: 0.5} |

|||

--- !u!114 &273651480 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 273651478} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: 5f7201a12d95ffc409449d95f23cf332, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!222 &273651481 |

|||

CanvasRenderer: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 273651478} |

|||

m_CullTransparentMesh: 0 |

|||

--- !u!1 &378228137 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 378228141} |

|||

- component: {fileID: 378228140} |

|||

- component: {fileID: 378228139} |

|||

- component: {fileID: 378228138} |

|||

m_Layer: 5 |

|||

m_Name: Canvas |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!114 &378228138 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 378228137} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: dc42784cf147c0c48a680349fa168899, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!114 &378228139 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 378228137} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: 0cd44c1031e13a943bb63640046fad76, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!223 &378228140 |

|||

Canvas: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 378228137} |

|||

m_Enabled: 1 |

|||

serializedVersion: 3 |

|||

m_RenderMode: 0 |

|||

m_Camera: {fileID: 0} |

|||

m_PlaneDistance: 100 |

|||

m_PixelPerfect: 0 |

|||

m_ReceivesEvents: 1 |

|||

m_OverrideSorting: 0 |

|||

m_OverridePixelPerfect: 0 |

|||

m_SortingBucketNormalizedSize: 0 |

|||

m_AdditionalShaderChannelsFlag: 0 |

|||

m_SortingLayerID: 0 |

|||

m_SortingOrder: 0 |

|||

m_TargetDisplay: 0 |

|||

--- !u!224 &378228141 |

|||

RectTransform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 378228137} |

|||

m_LocalRotation: {x: 0, y: 0, z: 0, w: 1} |

|||

m_LocalPosition: {x: 0, y: 0, z: 0} |

|||

m_LocalScale: {x: 0, y: 0, z: 0} |

|||

m_Children: |

|||

- {fileID: 1799584681} |

|||

- {fileID: 1086444498} |

|||

m_Father: {fileID: 0} |

|||

m_RootOrder: 2 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

m_AnchorMin: {x: 0, y: 0} |

|||

m_AnchorMax: {x: 0, y: 0} |

|||

m_AnchoredPosition: {x: 0, y: 0} |

|||

m_SizeDelta: {x: 0, y: 0} |

|||

m_Pivot: {x: 0, y: 0} |

|||

--- !u!1001 &392794583 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_Name |

|||

value: GridFoodCollectorArea (1) |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_IsActive |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: -50 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 7 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

--- !u!1 &499540684 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 499540687} |

|||

- component: {fileID: 499540686} |

|||

- component: {fileID: 499540685} |

|||

m_Layer: 0 |

|||

m_Name: EventSystem |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!114 &499540685 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 499540684} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: 4f231c4fb786f3946a6b90b886c48677, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!114 &499540686 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 499540684} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: 76c392e42b5098c458856cdf6ecaaaa1, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!4 &499540687 |

|||

Transform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 499540684} |

|||

m_LocalRotation: {x: 0, y: 0, z: 0, w: 1} |

|||

m_LocalPosition: {x: 0, y: 0, z: 0} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: [] |

|||

m_Father: {fileID: 0} |

|||

m_RootOrder: 4 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

--- !u!1001 &916917435 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: 0.31598538 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: -0.3596048 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: 0.13088542 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 0.8681629 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4943719350691982, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: 5889392e3f05b448a8a06c5def6c2dec, type: 3} |

|||

--- !u!1 &1009000883 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 1009000884} |

|||

- component: {fileID: 1009000887} |

|||

m_Layer: 0 |

|||

m_Name: OverviewCamera |

|||

m_TagString: MainCamera |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!4 &1009000884 |

|||

Transform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1009000883} |

|||

m_LocalRotation: {x: 0.2588191, y: 0, z: 0, w: 0.9659258} |

|||

m_LocalPosition: {x: 0, y: 75, z: -140} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: [] |

|||

m_Father: {fileID: 0} |

|||

m_RootOrder: 0 |

|||

m_LocalEulerAnglesHint: {x: 30, y: 0, z: 0} |

|||

--- !u!20 &1009000887 |

|||

Camera: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1009000883} |

|||

m_Enabled: 1 |

|||

serializedVersion: 2 |

|||

m_ClearFlags: 2 |

|||

m_BackGroundColor: {r: 0.46666667, g: 0.5647059, b: 0.60784316, a: 1} |

|||

m_projectionMatrixMode: 1 |

|||

m_SensorSize: {x: 36, y: 24} |

|||

m_LensShift: {x: 0, y: 0} |

|||

m_GateFitMode: 2 |

|||

m_FocalLength: 50 |

|||

m_NormalizedViewPortRect: |

|||

serializedVersion: 2 |

|||

x: 0 |

|||

y: 0 |

|||

width: 1 |

|||

height: 1 |

|||

near clip plane: 0.3 |

|||

far clip plane: 1000 |

|||

field of view: 30 |

|||

orthographic: 0 |

|||

orthographic size: 35.13 |

|||

m_Depth: 2 |

|||

m_CullingMask: |

|||

serializedVersion: 2 |

|||

m_Bits: 4294967295 |

|||

m_RenderingPath: -1 |

|||

m_TargetTexture: {fileID: 0} |

|||

m_TargetDisplay: 0 |

|||

m_TargetEye: 3 |

|||

m_HDR: 1 |

|||

m_AllowMSAA: 1 |

|||

m_AllowDynamicResolution: 0 |

|||

m_ForceIntoRT: 1 |

|||

m_OcclusionCulling: 1 |

|||

m_StereoConvergence: 10 |

|||

m_StereoSeparation: 0.022 |

|||

--- !u!1001 &1043871087 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_Name |

|||

value: GridFoodCollectorArea (2) |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_IsActive |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: -100 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 8 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

--- !u!1 &1086444495 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 1086444498} |

|||

- component: {fileID: 1086444497} |

|||

- component: {fileID: 1086444496} |

|||

m_Layer: 5 |

|||

m_Name: Text |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!114 &1086444496 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1086444495} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 708705254, guid: f70555f144d8491a825f0804e09c671c, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

m_Material: {fileID: 0} |

|||

m_Color: {r: 1, g: 1, b: 1, a: 1} |

|||

m_RaycastTarget: 1 |

|||

m_OnCullStateChanged: |

|||

m_PersistentCalls: |

|||

m_Calls: [] |

|||

m_FontData: |

|||

m_Font: {fileID: 10102, guid: 0000000000000000e000000000000000, type: 0} |

|||

m_FontSize: 14 |

|||

m_FontStyle: 0 |

|||

m_BestFit: 0 |

|||

m_MinSize: 10 |

|||

m_MaxSize: 40 |

|||

m_Alignment: 0 |

|||

m_AlignByGeometry: 0 |

|||

m_RichText: 1 |

|||

m_HorizontalOverflow: 0 |

|||

m_VerticalOverflow: 0 |

|||

m_LineSpacing: 1 |

|||

m_Text: |

|||

--- !u!222 &1086444497 |

|||

CanvasRenderer: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1086444495} |

|||

m_CullTransparentMesh: 0 |

|||

--- !u!224 &1086444498 |

|||

RectTransform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1086444495} |

|||

m_LocalRotation: {x: 0, y: 0, z: 0, w: 1} |

|||

m_LocalPosition: {x: 0, y: 0, z: 0} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: [] |

|||

m_Father: {fileID: 378228141} |

|||

m_RootOrder: 1 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

m_AnchorMin: {x: 0.5, y: 0.5} |

|||

m_AnchorMax: {x: 0.5, y: 0.5} |

|||

m_AnchoredPosition: {x: -1000, y: -239.57645} |

|||

m_SizeDelta: {x: 160, y: 30} |

|||

m_Pivot: {x: 0.5, y: 0.5} |

|||

--- !u!1 &1574236047 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 1574236049} |

|||

- component: {fileID: 1574236048} |

|||

m_Layer: 0 |

|||

m_Name: FoodCollectorSettings |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 1 |

|||

--- !u!114 &1574236048 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1574236047} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: be4599983abb14917a1c76329db0b6b0, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

agents: [] |

|||

listArea: [] |

|||

totalScore: 0 |

|||

scoreText: {fileID: 1086444496} |

|||

--- !u!4 &1574236049 |

|||

Transform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1574236047} |

|||

m_LocalRotation: {x: 0, y: 0, z: 0, w: 1} |

|||

m_LocalPosition: {x: 0.71938086, y: 0.27357092, z: 4.1970553} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: [] |

|||

m_Father: {fileID: 0} |

|||

m_RootOrder: 3 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

--- !u!1 &1799584680 |

|||

GameObject: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

serializedVersion: 6 |

|||

m_Component: |

|||

- component: {fileID: 1799584681} |

|||

- component: {fileID: 1799584683} |

|||

- component: {fileID: 1799584682} |

|||

m_Layer: 5 |

|||

m_Name: Panel |

|||

m_TagString: Untagged |

|||

m_Icon: {fileID: 0} |

|||

m_NavMeshLayer: 0 |

|||

m_StaticEditorFlags: 0 |

|||

m_IsActive: 0 |

|||

--- !u!224 &1799584681 |

|||

RectTransform: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1799584680} |

|||

m_LocalRotation: {x: 0, y: 0, z: 0, w: 1} |

|||

m_LocalPosition: {x: 0, y: 0, z: 0} |

|||

m_LocalScale: {x: 1, y: 1, z: 1} |

|||

m_Children: |

|||

- {fileID: 273651479} |

|||

m_Father: {fileID: 378228141} |

|||

m_RootOrder: 0 |

|||

m_LocalEulerAnglesHint: {x: 0, y: 0, z: 0} |

|||

m_AnchorMin: {x: 0, y: 0} |

|||

m_AnchorMax: {x: 1, y: 1} |

|||

m_AnchoredPosition: {x: 0, y: 0} |

|||

m_SizeDelta: {x: 0, y: 0} |

|||

m_Pivot: {x: 0.5, y: 0.5} |

|||

--- !u!114 &1799584682 |

|||

MonoBehaviour: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1799584680} |

|||

m_Enabled: 1 |

|||

m_EditorHideFlags: 0 |

|||

m_Script: {fileID: 11500000, guid: fe87c0e1cc204ed48ad3b37840f39efc, type: 3} |

|||

m_Name: |

|||

m_EditorClassIdentifier: |

|||

--- !u!222 &1799584683 |

|||

CanvasRenderer: |

|||

m_ObjectHideFlags: 0 |

|||

m_CorrespondingSourceObject: {fileID: 0} |

|||

m_PrefabInstance: {fileID: 0} |

|||

m_PrefabAsset: {fileID: 0} |

|||

m_GameObject: {fileID: 1799584680} |

|||

m_CullTransparentMesh: 0 |

|||

--- !u!1001 &1985725465 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_Name |

|||

value: GridFoodCollectorArea (3) |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 1819751139121548, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_IsActive |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: -150 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: -0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 9 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 4688212428263696, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

propertyPath: m_LocalEulerAnglesHint.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: b5339e4b990ade14f992aadf3bf8591b, type: 3} |

|||

--- !u!1001 &2124876351 |

|||

PrefabInstance: |

|||

m_ObjectHideFlags: 0 |

|||

serializedVersion: 2 |

|||

m_Modification: |

|||

m_TransformParent: {fileID: 0} |

|||

m_Modifications: |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalPosition.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalPosition.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalRotation.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalRotation.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalRotation.z |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_LocalRotation.w |

|||

value: 1 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_RootOrder |

|||

value: 5 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchoredPosition.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchoredPosition.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_SizeDelta.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_SizeDelta.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchorMin.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchorMin.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchorMax.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_AnchorMax.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_Pivot.x |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

- target: {fileID: 224194346362733190, guid: 3ce107b4a79bc4eef83afde434932a68, |

|||

type: 3} |

|||

propertyPath: m_Pivot.y |

|||

value: 0 |

|||

objectReference: {fileID: 0} |

|||

m_RemovedComponents: [] |

|||

m_SourcePrefab: {fileID: 100100000, guid: 3ce107b4a79bc4eef83afde434932a68, type: 3} |

|||

|

|||

fileFormatVersion: 2 |

|||

guid: 07d4146bc5bfaba4ab42d159c49f4d7b |

|||

DefaultImporter: |

|||

externalObjects: {} |

|||

userData: |

|||

assetBundleName: |

|||

assetBundleVariant: |

|||

1001

Project/Assets/ML-Agents/Examples/FoodCollector/TFModels/GridFoodCollector.nn

The file diff is too large to display.

|

|||

fileFormatVersion: 2 |

|||

guid: 699f852e79b5ba642871514fb1fb9843 |

|||

ScriptedImporter: |

|||

fileIDToRecycleName: |

|||

11400000: main obj |

|||

11400002: model data |

|||

externalObjects: {} |

|||

userData: |

|||

assetBundleName: |

|||

assetBundleVariant: |

|||

script: {fileID: 11500000, guid: 19ed1486aa27d4903b34839f37b8f69f, type: 3} |

|||

|

|||

# Summary |

|||

|

|||

The Grid Sensor combines the generality of data extraction from Raycasts with the image processing power of Convolutional Neural Networks. The Grid Sensor can be used to collect data in the general form of a "Width x Height x Channel" matrix which can be used for training Reinforcement Learning agents or for data analysis. |

|||

|

|||

|

|||

# Motivation |

|||

|

|||

In MLAgents there are 2 main sensors for observing information that is "physically" around the agent. |

|||

|

|||

**Raycasts** |

|||

|

|||

Raycasts provide the agent the ability to see things along prespecified lines of sight, similar to LIDAR. The kind of data a raycast extracts is up to the developer and can include things like: |

|||

* The type of an object (enemy, npc, etc) |

|||

* The health of a unit |

|||

* The damage-per-second of a weapon on the ground |

|||

|

|||

This is simple to implement and provides enough information for most simple games. When few are used, they are computationally fast. However, there are multiple limiting factors: |

|||

* The rays need to be at the same height as the things the agent should observe |

|||

* Objects can remain hidden by line of sight, and if knowledge of those objects is crucial to the success of the agent, then this limitation must be compensated for by the capacity of the agent's network (i.e., it needs a bigger brain with memory) |

|||

* The order of the raycasts (one raycast being to the left/right of another) is thrown away at the model level and must be learned by the agent, which extends training time. Using multiple raycasts exacerbates this issue. |

|||

* Typically the length of the raycasts is limited because the agent need not know about objects that are at the other side of the level. Combined with few raycasts for computational efficiency, this means that an agent may not observe objects that fall between these rays and the issue becomes worse as the objects reduce in size. |

|||

|

|||

**Camera** |

|||

|

|||

The Camera provides the agent with either a grayscale or an RGB image of the game environment. It goes without saying that there are non-linear relationships between nearby pixels in an image. It is this intuition that helps form the basis of Convolutional Neural Networks (CNNs) and established the literature of designing networks that take advantage of these relationships between pixels. Following this established literature of CNNs on image-based data, the MLAgents Camera Sensor provides a means by which the agent can include high dimensional inputs (images) into its observation stream. |

|||

However, the Camera Sensor has its own drawbacks as well. |

|||

* It requires rendering the scene and thus is computationally slower than alternatives that do not use rendering |

|||

* It has not yet been shown that the Camera Sensor can be used on a headless machine, which means it is not yet possible (if at all) to train an agent on headless infrastructure. |

|||

* If the textures of the important objects in the game are updated, the agent needs to be retrained. |

|||

* The RGB of the camera only provides a maximum of 3 channels to the agent. |

|||

|

|||

These limitations provided the motivation towards the development of the Grid Sensor and Grid Observations as described below. |

|||

|

|||

# Contribution |

|||

|

|||

An image can be thought of as a matrix of a predefined width (W) and a height (H) and each pixel can be thought of as simply an array of length 3 (in the case of RGB), `[Red, Green, Blue]` holding the different channel information of the color (channel) intensities at that pixel location. Thus an image is just a 3 dimensional matrix of size WxHx3. A Grid Observation can be thought of as a generalization of this setup where in place of a pixel there is a "cell" which is an array of length N representing different channel intensities at that cell position. From a Convolutional Neural Network point of view, the introduction of multiple channels in an "image" isn't a new concept. One such example is using an RGB-Depth image which is used in several robotics applications. The distinction of Grid Observations is what the data within the channels represents. Instead of limiting the channels to color intensities, the channels within a cell of a Grid Observation generalize to any data that can be represented by a single number (float or int). |

|||

|

|||

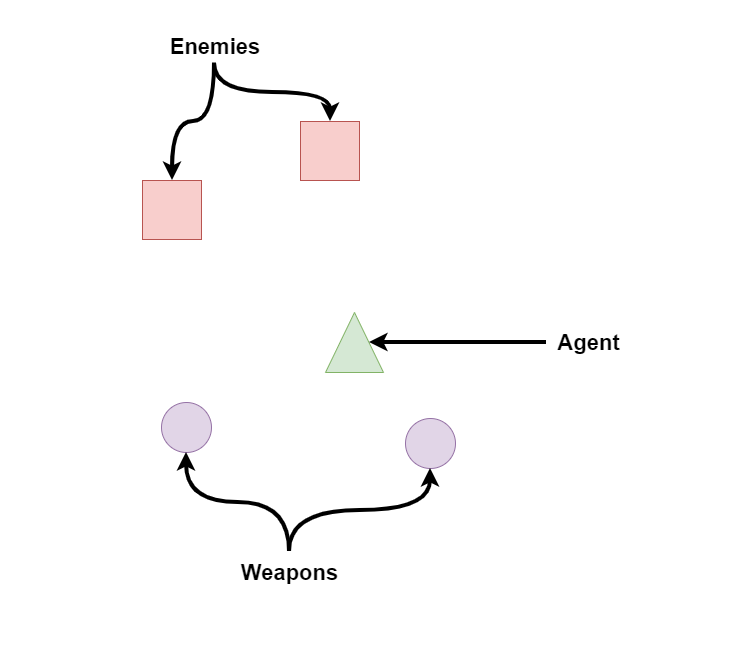

Before jumping into the details of the Grid Sensor, it is worth noting how agent performance and behavior differ qualitatively from agents that use raycasts. Unity MLAgents comes with a suite of example environments. One in particular, the [Food Collector](https://github.com/Unity-Technologies/ml-agents/blob/master/docs/Learning-Environment-Examples.md#food-collector), has been the focus of the Grid Sensor development. |

|||

|

|||

The Food Collector environment can be described as: |

|||

* Set-up: A multi-agent environment where agents compete to collect food. |

|||

* Goal: The agents must learn to collect as many green food spheres as possible while avoiding red spheres. |

|||

* Agents: The environment contains 5 agents with the same Behavior Parameters. |

|||

|

|||

When applying the Grid Sensor to this environment, in place of the Raycast Vector Sensor or the Camera Sensor, a Mean Reward of 40-50 is observed. This performance is on par with what is seen by agents trained with Raycasts, but the side-by-side comparison of trained agents shows a qualitative difference in behavior. A deeper study and interpretation of the qualitative differences between agents trained with Raycasts and Vector Sensors versus Grid Sensors is left to future studies. |

|||

|

|||

<img src="images/gridobs-vs-vectorobs.gif" align="middle" width="3000"/> |

|||

|

|||

## Overview |

|||

|

|||

There are 3 main phases to the Grid Sensor: |

|||

1. **Collection** - data is extracted from observed objects |

|||

2. **Encoding** - the extracted data is encoded into a grid observation |

|||

3. **Communication** - the grid observation is sent to python or used by a trained model |

|||

|

|||

These phases are described in the following sections. |

|||

|

|||

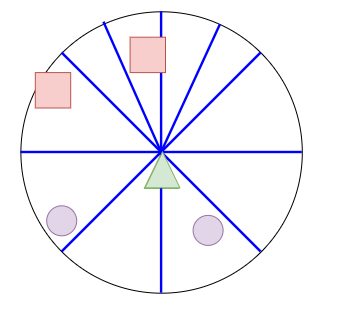

## Collection |

|||

|

|||

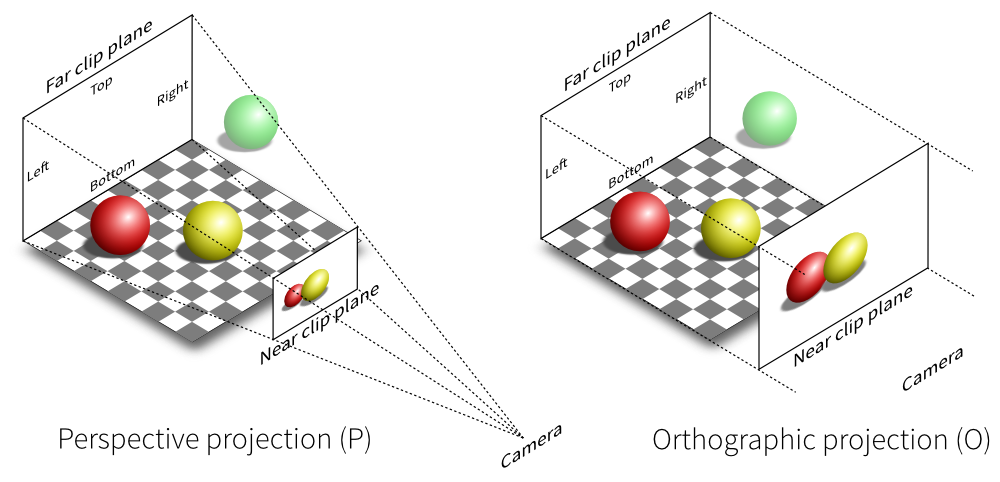

A Grid Sensor is the Grid Observation analog of a Unity Camera but with some notable differences. The sensor is made up of a grid of identical Box Colliders which designate the "cells" of the grid. The Grid Sensor also has a list of "detectable objects" in the form of Unity gameobject tags. When an object that is tagged as a detectable object is present within a cell's Box Collider, that cell is "activated" and a method on the Grid Sensor extracts data from said object and associates that data with the position of the activated cell. Thus the Grid Sensor is always orthographic: |

|||

|

|||

<img src="images/persp_ortho_proj.png" width="500"> |

|||

<cite><a href="https://www.geofx.com/graphics/nehe-three-js/lessons17-24/lesson21/lesson21.html">geofx.com</a></cite> |

|||

|

|||

In practice it has been useful to center the Grid Sensor on the agent in such a way that it is equivalent to having a "top-down" orthographic view of the agent. |
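
To make the top-down grid concrete, the following Python sketch maps a point, given in the agent's local frame, to the cell that contains it. It is only an illustration: the function name `cell_index` is hypothetical, the parameter names mirror the `cellScaleX`, `cellScaleZ`, `gridWidth` and `gridHeight` arguments of `SetParameters`, and objects are treated as points, whereas the sensor itself checks each cell's Box Collider for overlapping objects.

```python
import math


def cell_index(local_x, local_z, cell_scale_x, cell_scale_z, grid_width, grid_height):
    """Return (column, row) of the cell containing a point, or None if it is outside the grid.

    Assumes the grid is centered on the agent and aligned with its local X/Z axes.
    """
    half_width = grid_width * cell_scale_x / 2.0
    half_height = grid_height * cell_scale_z / 2.0
    # Shift the origin to the grid's corner, then divide by the cell size.
    column = math.floor((local_x + half_width) / cell_scale_x)
    row = math.floor((local_z + half_height) / cell_scale_z)
    if 0 <= column < grid_width and 0 <= row < grid_height:
        return column, row
    return None


center = cell_index(0.0, 0.0, 1.0, 1.0, 3, 3)
corner = cell_index(-1.4, 1.4, 1.0, 1.0, 3, 3)
outside = cell_index(2.0, 0.0, 1.0, 1.0, 3, 3)
```

With a 3x3 grid of unit cells, the agent's own position falls in the middle cell and a point two units to the side falls outside the grid.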

|||

|

|||

Just like the Raycasts mentioned earlier, the Grid Sensor can extract any kind of data from a detected object, and just like the Camera, the Grid Sensor maintains the spatial relationship between nearby cells that allows one to take advantage of the CNN literature. Thus the Grid Sensor tries to take the best of both sensors and combines them into something that is more expressive. |

|||

|

|||

### Example of Grid Observations |

|||

A Grid Observation is best described using an example and a side by side comparison with the Raycasts and the Camera. |

|||

|

|||

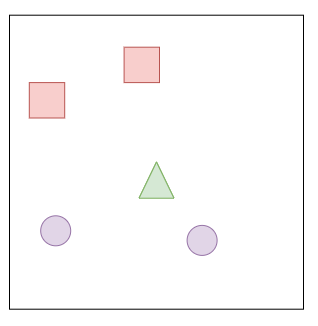

Let's imagine a scenario where an agent is faced with 2 enemies and there are 2 "equipable" weapons somewhat behind the agent. Let's also keep in mind some important properties of the enemies and weapons that would be useful for the agent to know. For simplicity, let's assume enemies represent their health as a percentage (0-100%). Let's also assume that enemies and weapons are the only 2 kinds of objects that the agent would see in the entire game. |

|||

|

|||

<img src="images/gridsensor-example.png" align="middle" width="3000"/> |

|||

|

|||

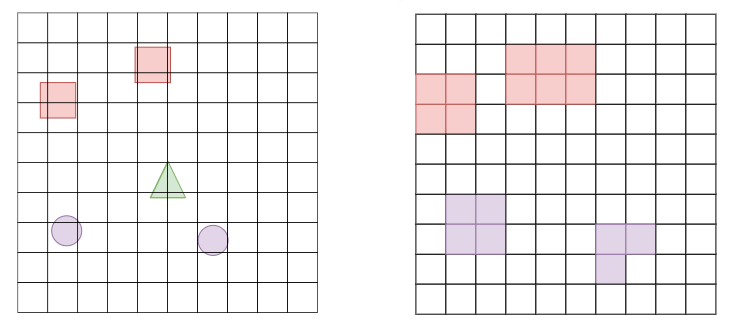

#### Raycasts |

|||

If a raycast hits an object, not only could we get the distance (normalized by the maximum raycast distance), we would also be able to extract its type (enemy vs weapon) and, if it's an enemy, its health (e.g., .6). |

|||

|

|||

There are many ways in which one could encode this information but one reasonable encoding is this: |

|||

``` |

|||

raycastData = [isWeapon, isEnemy, health, normalizedDistance] |

|||

``` |

|||

|

|||

For example, if the raycast hit nothing then this would be represented by `[0, 0, 0, 1]`. |

|||

If instead the raycast hit an enemy with 60% health that is 50% of the maximum raycast distance, the data would be represented by `[0, 1, .6, .5]`. |
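
The encoding above can be sketched in a few lines of Python. The function name `encode_raycast_hit` and its arguments are hypothetical and exist only for this illustration; they are not part of the MLAgents API.

```python
def encode_raycast_hit(hit_type=None, health=0.0, normalized_distance=1.0):
    """Encode one raycast as [isWeapon, isEnemy, health, normalizedDistance]."""
    is_weapon = 1.0 if hit_type == "weapon" else 0.0
    is_enemy = 1.0 if hit_type == "enemy" else 0.0
    # In this example health is only meaningful for enemies.
    health_value = health if hit_type == "enemy" else 0.0
    return [is_weapon, is_enemy, health_value, normalized_distance]


# A ray that hits nothing reports the maximum (normalized) distance.
miss = encode_raycast_hit()
# An enemy with 60% health at half of the maximum raycast distance.
enemy = encode_raycast_hit("enemy", health=0.6, normalized_distance=0.5)
```

Here `miss` and `enemy` correspond to the two cases described above.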

|||

|

|||

The limitations of raycasts which were presented above are easy to visualize in the image below. The agent is unable to see where the weapons are and only sees one of the enemies. Typically in the MLAgents examples, this situation is mitigated by including previous frames of data so that the agent observes changes through time. However, in more complex games, it is not difficult to imagine scenarios where an agent would not be able to observe important information using only Raycasts. |

|||

|

|||

<img src="images/gridsensor-example-raycast.png" align="middle" width="3000"/> |

|||

|

|||

#### Camera |

|||

|

|||

Instead, if we used a camera, the agent would be able to see around itself. It would be able to see both enemies and weapons (assuming its field of view was wide enough) and this could be processed by a CNN to encode this information. However, ignoring the obvious limitation that the game would have to be rendered, the agent would not have immediate access to the health value of the enemies. Perhaps textures are added to include "visible damage" to the enemies or there may be health bars above the enemies' heads, but both of these additions are subject to change, especially in a game that is in development. Using only the camera forces the agent to learn a different behavior, as it is not able to access what would otherwise be accessible data. |

|||

|

|||

<img src="images/gridsensor-example-camera.png" align="middle" width="3000"/> |

|||

|

|||

#### Grid Sensor |

|||

|

|||

The data extraction method of the Grid Sensor is as open-ended as using the Raycasts to collect data. The `GetObjectData` method on the Grid Sensor can be overridden to collect whatever information is deemed useful for the performance of the agent. By default, only the tag is used. |

|||

|

|||

```csharp |

|||

protected virtual float[] GetObjectData(GameObject currentColliderGo, float typeIndex, float normalizedDistance) |

|||

``` |

|||

|

|||

Following the same data extraction method presented in the section on raycasts, if a Grid Sensor was used instead of Raycasts or a Camera, then not only would the agent be able to extract the health value of the enemies but it would also be able to encode the relative positions of those objects as is done with Camera. Additionally, as the texture of the objects is not used, this data can be collected without rendering the scene. |

|||

|

|||

<img src="images/gridsensor-example-gridsensor.png" align="middle" width="3000"/> |

|||

|

|||

At the end of the Collection phase, each cell with an object inside of it has `GetObjectData` called and the returned values (named `channelValues`) are then processed in the Encoding phase, which is described in the next section. |

|||

|

|||

#### CountingGridSensor |

|||

|

|||

The CountingGridSensor builds on the GridSensor to perform the specific job of counting the number of objects of each type, where the types are the different detectable object tags. The encoding is meant to exploit a key feature of the Grid Sensor. In both the Channel and the Channel Hot DepthTypes, only the closest detectable object, in relation to the agent, that lies within a cell is used for encoding the value for that cell. In the CountingGridSensor, the number of each type of object is instead recorded and then normalized according to a max count, stored in the ChannelDepth. |

|||

|

|||

An example of the CountingGridSensor can be found below. |

|||

|

|||

|

|||

## Encoding |

|||

|

|||

In order to support different ways of representing the data extracted from an object, multiple "depth types" were implemented. Each has pros and cons and, depending on the use-case of the Grid Sensor, one may be more beneficial than the others. |

|||

|

|||

The data that is extracted during the *Collection* phase, and stored in `channelValues`, may come from different sources. For instance, going back to the Enemy/Weapon example in the previous section, an enemy's health is continuous whereas the object type (enemy or weapon) is categorical data. This distinction is important as categorical data requires a different encoding mechanism than continuous data. |

|||

|

|||

The Grid Sensor handles this distinction with 4 properties that define how this data is to be encoded: |

|||

* DepthType - Enum signifying the encoding mode: Channel, ChannelHot |

|||

* ObservationPerCell - the total number of values that are in each cell of the grid observation |

|||

* ChannelDepth - int[] describing the range of each data within the `channelValues` |

|||

* ChannelOffset - int[] describing the number of encoded values that come before each data within `channelValues` |

|||

|

|||

The ChannelDepth and the DepthType are user defined and give the developer control over how their data is encoded. The ObservationPerCell and ChannelOffset are both initialized and used in different ways depending on the ChannelDepth and the DepthType. |

|||

|

|||

How categorical and continuous data are treated differs between the DepthTypes, as will be explored in the sections below. The sections will use an on-going example similar to the example mentioned earlier where, within a cell, the sensor observes: `an enemy with 60% health`. Thus the cell contains 2 kinds of data: categorical data (object type) and continuous data (health). Additionally, the order of the observed tags is important as it allows one to encode the tag of the observed object by its index within the list of observed tags. Note that in the example, the observed tags are defined as ["weapon", "enemy"]. |

|||

|

|||

### Channel Based |

|||

|

|||

Channel Based Grid Observations are perhaps the simplest in terms of usability and similarity with other machine learning applications. Each grid is of size WxHxC where C is the number of channels. To distinguish between categorical and continuous data, one would use the ChannelDepth array to signify the ranges that the values in the `channelValues` array could take. If one sets ChannelDepth[i] to be 1, it is assumed that the value of `channelValues[i]` is already normalized. Otherwise, ChannelDepth[i] represents the total number of possible values that `channelValues[i]` can take. |

|||

|

|||

Using the example described earlier, if one was using Channel Based Grid Observations, they would have a ChannelDepth = {2, 1} to describe that there are two possible values for the first channel and the 1 represents that the second channel is already normalized. |

|||

As the "enemy" is in the second position of the observed tags, its value can be normalized by: |

|||

``` |

|||

num = detectableObjects.IndexOfTag("enemy")/ChannelDepth[0] = 2/2 = 1; |

|||

``` |

|||

|

|||

By using this formula, if there wasn't an object within the cell then the value would be 0. |

|||

|

|||

As the ChannelDepth for the second channel is defined as 1, the collected health value (60% = 0.6) can be encoded directly. Thus the encoded data at this cell is: |

|||

`[1, .6]` |

|||

|

|||

At the end of the Encoding phase, the resulting Grid Observation would be a WxHx2 matrix. |
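
As a rough illustration of the Channel Based encoding of a single cell, the Python sketch below reproduces the worked example. The helper `encode_channel` is hypothetical, and the tag index is assumed to be 1-based so that 0 can stand for an empty cell, as the formula above implies.

```python
def encode_channel(channel_values, channel_depth):
    """Normalize each collected value by its ChannelDepth entry.

    A depth of 1 means the value is already normalized and is used directly.
    """
    encoded = []
    for value, depth in zip(channel_values, channel_depth):
        if depth == 1:
            encoded.append(float(value))
        else:
            encoded.append(value / depth)
    return encoded


observed_tags = ["weapon", "enemy"]
# Assumed 1-based tag index: "weapon" -> 1, "enemy" -> 2, empty cell -> 0.
enemy_index = observed_tags.index("enemy") + 1

enemy_cell = encode_channel([enemy_index, 0.6], [2, 1])
empty_cell = encode_channel([0, 0.0], [2, 1])
```

Each cell yields two numbers, which is why the full observation in this example is a WxHx2 matrix.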

|||

|

|||

### Channel Hot |

|||

|

|||

The Channel Hot DepthType generalizes the classic OneHot encoding to differentiate combinations of different data. Rather than normalizing the data like in the Channel Based section, each element of `channelValues` is represented by an encoding based on the ChannelDepth. If ChannelDepth[i] = 1, then this represents that `channelValues[i]` is already normalized (between 0-1) and will be used directly within the encoding. However if ChannelDepth[i] is an integer greater than 1, then the value in `channelValues[i]` will be converted into a OneHot encoding based on the following: |

|||

|

|||

``` |

|||

float[] arr = new float[ChannelDepth[i] + 1]; |

|||

int index = (int) channelValues[i] + 1; |

|||

arr[index] = 1; |

|||

return arr; |

|||

``` |

|||

|

|||

The `+ 1` allows the first index of `arr` to be reserved for encoding "empty". |

|||

|

|||

The encoding of each channel is then concatenated together. Clearly using this setup allows the developer to be able to encode values using the classic OneHot encoding. Below are some different variations of the ChannelDepth which create different encodings of the example: |

|||

|

|||

##### ChannelDepth = {3, 1} |

|||

The first element, 3, signifies that there are 3 possibilities for the first channel and as the "enemy" is 2nd in the detected objects list, the "enemy" in the example is encoded as `[0, 0, 1]` where the first index represents "no object". The second element, 1, signifies that the health is already normalized and, following the table, is used directly. The resulting encoding is thus: |

|||

``` |

|||

[0, 0, 1, 0.6] |

|||

``` |

|||

|

|||

##### ChannelDepth = {3, 5} |

|||

|

|||

Like in the previous example, the "enemy" in the example is encoded as `[0, 0, 1]`. For the "health" however, the 5 signifies that the health should be represented by a OneHot encoding of 5 possible values, and in this case that encoding is `round(.6*5) = round(3) = 3 => [0, 0, 0, 1, 0]`. |

|||

|

|||

This encoding would then be concatenated together with the "enemy" encoding resulting in: |

|||

``` |

|||

enemy encoding => [0, 0, 1] |

|||

health encoding => [0, 0, 0, 1, 0] |

|||

final encoding => [0, 0, 1, 0, 0, 0, 1, 0] |

|||

``` |

|||

|

|||

The table below describes how other values of health would be mapped to OneHot encoding representations: |

|||

|

|||

| Range | OneHot Encoding | |

|||

|------------------|-----------------| |

|||

| health = 0 | [1, 0, 0, 0, 0] | |

|||

| 0 < health < .3 | [0, 1, 0, 0, 0] | |

|||

| .3 < health < .5 | [0, 0, 1, 0, 0] | |

|||

| .5 < health < .7 | [0, 0, 0, 1, 0] | |

|||

| .7 < health <= 1 | [0, 0, 0, 0, 1] | |
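
The two ChannelDepth variations above can be reproduced with a short Python sketch. It follows the worked examples, where a channel of depth N produces N values and index 0 of the object-type channel means "no object"; the array sizing inside the actual GridSensor may differ. The helper name `encode_channel_hot` is hypothetical.

```python
def encode_channel_hot(channel_values, channel_depth):
    """Concatenate per-channel encodings for one cell.

    Depth 1: the value is already normalized and is copied as-is.
    Depth > 1: the value is treated as an index into a OneHot vector of that depth.
    """
    encoded = []
    for value, depth in zip(channel_values, channel_depth):
        if depth == 1:
            encoded.append(float(value))
        else:
            one_hot = [0.0] * depth
            one_hot[int(value)] = 1.0
            encoded.extend(one_hot)
    return encoded


ENEMY = 2  # assumed indexing: 0 = no object, 1 = "weapon", 2 = "enemy"

depth_3_1 = encode_channel_hot([ENEMY, 0.6], [3, 1])
# For ChannelDepth = {3, 5} the health is first bucketed: round(.6 * 5) = 3.
depth_3_5 = encode_channel_hot([ENEMY, round(0.6 * 5)], [3, 5])
```

The first call yields four values per cell and the second yields eight, matching the final encodings shown above.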

|||

|

|||

|

|||

##### ChannelDepth = {1, 1} |

|||

This setting of ChannelDepth would throw an error as there is not enough information to encode the categorical data of the object type. |

|||

|

|||

|

|||

### CountingGridSensor |

|||

|

|||

As introduced above, the CountingGridSensor inherits from the GridSensor for the sole purpose of counting the different objects that lie within a cell. In order to normalize the counts so that the grid can be properly encoded as PNG, the ChannelDepth is used to represent the "maximum count" of each type. For the working example, if the ChannelDepth is set as {50, 10}, which represents that the maximum count for objects with the "weapon" and "enemy" tag is 50 and 10, respectively, then the resulting data would be: |

|||

``` |

|||

encoding = [0 weapons/ 50 weapons, 1 enemy / 10 enemies] = [0, .1] |

|||

``` |
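
A minimal Python sketch of this normalization follows. The helper `encode_counts` is hypothetical, and clamping counts at the maximum is an assumption made here so that values stay within 0-1; the source does not state how counts above the maximum are handled.

```python
def encode_counts(counts, channel_depth):
    """Normalize per-tag object counts by the maximum count for each tag."""
    return [
        min(count, max_count) / max_count  # clamp is an assumption, see above
        for count, max_count in zip(counts, channel_depth)
    ]


# 0 weapons and 1 enemy in the cell, with maximum counts of 50 and 10.
encoding = encode_counts([0, 1], [50, 10])
```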

|||

|

|||

## Communication |

|||

|

|||

At the end of the Encoding phase, all of the data for a Grid Observation is placed into a float[] referred to as the perception buffer. Now the data is ready to be sent to either the python side for training or to be used by a trained model within Unity. This is where the Grid Sensor takes advantage of 2D textures and the PNG encoding schema to reduce the number of bytes that are being sent. |

|||

|

|||

The 2D texture is a Unity class that encodes the colors of an image. It is used in many ways throughout Unity but it has 2 specific methods that the Grid Sensor takes advantage of: |

|||

|

|||

`SetPixels` takes an array of Colors (a flattened 2D array) and assigns the color values to the texture. |

|||

|

|||

`EncodeToPNG` returns a byte[] containing the PNG encoding of the colors of the texture. |

|||

|

|||

Together these 2 functions allow one to "push" a WxHx3 normalized array to a PNG byte[]. And indeed, this is how the Camera Sensor in Unity MLAgents sends its data to python. However, the grid sensor can have N channels so there needs to be a more generic way to send the data. |

|||

|

|||

The core idea behind how a Grid Observation is encoded is the following: |

|||

1. split the channels of a Grid Observation into groups of 3 |

|||

2. encode each of these groups as a PNG byte[] |

|||

3. concatenate all byte[] and send the combined array to python |

|||

4. reconstruct the Grid Observation by splitting up the array and decoding the sections |

|||

|

|||

Once the bytes are sent to python, they are then decoded and used as a tensor of the correct shape within the mlagents python codebase. |
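
Steps 1 and 4 of the list above (grouping channels and reconstructing them) can be sketched in plain Python. The PNG encoding and decoding in steps 2 and 3 are deliberately left out, the helper names are hypothetical, and padding the last group with zeros is an assumption made for this sketch rather than a description of the actual implementation.

```python
def split_into_rgb_groups(cells, channels):
    """Split each cell's channels into groups of 3, one group per would-be PNG image."""
    num_groups = (channels + 2) // 3  # ceiling division
    groups = []
    for g in range(num_groups):
        group = []
        for cell in cells:
            chunk = cell[3 * g: 3 * g + 3]
            # Pad the final group so every "pixel" has exactly 3 values (assumption).
            chunk = chunk + [0.0] * (3 - len(chunk))
            group.append(chunk)
        groups.append(group)
    return groups


def merge_rgb_groups(groups, channels):
    """Rebuild the original cells from the 3-channel groups, dropping any padding."""
    cells = []
    for i in range(len(groups[0])):
        merged = []
        for group in groups:
            merged.extend(group[i])
        cells.append(merged[:channels])
    return cells


# Two cells with 4 channels each, so two groups are needed.
grid = [[0.0, 0.0, 1.0, 0.6], [1.0, 0.0, 0.0, 0.0]]
groups = split_into_rgb_groups(grid, channels=4)
restored = merge_rgb_groups(groups, channels=4)
```

In this example the fourth channel ends up alone in the second group, and merging the groups returns the original cells.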

|||

|

|||

using System; |

|||

using UnityEngine; |

|||

using UnityEngine.Assertions; |

|||

|

|||

namespace Unity.MLAgents.Extensions.Sensors |

|||

{ |

|||

public class CountingGridSensor : GridSensor |

|||

{ |

|||

/// <inheritdoc/>

|

|||

public override void InitDepthType() |

|||

{ |

|||

ObservationPerCell = ChannelDepth.Length; |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// Overrides the initialization of the m_ChannelHotDefaultPerceptionBuffer with 0s

|

|||

/// because the counting grid sensor starts with every count equal to 0

|

|||

/// </summary>

|

|||

public override void InitChannelHotDefaultPerceptionBuffer() |

|||

{ |

|||

m_ChannelHotDefaultPerceptionBuffer = new float[ObservationPerCell]; |

|||

} |

|||

|

|||

/// <inheritdoc/>

|

|||

public override void SetParameters(string[] detectableObjects, int[] channelDepth, GridDepthType gridDepthType, |

|||

float cellScaleX, float cellScaleZ, int gridWidth, int gridHeight, int observeMaskInt, bool rotateToAgent, Color[] debugColors) |

|||

{ |

|||

this.ObserveMask = observeMaskInt; |

|||

this.DetectableObjects = detectableObjects; |

|||

this.ChannelDepth = channelDepth; |

|||

if (DetectableObjects.Length != ChannelDepth.Length) |

|||

throw new UnityAgentsException("The channels of a CountingGridSensor is equal to the number of detectableObjects"); |

|||

this.gridDepthType = GridDepthType.Channel; |

|||

this.CellScaleX = cellScaleX; |

|||

this.CellScaleZ = cellScaleZ; |

|||

this.GridNumSideX = gridWidth; |

|||

this.GridNumSideZ = gridHeight; |

|||

this.RotateToAgent = rotateToAgent; |

|||

this.DiffNumSideZX = (GridNumSideZ - GridNumSideX); |

|||

this.OffsetGridNumSide = (GridNumSideZ - 1f) / 2f; |

|||

this.DebugColors = debugColors; |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// For each collider, calls LoadObjectData on the gameobject

|

|||

/// </summary>

|

|||

/// <param name="foundColliders">The array of colliders</param>

|

|||

/// <param name="cellIndex">The cell index the collider is in</param>

|

|||

/// <param name="cellCenter">the center of the cell the collider is in</param>

|

|||

protected override void ParseColliders(Collider[] foundColliders, int cellIndex, Vector3 cellCenter) |

|||

{ |

|||

GameObject currentColliderGo = null; |

|||

Vector3 closestColliderPoint = Vector3.zero; |

|||

|

|||

for (int i = 0; i < foundColliders.Length; i++) |

|||

{ |

|||

currentColliderGo = foundColliders[i].gameObject; |

|||

|

|||

// Continue if the current collider go is the root reference

|

|||

if (currentColliderGo == rootReference) |

|||

continue; |

|||

|

|||

closestColliderPoint = foundColliders[i].ClosestPointOnBounds(cellCenter); |

|||

|

|||

LoadObjectData(currentColliderGo, cellIndex, |