当前提交

5e980ec1

共有 131 个文件被更改,包括 6269 次插入 和 5295 次删除

-

2.yamato/gym-interface-test.yml

-

4.yamato/protobuf-generation-test.yml

-

5.yamato/python-ll-api-test.yml

-

8.yamato/standalone-build-test.yml

-

11.yamato/training-int-tests.yml

-

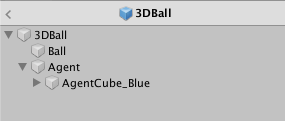

22Project/Assets/ML-Agents/Examples/3DBall/Prefabs/3DBallHardNew.prefab

-

936Project/Assets/ML-Agents/Examples/Basic/Prefabs/Basic.prefab

-

928Project/Assets/ML-Agents/Examples/Bouncer/Prefabs/Environment.prefab

-

22Project/Assets/ML-Agents/Examples/Crawler/Prefabs/DynamicPlatform.prefab

-

13Project/Assets/ML-Agents/Examples/Crawler/Prefabs/FixedPlatform.prefab

-

153Project/Assets/ML-Agents/Examples/FoodCollector/Prefabs/FoodCollectorArea.prefab

-

32Project/Assets/ML-Agents/Examples/GridWorld/Prefabs/Area.prefab

-

43Project/Assets/ML-Agents/Examples/Hallway/Prefabs/SymbolFinderArea.prefab

-

64Project/Assets/ML-Agents/Examples/PushBlock/Prefabs/PushBlockArea.prefab

-

85Project/Assets/ML-Agents/Examples/Pyramids/Prefabs/AreaPB.prefab

-

22Project/Assets/ML-Agents/Examples/Reacher/Prefabs/Agent.prefab

-

6Project/Assets/ML-Agents/Examples/SharedAssets/Scripts/ModelOverrider.cs

-

4Project/Assets/ML-Agents/Examples/SharedAssets/Scripts/ProjectSettingsOverrides.cs

-

3Project/Assets/ML-Agents/Examples/SharedAssets/Scripts/SensorBase.cs

-

256Project/Assets/ML-Agents/Examples/Soccer/Prefabs/SoccerFieldTwos.prefab

-

44Project/Assets/ML-Agents/Examples/Tennis/Prefabs/TennisArea.prefab

-

7Project/Assets/ML-Agents/Examples/Tennis/Scripts/TennisAgent.cs

-

22Project/Assets/ML-Agents/Examples/Walker/Prefabs/WalkerPair.prefab

-

64Project/Assets/ML-Agents/Examples/WallJump/Prefabs/WallJumpArea.prefab

-

3Project/ProjectSettings/GraphicsSettings.asset

-

2Project/ProjectSettings/UnityConnectSettings.asset

-

2README.md

-

7com.unity.ml-agents/CHANGELOG.md

-

24com.unity.ml-agents/Runtime/Academy.cs

-

35com.unity.ml-agents/Runtime/Agent.cs

-

5com.unity.ml-agents/Runtime/Communicator/RpcCommunicator.cs

-

34com.unity.ml-agents/Runtime/DecisionRequester.cs

-

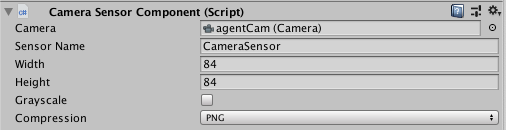

3com.unity.ml-agents/Runtime/Sensors/CameraSensor.cs

-

6com.unity.ml-agents/Runtime/Sensors/ISensor.cs

-

3com.unity.ml-agents/Runtime/Sensors/RayPerceptionSensor.cs

-

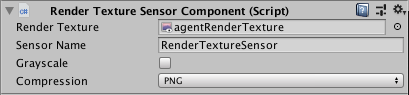

3com.unity.ml-agents/Runtime/Sensors/RenderTextureSensor.cs

-

13com.unity.ml-agents/Runtime/Sensors/StackingSensor.cs

-

6com.unity.ml-agents/Runtime/Sensors/VectorSensor.cs

-

44com.unity.ml-agents/Runtime/SideChannels/IncomingMessage.cs

-

69com.unity.ml-agents/Runtime/Timer.cs

-

59com.unity.ml-agents/Tests/Editor/MLAgentsEditModeTest.cs

-

1com.unity.ml-agents/Tests/Editor/ParameterLoaderTest.cs

-

102com.unity.ml-agents/Tests/Editor/PublicAPI/PublicApiValidation.cs

-

1com.unity.ml-agents/Tests/Editor/Sensor/FloatVisualSensorTests.cs

-

1com.unity.ml-agents/Tests/Editor/Sensor/SensorShapeValidatorTests.cs

-

18com.unity.ml-agents/Tests/Editor/Sensor/StackingSensorTests.cs

-

26com.unity.ml-agents/Tests/Editor/SideChannelTests.cs

-

2com.unity.ml-agents/Tests/Editor/TimerTest.cs

-

16com.unity.ml-agents/package.json

-

3docs/Getting-Started.md

-

10docs/ML-Agents-Overview.md

-

4docs/Migrating.md

-

141docs/Python-API.md

-

10docs/Training-ML-Agents.md

-

11docs/Training-PPO.md

-

11docs/Training-SAC.md

-

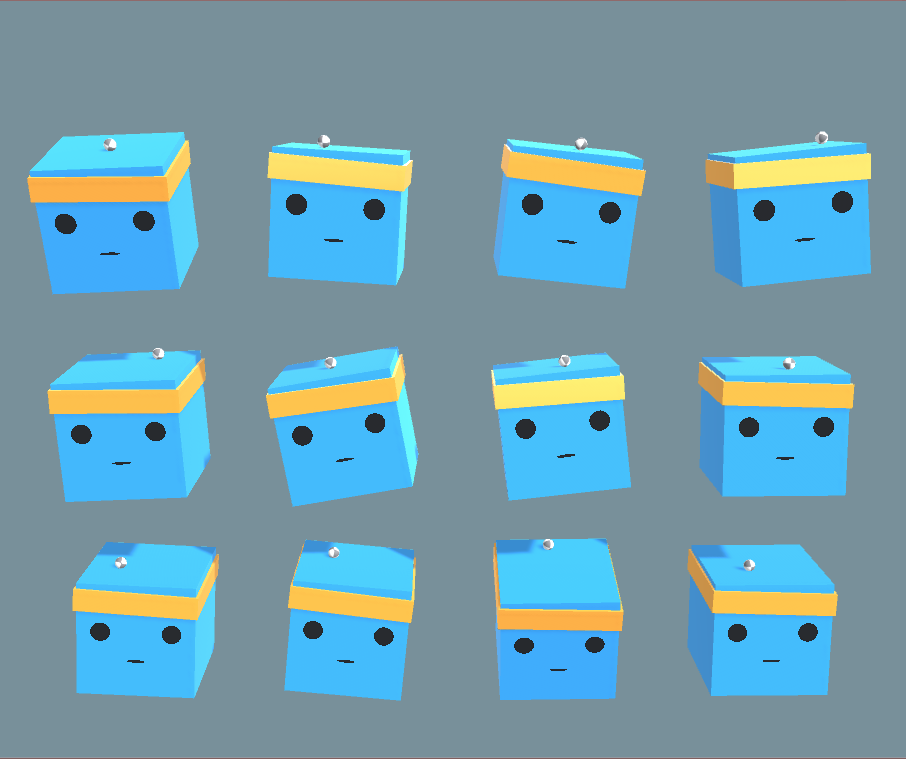

999docs/images/3dball_big.png

-

852docs/images/3dball_small.png

-

974docs/images/curriculum.png

-

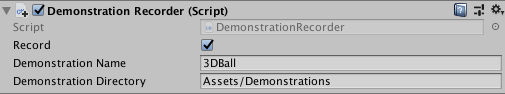

150docs/images/demo_component.png

-

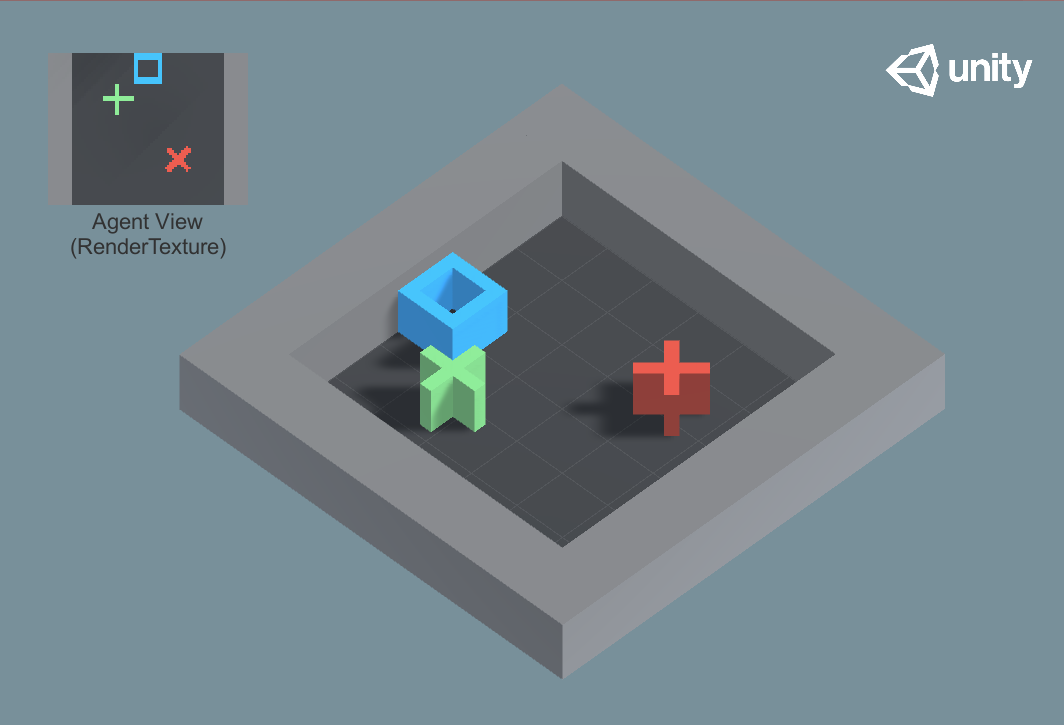

980docs/images/gridworld.png

-

999docs/images/ml-agents-LSTM.png

-

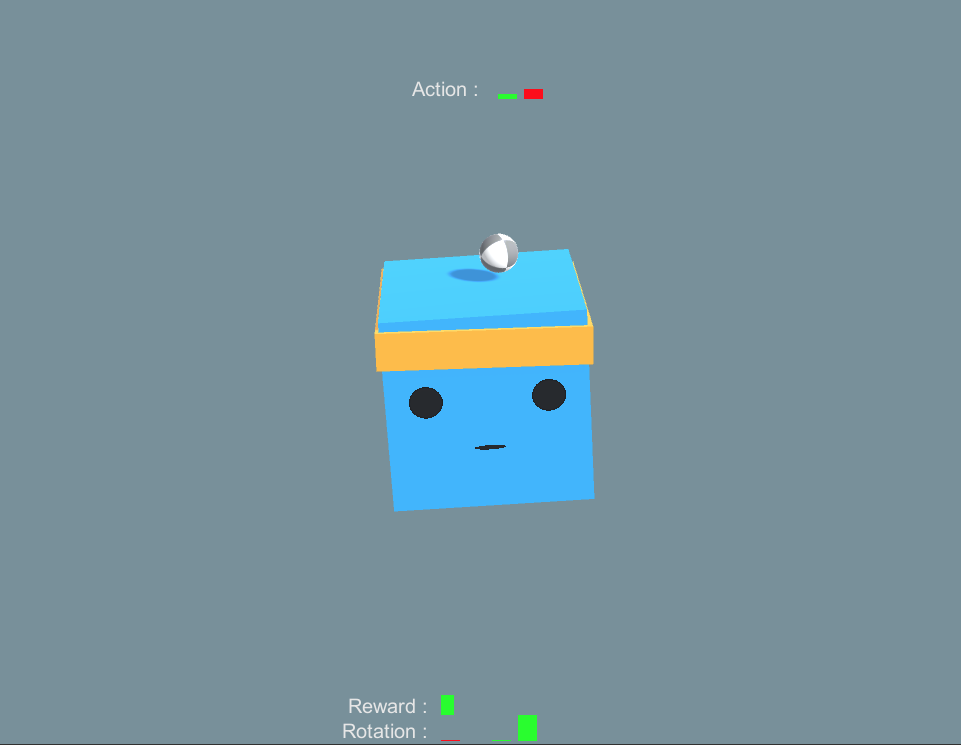

181docs/images/monitor.png

-

219docs/images/platform_prefab.png

-

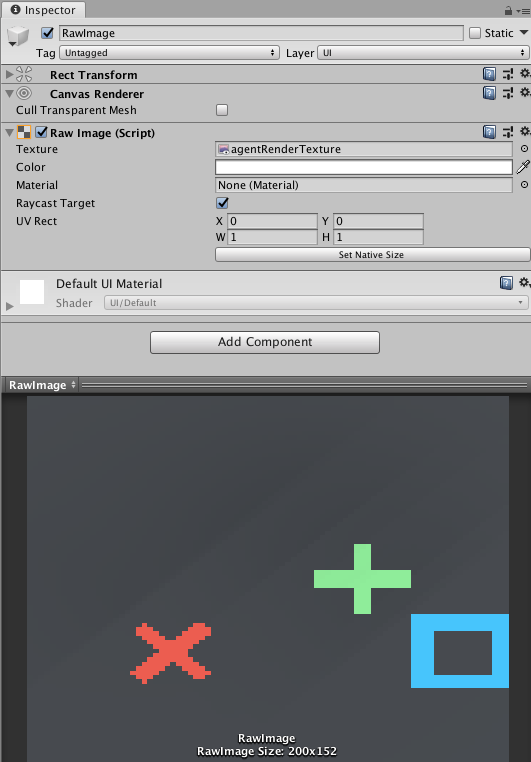

349docs/images/visual-observation-rawimage.png

-

95docs/images/visual-observation-rendertexture.png

-

107docs/images/visual-observation.png

-

15gym-unity/README.md

-

342gym-unity/gym_unity/envs/__init__.py

-

198gym-unity/gym_unity/tests/test_gym.py

-

249ml-agents-envs/mlagents_envs/base_env.py

-

104ml-agents-envs/mlagents_envs/environment.py

-

99ml-agents-envs/mlagents_envs/rpc_utils.py

-

38ml-agents-envs/mlagents_envs/side_channel/incoming_message.py

-

43ml-agents-envs/mlagents_envs/tests/test_envs.py

-

170ml-agents-envs/mlagents_envs/tests/test_rpc_utils.py

-

20ml-agents-envs/mlagents_envs/tests/test_side_channel.py

-

7ml-agents-envs/mlagents_envs/tests/test_timers.py

-

39ml-agents-envs/mlagents_envs/timers.py

-

180ml-agents/mlagents/trainers/agent_processor.py

-

16ml-agents/mlagents/trainers/brain_conversion_utils.py

-

73ml-agents/mlagents/trainers/demo_loader.py

-

37ml-agents/mlagents/trainers/env_manager.py

-

55ml-agents/mlagents/trainers/ghost/trainer.py

-

32ml-agents/mlagents/trainers/learn.py

-

10ml-agents/mlagents/trainers/policy/nn_policy.py

-

4ml-agents/mlagents/trainers/policy/policy.py

-

81ml-agents/mlagents/trainers/policy/tf_policy.py

-

4ml-agents/mlagents/trainers/ppo/trainer.py

-

4ml-agents/mlagents/trainers/sac/trainer.py

-

26ml-agents/mlagents/trainers/simple_env_manager.py

-

24ml-agents/mlagents/trainers/subprocess_env_manager.py

-

42ml-agents/mlagents/trainers/tests/mock_brain.py

-

137ml-agents/mlagents/trainers/tests/simple_test_envs.py

-

54ml-agents/mlagents/trainers/tests/test_agent_processor.py

-

32ml-agents/mlagents/trainers/tests/test_demo_loader.py

-

12ml-agents/mlagents/trainers/tests/test_ghost.py

-

2ml-agents/mlagents/trainers/tests/test_learn.py

-

55ml-agents/mlagents/trainers/tests/test_nn_policy.py

-

24ml-agents/mlagents/trainers/tests/test_policy.py

936

Project/Assets/ML-Agents/Examples/Basic/Prefabs/Basic.prefab

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

928

Project/Assets/ML-Agents/Examples/Bouncer/Prefabs/Environment.prefab

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

|

|||

{ |

|||

"name": "com.unity.ml-agents", |

|||

"displayName":"ML Agents", |

|||

"version": "0.15.0-preview", |

|||

"unity": "2018.4", |

|||

"description": "Add interactivity to your game with Machine Learning Agents trained using Deep Reinforcement Learning.", |

|||

"dependencies": { |

|||

"com.unity.barracuda": "0.6.1-preview" |

|||

} |

|||

"name": "com.unity.ml-agents", |

|||

"displayName": "ML Agents", |

|||

"version": "0.15.1-preview", |

|||

"unity": "2018.4", |

|||

"description": "Add interactivity to your game with Machine Learning Agents trained using Deep Reinforcement Learning.", |

|||

"dependencies": { |

|||

"com.unity.barracuda": "0.6.1-preview" |

|||

} |

|||

} |

|||

999

docs/images/3dball_big.png

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

999

docs/images/ml-agents-LSTM.png

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

部分文件因为文件数量过多而无法显示

撰写

预览

正在加载...

取消

保存

Reference in new issue