Current commit

53bea15c

181 files changed, with 3535 insertions and 3419 deletions

-

7.circleci/config.yml

-

6.yamato/com.unity.ml-agents-test.yml

-

8DevProject/Packages/manifest.json

-

4DevProject/ProjectSettings/ProjectVersion.txt

-

12Project/Assets/ML-Agents/Examples/3DBall/Scenes/3DBall.unity

-

12Project/Assets/ML-Agents/Examples/3DBall/Scenes/3DBallHard.unity

-

10Project/Assets/ML-Agents/Examples/3DBall/Scripts/Ball3DAgent.cs

-

10Project/Assets/ML-Agents/Examples/3DBall/Scripts/Ball3DHardAgent.cs

-

9Project/Assets/ML-Agents/Examples/Basic/Scenes/Basic.unity

-

4Project/Assets/ML-Agents/Examples/Basic/Scripts/BasicController.cs

-

14Project/Assets/ML-Agents/Examples/Bouncer/Scripts/BouncerAgent.cs

-

10Project/Assets/ML-Agents/Examples/Crawler/Scenes/CrawlerDynamicTarget.unity

-

6Project/Assets/ML-Agents/Examples/Crawler/Scripts/CrawlerAgent.cs

-

7Project/Assets/ML-Agents/Examples/FoodCollector/Scripts/FoodCollectorAgent.cs

-

1001Project/Assets/ML-Agents/Examples/GridWorld/Demos/ExpertGrid.demo

-

28Project/Assets/ML-Agents/Examples/GridWorld/Scenes/GridWorld.unity

-

12Project/Assets/ML-Agents/Examples/GridWorld/Scripts/GridAgent.cs

-

2Project/Assets/ML-Agents/Examples/GridWorld/Scripts/GridArea.cs

-

9Project/Assets/ML-Agents/Examples/Hallway/Scripts/HallwayAgent.cs

-

9Project/Assets/ML-Agents/Examples/PushBlock/Scripts/PushAgentBasic.cs

-

1001Project/Assets/ML-Agents/Examples/Pyramids/Demos/ExpertPyramid.demo

-

9Project/Assets/ML-Agents/Examples/Pyramids/Scripts/PyramidAgent.cs

-

6Project/Assets/ML-Agents/Examples/Reacher/Scripts/ReacherAgent.cs

-

2Project/Assets/ML-Agents/Examples/SharedAssets/Scripts/GroundContact.cs

-

5Project/Assets/ML-Agents/Examples/SharedAssets/Scripts/ModelOverrider.cs

-

440Project/Assets/ML-Agents/Examples/Soccer/Prefabs/SoccerFieldFives.prefab

-

176Project/Assets/ML-Agents/Examples/Soccer/Prefabs/SoccerFieldTwos.prefab

-

12Project/Assets/ML-Agents/Examples/Soccer/Scripts/AgentSoccer.cs

-

2Project/Assets/ML-Agents/Examples/Soccer/Scripts/SoccerFieldArea.cs

-

34Project/Assets/ML-Agents/Examples/Startup/Scripts/Startup.cs

-

722Project/Assets/ML-Agents/Examples/Template/AgentPrefabsAndColors.unity

-

133Project/Assets/ML-Agents/Examples/Template/Scene.unity

-

4Project/Assets/ML-Agents/Examples/Template/Scripts/TemplateAgent.cs

-

4Project/Assets/ML-Agents/Examples/Tennis/Scripts/HitWall.cs

-

8Project/Assets/ML-Agents/Examples/Tennis/Scripts/TennisAgent.cs

-

8Project/Assets/ML-Agents/Examples/Walker/Scripts/WalkerAgent.cs

-

16Project/Assets/ML-Agents/Examples/WallJump/Scripts/WallJumpAgent.cs

-

4Project/Packages/manifest.json

-

5Project/ProjectSettings/ProjectSettings.asset

-

2Project/ProjectSettings/ProjectVersion.txt

-

110README.md

-

25com.unity.ml-agents/CHANGELOG.md

-

93com.unity.ml-agents/Documentation~/com.unity.ml-agents.md

-

64com.unity.ml-agents/Editor/BehaviorParametersEditor.cs

-

27com.unity.ml-agents/Editor/RayPerceptionSensorComponentBaseEditor.cs

-

10com.unity.ml-agents/Runtime/Academy.cs

-

154com.unity.ml-agents/Runtime/Agent.cs

-

12com.unity.ml-agents/Runtime/Communicator/GrpcExtensions.cs

-

61com.unity.ml-agents/Runtime/Communicator/RpcCommunicator.cs

-

9com.unity.ml-agents/Runtime/DecisionRequester.cs

-

16com.unity.ml-agents/Runtime/Inference/BarracudaModelParamLoader.cs

-

2com.unity.ml-agents/Runtime/Inference/ModelRunner.cs

-

164com.unity.ml-agents/Runtime/Policies/BehaviorParameters.cs

-

20com.unity.ml-agents/Runtime/Policies/BrainParameters.cs

-

19com.unity.ml-agents/Runtime/Sensors/CameraSensor.cs

-

82com.unity.ml-agents/Runtime/Sensors/CameraSensorComponent.cs

-

29com.unity.ml-agents/Runtime/Sensors/RayPerceptionSensor.cs

-

8com.unity.ml-agents/Runtime/Sensors/RayPerceptionSensorComponent3D.cs

-

75com.unity.ml-agents/Runtime/Sensors/RayPerceptionSensorComponentBase.cs

-

28com.unity.ml-agents/Runtime/Sensors/RenderTextureSensor.cs

-

57com.unity.ml-agents/Runtime/Sensors/RenderTextureSensorComponent.cs

-

31com.unity.ml-agents/Runtime/SideChannels/EngineConfigurationChannel.cs

-

109com.unity.ml-agents/Runtime/SideChannels/FloatPropertiesChannel.cs

-

10com.unity.ml-agents/Runtime/SideChannels/RawBytesChannel.cs

-

13com.unity.ml-agents/Runtime/SideChannels/SideChannel.cs

-

178com.unity.ml-agents/Tests/Editor/MLAgentsEditModeTest.cs

-

69com.unity.ml-agents/Tests/Editor/SideChannelTests.cs

-

4com.unity.ml-agents/package.json

-

2config/sac_trainer_config.yaml

-

4config/trainer_config.yaml

-

21docs/Getting-Started-with-Balance-Ball.md

-

160docs/Installation.md

-

5docs/Learning-Environment-Best-Practices.md

-

38docs/Learning-Environment-Create-New.md

-

47docs/Learning-Environment-Design-Agents.md

-

33docs/Learning-Environment-Design.md

-

4docs/Learning-Environment-Examples.md

-

35docs/Limitations.md

-

29docs/Migrating.md

-

236docs/Python-API.md

-

3docs/Readme.md

-

2docs/Training-Curriculum-Learning.md

-

37docs/Training-Imitation-Learning.md

-

1docs/Training-ML-Agents.md

-

2docs/Unity-Inference-Engine.md

-

9docs/Using-Docker.md

-

12docs/Using-Virtual-Environment.md

-

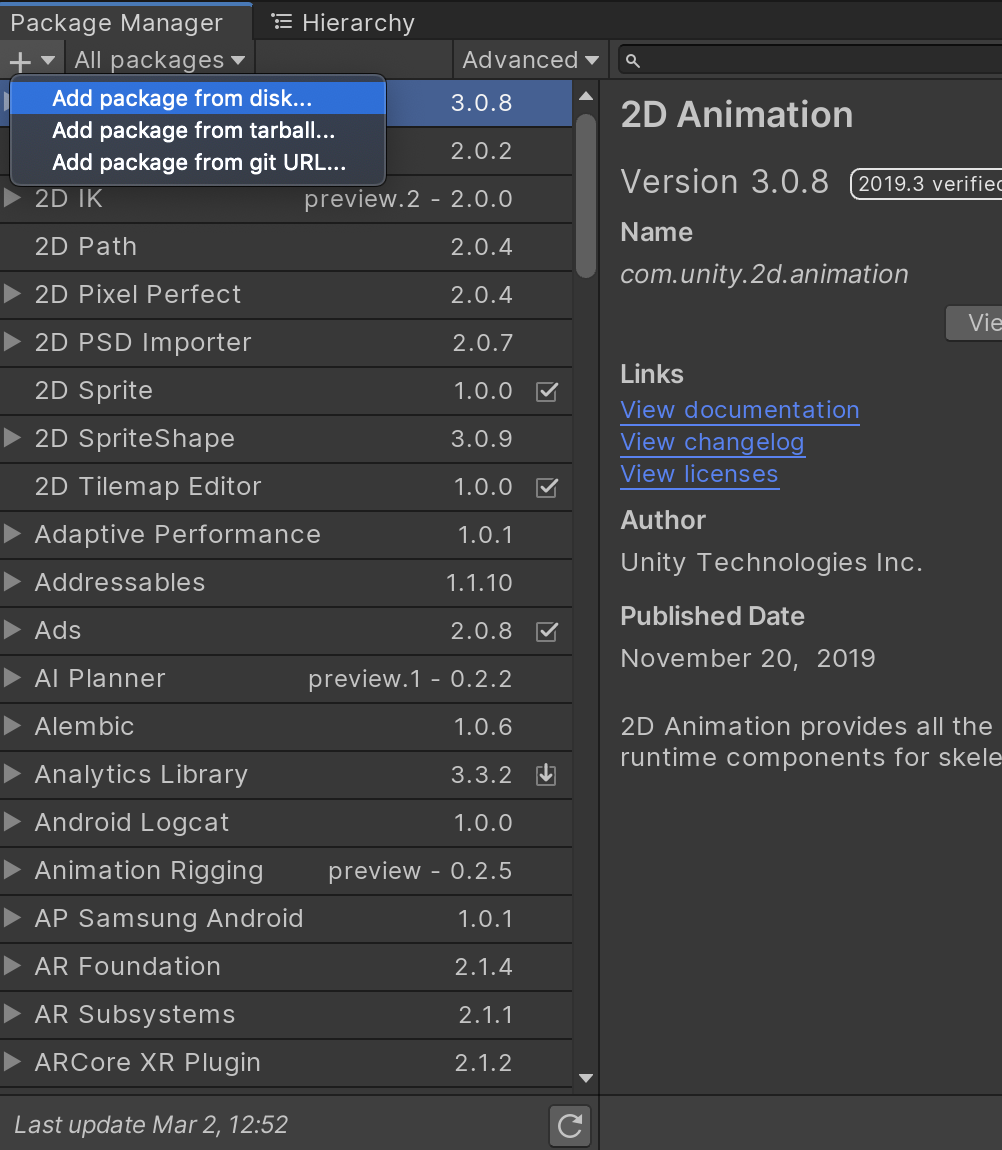

951docs/images/unity_package_manager_window.png

-

2docs/localized/KR/docs/Installation.md

-

2docs/localized/zh-CN/docs/Installation.md

-

2gym-unity/gym_unity/__init__.py

-

2ml-agents-envs/mlagents_envs/__init__.py

-

10ml-agents-envs/mlagents_envs/base_env.py

-

4ml-agents-envs/mlagents_envs/communicator.py

-

7ml-agents-envs/mlagents_envs/environment.py

-

5ml-agents-envs/mlagents_envs/exception.py

-

3ml-agents-envs/mlagents_envs/rpc_communicator.py

-

7ml-agents-envs/mlagents_envs/rpc_utils.py

-

4ml-agents-envs/mlagents_envs/side_channel/__init__.py

-

23ml-agents-envs/mlagents_envs/side_channel/engine_configuration_channel.py

-m_EditorVersion: 2019.3.0f6
-m_EditorVersionWithRevision: 2019.3.0f6 (27ab2135bccf)
+m_EditorVersion: 2019.3.3f1
+m_EditorVersionWithRevision: 2019.3.3f1 (7ceaae5f7503)

1001 Project/Assets/ML-Agents/Examples/GridWorld/Demos/ExpertGrid.demo

File diff suppressed because it is too large.

1001 Project/Assets/ML-Agents/Examples/Pyramids/Demos/ExpertPyramid.demo

File diff suppressed because it is too large.

722 Project/Assets/ML-Agents/Examples/Template/AgentPrefabsAndColors.unity

File diff suppressed because it is too large.

-m_EditorVersion: 2018.4.14f1
+m_EditorVersion: 2018.4.18f1

Please see the [ML-Agents README](https://github.com/Unity-Technologies/ml-agents/blob/master/README.md).

# About ML-Agents package (`com.unity.ml-agents`)

The Unity ML-Agents package contains the C# SDK for the
[Unity ML-Agents Toolkit](https://github.com/Unity-Technologies/ml-agents).

The package allows any Unity scene to be converted into a learning
environment where character behaviors can be trained using a variety of machine learning
algorithms. Additionally, it enables any trained behavior to be embedded back into the Unity
scene. More specifically, the package provides the following core functionalities:

* Define Agents: entities whose behavior will be learned. Agents are entities
  that generate observations (through sensors), take actions, and receive rewards from
  the environment.
* Define Behaviors: entities that specify how an agent should act. Multiple agents can
  share the same Behavior, and a scene may have multiple Behaviors.
* Record demonstrations of an agent within the Editor. These demonstrations can be
  valuable for training a behavior for that agent.
* Embed a trained behavior into the scene via the
  [Unity Inference Engine](https://docs.unity3d.com/Packages/com.unity.barracuda@latest/index.html).
  Thus an Agent can switch from a learning behavior to an inference behavior.

Note that this package does not contain the machine learning algorithms for training
behaviors. It relies on a Python package to orchestrate the training. This package
only enables instrumenting a Unity scene and setting it up for training, and then
embedding the trained model back into your Unity scene.

## Preview package

This package is available as a preview, so it is not ready for production use.
The features and documentation in this package might change before it is verified for release.

## Package contents

The following table describes the package folder structure:

|**Location**|**Description**|
|---|---|
|*Documentation~*|Contains the documentation for the Unity package.|
|*Editor*|Contains utilities for Editor windows and drawers.|
|*Plugins*|Contains third-party DLLs.|
|*Runtime*|Contains core C# APIs for integrating ML-Agents into your Unity scene.|
|*Tests*|Contains the unit tests for the package.|

<a name="Installation"></a>

## Installation

To install this package, follow the instructions in the
[Package Manager documentation](https://docs.unity3d.com/Manual/upm-ui-install.html).

To install the Python package to enable training behaviors, follow the instructions on our
[GitHub repository](https://github.com/Unity-Technologies/ml-agents/blob/latest_release/docs/Installation.md).

## Requirements

This version of the Unity ML-Agents package is compatible with the following versions of the Unity Editor:

* 2018.4 and later (recommended)

## Known limitations

### Headless Mode

If you enable Headless mode, you will not be able to collect visual observations
from your agents.

### Rendering Speed and Synchronization

Currently, the speed of the game physics can be increased to at most 100x real-time.
The Academy also moves in time with FixedUpdate() rather than Update(), so game
behavior implemented in Update() may be out of sync with the agent decision
making. See
[Execution Order of Event Functions](https://docs.unity3d.com/Manual/ExecutionOrder.html)
for more information.
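To put the 100x cap in perspective, here is a small Python sketch of the arithmetic. It assumes the simulation actually sustains the requested time scale (in practice the achieved speed-up may be lower) and that requests above the cap are clamped to it. The names `MAX_TIME_SCALE` and `wall_clock_seconds` are illustrative and are not part of ML-Agents.

```python
MAX_TIME_SCALE = 100.0  # cap stated in the text; the constant name is illustrative


def wall_clock_seconds(simulated_seconds: float, time_scale: float) -> float:
    """Best-case wall-clock time needed to simulate `simulated_seconds`."""
    if time_scale <= 0:
        raise ValueError("time_scale must be positive")
    # Assumption of this sketch: requests above the cap are clamped to the cap.
    effective_scale = min(time_scale, MAX_TIME_SCALE)
    return simulated_seconds / effective_scale


one_hour = 3600.0
print(wall_clock_seconds(one_hour, 20.0))   # 180.0
print(wall_clock_seconds(one_hour, 500.0))  # 36.0 (clamped to 100x)
```

Under these assumptions, one hour of simulated physics needs at least 36 seconds of wall-clock time, however high the requested time scale.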

You can control the frequency of Academy stepping by calling
`Academy.Instance.DisableAutomaticStepping()`, and then calling
`Academy.Instance.EnvironmentStep()` yourself.
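The pattern of disabling automatic stepping and then stepping by hand can be illustrated with a toy model. The Python sketch below is not the ML-Agents API: `ToyAcademy` and its methods are hypothetical stand-ins that only mimic the idea of a physics tick that advances the Academy automatically until the caller takes over.

```python
class ToyAcademy:
    """Toy stand-in for illustration only; not the ML-Agents Academy class."""

    def __init__(self) -> None:
        self.automatic_stepping = True
        self.step_count = 0

    def disable_automatic_stepping(self) -> None:
        self.automatic_stepping = False

    def environment_step(self) -> None:
        self.step_count += 1

    def on_fixed_update(self) -> None:
        # Called once per physics tick by the toy game loop below.
        if self.automatic_stepping:
            self.environment_step()


academy = ToyAcademy()
academy.disable_automatic_stepping()

for tick in range(10):
    academy.on_fixed_update()       # no longer advances the academy
    if tick % 5 == 0:
        academy.environment_step()  # the caller decides when to step

print(academy.step_count)  # 2
```

With automatic stepping left enabled, the same loop would advance the toy academy on every tick in addition to the two manual steps.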

### Unity Inference Engine Models

Currently, only models created with our trainers are supported for running
ML-Agents with a neural network behavior.

## Helpful links

If you are new to the Unity ML-Agents package, or have a question after reading
the documentation, you can check out our
[GitHub repository](https://github.com/Unity-Technologies/ml-agents), which
also includes a number of ways to
[connect with us](https://github.com/Unity-Technologies/ml-agents#community-and-feedback),
including our [ML-Agents Forum](https://forum.unity.com/forums/ml-agents.453/).

{
"name": "com.unity.ml-agents",
"displayName":"ML Agents",
-"version": "0.14.1-preview",
+"version": "0.15.0-preview",

-"com.unity.barracuda": "0.6.0-preview"
+"com.unity.barracuda": "0.6.1-preview"
}
}
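As a quick check of a bump like this, a package manifest can be read with Python's standard `json` module. This is only a sketch: the manifest string below contains just the fields visible in this diff, and placing `com.unity.barracuda` under a `dependencies` key is an assumption, since the diff does not show the enclosing key.

```python
import json

# Post-change values taken from the diff; the "dependencies" key is assumed.
manifest_text = """
{
  "name": "com.unity.ml-agents",
  "displayName": "ML Agents",
  "version": "0.15.0-preview",
  "dependencies": {
    "com.unity.barracuda": "0.6.1-preview"
  }
}
"""

manifest = json.loads(manifest_text)
package_version = manifest["version"]
barracuda_version = manifest["dependencies"]["com.unity.barracuda"]

print(f"{manifest['name']} {package_version} requires barracuda {barracuda_version}")
```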

# Limitations

## Unity SDK

### Headless Mode

If you enable Headless mode, you will not be able to collect visual observations
from your agents.

### Rendering Speed and Synchronization

Currently, the speed of the game physics can be increased to at most 100x real-time.
The Academy also moves in time with FixedUpdate() rather than Update(), so game
behavior implemented in Update() may be out of sync with the agent decision
making. See
[Execution Order of Event Functions](https://docs.unity3d.com/Manual/ExecutionOrder.html)
for more information.

You can control the frequency of Academy stepping by calling
`Academy.Instance.DisableAutomaticStepping()`, and then calling
`Academy.Instance.EnvironmentStep()` yourself.

### Unity Inference Engine Models

Currently, only models created with our trainers are supported for running
ML-Agents with a neural network behavior.

## Python API

### Python version

As of version 0.3, we no longer support Python 2.

See the package-specific Limitations pages:

* [Unity `com.unity.ml-agents` package](../com.unity.ml-agents/Documentation~/com.unity.ml-agents.md)
* [`mlagents` Python package](../ml-agents/README.md)
* [`mlagents_envs` Python package](../ml-agents-envs/README.md)
* [`gym_unity` Python package](../gym-unity/README.md)

-__version__ = "0.15.0.dev0"
+__version__ = "0.16.0.dev0"

-__version__ = "0.15.0.dev0"
+__version__ = "0.16.0.dev0"
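Version strings of this shape follow the PEP 440 convention in which a `.devN` suffix marks a development release that sorts before the corresponding final release. The sketch below shows one way to compare them using only the standard library. `parse_dev_version` is a hypothetical helper that handles only the `X.Y.Z` and `X.Y.Z.devN` forms seen here, not the full PEP 440 grammar.

```python
import re

_VERSION_RE = re.compile(r"(\d+)\.(\d+)\.(\d+)(?:\.dev(\d+))?")


def parse_dev_version(version: str):
    """Return a sortable key for 'X.Y.Z' or 'X.Y.Z.devN' strings."""
    match = _VERSION_RE.fullmatch(version)
    if match is None:
        raise ValueError(f"unsupported version string: {version!r}")
    major, minor, patch, dev = match.groups()
    release = (int(major), int(minor), int(patch))
    if dev is None:
        # Final releases sort after any dev release of the same version.
        return (release, 1, 0)
    return (release, 0, int(dev))


old = parse_dev_version("0.15.0.dev0")
new = parse_dev_version("0.16.0.dev0")
final = parse_dev_version("0.16.0")

print(old < new)    # True
print(new < final)  # True
```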

from mlagents_envs.side_channel.incoming_message import IncomingMessage  # noqa
from mlagents_envs.side_channel.outgoing_message import OutgoingMessage  # noqa

from mlagents_envs.side_channel.side_channel import SideChannel  # noqa

Some files were not shown because too many files changed in this diff.
