
refactored the quick start and installation guide, added faq

/develop-generalizationTraining-TrainerController


Current commit

0e7c88ee

22 files changed, with 2108 insertions and 194 deletions

Changes per file:

- 11 README.md
- 105 docs/Getting-Started-with-Balance-Ball.md
- 14 docs/Installation.md
- 2 docs/Learning-Environment-Best-Practices.md
- 2 docs/Learning-Environment-Design-Agents.md
- 8 docs/Learning-Environment-Examples.md
- 12 docs/Readme.md
- 12 docs/Training-ML-Agents.md
- 6 docs/Using-TensorFlow-Sharp-in-Unity.md
- 6 docs/localized/zh-CN/docs/Getting-Started-with-Balance-Ball.md
- 2 docs/localized/zh-CN/docs/Installation.md
- 3 docs/localized/zh-CN/docs/Readme.md
- 2 unity-environment/Assets/ML-Agents/Scripts/CoreBrainInternal.cs
- 152 docs/Basic-Guide.md
- 91 docs/FAQ.md
- 19 docs/Limitations.md
- 117 docs/images/imported-tensorflowsharp.png
- 60 docs/images/project-settings.png
- 1001 docs/images/running-a-pretrained-model.gif
- 191 docs/images/training-command-example.png
- 419 docs/images/training-running.png
- 67 docs/Limitations-and-Common-Issues.md

|

|||

# Basic Guide |

|||

|

|||

This guide will show you how to use a pretrained model in an example Unity environment, and show you how to train the model yourself. |

|||

|

|||

If you are not familiar with the [Unity Engine](https://unity3d.com/unity), |

|||

we highly recommend the [Roll-a-ball tutorial](https://unity3d.com/learn/tutorials/s/roll-ball-tutorial) to learn all the basic concepts of Unity. |

|||

|

|||

## Setting up ML-Agents within Unity |

|||

|

|||

To use ML-Agents within Unity, you first need to change some Unity settings. You also need the [TensorFlowSharp plugin](https://github.com/migueldeicaza/TensorFlowSharp) to use a pretrained model within Unity.

|||

|

|||

1. Launch Unity |

|||

2. On the Projects dialog, choose the **Open** option at the top of the window. |

|||

3. Using the file dialog that opens, locate the `unity-environment` folder within the ML-Agents project and click **Open**. |

|||

4. Go to **Edit** > **Project Settings** > **Player** |

|||

5. For **each** of the platforms you target (**PC, Mac and Linux Standalone**, **iOS** or **Android**):
    1. Open the **Other Settings** section.
    2. Set **Scripting Runtime Version** to **Experimental (.NET 4.6 Equivalent)**.
    3. In **Scripting Define Symbols**, add the flag `ENABLE_TENSORFLOW`. After typing in the flag name, press Enter.

|||

6. Go to **File** > **Save Project** |

|||

|

|||

|

|||

|

|||

[Download](https://s3.amazonaws.com/unity-ml-agents/0.3/TFSharpPlugin.unitypackage) the TensorFlowSharp plugin. Then import it into Unity by double clicking the downloaded file. You can check if it was successfully imported by checking the TensorFlow files in the Project window under **Assets** > **ML-Agents** > **Plugins** > **Computer**. |

|||

|

|||

**Note**: If you don't see anything under **Assets**, drag the `ml-agents/unity-environment/Assets/ML-Agents` folder under **Assets** within the Project window.

|||

|

|||

|

|||

|

|||

## Running a Pre-trained Model |

|||

|

|||

1. In the **Project** window, go to `Assets/ML-Agents/Examples/3DBall` folder and open the `3DBall` scene file. |

|||

2. In the **Hierarchy** window, select the **Ball3DBrain** child under the **Ball3DAcademy** GameObject to view its properties in the Inspector window. |

|||

3. On the **Ball3DBrain** object's **Brain** component, change the **Brain Type** to **Internal**. |

|||

4. In the **Project** window, locate the `Assets/ML-Agents/Examples/3DBall/TFModels` folder. |

|||

5. Drag the `3DBall` model file from the `TFModels` folder to the **Graph Model** field of the **Ball3DBrain** object's **Brain** component. |

|||

6. Click the **Play** button and you will see the platforms balance the balls using the pretrained model.

|||

|

|||

|

|||

|

|||

## Building an Example Environment |

|||

|

|||

The first step is to open the Unity scene containing the 3D Balance Ball |

|||

environment: |

|||

|

|||

1. Launch Unity. |

|||

2. On the Projects dialog, choose the **Open** option at the top of the window. |

|||

3. Using the file dialog that opens, locate the `unity-environment` folder |

|||

within the ML-Agents project and click **Open**. |

|||

4. In the **Project** window, navigate to the folder |

|||

`Assets/ML-Agents/Examples/3DBall/`. |

|||

5. Double-click the `3DBall` file to load the scene containing the Balance |

|||

Ball environment. |

|||

|

|||

|

|||

|

|||

Since we are going to build this environment to conduct training, we need to |

|||

set the brain used by the agents to **External**. This allows the agents to |

|||

communicate with the external training process when making their decisions. |

|||

|

|||

1. In the **Hierarchy** window, click the triangle icon next to the **Ball3DAcademy** object.

|||

2. Select its child object **Ball3DBrain**. |

|||

3. In the Inspector window, set **Brain Type** to **External**. |

|||

|

|||

|

|||

|

|||

Next, we want the scene we set up to play correctly when the training process launches our environment executable. This means:

|||

* The environment application runs in the background |

|||

* No dialogs require interaction |

|||

* The correct scene loads automatically |

|||

|

|||

1. Open Player Settings (menu: **Edit** > **Project Settings** > **Player**). |

|||

2. Under **Resolution and Presentation**: |

|||

- Ensure that **Run in Background** is Checked. |

|||

- Ensure that **Display Resolution Dialog** is set to Disabled. |

|||

3. Open the Build Settings window (menu: **File** > **Build Settings**).

|||

4. Choose your target platform. |

|||

- (optional) Select “Development Build” to |

|||

[log debug messages](https://docs.unity3d.com/Manual/LogFiles.html). |

|||

5. If any scenes are shown in the **Scenes in Build** list, make sure that the 3DBall Scene is the only one checked. (If the list is empty, then only the current scene is included in the build.)

|||

6. Click **Build**: |

|||

a. In the File dialog, navigate to the `python` folder in your ML-Agents |

|||

directory. |

|||

b. Assign a file name and click **Save**. |

|||

|

|||

|

|||

|

|||

Now that we have a Unity executable containing the simulation environment, we |

|||

can perform the training. You can ensure that your environment and the Python |

|||

API work as expected, by using the `python/Basics` |

|||

[Jupyter notebook](Background-Jupyter.md) introduced in the next section. |

|||

|

|||

## Using the Basics Jupyter Notebook |

|||

|

|||

The `python/Basics` [Jupyter notebook](Background-Jupyter.md) contains a |

|||

simple walkthrough of the functionality of the Python |

|||

API. It can also serve as a simple test that your environment is configured |

|||

correctly. Within `Basics`, be sure to set `env_name` to the name of the |

|||

Unity executable you built earlier. |

|||

|

|||

More information and documentation is provided in the |

|||

[Python API](Python-API.md) page. |
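Before opening the notebook, it can help to confirm that the executable you built is where `env_name` says it is. The sketch below is only an illustration and is not part of ML-Agents: `find_build` and the suffix list are hypothetical, and it assumes the build sits in the directory you search and carries one of the usual standalone suffixes.

```python
from pathlib import Path

# Suffixes commonly seen on Unity standalone builds (an assumed list; adjust as needed).
BUILD_SUFFIXES = (".app", ".exe", ".x86_64", ".x86")


def find_build(env_name, search_dir="."):
    """Return the first existing build that matches env_name, or None."""
    base = Path(search_dir) / env_name
    for suffix in BUILD_SUFFIXES:
        candidate = base.with_name(base.name + suffix)
        if candidate.exists():
            return candidate
    return None


if __name__ == "__main__":
    build = find_build("3DBall", search_dir=".")
    print(build if build else "No build named 3DBall found in this directory")
```

If the helper returns `None`, check the folder you chose in the **Build** step before setting `env_name` in `Basics`.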

|||

|

|||

## Training the Brain with Reinforcement Learning |

|||

|

|||

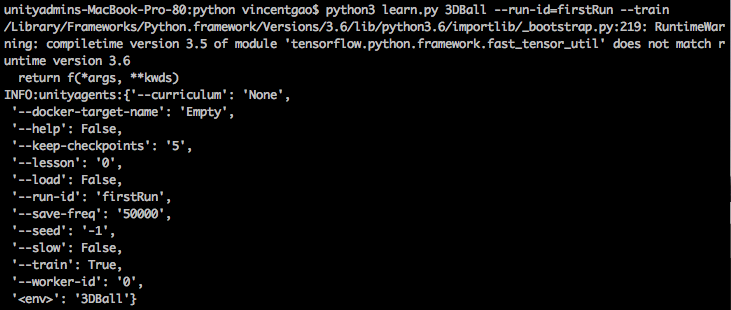

1. Open a command or terminal window. |

|||

2. Navigate to the folder where you installed ML-Agents.

|||

3. Change to the python directory. |

|||

4. Run `python3 learn.py <env_name> --run-id=<run-identifier> --train` |

|||

Where: |

|||

- `<env_name>` is the name and path to the executable you exported from Unity (without extension) |

|||

- `<run-identifier>` is a string used to separate the results of different training runs |

|||

- `--train` tells learn.py to run a training session (rather than inference)

|||

|

|||

For example, if you are training with a 3DBall executable you exported to the ml-agents/python directory, run: |

|||

|

|||

``` |

|||

python3 learn.py 3DBall --run-id=firstRun --train |

|||

``` |
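If you launch training from a script rather than by hand, the same command can be assembled as an argument list. This is a minimal sketch: `build_learn_command` is a hypothetical helper, and it uses only the positional `<env_name>` and the `--run-id` and `--train` options described above.

```python
import shlex
import sys


def build_learn_command(env_name, run_id, train=True, python=sys.executable):
    """Assemble the learn.py invocation described above as an argument list."""
    cmd = [python, "learn.py", env_name, f"--run-id={run_id}"]
    if train:
        # Without --train, learn.py runs inference instead of a training session.
        cmd.append("--train")
    return cmd


if __name__ == "__main__":
    cmd = build_learn_command("3DBall", "firstRun", python="python3")
    print(shlex.join(cmd))
    # To launch it from the python directory of your ML-Agents checkout:
    #   import subprocess
    #   subprocess.run(cmd, cwd="ml-agents/python", check=True)
```

Passing a list to `subprocess.run` avoids shell quoting problems when the environment path contains spaces.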

|||

|

|||

|

|||

|

|||

**Note**: If you're using Anaconda, don't forget to activate the ml-agents environment first. |

|||

|

|||

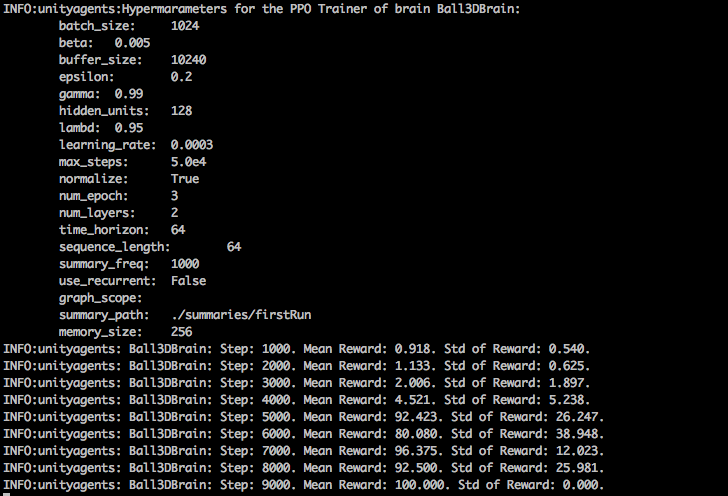

If learn.py runs correctly and starts training, you should see something like this:

|||

|

|||

|

|||

|

|||

You can press Ctrl+C to stop the training. Your trained model will be at `ml-agents/python/models/<run-identifier>/<env_name>_<run-identifier>.bytes`, which corresponds to your model's latest checkpoint. You can now embed this trained model into your internal brain by following the steps below, which are similar to the steps described [above](#running-a-pre-trained-model).

|||

|

|||

1. Move your model file into |

|||

`unity-environment/Assets/ML-Agents/Examples/3DBall/TFModels/`. |

|||

2. Open the Unity Editor, and select the **3DBall** scene as described above. |

|||

3. Select the **Ball3DBrain** object from the Scene hierarchy. |

|||

4. Change the **Brain Type** to **Internal**.

|||

5. Drag the `<env_name>_<run-identifier>.bytes` file from the Project window of the Editor |

|||

to the **Graph Model** placeholder in the **Ball3DBrain** inspector window. |

|||

6. Press the Play button at the top of the editor. |
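Step 1 above can be scripted. The sketch below only computes the source and destination paths from the pattern given in this guide. Both helpers are hypothetical, and they assume `env_name` is a bare name (no directory part) and that `root` points at your ML-Agents checkout.

```python
from pathlib import PurePosixPath


def trained_model_path(env_name, run_id, root="ml-agents"):
    """Where learn.py is described as saving the latest checkpoint."""
    return PurePosixPath(root) / "python" / "models" / run_id / f"{env_name}_{run_id}.bytes"


def tfmodels_destination(env_name, run_id, root="ml-agents"):
    """Where the 3DBall example expects model files inside the Unity project."""
    return (
        PurePosixPath(root)
        / "unity-environment" / "Assets" / "ML-Agents"
        / "Examples" / "3DBall" / "TFModels"
        / f"{env_name}_{run_id}.bytes"
    )


if __name__ == "__main__":
    src = trained_model_path("3DBall", "firstRun")
    dst = tfmodels_destination("3DBall", "firstRun")
    print(src)
    print(dst)
    # To actually move the file:
    #   import shutil
    #   shutil.move(str(src), str(dst))
```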

|||

|

|||

## Next Steps |

|||

|

|||

* For more information on ML-Agents, in addition to helpful background, check out the [ML-Agents Overview](ML-Agents-Overview.md) page. |

|||

* For a more detailed walk-through of our 3D Balance Ball environment, check out the [Getting Started](Getting-Started-with-Balance-Ball.md) page. |

|||

* For a "Hello World" introduction to creating your own learning environment, check out the [Making a New Learning Environment](Learning-Environment-Create-New.md) page. |

|||

* For a series of YouTube video tutorials, check out the [Machine Learning Agents PlayList](https://www.youtube.com/playlist?list=PLX2vGYjWbI0R08eWQkO7nQkGiicHAX7IX) page.

|||

|

|||

# Frequently Asked Questions |

|||

|

|||

|

|||

### Scripting Runtime Environment not set up correctly

|||

|

|||

If you haven't switched your scripting runtime version from .NET 3.5 to .NET 4.6, you will see an error message like this:

|||

|

|||

``` |

|||

error CS1061: Type `System.Text.StringBuilder' does not contain a definition for `Clear' and no extension method `Clear' of type `System.Text.StringBuilder' could be found. Are you missing an assembly reference? |

|||

``` |

|||

|

|||

This is because .NET 3.5 doesn't support the `Clear()` method of `StringBuilder`. Refer to [Setting Up ML-Agents Within Unity](Installation.md#setting-up-ml-agent-within-unity) for the solution.

|||

|

|||

### TensorFlowSharp flag not turned on

|||

|

|||

If you have already imported the TensorFlowSharp plugin but haven't set the `ENABLE_TENSORFLOW` flag in your scripting define symbols, you will see the following error message:

|||

|

|||

``` |

|||

You need to install and enable the TensorFlowSharp plugin in order to use the internal brain. |

|||

``` |

|||

|

|||

This error message occurs because the TensorFlowSharp plugin won't be usable without the `ENABLE_TENSORFLOW` flag. Refer to [Setting Up ML-Agents Within Unity](Installation.md#setting-up-ml-agent-within-unity) for the solution.

|||

|

|||

### TensorFlow epsilon placeholder error

|||

|

|||

If you have a graph placeholder set in the internal Brain inspector that is not present in the TensorFlow graph, you will see an error like this:

|||

|

|||

``` |

|||

UnityAgentsException: One of the Tensorflow placeholder could not be found. In brain <some_brain_name>, there are no FloatingPoint placeholder named <some_placeholder_name>. |

|||

``` |

|||

|

|||

Solution: For each of your Brain objects, find `Graph placeholders` and change its `size` to 0 to remove the `epsilon` placeholder.

|||

|

|||

Similarly, if the graph scope in the internal Brain inspector is not set correctly, you will see an error like this:

|||

|

|||

``` |

|||

UnityAgentsException: The node <Wrong_Graph_Scope>/action could not be found. Please make sure the graphScope <Wrong_Graph_Scope>/ is correct |

|||

``` |

|||

|

|||

Solution: When there are multiple brains, make sure your Graph Scope field matches the corresponding brain object name in your Hierarchy.

|||

|

|||

### Environment Permission Error |

|||

|

|||

If you directly import your Unity environment without building it in the |

|||

editor, you might need to give it additional permissions to execute it. |

|||

|

|||

If you receive such a permission error on macOS, run: |

|||

|

|||

`chmod -R 755 *.app` |

|||

|

|||

or on Linux: |

|||

|

|||

`chmod -R 755 *.x86_64` |

|||

|

|||

On Windows, follow these [instructions](https://technet.microsoft.com/en-us/library/cc754344(v=ws.11).aspx).
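On macOS and Linux, the same permission change can be applied from Python, for example in a setup script. This is a sketch of what `chmod -R 755` does, not an ML-Agents utility; `make_executable` is a hypothetical name, and the example path is a placeholder.

```python
import os
import stat

# rwxr-xr-x, the same mode as `chmod 755`.
MODE_755 = stat.S_IRWXU | stat.S_IRGRP | stat.S_IXGRP | stat.S_IROTH | stat.S_IXOTH


def make_executable(path):
    """Apply mode 755 to path and, if it is a directory, to everything below it."""
    os.chmod(path, MODE_755)
    for root, dirs, files in os.walk(path):
        for name in dirs + files:
            os.chmod(os.path.join(root, name), MODE_755)


if __name__ == "__main__":
    target = "3DBall.x86_64"  # placeholder: replace with your build
    if os.path.exists(target):
        make_executable(target)
```

A macOS `.app` build is a directory, which is why the recursive walk matters there; for a single Linux binary only the first `os.chmod` call has any effect.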

|||

|

|||

### Environment Connection Timeout |

|||

|

|||

If you are able to launch the environment from `UnityEnvironment` but |

|||

then receive a timeout error, there may be a number of possible causes. |

|||

* _Cause_: There may be no Brains in your environment which are set to `External`. In this case, the environment will not attempt to communicate with Python. _Solution_: Set the Brain(s) you wish to externally control through the Python API to `External` from the Unity Editor, and rebuild the environment.
* _Cause_: On macOS, the firewall may be preventing communication with the environment. _Solution_: Add the built environment binary to the list of exceptions on the firewall by following these [instructions](https://support.apple.com/en-us/HT201642).
* _Cause_: An error happened in the Unity Environment preventing communication. _Solution_: Look into the [log files](https://docs.unity3d.com/Manual/LogFiles.html) generated by the Unity Environment to figure out what error happened.

|||

|

|||

### Communication port {} still in use |

|||

|

|||

If you receive an exception `"Couldn't launch new environment because |

|||

communication port {} is still in use. "`, you can change the worker |

|||

number in the Python script when calling |

|||

|

|||

`UnityEnvironment(file_name=filename, worker_id=X)` |
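One way to pick a value for `worker_id` is to probe for a free port first. The sketch below assumes that the environment listens on a base port plus the worker number and that the base port is 5005; both are assumptions about the Python API, so check them against your installed version. `port_is_free` and `first_free_worker_id` are hypothetical helpers.

```python
import socket


def port_is_free(port, host="localhost"):
    """Return True if a TCP socket can currently be bound to (host, port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except (OSError, OverflowError):
            return False
    return True


def first_free_worker_id(base_port=5005, max_workers=16):
    """Return the lowest worker id whose assumed port is free, or None."""
    for worker_id in range(max_workers):
        if port_is_free(base_port + worker_id):
            return worker_id
    return None


if __name__ == "__main__":
    worker_id = first_free_worker_id()
    print("Suggested worker_id:", worker_id)
    # Then, for example:
    #   env = UnityEnvironment(file_name=filename, worker_id=worker_id)
```

The probe is only a hint: another process can still take the port between the check and the launch.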

|||

|

|||

### Mean reward : nan |

|||

|

|||

If you receive a message `Mean reward : nan` when attempting to train a |

|||

model using PPO, this is due to the episodes of the learning environment |

|||

not terminating. In order to address this, set `Max Steps` for either |

|||

the Academy or Agents within the Scene Inspector to a value greater |

|||

than 0. Alternatively, it is possible to manually set `done` conditions |

|||

for episodes from within scripts for custom episode-terminating events. |
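The reason for the `nan` can be seen in a simplified model of the statistic: a mean taken over completed episodes has nothing to average when no episode ever finishes. The function below is an illustration only and is not the trainer's actual code.

```python
import math


def mean_reward(completed_episode_rewards):
    """Average the total reward of episodes that have terminated."""
    if not completed_episode_rewards:
        # No episode has ended yet, so the mean is undefined.
        return math.nan
    return sum(completed_episode_rewards) / len(completed_episode_rewards)


if __name__ == "__main__":
    print(mean_reward([]))          # episodes never terminate
    print(mean_reward([1.0, 3.0]))  # two finished episodes
```

Setting `Max Steps` to a value greater than 0 guarantees that episodes end, so the list of completed episodes is no longer empty.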

|||

|

|||

# Limitations |

|||

|

|||

## Unity SDK |

|||

### Headless Mode |

|||

If you enable Headless mode, you will not be able to collect visual |

|||

observations from your agents. |

|||

|

|||

### Rendering Speed and Synchronization |

|||

Currently the speed of the game physics can only be increased to 100x |

|||

real-time. The Academy also moves in time with FixedUpdate() rather than |

|||

Update(), so game behavior implemented in Update() may be out of sync with the Agent decision making. See [Execution Order of Event Functions](https://docs.unity3d.com/Manual/ExecutionOrder.html) for more information. |
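To see why `Update()` logic can fall out of step, consider a rough model of a fixed-timestep loop. The sketch assumes a 0.02 s fixed timestep, about 60 rendered frames per second, and no cap on catch-up steps per frame (Unity's Maximum Allowed Timestep setting is ignored), so treat the numbers as illustrative. `fixed_steps_per_frame` is a hypothetical helper that uses integer microseconds to avoid rounding drift.

```python
def fixed_steps_per_frame(frames, frame_us=16_667, time_scale=100, fixed_step_us=20_000):
    """Count how many fixed-timestep ticks run during each rendered frame."""
    backlog_us = 0
    counts = []
    for _ in range(frames):
        # Each rendered frame advances game time by frame duration * time scale.
        backlog_us += frame_us * time_scale
        # Run as many whole fixed steps as fit; keep the remainder for the next frame.
        steps, backlog_us = divmod(backlog_us, fixed_step_us)
        counts.append(steps)
    return counts


if __name__ == "__main__":
    print(fixed_steps_per_frame(3, time_scale=1))    # [0, 1, 1]
    print(fixed_steps_per_frame(3, time_scale=100))  # [83, 83, 84]
```

In this model, a single `Update()` call at 100x corresponds to dozens of `FixedUpdate()` ticks, so anything implemented in `Update()` reacts far less often than the Academy steps.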

|||

|

|||

## Python API |

|||

|

|||

### Python version |

|||

As of version 0.3, we no longer support Python 2. |

|||

|

|||

### TensorFlow support

|||

Currently ML-Agents uses TensorFlow 1.4 due to the version of the TensorFlowSharp plugin we are using.

|||

docs/images/running-a-pretrained-model.gif (1001): file diff too large to display.

|

|||

# Limitations and Common Issues |

|||

|

|||

## Unity SDK |

|||

### Headless Mode |

|||

If you enable Headless mode, you will not be able to collect visual |

|||

observations from your agents. |

|||

|

|||

### Rendering Speed and Synchronization |

|||

Currently the speed of the game physics can only be increased to 100x |

|||

real-time. The Academy also moves in time with FixedUpdate() rather than |

|||

Update(), so game behavior tied to frame updates may be out of sync. |

|||

|

|||

## Python API |

|||

|

|||

### Python version |

|||

As of version 0.3, we no longer support Python 2. |

|||

|

|||

### Environment Permission Error |

|||

|

|||

If you directly import your Unity environment without building it in the |

|||

editor, you might need to give it additional permissions to execute it. |

|||

|

|||

If you receive such a permission error on macOS, run: |

|||

|

|||

`chmod -R 755 *.app` |

|||

|

|||

or on Linux: |

|||

|

|||

`chmod -R 755 *.x86_64` |

|||

|

|||

On Windows, you can find instructions |

|||

[here](https://technet.microsoft.com/en-us/library/cc754344(v=ws.11).aspx). |

|||

|

|||

### Environment Connection Timeout |

|||

|

|||

If you are able to launch the environment from `UnityEnvironment` but |

|||

then receive a timeout error, there may be a number of possible causes. |

|||

* _Cause_: There may be no Brains in your environment which are set to `External`. In this case, the environment will not attempt to communicate with Python. _Solution_: Set the Brain(s) you wish to externally control through the Python API to `External` from the Unity Editor, and rebuild the environment.
* _Cause_: On macOS, the firewall may be preventing communication with the environment. _Solution_: Add the built environment binary to the list of exceptions on the firewall by following instructions [here](https://support.apple.com/en-us/HT201642).
* _Cause_: An error happened in the Unity Environment preventing communication. _Solution_: Look into the [log files](https://docs.unity3d.com/Manual/LogFiles.html) generated by the Unity Environment to figure out what error happened.

|||

|

|||

### Communication port {} still in use |

|||

|

|||

If you receive an exception `"Couldn't launch new environment because |

|||

communication port {} is still in use. "`, you can change the worker |

|||

number in the Python script when calling |

|||

|

|||

`UnityEnvironment(file_name=filename, worker_id=X)` |

|||

|

|||

### Mean reward : nan |

|||

|

|||

If you receive a message `Mean reward : nan` when attempting to train a |

|||

model using PPO, this is due to the episodes of the learning environment |

|||

not terminating. In order to address this, set `Max Steps` for either |

|||

the Academy or Agents within the Scene Inspector to a value greater |

|||

than 0. Alternatively, it is possible to manually set `done` conditions |

|||

for episodes from within scripts for custom episode-terminating events. |

|||
