--- !u!104 &2

RenderSettings:

m_ObjectHideFlags: 0

serializedVersion: 8

serializedVersion: 9

m_Fog: 0

m_FogColor: {r: 0.5, g: 0.5, b: 0.5, a: 1}

m_FogMode: 3

m_ReflectionIntensity: 1

m_CustomReflection: {fileID: 0}

m_Sun: {fileID: 762086411}

m_IndirectSpecularColor: {r: 0.4465785, g: 0.49641252, b: 0.574817, a: 1}

m_IndirectSpecularColor: {r: 0.4465934, g: 0.49642956, b: 0.5748249, a: 1}

m_UseRadianceAmbientProbe: 0

--- !u!157 &3

LightmapSettings:

m_ObjectHideFlags: 0

m_EnableBakedLightmaps: 1

m_EnableRealtimeLightmaps: 1

m_LightmapEditorSettings:

serializedVersion: 9

serializedVersion: 10

m_TextureWidth: 1024

m_TextureHeight: 1024

m_AtlasSize: 1024

m_AO: 0

m_AOMaxDistance: 1

m_CompAOExponent: 1

m_PVRFilteringAtrousPositionSigmaDirect: 0.5

m_PVRFilteringAtrousPositionSigmaIndirect: 2

m_PVRFilteringAtrousPositionSigmaAO: 1

m_ShowResolutionOverlay: 1

m_LightingDataAsset: {fileID: 0}

m_UseShadowmask: 1

--- !u!196 &4

propertyPath: m_Name

value: Agent (1)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

m_PrefabParentObject: {fileID: 1644872085946016, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

m_PrefabInternal: {fileID: 103720292}

--- !u!114 &105498462

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 41e9bda8f3cf1492fa74926a530f6f70, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

m_EditorClassIdentifier:

broadcast: 1

continuousPlayerActions: []

discretePlayerActions: []

defaultAction: -1

brain: {fileID: 846768605}

--- !u!114 &169591833

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 8b23992c8eb17439887f5e944bf04a40, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)

m_EditorClassIdentifier:

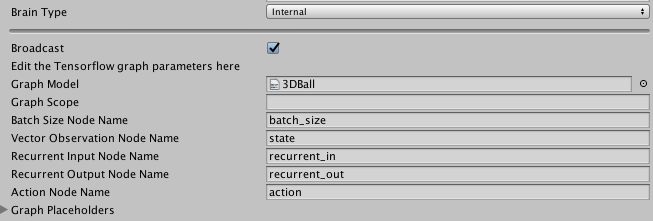

broadcast: 1

graphModel: {fileID: 4900000, guid: 8db6173148a6f4e7fa654ed627c88d7a, type: 3}

graphScope:

graphPlaceholders: []

BatchSizePlaceholderName: batch_size

VectorObservationPlacholderName: state

RecurrentInPlaceholderName: recurrent_in

RecurrentOutPlaceholderName: recurrent_out

VisualObservationPlaceholderName: []

ActionPlaceholderName: action

PreviousActionPlaceholderName: prev_action

brain: {fileID: 846768605}

--- !u!1001 &201192304

Prefab:

m_ObjectHideFlags: 0

propertyPath: brain

value:

objectReference: {fileID: 846768605}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (13)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (22)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

m_PrefabParentObject: {fileID: 1644872085946016, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

m_PrefabInternal: {fileID: 269521201}

--- !u!114 &327113840

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 41e9bda8f3cf1492fa74926a530f6f70, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

m_EditorClassIdentifier:

broadcast: 1

continuousPlayerActions: []

discretePlayerActions: []

defaultAction: -1

brain: {fileID: 846768605}

--- !u!1 &330647278

GameObject:

m_ObjectHideFlags: 0

m_MotionVectors: 1

m_LightProbeUsage: 1

m_ReflectionProbeUsage: 1

m_RenderingLayerMask: 4294967295

m_Materials:

- {fileID: 2100000, guid: 3736de91af62e4be7a3d8752592c6c61, type: 2}

m_StaticBatchInfo:

propertyPath: m_Name

value: Agent (20)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (31)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (24)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (12)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (5)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (9)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

m_PrefabParentObject: {fileID: 1644872085946016, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

m_PrefabInternal: {fileID: 547117383}

--- !u!114 &551611617

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 8b23992c8eb17439887f5e944bf04a40, type: 3}

m_Name: (Clone)(Clone)

m_EditorClassIdentifier:

broadcast: 1

graphModel: {fileID: 4900000, guid: 8db6173148a6f4e7fa654ed627c88d7a, type: 3}

graphScope:

graphPlaceholders:

- name: epsilon

valueType: 1

minValue: -1

maxValue: 1

BatchSizePlaceholderName: batch_size

StatePlacholderName: state

RecurrentInPlaceholderName: recurrent_in

RecurrentOutPlaceholderName: recurrent_out

ObservationPlaceholderName: []

ActionPlaceholderName: action

brain: {fileID: 846768605}

--- !u!1001 &591860098

Prefab:

m_ObjectHideFlags: 0

- target: {fileID: 1395682910799436, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

propertyPath: m_Name

value: Agent (3)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

propertyPath: m_Name

value: Agent (18)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (8)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (15)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (19)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

- component: {fileID: 846768604}

- component: {fileID: 846768605}

m_Layer: 0

m_Name: Brain

m_Name: Reacher Brain

m_TagString: Untagged

m_Icon: {fileID: 0}

m_NavMeshLayer: 0

m_Name:

m_EditorClassIdentifier:

brainParameters:

vectorObservationSize: 26

numStackedVectorObservations: 1

vectorObservationSize: 24

numStackedVectorObservations: 3

vectorActionSize: 4

cameraResolutions: []

vectorActionDescriptions:

-

vectorActionSpaceType: 1

vectorObservationSpaceType: 1

brainType: 0

brainType: 3

- {fileID: 327113840 }

- {fileID: 1028133595 }

- {fileID: 1146467767 }

- {fileID: 551611617 }

instanceID: 95334

- {fileID: 105498462 }

- {fileID: 949391681 }

- {fileID: 1042778401 }

- {fileID: 169591833 }

instanceID: 3972

--- !u!1001 &915173805

Prefab:

m_ObjectHideFlags: 0

propertyPath: m_Name

value: Agent (14)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

m_PrefabParentObject: {fileID: 1644872085946016, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

m_PrefabInternal: {fileID: 915173805}

--- !u!114 &1028133595

--- !u!114 &949391681

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 943466ab374444748a364f9d6c3e2fe2, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

--- !u!114 &1042778401

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 35813a1be64e144f887d7d5f15b963fa, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

m_EditorClassIdentifier:

brain: {fileID: 846768605}

--- !u!1001 &1065008966

Prefab:

m_ObjectHideFlags: 0

propertyPath: m_Name

value: Agent (11)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

m_PrefabParentObject: {fileID: 1644872085946016, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

m_PrefabInternal: {fileID: 1065008966}

--- !u!114 &1146467767

MonoBehaviour:

m_ObjectHideFlags: 0

m_PrefabParentObject: {fileID: 0}

m_PrefabInternal: {fileID: 0}

m_GameObject: {fileID: 0}

m_Enabled: 1

m_EditorHideFlags: 0

m_Script: {fileID: 11500000, guid: 35813a1be64e144f887d7d5f15b963fa, type: 3}

m_Name: (Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)(Clone)

m_EditorClassIdentifier:

brain: {fileID: 846768605}

--- !u!1001 &1147289133

Prefab:

m_ObjectHideFlags: 0

propertyPath: m_Name

value: Agent (7)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (26)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (16)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (27)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (17)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (28)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (6)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

trainingConfiguration:

width: 80

height: 80

qualityLevel: 1

qualityLevel: 0

timeScale: 100

targetFrameRate: 60

inferenceConfiguration:

propertyPath: m_Name

value: Agent (23)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

- target: {fileID: 1395682910799436, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

propertyPath: m_Name

value: Agent (21)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

propertyPath: m_Name

value: Agent (2)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (10)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (30)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (29)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

propertyPath: m_Name

value: Agent (4)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

m_IsPrefabParent: 0

- target: {fileID: 1395682910799436, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}

propertyPath: m_Name

value: Agent (25)

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.maxStep

value: 4000

objectReference: {fileID: 0}

- target: {fileID: 114955921823023820, guid: 2f13abef2db804f96bdc7692a1dcf2b2,

type: 2}

propertyPath: agentParameters.numberOfActionsBetweenDecisions

value: 4

objectReference: {fileID: 0}

m_RemovedComponents: []

m_ParentPrefab: {fileID: 100100000, guid: 2f13abef2db804f96bdc7692a1dcf2b2, type: 2}