Current commit

d68a833f

51 files changed, with 4087 insertions and 281 deletions

-

10.yamato/environments.yml

-

6.yamato/upm-ci-performance.yml

-

22README.md

-

4com.unity.perception/CHANGELOG.md

-

8com.unity.perception/Documentation~/DatasetCapture.md

-

118com.unity.perception/Documentation~/PerceptionCamera.md

-

50com.unity.perception/Documentation~/Randomization/Index.md

-

16com.unity.perception/Documentation~/Randomization/Parameters.md

-

6com.unity.perception/Documentation~/Randomization/RandomizerTags.md

-

20com.unity.perception/Documentation~/Randomization/Randomizers.md

-

8com.unity.perception/Documentation~/Randomization/Samplers.md

-

34com.unity.perception/Documentation~/Randomization/Scenarios.md

-

146com.unity.perception/Documentation~/Schema/Synthetic_Dataset_Schema.md

-

14com.unity.perception/Documentation~/Tutorial/Phase1.md

-

6com.unity.perception/Documentation~/Tutorial/Phase2.md

-

14com.unity.perception/Documentation~/Tutorial/Phase3.md

-

6com.unity.perception/Documentation~/Tutorial/TUTORIAL.md

-

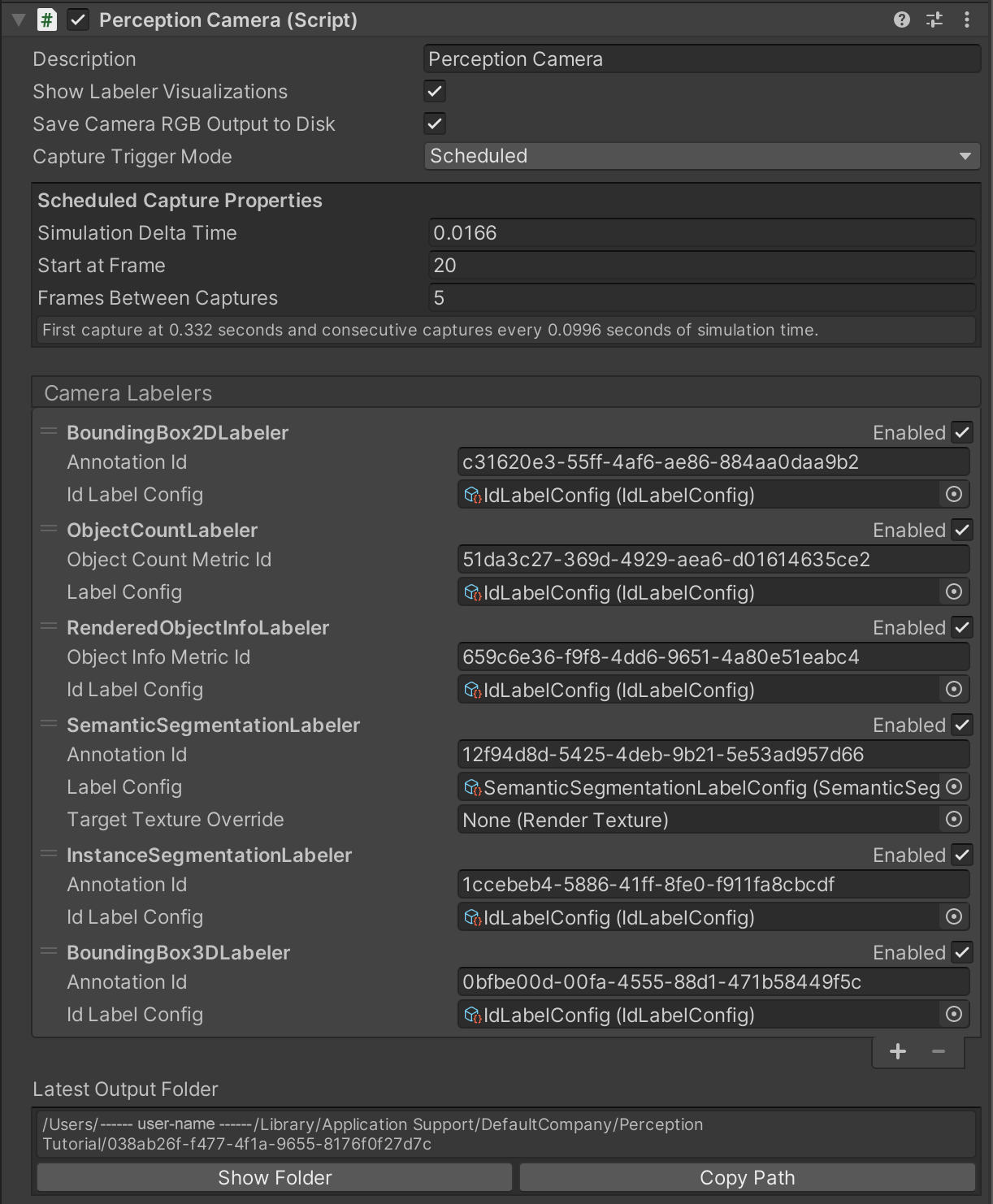

882com.unity.perception/Documentation~/images/PerceptionCameraFinished.png

-

22com.unity.perception/Editor/Randomization/Editors/RunInUnitySimulationWindow.cs

-

6com.unity.perception/Runtime/Randomization/Parameters/CategoricalParameter.cs

-

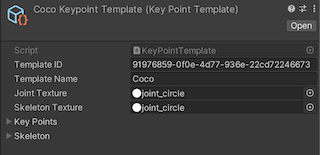

122com.unity.perception/Documentation~/images/keypoint_template_header.png

-

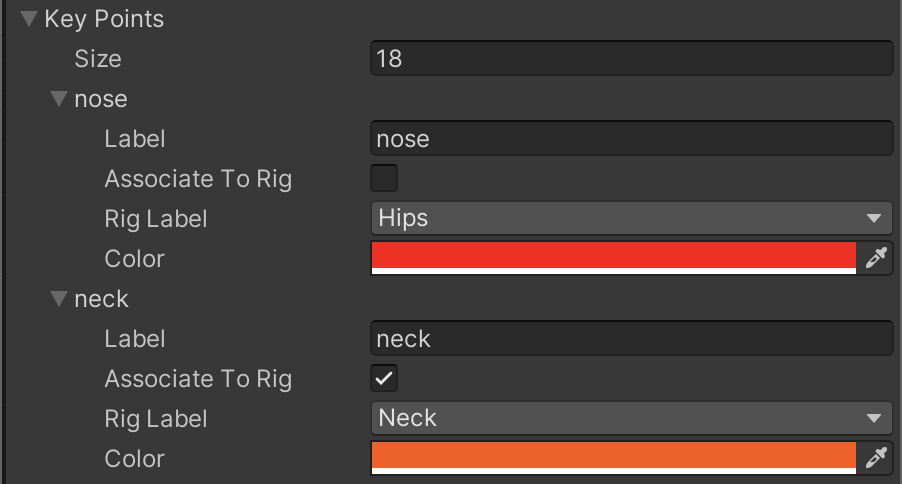

194com.unity.perception/Documentation~/images/keypoint_template_keypoints.png

-

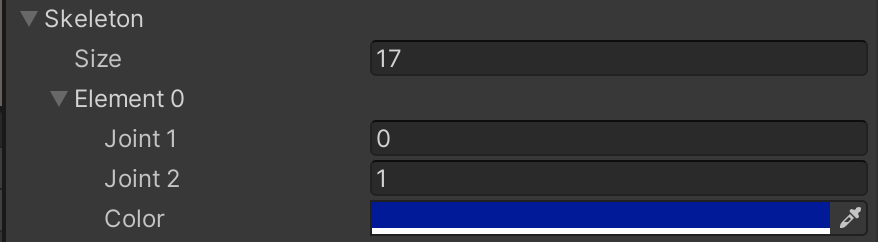

99com.unity.perception/Documentation~/images/keypoint_template_skeleton.png

-

32com.unity.perception/Editor/Randomization/Editors/RandomizerTagEditor.cs

-

3com.unity.perception/Editor/Randomization/Editors/RandomizerTagEditor.cs.meta

-

61com.unity.perception/Runtime/GroundTruth/Labelers/AnimationPoseLabel.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/AnimationPoseLabel.cs.meta

-

142com.unity.perception/Runtime/GroundTruth/Labelers/CocoKeypointTemplate.asset

-

8com.unity.perception/Runtime/GroundTruth/Labelers/CocoKeypointTemplate.asset.meta

-

34com.unity.perception/Runtime/GroundTruth/Labelers/JointLabel.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/JointLabel.cs.meta

-

437com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointLabeler.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointLabeler.cs.meta

-

80com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointTemplate.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointTemplate.cs.meta

-

109com.unity.perception/Runtime/GroundTruth/Labelers/Visualization/VisualizationHelper.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/Visualization/VisualizationHelper.cs.meta

-

72com.unity.perception/Runtime/GroundTruth/Resources/AnimationRandomizerController.controller

-

8com.unity.perception/Runtime/GroundTruth/Resources/AnimationRandomizerController.controller.meta

-

1001com.unity.perception/Runtime/GroundTruth/Resources/PlayerIdle.anim

-

8com.unity.perception/Runtime/GroundTruth/Resources/PlayerIdle.anim.meta

-

6com.unity.perception/Runtime/GroundTruth/Resources/joint_circle.png

-

108com.unity.perception/Runtime/GroundTruth/Resources/joint_circle.png.meta

-

10com.unity.perception/Runtime/Randomization/Parameters/ParameterTypes/CategorialParameters/AnimationClipParameter.cs

-

3com.unity.perception/Runtime/Randomization/Parameters/ParameterTypes/CategorialParameters/AnimationClipParameter.cs.meta

-

46com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/AnimationRandomizer.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/AnimationRandomizer.cs.meta

-

48com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Tags/AnimationRandomizerTag.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Tags/AnimationRandomizerTag.cs.meta

-

307com.unity.perception/Tests/Runtime/GroundTruthTests/KeyPointGroundTruthTests.cs

-

11com.unity.perception/Tests/Runtime/GroundTruthTests/KeyPointGroundTruthTests.cs.meta

com.unity.perception/Editor/Randomization/Editors/RandomizerTagEditor.cs

using UnityEditor;
using UnityEditor.UIElements;
using UnityEngine.Experimental.Perception.Randomization.Randomizers;
using UnityEngine.UIElements;

namespace UnityEngine.Experimental.Perception.Randomization.Editor
{
    [CustomEditor(typeof(RandomizerTag), true)]
    public class RandomizerTagEditor : UnityEditor.Editor
    {
        public override VisualElement CreateInspectorGUI()
        {
            var rootElement = new VisualElement();
            CreatePropertyFields(rootElement);
            return rootElement;
        }

        // Adds a bound PropertyField for every visible serialized property of the tag,
        // skipping the built-in "m_Script" reference.
        void CreatePropertyFields(VisualElement rootElement)
        {
            var iterator = serializedObject.GetIterator();
            iterator.NextVisible(true);
            do
            {
                if (iterator.name == "m_Script")
                    continue;
                var propertyField = new PropertyField(iterator.Copy());
                propertyField.Bind(serializedObject);
                rootElement.Add(propertyField);
            } while (iterator.NextVisible(false));
        }
    }
}

com.unity.perception/Editor/Randomization/Editors/RandomizerTagEditor.cs.meta

fileFormatVersion: 2
guid: 364d57cb71da4535b77257c294c850f7
timeCreated: 1611697363

com.unity.perception/Runtime/GroundTruth/Labelers/AnimationPoseLabel.cs

using System;
using System.Collections.Generic;
using System.Linq;

namespace UnityEngine.Perception.GroundTruth
{
    /// <summary>
    /// Record that maps a pose to a timestamp
    /// </summary>
    [Serializable]
    public class PoseTimestampRecord
    {
        /// <summary>
        /// The point within the clip at which the pose starts, as a normalized value from 0 (beginning) to 1 (end)
        /// </summary>
        [Tooltip("The point within the clip at which the pose starts, as a normalized value from 0 (beginning) to 1 (end)")]
        public float startOffsetPercent;
        /// <summary>
        /// The label to use for any captures inside of this time period
        /// </summary>
        public string poseLabel;
    }

    /// <summary>
    /// The animation pose label is a mapping file that maps a time range in an animation clip to a ground truth
    /// pose. Each timestamp record is defined by a pose label and a normalized start offset. The timestamp records
    /// are order dependent and build on the previous entries: a record's pose applies from its start offset until
    /// the start offset of the next record. For example, with records at 0 ("standing") and 0.5 ("sitting"), every
    /// point in the first half of the clip is labeled "standing" and every point in the second half "sitting".
    /// The final record covers whatever time is left over in the clip.
    /// </summary>
    [CreateAssetMenu(fileName = "AnimationPoseTimestamp", menuName = "Perception/Animation Pose Timestamps")]
    public class AnimationPoseLabel : ScriptableObject
    {
        /// <summary>
        /// The animation clip used for all of the timestamps
        /// </summary>
        public AnimationClip animationClip;
        /// <summary>
        /// The list of timestamps, order dependent
        /// </summary>
        public List<PoseTimestampRecord> timestamps;

        /// <summary>
        /// Retrieves the pose for the clip at the given time.
        /// </summary>
        /// <param name="time">The normalized time within the clip, from 0 (beginning) to 1 (end)</param>
        /// <returns>The pose for the passed-in time, or "unset" if the time is outside [0, 1] or no timestamps are defined</returns>
        public string GetPoseAtTime(float time)
        {
            if (time < 0 || time > 1) return "unset";
            if (timestamps == null || timestamps.Count == 0) return "unset";

            // Stop at the first record (after the first one) that starts later than the
            // requested time; the pose in effect is the one defined by the record before it.
            var i = 1;
            for (; i < timestamps.Count; i++)
            {
                if (timestamps[i].startOffsetPercent > time) break;
            }

            return timestamps[i - 1].poseLabel;
        }
    }
}

com.unity.perception/Runtime/GroundTruth/Labelers/AnimationPoseLabel.cs.meta

fileFormatVersion: 2
guid: 4c69656f5dd14516a3a18e42b3b43a4e
timeCreated: 1611270313
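
The order-dependent lookup that `AnimationPoseLabel.GetPoseAtTime` performs can be exercised without Unity. The following is a minimal sketch, assuming a plain list in place of the serialized `timestamps` field; `PoseRecord` and `PoseLookup` are hypothetical names used only for illustration and are not part of the Perception package.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for PoseTimestampRecord; not part of the Perception package.
public sealed class PoseRecord
{
    public PoseRecord(float startOffset, string label)
    {
        StartOffset = startOffset;
        Label = label;
    }

    public float StartOffset { get; }   // normalized 0..1, like startOffsetPercent
    public string Label { get; }        // like poseLabel
}

public static class PoseLookup
{
    // Same algorithm as GetPoseAtTime: scan from the second record and stop at the
    // first one that starts after `time`; the record before it is the active pose.
    // With ascending offsets this is the last record starting at or before `time`.
    public static string GetPoseAtTime(IReadOnlyList<PoseRecord> records, float time)
    {
        if (time < 0 || time > 1) return "unset";
        if (records == null || records.Count == 0) return "unset";

        var i = 1;
        for (; i < records.Count; i++)
        {
            if (records[i].StartOffset > time) break;
        }

        return records[i - 1].Label;
    }
}
```

With the records `(0, "standing")` and `(0.5, "sitting")`, a time of 0.25 yields "standing", 0.5 and 0.75 yield "sitting", and 1.5 yields "unset" because it lies outside the normalized range.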

com.unity.perception/Runtime/GroundTruth/Labelers/CocoKeypointTemplate.asset

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!114 &11400000
MonoBehaviour:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_GameObject: {fileID: 0}
  m_Enabled: 1
  m_EditorHideFlags: 0
  m_Script: {fileID: 11500000, guid: 37a7d6f1a40c45a2981a6291f0d03337, type: 3}
  m_Name: CocoKeypointTemplate
  m_EditorClassIdentifier: 
  templateName: Coco
  jointTexture: {fileID: 2800000, guid: e381cbaaf29614168bafc8f7ec5dbfe9, type: 3}
  skeletonTexture: {fileID: 2800000, guid: e381cbaaf29614168bafc8f7ec5dbfe9, type: 3}
  keyPoints:
  - label: nose
    associateToRig: 0
    rigLabel: 0
    color: {r: 1, g: 0, b: 0, a: 1}
  - label: neck
    associateToRig: 1
    rigLabel: 9
    color: {r: 1, g: 0.3397382, b: 0, a: 1}
  - label: right_shoulder
    associateToRig: 1
    rigLabel: 14
    color: {r: 1, g: 0.5694697, b: 0, a: 1}
  - label: right_elbow
    associateToRig: 1
    rigLabel: 16
    color: {r: 1, g: 0.8258381, b: 0, a: 1}
  - label: right_wrist
    associateToRig: 1
    rigLabel: 18
    color: {r: 0.6454141, g: 1, b: 0, a: 1}
  - label: left_shoulder
    associateToRig: 1
    rigLabel: 13
    color: {r: 0.33125544, g: 1, b: 0, a: 1}
  - label: left_elbow
    associateToRig: 1
    rigLabel: 15
    color: {r: 0.04907012, g: 1, b: 0, a: 1}
  - label: left_wrist
    associateToRig: 1
    rigLabel: 17
    color: {r: 0, g: 1, b: 0.16702724, a: 1}
  - label: right_hip
    associateToRig: 1
    rigLabel: 2
    color: {r: 0, g: 1, b: 0.36656523, a: 1}
  - label: right_knee
    associateToRig: 1
    rigLabel: 4
    color: {r: 0, g: 1, b: 0.58708096, a: 1}
  - label: right_ankle
    associateToRig: 1
    rigLabel: 6
    color: {r: 0, g: 1, b: 0.7695224, a: 1}
  - label: left_hip
    associateToRig: 1
    rigLabel: 1
    color: {r: 0, g: 1, b: 1, a: 1}
  - label: left_knee
    associateToRig: 1
    rigLabel: 3
    color: {r: 0, g: 0.63836884, b: 1, a: 1}
  - label: left_ankle
    associateToRig: 1
    rigLabel: 5
    color: {r: 0, g: 0.29786587, b: 1, a: 1}
  - label: right_eye
    associateToRig: 1
    rigLabel: 22
    color: {r: 0.45002556, g: 0, b: 1, a: 1}
  - label: left_eye
    associateToRig: 1
    rigLabel: 21
    color: {r: 0.9471822, g: 0, b: 1, a: 1}
  - label: right_ear
    associateToRig: 0
    rigLabel: 22
    color: {r: 1, g: 0, b: 0.6039734, a: 1}
  - label: left_ear
    associateToRig: 0
    rigLabel: 21
    color: {r: 1, g: 0, b: 0.11927748, a: 1}
  skeleton:
  - joint1: 0
    joint2: 1
    color: {r: 0.014684939, g: 0.05894964, b: 0.6226415, a: 1}
  - joint1: 1
    joint2: 2
    color: {r: 0.5283019, g: 0, b: 0.074745394, a: 1}
  - joint1: 2
    joint2: 3
    color: {r: 0.7830189, g: 0.32108742, b: 0.07756319, a: 1}
  - joint1: 3
    joint2: 4
    color: {r: 0.9622642, g: 0.85543716, b: 0, a: 1}
  - joint1: 1
    joint2: 5
    color: {r: 0.7019608, g: 0.20392157, b: 0.11461401, a: 1}
  - joint1: 5
    joint2: 6
    color: {r: 0.3374826, g: 0.9056604, b: 0.26059094, a: 1}
  - joint1: 6
    joint2: 7
    color: {r: 0.04214221, g: 0.4811321, b: 0.03404236, a: 1}
  - joint1: 1
    joint2: 8
    color: {r: 0, g: 0.764151, b: 0.22962166, a: 1}
  - joint1: 8
    joint2: 9
    color: {r: 0, g: 1, b: 0.3301921, a: 1}
  - joint1: 9
    joint2: 10
    color: {r: 0, g: 0.9433962, b: 0.71313965, a: 1}
  - joint1: 1
    joint2: 11
    color: {r: 0, g: 1, b: 1, a: 1}
  - joint1: 11
    joint2: 12
    color: {r: 0, g: 0.38122815, b: 0.9433962, a: 1}
  - joint1: 12
    joint2: 13
    color: {r: 0.20773989, g: 0, b: 0.7169812, a: 1}
  - joint1: 0
    joint2: 14
    color: {r: 1, g: 0, b: 0.88550186, a: 1}
  - joint1: 0
    joint2: 15
    color: {r: 1, g: 0, b: 0.81438303, a: 1}
  - joint1: 16
    joint2: 14
    color: {r: 0.5743165, g: 0, b: 1, a: 1}
  - joint1: 17
    joint2: 15
    color: {r: 0.8962264, g: 0, b: 0.12766689, a: 1}

com.unity.perception/Runtime/GroundTruth/Labelers/CocoKeypointTemplate.asset.meta

fileFormatVersion: 2
guid: a29b79d8ce98945a0855b1addec08d86
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 11400000
  userData: 
  assetBundleName: 
  assetBundleVariant: 
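
In this template, `nose`, `right_ear` and `left_ear` have `associateToRig: 0`, so they only become present when a `JointLabel` supplies them, and `OnVisualize` in `KeyPointLabeler.cs` draws a bone only when both of its endpoints have a non-zero state. The sketch below copies the bone index pairs from the `skeleton` section above and applies that same check to an array of keypoint states, to show which connections would be drawn. `SkeletonFilter` is a hypothetical name; the real labeler reads the pairs from the `KeyPointTemplate` asset rather than from a hard-coded array.

```csharp
using System.Collections.Generic;

public static class SkeletonFilter
{
    // Bone index pairs copied from the skeleton section of CocoKeypointTemplate.asset.
    public static readonly (int Joint1, int Joint2)[] CocoBones =
    {
        (0, 1), (1, 2), (2, 3), (3, 4), (1, 5), (5, 6), (6, 7), (1, 8), (8, 9),
        (9, 10), (1, 11), (11, 12), (12, 13), (0, 14), (0, 15), (16, 14), (17, 15)
    };

    // Returns the bones whose endpoints are both present (state != 0),
    // which is the condition OnVisualize checks before drawing a line.
    public static List<(int, int)> DrawableBones(int[] states)
    {
        var result = new List<(int, int)>();
        foreach (var (j1, j2) in CocoBones)
        {
            if (states[j1] != 0 && states[j2] != 0) result.Add((j1, j2));
        }
        return result;
    }
}
```

For a rigged character without any `JointLabel`, indices 0, 16 and 17 stay at state 0, so the five bones touching the nose and ears are skipped and 12 of the 17 connections are drawn.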

com.unity.perception/Runtime/GroundTruth/Labelers/JointLabel.cs

using System;
using System.Collections.Generic;
using UnityEngine;

namespace UnityEngine.Perception.GroundTruth
{
    /// <summary>
    /// Label to designate a custom joint/keypoint. These are needed to add body
    /// parts to a humanoid model that are not contained in its <see cref="Animator"/> <see cref="Avatar"/>.
    /// </summary>
    public class JointLabel : MonoBehaviour
    {
        /// <summary>
        /// Maps this joint to a joint in a <see cref="KeyPointTemplate"/>.
        /// </summary>
        [Serializable]
        public class TemplateData
        {
            /// <summary>
            /// The <see cref="KeyPointTemplate"/> that defines this joint.
            /// </summary>
            public KeyPointTemplate template;
            /// <summary>
            /// The name of the joint; must match the label of a keypoint in <see cref="template"/>.
            /// </summary>
            public string label;
        }

        /// <summary>
        /// List of all of the templates that this joint can be mapped to.
        /// </summary>
        public List<TemplateData> templateInformation;
    }
}

com.unity.perception/Runtime/GroundTruth/Labelers/JointLabel.cs.meta

fileFormatVersion: 2
guid: 8cf4fa374b134b1680755f8280ae8e7d
timeCreated: 1610577744
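
`KeyPointLabeler` resolves each `JointLabel` to a template index by taking the `templateInformation` entry whose `template` is the active template and looking up that entry's `label` among the template's keypoint names. A minimal sketch of that lookup, assuming a template name string in place of the `KeyPointTemplate` object reference and a tuple sequence in place of `templateInformation`; the class `JointLookup` is hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class JointLookup
{
    // Simplified version of TryToGetTemplateIndexForJoint: the template is identified by
    // name (an assumption; the labeler compares object references), and each joint entry
    // is a (TemplateName, Label) pair standing in for JointLabel.TemplateData.
    public static bool TryGetTemplateIndex(
        string activeTemplateName,
        string[] templateKeypointLabels,
        IEnumerable<(string TemplateName, string Label)> jointEntries,
        out int index)
    {
        index = -1;
        foreach (var entry in jointEntries)
        {
            if (entry.TemplateName != activeTemplateName) continue;

            // First keypoint whose label matches, as in the original nested loop.
            var i = Array.IndexOf(templateKeypointLabels, entry.Label);
            if (i >= 0)
            {
                index = i;
                return true;
            }
        }
        return false;
    }
}
```

For example, a `JointLabel` placed at a character's nose with the entry `("Coco", "nose")` resolves to index 0 of the Coco template, which is how the labeler fills keypoints that have `associateToRig: 0`.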

com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointLabeler.cs

using System;
using System.Collections.Generic;
using System.Linq;
using Unity.Collections;
using Unity.Entities;

namespace UnityEngine.Perception.GroundTruth
{
    /// <summary>
    /// Produces keypoint annotations for a humanoid model. This labeler supports generic
    /// <see cref="KeyPointTemplate"/>s. Template values are mapped to the rigged
    /// <see cref="Animator"/> <seealso cref="Avatar"/>. Custom joints can be
    /// created by applying <see cref="JointLabel"/> to empty game objects at a body
    /// part's location.
    /// </summary>
    [Serializable]
    public sealed class KeyPointLabeler : CameraLabeler
    {
        /// <summary>
        /// The active keypoint template. Required to annotate keypoint data.
        /// </summary>
        public KeyPointTemplate activeTemplate;

        /// <inheritdoc/>
        public override string description
        {
            get => "Produces keypoint annotations for all visible labeled objects that have a humanoid animation avatar component.";
            protected set { }
        }

        ///<inheritdoc/>
        protected override bool supportsVisualization => true;

        // ReSharper disable MemberCanBePrivate.Global
        /// <summary>
        /// The GUID to associate with the annotations produced by this labeler.
        /// </summary>
        public string annotationId = "8b3ef246-daa7-4dd5-a0e8-a943f6e7f8c2";
        /// <summary>
        /// The <see cref="IdLabelConfig"/> which associates objects with labels.
        /// </summary>
        public IdLabelConfig idLabelConfig;
        // ReSharper restore MemberCanBePrivate.Global

        AnnotationDefinition m_AnnotationDefinition;
        EntityQuery m_EntityQuery;
        Texture2D m_MissingTexture;

        /// <summary>
        /// Action that gets triggered when a new frame of key points is computed.
        /// </summary>
        public event Action<List<KeyPointEntry>> KeyPointsComputed;

        /// <summary>
        /// Creates a new key point labeler. This constructor creates a labeler that
        /// is not valid until a <see cref="IdLabelConfig"/> and <see cref="KeyPointTemplate"/>
        /// are assigned.
        /// </summary>
        public KeyPointLabeler() { }

        /// <summary>
        /// Creates a new key point labeler.
        /// </summary>
        /// <param name="config">The Id label config for the labeler</param>
        /// <param name="template">The active keypoint template</param>
        public KeyPointLabeler(IdLabelConfig config, KeyPointTemplate template)
        {
            this.idLabelConfig = config;
            this.activeTemplate = template;
        }

        /// <summary>
        /// Array of animation pose labels which map animation clip times to ground truth pose labels.
        /// </summary>
        public AnimationPoseLabel[] poseStateConfigs;

        /// <inheritdoc/>
        protected override void Setup()
        {
            if (idLabelConfig == null)
                throw new InvalidOperationException("KeyPointLabeler's idLabelConfig field must be assigned");

            // TemplateToJson and ProcessEntity dereference the template, so fail early with a clear message
            if (activeTemplate == null)
                throw new InvalidOperationException("KeyPointLabeler's activeTemplate field must be assigned");

            m_AnnotationDefinition = DatasetCapture.RegisterAnnotationDefinition("keypoints", new []{TemplateToJson(activeTemplate)},
                "pixel coordinates of keypoints in a model, along with skeletal connectivity data", id: new Guid(annotationId));

            m_EntityQuery = World.DefaultGameObjectInjectionWorld.EntityManager.CreateEntityQuery(typeof(Labeling), typeof(GroundTruthInfo));

            m_KeyPointEntries = new List<KeyPointEntry>();

            // Texture to use in case the template does not contain a texture for the joints or the skeletal connections
            m_MissingTexture = new Texture2D(1, 1);

            m_KnownStatus = new Dictionary<uint, CachedData>();
        }

        /// <inheritdoc/>
        protected override void OnBeginRendering()
        {
            var reporter = perceptionCamera.SensorHandle.ReportAnnotationAsync(m_AnnotationDefinition);

            var entities = m_EntityQuery.ToEntityArray(Allocator.TempJob);
            var entityManager = World.DefaultGameObjectInjectionWorld.EntityManager;

            m_KeyPointEntries.Clear();

            foreach (var entity in entities)
            {
                ProcessEntity(entityManager.GetComponentObject<Labeling>(entity));
            }

            entities.Dispose();

            KeyPointsComputed?.Invoke(m_KeyPointEntries);
            reporter.ReportValues(m_KeyPointEntries);
        }

        // ReSharper disable InconsistentNaming
        // ReSharper disable NotAccessedField.Global
        // ReSharper disable NotAccessedField.Local
        /// <summary>
        /// Record storing all of the keypoint data of a labeled gameobject.
        /// </summary>
        [Serializable]
        public class KeyPointEntry
        {
            /// <summary>
            /// The label id of the entity
            /// </summary>
            public int label_id;
            /// <summary>
            /// The instance id of the entity
            /// </summary>
            public uint instance_id;
            /// <summary>
            /// The GUID of the template that the points are based on
            /// </summary>
            public string template_guid;
            /// <summary>
            /// Pose ground truth for the current set of keypoints
            /// </summary>
            public string pose = "unset";
            /// <summary>
            /// Array of all of the keypoints
            /// </summary>
            public KeyPoint[] keypoints;
        }

        /// <summary>
        /// The values of a specific keypoint
        /// </summary>
        [Serializable]
        public class KeyPoint
        {
            /// <summary>
            /// The index of the keypoint in the template file
            /// </summary>
            public int index;
            /// <summary>
            /// The keypoint's x-coordinate pixel location
            /// </summary>
            public float x;
            /// <summary>
            /// The keypoint's y-coordinate pixel location
            /// </summary>
            public float y;
            /// <summary>
            /// The state of the point: 0 = not present; any non-zero value means the keypoint is present.
            /// This labeler writes 2 for points read from the animator rig and 1 for points supplied by a <see cref="JointLabel"/>.
            /// </summary>
            public int state;
        }
        // ReSharper restore InconsistentNaming
        // ReSharper restore NotAccessedField.Global
        // ReSharper restore NotAccessedField.Local

        // Converts a coordinate from world space into pixel space. WorldToScreenPoint uses a
        // bottom-left origin, so y is flipped to give a top-left origin.
        Vector3 ConvertToScreenSpace(Vector3 worldLocation)
        {
            var pt = perceptionCamera.attachedCamera.WorldToScreenPoint(worldLocation);
            pt.y = Screen.height - pt.y;
            return pt;
        }

        List<KeyPointEntry> m_KeyPointEntries;

        struct CachedData
        {
            public bool status;
            public Animator animator;
            public KeyPointEntry keyPoints;
            public List<(JointLabel, int)> overrides;
        }

        Dictionary<uint, CachedData> m_KnownStatus;

        bool TryToGetTemplateIndexForJoint(KeyPointTemplate template, JointLabel joint, out int index)
        {
            index = -1;

            foreach (var jointTemplate in joint.templateInformation.Where(jointTemplate => jointTemplate.template == template))
            {
                for (var i = 0; i < template.keyPoints.Length; i++)
                {
                    if (template.keyPoints[i].label == jointTemplate.label)
                    {
                        index = i;
                        return true;
                    }
                }
            }

            return false;
        }

        bool DoesTemplateContainJoint(JointLabel jointLabel)
        {
            foreach (var template in jointLabel.templateInformation)
            {
                if (template.template == activeTemplate)
                {
                    if (activeTemplate.keyPoints.Any(i => i.label == template.label))
                    {
                        return true;
                    }
                }
            }

            return false;
        }

        void ProcessEntity(Labeling labeledEntity)
        {
            // Cache the data of a labeled game object the first time we see it; this saves
            // work on every later frame. Also checks whether a labeled game object can be annotated.
            if (!m_KnownStatus.ContainsKey(labeledEntity.instanceId))
            {
                var cached = new CachedData()
                {
                    status = false,
                    animator = null,
                    keyPoints = new KeyPointEntry(),
                    overrides = new List<(JointLabel, int)>()
                };

                if (idLabelConfig.TryGetLabelEntryFromInstanceId(labeledEntity.instanceId, out var labelEntry))
                {
                    var entityGameObject = labeledEntity.gameObject;

                    cached.keyPoints.instance_id = labeledEntity.instanceId;
                    cached.keyPoints.label_id = labelEntry.id;
                    cached.keyPoints.template_guid = activeTemplate.templateID.ToString();

                    cached.keyPoints.keypoints = new KeyPoint[activeTemplate.keyPoints.Length];
                    for (var i = 0; i < cached.keyPoints.keypoints.Length; i++)
                    {
                        cached.keyPoints.keypoints[i] = new KeyPoint { index = i, state = 0 };
                    }

                    var animator = entityGameObject.transform.GetComponentInChildren<Animator>();
                    if (animator != null)
                    {
                        cached.animator = animator;
                        cached.status = true;
                    }

                    foreach (var joint in entityGameObject.transform.GetComponentsInChildren<JointLabel>())
                    {
                        if (TryToGetTemplateIndexForJoint(activeTemplate, joint, out var idx))
                        {
                            cached.overrides.Add((joint, idx));
                            cached.status = true;
                        }
                    }
                }

                m_KnownStatus[labeledEntity.instanceId] = cached;
            }

            var cachedData = m_KnownStatus[labeledEntity.instanceId];

            if (cachedData.status)
            {
                var animator = cachedData.animator;
                var keyPoints = cachedData.keyPoints.keypoints;

                // Go through all of the rig keypoints and get their location. An object can be
                // annotated through JointLabels alone, so the animator may be null here.
                for (var i = 0; i < activeTemplate.keyPoints.Length; i++)
                {
                    var pt = activeTemplate.keyPoints[i];
                    if (pt.associateToRig && animator != null)
                    {
                        var bone = animator.GetBoneTransform(pt.rigLabel);
                        if (bone != null)
                        {
                            var loc = ConvertToScreenSpace(bone.position);
                            keyPoints[i].index = i;
                            keyPoints[i].x = loc.x;
                            keyPoints[i].y = loc.y;
                            keyPoints[i].state = 2;
                        }
                    }
                }

                // Go through all of the additional or override points defined by joint labels and get
                // their locations
                foreach (var (joint, idx) in cachedData.overrides)
                {
                    var loc = ConvertToScreenSpace(joint.transform.position);
                    keyPoints[idx].index = idx;
                    keyPoints[idx].x = loc.x;
                    keyPoints[idx].y = loc.y;
                    keyPoints[idx].state = 1;
                }

                cachedData.keyPoints.pose = "unset";

                if (cachedData.animator != null)
                {
                    cachedData.keyPoints.pose = GetPose(cachedData.animator);
                }

                m_KeyPointEntries.Add(cachedData.keyPoints);
            }
        }

        string GetPose(Animator animator)
        {
            var info = animator.GetCurrentAnimatorClipInfo(0);

            if (info != null && info.Length > 0)
            {
                var clip = info[0].clip;
                // normalizedTime runs from 0 to 1 over one playthrough of the state; for looping
                // states its integer part counts completed loops, and GetPoseAtTime returns
                // "unset" for any value above 1
                var timeOffset = animator.GetCurrentAnimatorStateInfo(0).normalizedTime;

                if (poseStateConfigs != null)
                {
                    foreach (var p in poseStateConfigs)
                    {
                        if (p.animationClip == clip)
                        {
                            return p.GetPoseAtTime(timeOffset);
                        }
                    }
                }
            }

            return "unset";
        }

        /// <inheritdoc/>
        protected override void OnVisualize()
        {
            var jointTexture = activeTemplate.jointTexture;
            if (jointTexture == null) jointTexture = m_MissingTexture;

            var skeletonTexture = activeTemplate.skeletonTexture;
            if (skeletonTexture == null) skeletonTexture = m_MissingTexture;

            foreach (var entry in m_KeyPointEntries)
            {
                foreach (var bone in activeTemplate.skeleton)
                {
                    var joint1 = entry.keypoints[bone.joint1];
                    var joint2 = entry.keypoints[bone.joint2];

                    if (joint1.state != 0 && joint2.state != 0)
                    {
                        VisualizationHelper.DrawLine(joint1.x, joint1.y, joint2.x, joint2.y, bone.color, 8, skeletonTexture);
                    }
                }

                foreach (var keypoint in entry.keypoints)
                {
                    if (keypoint.state != 0)
                        VisualizationHelper.DrawPoint(keypoint.x, keypoint.y, activeTemplate.keyPoints[keypoint.index].color, 8, jointTexture);
                }
            }
        }

        // ReSharper disable InconsistentNaming
        // ReSharper disable NotAccessedField.Local
        [Serializable]
        struct JointJson
        {
            public string label;
            public int index;
        }

        [Serializable]
        struct SkeletonJson
        {
            public int joint1;
            public int joint2;
        }

        [Serializable]
        struct KeyPointJson
        {
            public string template_id;
            public string template_name;
            public JointJson[] key_points;
            public SkeletonJson[] skeleton;
        }
        // ReSharper restore InconsistentNaming
        // ReSharper restore NotAccessedField.Local

        KeyPointJson TemplateToJson(KeyPointTemplate input)
        {
            var json = new KeyPointJson();
            json.template_id = input.templateID.ToString();
            json.template_name = input.templateName;
            json.key_points = new JointJson[input.keyPoints.Length];
            json.skeleton = new SkeletonJson[input.skeleton.Length];

            for (var i = 0; i < input.keyPoints.Length; i++)
            {
                json.key_points[i] = new JointJson
                {
                    label = input.keyPoints[i].label,
                    index = i
                };
            }

            for (var i = 0; i < input.skeleton.Length; i++)
            {
                json.skeleton[i] = new SkeletonJson()
                {
                    joint1 = input.skeleton[i].joint1,
                    joint2 = input.skeleton[i].joint2
                };
            }

            return json;
        }
    }
}

com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointLabeler.cs.meta

fileFormatVersion: 2
guid: 377d37b913b843b6985fa57c13cb732c
timeCreated: 1610383503
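
`ConvertToScreenSpace` keeps the x value from `Camera.WorldToScreenPoint` and flips y, because that call reports pixels with the origin at the bottom-left of the screen while the annotations use a top-left origin. The flip on its own can be sketched as follows; `PixelSpace` is a hypothetical name, and the screen height is passed in explicitly, whereas the labeler uses `Screen.height` (so its output assumes the camera renders at screen resolution).

```csharp
// Hypothetical helper mirroring the y-flip in KeyPointLabeler.ConvertToScreenSpace.
public static class PixelSpace
{
    // Screen coordinates count y upward from the bottom edge; image coordinates
    // count y downward from the top edge. Only y changes between the two.
    public static (float X, float Y) ScreenToImage(float screenX, float screenY, float screenHeight)
    {
        return (screenX, screenHeight - screenY);
    }
}
```

On a 1080-pixel-tall screen, a joint reported at screen position (100, 1000), near the top edge, is written to the annotation as (100, 80).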

com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointTemplate.cs

using System;

namespace UnityEngine.Perception.GroundTruth
{
    /// <summary>
    /// A definition of a keypoint (joint).
    /// </summary>
    [Serializable]
    public class KeyPointDefinition
    {
        /// <summary>
        /// The name of the keypoint
        /// </summary>
        public string label;
        /// <summary>
        /// Whether this keypoint maps directly to a <see cref="HumanBodyBones"/> bone of the <see cref="Animator"/> <see cref="Avatar"/>
        /// </summary>
        public bool associateToRig = true;
        /// <summary>
        /// The associated <see cref="HumanBodyBones"/> of the rig
        /// </summary>
        public HumanBodyBones rigLabel = HumanBodyBones.Head;
        /// <summary>
        /// The color of the keypoint in the visualization
        /// </summary>
        public Color color;
    }

    /// <summary>
    /// A skeletal connection between two joints.
    /// </summary>
    [Serializable]
    public class SkeletonDefinition
    {
        /// <summary>
        /// The index of the first joint in the template's keypoint array
        /// </summary>
        public int joint1;
        /// <summary>
        /// The index of the second joint in the template's keypoint array
        /// </summary>
        public int joint2;
        /// <summary>
        /// The color of the skeleton in the visualization
        /// </summary>
        public Color color;
    }

    /// <summary>
    /// Template used to define the keypoints of a humanoid asset.
    /// </summary>
    [CreateAssetMenu(fileName = "KeypointTemplate", menuName = "Perception/Keypoint Template", order = 2)]
    public class KeyPointTemplate : ScriptableObject
    {
        /// <summary>
        /// The <see cref="Guid"/> of the template, stored as a string
        /// </summary>
        public string templateID = Guid.NewGuid().ToString();
        /// <summary>
        /// The name of the template
        /// </summary>
        public string templateName;
        /// <summary>
        /// Texture to use for the visualization of the joint.
        /// </summary>
        public Texture2D jointTexture;
        /// <summary>
        /// Texture to use for the visualization of the skeletal connection.
        /// </summary>
        public Texture2D skeletonTexture;
        /// <summary>
        /// Array of <see cref="KeyPointDefinition"/> for the template.
        /// </summary>
        public KeyPointDefinition[] keyPoints;
        /// <summary>
        /// Array of the <see cref="SkeletonDefinition"/> for the template.
        /// </summary>
        public SkeletonDefinition[] skeleton;
    }
}

com.unity.perception/Runtime/GroundTruth/Labelers/KeyPointTemplate.cs.meta

fileFormatVersion: 2
guid: 37a7d6f1a40c45a2981a6291f0d03337
timeCreated: 1610633739
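
`TemplateToJson` in `KeyPointLabeler.cs` reduces a template to its id, name, keypoint labels with their indices, and skeleton index pairs; colors and textures are not exported into the annotation definition. The sketch below rebuilds that shape with `System.Text.Json` purely for illustration, assuming the fields are written under their declared names (`template_id`, `template_name`, `key_points`, `skeleton`, `label`, `index`, `joint1`, `joint2`). `TemplateJsonSketch` is a hypothetical name; the package itself hands the `KeyPointJson` struct to `DatasetCapture` and does not use this code.

```csharp
using System.Linq;
using System.Text.Json;

public static class TemplateJsonSketch
{
    // Builds an anonymous object with the same field names as KeyPointJson,
    // JointJson and SkeletonJson, then serializes it.
    public static string ToJson(string templateId, string templateName,
                                string[] keypointLabels, (int, int)[] skeleton)
    {
        var json = new
        {
            template_id = templateId,
            template_name = templateName,
            // Each keypoint is listed with its position in the template's keyPoints array.
            key_points = keypointLabels.Select((label, i) => new { label, index = i }).ToArray(),
            // Bones reference keypoints by those same indices; colors are dropped.
            skeleton = skeleton.Select(b => new { joint1 = b.Item1, joint2 = b.Item2 }).ToArray()
        };
        return JsonSerializer.Serialize(json);
    }
}
```

For a two-point template `["nose", "neck"]` with one bone `(0, 1)`, the result lists `nose` at index 0, `neck` at index 1, and a single skeleton entry joining them.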

|||

namespace UnityEngine.Perception.GroundTruth
{
    /// <summary>
    /// Helper class that contains common visualization methods useful to ground truth labelers.
    /// </summary>
    public static class VisualizationHelper
    {
        static Texture2D s_OnePixel = new Texture2D(1, 1);

        /// <summary>
        /// Converts a 3D world space coordinate to image pixel space.
        /// </summary>
        /// <param name="camera">The rendering camera</param>
        /// <param name="worldLocation">The 3D world location to convert</param>
        /// <returns>The coordinate in pixel space</returns>
        public static Vector3 ConvertToScreenSpace(Camera camera, Vector3 worldLocation)
        {
            var pt = camera.WorldToScreenPoint(worldLocation);
            // Screen space has its origin at the bottom-left; flip y so the origin is at the top-left, as GUI drawing expects.
            pt.y = Screen.height - pt.y;
            return pt;
        }

        static Rect ToBoxRect(float x, float y, float halfSize = 3.0f)
        {
            return new Rect(x - halfSize, y - halfSize, halfSize * 2, halfSize * 2);
        }

        /// <summary>
        /// Draw a point (in pixel space) on the screen
        /// </summary>
        /// <param name="pt">The point location, in pixel space</param>
        /// <param name="color">The color of the point</param>
        /// <param name="width">The width of the point</param>
        /// <param name="texture">The texture to use for the point, defaults to a solid pixel</param>
        public static void DrawPoint(Vector3 pt, Color color, float width = 4.0f, Texture texture = null)
        {
            DrawPoint(pt.x, pt.y, color, width, texture);
        }

        /// <summary>
        /// Draw a point (in pixel space) on the screen
        /// </summary>
        /// <param name="x">The point's x value, in pixel space</param>
        /// <param name="y">The point's y value, in pixel space</param>
        /// <param name="color">The color of the point</param>
        /// <param name="width">The width of the point</param>
        /// <param name="texture">The texture to use for the point, defaults to a solid pixel</param>
        public static void DrawPoint(float x, float y, Color color, float width = 4, Texture texture = null)
        {
            if (texture == null) texture = s_OnePixel;
            // Tint the texture with the requested color, then restore the previous GUI color.
            var oldColor = GUI.color;
            GUI.color = color;
            GUI.DrawTexture(ToBoxRect(x, y, width * 0.5f), texture);
            GUI.color = oldColor;
        }

        // Euclidean distance between (p1X, p1Y) and (p2X, p2Y).
        static float Magnitude(float p1X, float p1Y, float p2X, float p2Y)
        {
            var x = p2X - p1X;
            var y = p2Y - p1Y;
            return Mathf.Sqrt(x * x + y * y);
        }

        /// <summary>
        /// Draws a texture between two locations with the given width.
        /// </summary>
        /// <param name="p1">The start point in pixel space</param>
        /// <param name="p2">The end point in pixel space</param>
        /// <param name="color">The color of the line</param>
        /// <param name="width">The width of the line</param>
        /// <param name="texture">The texture to use; if null, a solid line of the given color is drawn</param>
        public static void DrawLine(Vector2 p1, Vector2 p2, Color color, float width = 3.0f, Texture texture = null)
        {
            DrawLine(p1.x, p1.y, p2.x, p2.y, color, width, texture);
        }

        /// <summary>
        /// Draws a texture between two locations with the given width.
        /// </summary>
        /// <param name="p1X">The start point's x coordinate in pixel space</param>
        /// <param name="p1Y">The start point's y coordinate in pixel space</param>
        /// <param name="p2X">The end point's x coordinate in pixel space</param>
        /// <param name="p2Y">The end point's y coordinate in pixel space</param>
        /// <param name="color">The color of the line</param>
        /// <param name="width">The width of the line</param>
        /// <param name="texture">The texture to use; if null, a solid line of the given color is drawn</param>
        public static void DrawLine(float p1X, float p1Y, float p2X, float p2Y, Color color, float width = 3.0f, Texture texture = null)
        {
            if (texture == null) texture = s_OnePixel;

            var oldColor = GUI.color;
            GUI.color = color;

            var matrixBackup = GUI.matrix;
            // Angle of the segment from p1 to p2, in degrees.
            var angle = Mathf.Atan2(p2Y - p1Y, p2X - p1X) * 180f / Mathf.PI;
            var length = Magnitude(p1X, p1Y, p2X, p2Y);

            // Rotate the GUI matrix around p1 so that a rect of the segment's length is drawn along the p1 -> p2 direction.
            GUIUtility.RotateAroundPivot(angle, new Vector2(p1X, p1Y));
            var halfWidth = width * 0.5f;
            GUI.DrawTexture(new Rect(p1X - halfWidth, p1Y - halfWidth, length, width), texture);

            // Restore the GUI state changed above.
            GUI.matrix = matrixBackup;
            GUI.color = oldColor;
        }
    }
}

fileFormatVersion: 2
guid: b4ef50bfa62549848a6d12c049397eba
timeCreated: 1611019489
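`VisualizationHelper.DrawLine` draws a segment by rotating the GUI matrix around the start point by the segment's angle and then drawing a rectangle whose width is the segment's length, and `ConvertToScreenSpace` flips y because Unity screen space starts at the bottom-left while GUI space starts at the top-left. The sketch below isolates that arithmetic in plain C# so it can be checked outside the editor. The class `GuiLineMath`, its methods, and the tuple standing in for `Rect` are hypothetical illustrations, not part of the Perception package.

```csharp
using System;

// Hypothetical, Unity-free restatement of the arithmetic in VisualizationHelper.
// Plain floats and a tuple stand in for Unity's Vector2/Vector3 and Rect.
public static class GuiLineMath
{
    // Unity screen space has its origin at the bottom-left; GUI space at the top-left.
    public static float FlipY(float y, float screenHeight) => screenHeight - y;

    // Angle of the segment p1 -> p2 in degrees, as passed to GUIUtility.RotateAroundPivot.
    public static float AngleDegrees(float p1X, float p1Y, float p2X, float p2Y) =>
        (float)(Math.Atan2(p2Y - p1Y, p2X - p1X) * 180.0 / Math.PI);

    // Segment length: the width of the rect drawn before rotation.
    public static float Length(float p1X, float p1Y, float p2X, float p2Y)
    {
        float dx = p2X - p1X;
        float dy = p2Y - p1Y;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }

    // Square of side 2 * halfSize centered on (x, y), as (left, top, width, height), like ToBoxRect.
    public static (float X, float Y, float W, float H) BoxRect(float x, float y, float halfSize = 3.0f) =>
        (x - halfSize, y - halfSize, halfSize * 2, halfSize * 2);
}
```

Inside Unity, the explicit `180f / Mathf.PI` factor could equally be written as `Mathf.Rad2Deg`; the result is the same.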

%YAML 1.1
%TAG !u! tag:unity3d.com,2011:
--- !u!91 &9100000
AnimatorController:
  m_ObjectHideFlags: 0
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_Name: AnimationRandomizerController
  serializedVersion: 5
  m_AnimatorParameters: []
  m_AnimatorLayers:
  - serializedVersion: 5
    m_Name: Base Layer
    m_StateMachine: {fileID: 1300458365308884037}
    m_Mask: {fileID: 0}
    m_Motions: []
    m_Behaviours: []
    m_BlendingMode: 0
    m_SyncedLayerIndex: -1
    m_DefaultWeight: 0
    m_IKPass: 0
    m_SyncedLayerAffectsTiming: 0
    m_Controller: {fileID: 9100000}
--- !u!1107 &1300458365308884037
AnimatorStateMachine:
  serializedVersion: 5
  m_ObjectHideFlags: 1
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_Name: Base Layer
  m_ChildStates:
  - serializedVersion: 1
    m_State: {fileID: 3212544235944076811}
    m_Position: {x: 390, y: 320, z: 0}
  m_ChildStateMachines: []
  m_AnyStateTransitions: []
  m_EntryTransitions: []
  m_StateMachineTransitions: {}
  m_StateMachineBehaviours: []
  m_AnyStatePosition: {x: 50, y: 20, z: 0}
  m_EntryPosition: {x: 50, y: 120, z: 0}
  m_ExitPosition: {x: 800, y: 120, z: 0}
  m_ParentStateMachinePosition: {x: 800, y: 20, z: 0}
  m_DefaultState: {fileID: 3212544235944076811}
--- !u!1102 &3212544235944076811
AnimatorState:
  serializedVersion: 5
  m_ObjectHideFlags: 1
  m_CorrespondingSourceObject: {fileID: 0}
  m_PrefabInstance: {fileID: 0}
  m_PrefabAsset: {fileID: 0}
  m_Name: RandomState
  m_Speed: 1
  m_CycleOffset: 0
  m_Transitions: []
  m_StateMachineBehaviours: []
  m_Position: {x: 50, y: 50, z: 0}
  m_IKOnFeet: 0
  m_WriteDefaultValues: 1
  m_Mirror: 0
  m_SpeedParameterActive: 0
  m_MirrorParameterActive: 0
  m_CycleOffsetParameterActive: 0
  m_TimeParameterActive: 0
  m_Motion: {fileID: 7400000, guid: 11ff68761e3b74dbd84106361e9a4ec7, type: 2}
  m_Tag:
  m_SpeedParameter:
  m_MirrorParameter:
  m_CycleOffsetParameter:
  m_TimeParameter:

fileFormatVersion: 2
guid: 492c555d00e884241a46c09b49877983
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 9100000
  userData:
  assetBundleName:
  assetBundleVariant:

1001 com.unity.perception/Runtime/GroundTruth/Resources/PlayerIdle.anim

The file diff is too large to display.

fileFormatVersion: 2
guid: 11ff68761e3b74dbd84106361e9a4ec7
NativeFormatImporter:
  externalObjects: {}
  mainObjectFileID: 0
  userData:
  assetBundleName:
  assetBundleVariant:

fileFormatVersion: 2
guid: e381cbaaf29614168bafc8f7ec5dbfe9
TextureImporter:
  internalIDToNameTable: []
  externalObjects: {}
  serializedVersion: 11
  mipmaps:
    mipMapMode: 0
    enableMipMap: 0
    sRGBTexture: 1
    linearTexture: 0
    fadeOut: 0
    borderMipMap: 0
    mipMapsPreserveCoverage: 0
    alphaTestReferenceValue: 0.5
    mipMapFadeDistanceStart: 1
    mipMapFadeDistanceEnd: 3
  bumpmap:
    convertToNormalMap: 0
    externalNormalMap: 0
    heightScale: 0.25
    normalMapFilter: 0
  isReadable: 0
  streamingMipmaps: 0
  streamingMipmapsPriority: 0
  vTOnly: 0
  grayScaleToAlpha: 0
  generateCubemap: 6
  cubemapConvolution: 0
  seamlessCubemap: 0
  textureFormat: 1
  maxTextureSize: 2048
  textureSettings:
    serializedVersion: 2
    filterMode: -1
    aniso: -1
    mipBias: -100
    wrapU: 1
    wrapV: 1
    wrapW: -1
  nPOTScale: 0
  lightmap: 0
  compressionQuality: 50
  spriteMode: 1
  spriteExtrude: 1
  spriteMeshType: 1
  alignment: 0
  spritePivot: {x: 0.5, y: 0.5}
  spritePixelsToUnits: 100
  spriteBorder: {x: 0, y: 0, z: 0, w: 0}
  spriteGenerateFallbackPhysicsShape: 1
  alphaUsage: 1
  alphaIsTransparency: 1
  spriteTessellationDetail: -1
  textureType: 8
  textureShape: 1
  singleChannelComponent: 0
  flipbookRows: 1
  flipbookColumns: 1
  maxTextureSizeSet: 0
  compressionQualitySet: 0
  textureFormatSet: 0
  ignorePngGamma: 0
  applyGammaDecoding: 0
  platformSettings:
  - serializedVersion: 3
    buildTarget: DefaultTexturePlatform
    maxTextureSize: 2048
    resizeAlgorithm: 0
    textureFormat: -1
    textureCompression: 1
    compressionQuality: 50
    crunchedCompression: 0
    allowsAlphaSplitting: 0
    overridden: 0
    androidETC2FallbackOverride: 0
    forceMaximumCompressionQuality_BC6H_BC7: 0
  - serializedVersion: 3
    buildTarget: Standalone
    maxTextureSize: 2048
    resizeAlgorithm: 0
    textureFormat: -1
    textureCompression: 1
    compressionQuality: 50
    crunchedCompression: 0
    allowsAlphaSplitting: 0
    overridden: 0
    androidETC2FallbackOverride: 0
    forceMaximumCompressionQuality_BC6H_BC7: 0
  spriteSheet:
    serializedVersion: 2
    sprites: []
    outline: []
    physicsShape: []
    bones: []
    spriteID: 5e97eb03825dee720800000000000000
    internalID: 0
    vertices: []
    indices:
    edges: []
    weights: []
    secondaryTextures: []
  spritePackingTag:
  pSDRemoveMatte: 0
  pSDShowRemoveMatteOption: 0
  userData:
  assetBundleName:
  assetBundleVariant:

using System;

namespace UnityEngine.Experimental.Perception.Randomization.Parameters
{
    /// <summary>
    /// A categorical parameter for animation clips
    /// </summary>
    [Serializable]
    public class AnimationClipParameter : CategoricalParameter<AnimationClip> { }
}

fileFormatVersion: 2
guid: 6af6ee532f5e4e4b83353f2f32105665
timeCreated: 1610935882

using System;
using UnityEngine;
using UnityEngine.Experimental.Perception.Randomization.Parameters;
using UnityEngine.Experimental.Perception.Randomization.Randomizers.SampleRandomizers.Tags;
using UnityEngine.Experimental.Perception.Randomization.Samplers;

namespace UnityEngine.Experimental.Perception.Randomization.Randomizers.SampleRandomizers
{
    /// <summary>
    /// Chooses a random frame of a random clip for a game object
    /// </summary>
    [Serializable]
    [AddRandomizerMenu("Perception/Animation Randomizer")]
    public class AnimationRandomizer : Randomizer
    {
        // Samples the normalized start time (between 0 and 1) within the chosen clip.
        FloatParameter m_FloatParameter = new FloatParameter{ value = new UniformSampler(0, 1) };

        // Name of the placeholder clip in AnimationRandomizerController that is overridden with the sampled clip.
        const string k_ClipName = "PlayerIdle";
        // Full path of the controller state that plays the clip.
        const string k_StateName = "Base Layer.RandomState";

        void RandomizeAnimation(AnimationRandomizerTag tag)
        {
            var animator = tag.gameObject.GetComponent<Animator>();
            animator.applyRootMotion = tag.applyRootMotion;

            var overrider = tag.animatorOverrideController;
            if (overrider != null && tag.animationClips.GetCategoryCount() > 0)
            {
                // Swap a sampled clip in for the placeholder, then start the state at a random normalized time.
                overrider[k_ClipName] = tag.animationClips.Sample();
                animator.Play(k_StateName, 0, m_FloatParameter.Sample());
            }
        }

        /// <inheritdoc/>
        protected override void OnIterationStart()
        {
            // Recreate the time parameter if it is null.
            if (m_FloatParameter == null) m_FloatParameter = new FloatParameter{ value = new UniformSampler(0, 1) };

            var taggedObjects = tagManager.Query<AnimationRandomizerTag>();
            foreach (var taggedObject in taggedObjects)
            {
                RandomizeAnimation(taggedObject);
            }
        }
    }
}

fileFormatVersion: 2
guid: 8b57910cfd4a4dec90d6aa4a8ef824da
timeCreated: 1610802187
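For each tagged object, `AnimationRandomizer` overrides the controller's placeholder clip (`PlayerIdle`) with a clip sampled from the tag's `animationClips`, then starts the `Base Layer.RandomState` state at a normalized time drawn from `UniformSampler(0, 1)`. The sketch below models only that sampling step in plain C#. It assumes every clip is equally likely, uses `System.Random` in place of the Perception samplers, and represents clips by name rather than as `AnimationClip` objects; `ClipChoice` and `AnimationSampling.PickClipAndTime` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical result of one sampling step: which clip to play and where to start it.
public readonly struct ClipChoice
{
    public ClipChoice(string clipName, float normalizedTime)
    {
        ClipName = clipName;
        NormalizedTime = normalizedTime;
    }

    public string ClipName { get; }
    public float NormalizedTime { get; }
}

public static class AnimationSampling
{
    // Returns null when there are no clips, mirroring the randomizer's
    // GetCategoryCount() > 0 guard (the animator is then left untouched).
    public static ClipChoice? PickClipAndTime(IReadOnlyList<string> clips, Random rng)
    {
        if (clips == null || clips.Count == 0) return null;
        string clip = clips[rng.Next(clips.Count)];   // equal-weight categorical choice (assumption)
        float t = (float)rng.NextDouble();             // in [0, 1]; the float cast can round up to 1
        return new ClipChoice(clip, t);
    }
}
```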

using UnityEngine.Experimental.Perception.Randomization.Parameters;
using UnityEngine.Perception.GroundTruth;

namespace UnityEngine.Experimental.Perception.Randomization.Randomizers.SampleRandomizers.Tags
{
    /// <summary>
    /// Used in conjunction with an <see cref="AnimationRandomizer"/> to select a random animation frame for
    /// the tagged game object
    /// </summary>
    [RequireComponent(typeof(Animator))]
    [AddComponentMenu("Perception/RandomizerTags/Animation Randomizer Tag")]
    public class AnimationRandomizerTag : RandomizerTag
    {
        /// <summary>
        /// A list of animation clips from which to choose
        /// </summary>
        public AnimationClipParameter animationClips;

        /// <summary>
        /// Whether to apply root motion to the animator. If true and an animation contains root translation and/or
        /// rotation, that motion is applied to the labeled model, which means the model may move to a new position.
        /// If false, the model stays at its current position and rotation.
        /// </summary>
        public bool applyRootMotion = false;

        /// <summary>
        /// Gets the animator override controller used for animation randomization. On first access, the base
        /// controller is loaded from Resources and assigned to this object's <see cref="Animator"/>.
        /// </summary>
        public AnimatorOverrideController animatorOverrideController
        {
            get
            {
                if (m_Controller == null)
                {
                    var animator = gameObject.GetComponent<Animator>();
                    var runtimeAnimatorController = Resources.Load<RuntimeAnimatorController>("AnimationRandomizerController");
                    m_Controller = new AnimatorOverrideController(runtimeAnimatorController);
                    animator.runtimeAnimatorController = m_Controller;
                }

                return m_Controller;
            }
        }

        AnimatorOverrideController m_Controller;
    }
}

fileFormatVersion: 2
guid: f8943e41c7c34facb177a5decc1b2aef
timeCreated: 1610802367

using System;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using NUnit.Framework;
using UnityEngine;
using UnityEngine.Perception.GroundTruth;
using UnityEngine.TestTools;

namespace GroundTruthTests
{
    [TestFixture]
    public class KeyPointGroundTruthTests : GroundTruthTestBase
    {
        static GameObject SetupCamera(IdLabelConfig config, KeyPointTemplate template, Action<List<KeyPointLabeler.KeyPointEntry>> computeListener)
        {
            // The camera object stays inactive until the test activates it.
            var cameraObject = new GameObject();
            cameraObject.SetActive(false);
            var camera = cameraObject.AddComponent<Camera>();
            camera.orthographic = false;
            camera.fieldOfView = 60;
            camera.nearClipPlane = 0.3f;
            camera.farClipPlane = 1000;

            camera.transform.position = new Vector3(0, 0, -10);

            var perceptionCamera = cameraObject.AddComponent<PerceptionCamera>();
            perceptionCamera.captureRgbImages = false;
            var keyPointLabeler = new KeyPointLabeler(config, template);
            if (computeListener != null)
                keyPointLabeler.KeyPointsComputed += computeListener;

            perceptionCamera.AddLabeler(keyPointLabeler);

            return cameraObject;
        }

        static KeyPointTemplate CreateTestTemplate(Guid guid, string label)
        {
            // Cube corners: indices 0-3 are the front face, 4-7 the back face; index 8 is "Center".
            var keyPoints = new[]
            {
                new KeyPointDefinition { label = "FrontLowerLeft", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "FrontUpperLeft", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "FrontUpperRight", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "FrontLowerRight", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "BackLowerLeft", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "BackUpperLeft", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "BackUpperRight", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "BackLowerRight", associateToRig = false, color = Color.black },
                new KeyPointDefinition { label = "Center", associateToRig = false, color = Color.black }
            };

            // Skeleton edges refer to keypoints by their index in the array above.
            var skeleton = new[]
            {
                // Front face
                new SkeletonDefinition { joint1 = 0, joint2 = 1, color = Color.magenta },
                new SkeletonDefinition { joint1 = 1, joint2 = 2, color = Color.magenta },
                new SkeletonDefinition { joint1 = 2, joint2 = 3, color = Color.magenta },
                new SkeletonDefinition { joint1 = 3, joint2 = 0, color = Color.magenta },

                // Back face
                new SkeletonDefinition { joint1 = 4, joint2 = 5, color = Color.blue },
                new SkeletonDefinition { joint1 = 5, joint2 = 6, color = Color.blue },
                new SkeletonDefinition { joint1 = 6, joint2 = 7, color = Color.blue },
                new SkeletonDefinition { joint1 = 7, joint2 = 4, color = Color.blue },

                // Edges joining the front and back faces
                new SkeletonDefinition { joint1 = 0, joint2 = 4, color = Color.green },
                new SkeletonDefinition { joint1 = 1, joint2 = 5, color = Color.green },
                new SkeletonDefinition { joint1 = 2, joint2 = 6, color = Color.green },
                new SkeletonDefinition { joint1 = 3, joint2 = 7, color = Color.green },
            };

            var template = ScriptableObject.CreateInstance<KeyPointTemplate>();
            template.templateID = guid.ToString();
            template.templateName = label;
            template.jointTexture = null;
            template.skeletonTexture = null;
            template.keyPoints = keyPoints;
            template.skeleton = skeleton;

            return template;
        }

        [Test]
        public void KeypointTemplate_CreateTemplateTest()
        {
            var guid = Guid.NewGuid();
            const string label = "TestTemplate";
            var template = CreateTestTemplate(guid, label);

            // NUnit's Assert.AreEqual takes (expected, actual).
            Assert.AreEqual(guid.ToString(), template.templateID);
            Assert.AreEqual(label, template.templateName);
            Assert.IsNull(template.jointTexture);
            Assert.IsNull(template.skeletonTexture);
            Assert.IsNotNull(template.keyPoints);
            Assert.IsNotNull(template.skeleton);
            Assert.AreEqual(9, template.keyPoints.Length);
            Assert.AreEqual(12, template.skeleton.Length);

            var k0 = template.keyPoints[0];
            Assert.NotNull(k0);
            Assert.AreEqual("FrontLowerLeft", k0.label);
            Assert.False(k0.associateToRig);
            Assert.AreEqual(Color.black, k0.color);

            var s0 = template.skeleton[0];
            Assert.NotNull(s0);
            Assert.AreEqual(0, s0.joint1);
            Assert.AreEqual(1, s0.joint2);
            Assert.AreEqual(Color.magenta, s0.color);
        }

        static IdLabelConfig SetUpLabelConfig()
        {
            var cfg = ScriptableObject.CreateInstance<IdLabelConfig>();
            cfg.Init(new List<IdLabelEntry>()
            {
                new IdLabelEntry
                {
                    id = 1,
                    label = "label"
                }
            });

            return cfg;
        }

        // Adds a child object at the given local position whose JointLabel maps it to the named keypoint of the template.
        static void SetupCubeJoint(GameObject cube, KeyPointTemplate template, string label, float x, float y, float z)
        {
            var joint = new GameObject();
            joint.transform.parent = cube.transform;
            joint.transform.localPosition = new Vector3(x, y, z);
            var jointLabel = joint.AddComponent<JointLabel>();
            jointLabel.templateInformation = new List<JointLabel.TemplateData>();
            var templateData = new JointLabel.TemplateData
            {
                template = template,
                label = label
            };
            jointLabel.templateInformation.Add(templateData);
        }

        // Labels the eight corners of the cube (local coordinates of +/-0.5); "Center" is left unlabeled.
        static void SetupCubeJoints(GameObject cube, KeyPointTemplate template)
        {
            SetupCubeJoint(cube, template, "FrontLowerLeft", -0.5f, -0.5f, -0.5f);
            SetupCubeJoint(cube, template, "FrontUpperLeft", -0.5f, 0.5f, -0.5f);
            SetupCubeJoint(cube, template, "FrontUpperRight", 0.5f, 0.5f, -0.5f);
            SetupCubeJoint(cube, template, "FrontLowerRight", 0.5f, -0.5f, -0.5f);
            SetupCubeJoint(cube, template, "BackLowerLeft", -0.5f, -0.5f, 0.5f);
            SetupCubeJoint(cube, template, "BackUpperLeft", -0.5f, 0.5f, 0.5f);
            SetupCubeJoint(cube, template, "BackUpperRight", 0.5f, 0.5f, 0.5f);
            SetupCubeJoint(cube, template, "BackLowerRight", 0.5f, -0.5f, 0.5f);
        }

        [UnityTest]
        public IEnumerator Keypoint_TestStaticLabeledCube()
        {
            var incoming = new List<List<KeyPointLabeler.KeyPointEntry>>();
            var template = CreateTestTemplate(Guid.NewGuid(), "TestTemplate");

            var cam = SetupCamera(SetUpLabelConfig(), template, (data) =>
            {
                incoming.Add(data);
            });

            var cube = TestHelper.CreateLabeledCube(scale: 6, z: 8);
            SetupCubeJoints(cube, template);

            cube.SetActive(true);
            cam.SetActive(true);

            AddTestObjectForCleanup(cam);
            AddTestObjectForCleanup(cube);

            // Wait two frames so the labeler reports keypoints for the cube.
            yield return null;
            yield return null;

            var testCase = incoming.Last();
            Assert.AreEqual(1, testCase.Count);
            var t = testCase.First();
            Assert.NotNull(t);
            Assert.AreEqual(1, t.instance_id);
            Assert.AreEqual(1, t.label_id);
            Assert.AreEqual(template.templateID, t.template_guid);
            Assert.AreEqual(9, t.keypoints.Length);

            // The camera looks straight along +z, so corners that differ only in y share a screen x...
            Assert.AreEqual(t.keypoints[0].x, t.keypoints[1].x);
            Assert.AreEqual(t.keypoints[2].x, t.keypoints[3].x);
            Assert.AreEqual(t.keypoints[4].x, t.keypoints[5].x);
            Assert.AreEqual(t.keypoints[6].x, t.keypoints[7].x);

            // ...and corners that differ only in x share a screen y.
            Assert.AreEqual(t.keypoints[0].y, t.keypoints[3].y);
            Assert.AreEqual(t.keypoints[1].y, t.keypoints[2].y);
            Assert.AreEqual(t.keypoints[4].y, t.keypoints[7].y);
            Assert.AreEqual(t.keypoints[5].y, t.keypoints[6].y);

            for (var i = 0; i < 9; i++) Assert.AreEqual(i, t.keypoints[i].index);
            // The eight labeled corners have state 1; the unlabeled "Center" keypoint has state 0 at (0, 0).
            for (var i = 0; i < 8; i++) Assert.AreEqual(1, t.keypoints[i].state);
            Assert.Zero(t.keypoints[8].state);
            Assert.Zero(t.keypoints[8].x);
            Assert.Zero(t.keypoints[8].y);
        }
    }
}

fileFormatVersion: 2
guid: c62092ba10e4e4a80a0ec03e6e92593a
MonoImporter:
  externalObjects: {}
  serializedVersion: 2
  defaultReferences: []
  executionOrder: 0
  icon: {instanceID: 0}
  userData:
  assetBundleName:
  assetBundleVariant:
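`CreateTestTemplate` lists the twelve cube edges by hand: four around the front face (indices 0-3), four around the back face (4-7), and four joining matching corners. The sketch below generates the same pairs, in the same order, with three loops, and adds a range check that every index refers to an existing keypoint. It assumes, as the test data suggests, that `joint1` and `joint2` are indices into the template's `keyPoints` array; `CubeSkeleton` and its methods are hypothetical and use plain tuples instead of `SkeletonDefinition`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: cube edges as (joint1, joint2) keypoint-index pairs, with corners
// ordered like CreateTestTemplate (0-3 front face, 4-7 back face, same winding on both faces).
public static class CubeSkeleton
{
    public static List<(int Joint1, int Joint2)> Edges()
    {
        var edges = new List<(int Joint1, int Joint2)>();
        for (int i = 0; i < 4; i++) edges.Add((i, (i + 1) % 4));          // front face loop
        for (int i = 0; i < 4; i++) edges.Add((4 + i, 4 + (i + 1) % 4));  // back face loop
        for (int i = 0; i < 4; i++) edges.Add((i, i + 4));                 // front-to-back connectors
        return edges;
    }

    // True when every edge references an index in [0, keyPointCount).
    public static bool IndicesInRange(IEnumerable<(int Joint1, int Joint2)> edges, int keyPointCount)
    {
        foreach (var (a, b) in edges)
        {
            if (a < 0 || b < 0 || a >= keyPointCount || b >= keyPointCount) return false;
        }
        return true;
    }
}
```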
