Current commit

aa96fb53

61 files changed, with 2709 insertions and 286 deletions

-

7.github/pull_request_template.md

-

4.yamato/environments.yml

-

17README.md

-

1TestProjects/PerceptionHDRP/Assets/SemanticSegmentationLabelingConfiguration.asset

-

4TestProjects/PerceptionHDRP/Packages/packages-lock.json

-

1TestProjects/PerceptionURP/Assets/SemanticSegmentationLabelingConfiguration.asset

-

4TestProjects/PerceptionURP/Packages/packages-lock.json

-

21com.unity.perception/CHANGELOG.md

-

2com.unity.perception/Documentation~/HPTutorial/TUTORIAL.md

-

98com.unity.perception/Documentation~/PerceptionCamera.md

-

26com.unity.perception/Documentation~/Tutorial/Phase1.md

-

14com.unity.perception/Documentation~/Tutorial/Phase3.md

-

2com.unity.perception/Documentation~/Tutorial/TUTORIAL.md

-

2com.unity.perception/Editor/GroundTruth/IdLabelConfigEditor.cs

-

7com.unity.perception/Editor/GroundTruth/LabelConfigEditor.cs

-

9com.unity.perception/Editor/GroundTruth/PerceptionCameraEditor.cs

-

10com.unity.perception/Editor/GroundTruth/SemanticSegmentationLabelConfigEditor.cs

-

2com.unity.perception/Editor/GroundTruth/Uxml/ColoredLabelElementInLabelConfig.uxml

-

14com.unity.perception/Editor/GroundTruth/Uxml/LabelConfig_Main.uxml

-

15com.unity.perception/Editor/Randomization/Editors/RunInUnitySimulationWindow.cs

-

45com.unity.perception/Runtime/GroundTruth/InstanceIdToColorMapping.cs

-

18com.unity.perception/Runtime/GroundTruth/Labelers/BoundingBox3DLabeler.cs

-

146com.unity.perception/Runtime/GroundTruth/Labelers/KeypointLabeler.cs

-

13com.unity.perception/Runtime/GroundTruth/Labelers/SemanticSegmentationLabeler.cs

-

6com.unity.perception/Runtime/GroundTruth/Labeling/SemanticSegmentationLabelConfig.cs

-

5com.unity.perception/Runtime/GroundTruth/PerceptionCamera.cs

-

2com.unity.perception/Runtime/GroundTruth/RenderPasses/CrossPipelinePasses/SemanticSegmentationCrossPipelinePass.cs

-

13com.unity.perception/Runtime/GroundTruth/RenderPasses/HdrpPasses/GroundTruthPass.cs

-

10com.unity.perception/Runtime/GroundTruth/RenderPasses/HdrpPasses/InstanceSegmentationPass.cs

-

10com.unity.perception/Runtime/GroundTruth/RenderPasses/HdrpPasses/LensDistortionPass.cs

-

10com.unity.perception/Runtime/GroundTruth/RenderPasses/HdrpPasses/SemanticSegmentationPass.cs

-

21com.unity.perception/Runtime/GroundTruth/SimulationState.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/AnimationRandomizer.cs

-

5com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/BackgroundObjectPlacementRandomizer.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/ColorRandomizer.cs

-

4com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/ForegroundObjectPlacementRandomizer.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/HueOffsetRandomizer.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/RotationRandomizer.cs

-

11com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/SunAngleRandomizer.cs

-

3com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Randomizers/TextureRandomizer.cs

-

86com.unity.perception/Runtime/Randomization/Randomizers/RandomizerExamples/Utilities/PoissonDiskSampling.cs

-

2com.unity.perception/Runtime/Randomization/Randomizers/RandomizerTag.cs

-

2com.unity.perception/Runtime/Randomization/Samplers/SamplerUtility.cs

-

7com.unity.perception/Runtime/Unity.Perception.Runtime.asmdef

-

16com.unity.perception/Tests/Editor/DatasetCaptureEditorTests.cs

-

263com.unity.perception/Tests/Runtime/GroundTruthTests/BoundingBox3dTests.cs

-

33com.unity.perception/Tests/Runtime/GroundTruthTests/DatasetCaptureSensorSchedulingTests.cs

-

51com.unity.perception/Tests/Runtime/GroundTruthTests/InstanceIdToColorMappingTests.cs

-

299com.unity.perception/Tests/Runtime/GroundTruthTests/KeypointGroundTruthTests.cs

-

38com.unity.perception/Tests/Runtime/GroundTruthTests/SegmentationGroundTruthTests.cs

-

2com.unity.perception/package.json

-

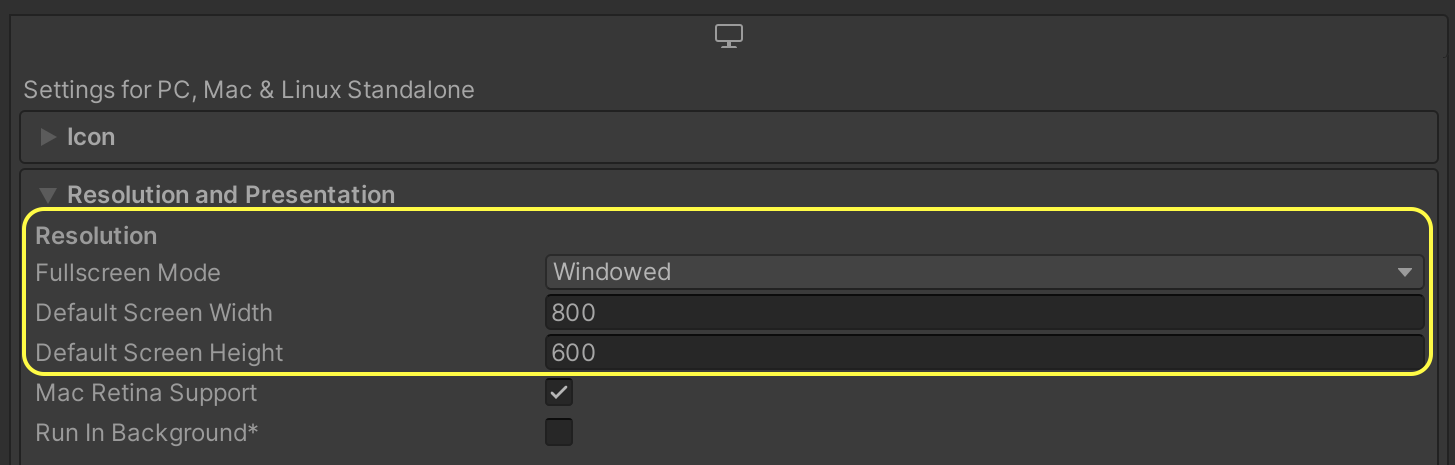

214com.unity.perception/Documentation~/images/build_res.png

-

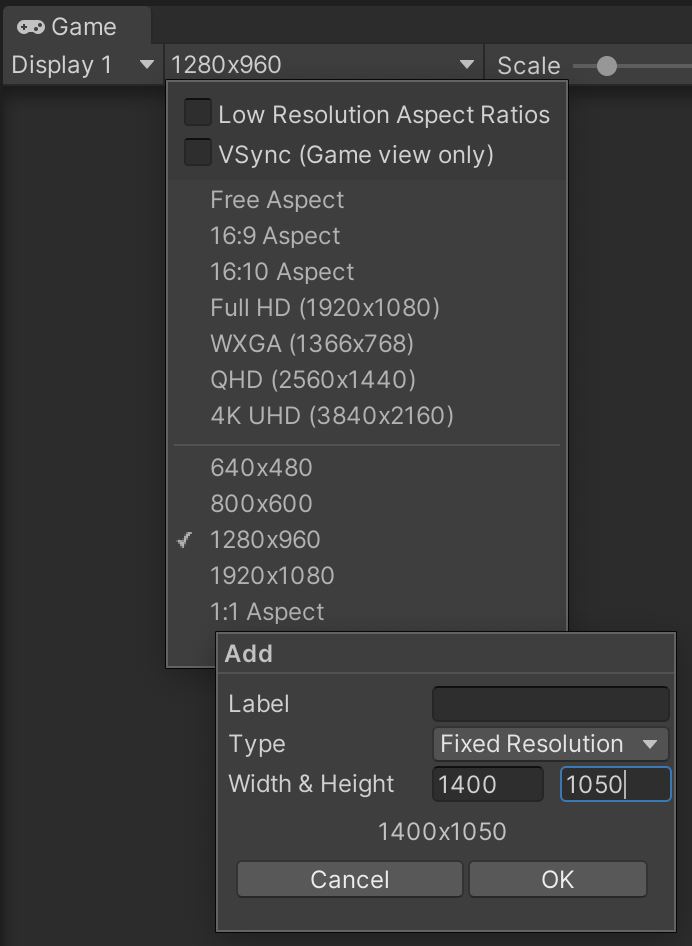

366com.unity.perception/Documentation~/images/gameview_res.png

-

739com.unity.perception/Documentation~/images/robotics_pose.png

-

140com.unity.perception/Documentation~/images/unity-wide-whiteback.png

-

22com.unity.perception/Editor/GroundTruth/JointLabelEditor.cs

-

3com.unity.perception/Editor/GroundTruth/JointLabelEditor.cs.meta

-

24com.unity.perception/Runtime/GroundTruth/Labelers/KeypointObjectFilter.cs

-

3com.unity.perception/Runtime/GroundTruth/Labelers/KeypointObjectFilter.cs.meta

-

91com.unity.perception/Documentation~/GroundTruth/KeypointLabeler.md

`com.unity.perception/Editor/GroundTruth/JointLabelEditor.cs`

    using System.Linq;
    using UnityEngine;
    using UnityEngine.Perception.GroundTruth;

    namespace UnityEditor.Perception.GroundTruth
    {
        [CustomEditor(typeof(JointLabel))]
        public class JointLabelEditor : Editor
        {
            public override void OnInspectorGUI()
            {
                base.OnInspectorGUI();
    #if UNITY_2020_1_OR_NEWER
                //GetComponentInParent<T>(bool includeInactive) only exists on 2020.1 and later
                if (targets.Any(t => ((Component)t).gameObject.GetComponentInParent<Labeling>(true) == null))
    #else
                if (targets.Any(t => ((Component)t).GetComponentInParent<Labeling>() == null))
    #endif
                    EditorGUILayout.HelpBox("No Labeling component detected on parents. Keypoint labeling requires a Labeling component on the root of the object.", MessageType.Info);
            }
        }
    }

`com.unity.perception/Editor/GroundTruth/JointLabelEditor.cs.meta`

    fileFormatVersion: 2
    guid: 8b21d46736dd4cbb96bf827457752855
    timeCreated: 1616108507

`com.unity.perception/Runtime/GroundTruth/Labelers/KeypointObjectFilter.cs`

    namespace UnityEngine.Perception.GroundTruth
    {
        /// <summary>
        /// Keypoint filtering modes.
        /// </summary>
        public enum KeypointObjectFilter
        {
            /// <summary>
            /// Only include objects which are partially visible in the frame.
            /// </summary>
            [InspectorName("Visible objects")]
            Visible,
            /// <summary>
            /// Include visible objects and objects with keypoints in the frame.
            /// </summary>
            [InspectorName("Visible and occluded objects")]
            VisibleAndOccluded,
            /// <summary>
            /// Include all labeled objects containing matching skeletons.
            /// </summary>
            [InspectorName("All objects")]
            All
        }
    }

`com.unity.perception/Runtime/GroundTruth/Labelers/KeypointObjectFilter.cs.meta`

    fileFormatVersion: 2
    guid: a1ea6aeb2b1c476288b4aa0cbc795280
    timeCreated: 1616179952

`com.unity.perception/Documentation~/GroundTruth/KeypointLabeler.md`

# Keypoint Labeler

The Keypoint Labeler captures the screen locations of specific points on labeled GameObjects. The typical use of this Labeler is capturing human pose estimation data, but it can be used to capture points on any kind of object. The Labeler uses a [Keypoint Template](#KeypointTemplate) which defines the keypoints to capture for the model and the skeletal connections between those keypoints. The positions of the keypoints are recorded in pixel coordinates.

## Data Format
The keypoints captured each frame are in the following format:
```
keypoints {
  label_id:      <int>   -- Integer identifier of the label
  instance_id:   <str>   -- UUID of the instance.
  template_guid: <str>   -- UUID of the keypoint template
  pose:          <str>   -- Current pose
  keypoints [            -- Array of keypoint data, one entry for each keypoint defined in associated template file.
    {
      index:     <int>   -- Index of keypoint in template
      x:         <float> -- X pixel coordinate of keypoint
      y:         <float> -- Y pixel coordinate of keypoint
      state:     <int>   -- Visibility state
    }, ...
  ]
}
```

The `state` entry has three possible values:
* 0 - the keypoint either does not exist or is outside of the image's bounds
* 1 - the keypoint exists inside of the image bounds but cannot be seen because the object is not visible at its location in the image
* 2 - the keypoint exists and the object is visible at its location
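
The three `state` values can be handled on the consumer side along the following lines. This is a minimal Python sketch, assuming an annotation has already been loaded into a dictionary shaped like the format above; the `KeypointState` names, the `visible_keypoints` helper, and the sample record are all hypothetical and not part of the Perception package.

```python
from enum import IntEnum


class KeypointState(IntEnum):
    """Hypothetical names for the three documented `state` values."""
    ABSENT = 0    # keypoint does not exist or is outside the image bounds
    OCCLUDED = 1  # inside the image bounds, but the object is not visible there
    VISIBLE = 2   # inside the image bounds and visible


def visible_keypoints(annotation):
    """Return (index, x, y) for every keypoint whose state is VISIBLE."""
    return [
        (kp["index"], kp["x"], kp["y"])
        for kp in annotation["keypoints"]
        if kp["state"] == KeypointState.VISIBLE
    ]


# Made-up sample record shaped like the documented format.
sample = {
    "label_id": 1,
    "instance_id": "hypothetical-instance",
    "template_guid": "hypothetical-template",
    "pose": "unset",
    "keypoints": [
        {"index": 0, "x": 0.0, "y": 0.0, "state": 0},
        {"index": 1, "x": 120.5, "y": 88.0, "state": 1},
        {"index": 2, "x": 132.0, "y": 140.25, "state": 2},
    ],
}

print(visible_keypoints(sample))
```

Whether to keep state 1 keypoints depends on the downstream task; some pose-estimation pipelines train on occluded keypoints as well, so the filter above is only one possible choice.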

The annotation definition, captured by the Keypoint Labeler once in each dataset, describes points being captured and their skeletal connections. These are defined by the [Keypoint Template](#KeypointTemplate).
```
annotation_definition.spec {
  template_id:   <str> -- The UUID of the template
  template_name: <str> -- Human readable name of the template
  key_points [         -- Array of joints defined in this template
    {
      label: <str>     -- The label of the joint
      index: <int>     -- The index of the joint
    }, ...
  ]
  skeleton [           -- Array of skeletal connections (which joints have connections between one another) defined in this template
    {
      joint1: <int>    -- The first joint of the connection
      joint2: <int>    -- The second joint of the connection
    }, ...
  ]
}
```
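
To make the relationship between `key_points` and `skeleton` concrete, the sketch below resolves each connection to a pair of joint labels. It is written in Python under two assumptions: the spec has been loaded into a dictionary shaped like the block above, and `joint1`/`joint2` hold `index` values from `key_points` (the format implies this but does not state it). The `skeleton_as_labels` helper and the sample template are hypothetical.

```python
def skeleton_as_labels(spec):
    """Map each skeletal connection from joint indices to joint labels."""
    # Assumption: joint1/joint2 refer to the `index` field of key_points.
    label_by_index = {kp["index"]: kp["label"] for kp in spec["key_points"]}
    return [
        (label_by_index[bone["joint1"]], label_by_index[bone["joint2"]])
        for bone in spec["skeleton"]
    ]


# Made-up spec shaped like the documented annotation definition.
sample_spec = {
    "template_id": "hypothetical-template",
    "template_name": "ExampleTemplate",
    "key_points": [
        {"label": "nose", "index": 0},
        {"label": "neck", "index": 1},
        {"label": "right_shoulder", "index": 2},
    ],
    "skeleton": [
        {"joint1": 0, "joint2": 1},
        {"joint1": 1, "joint2": 2},
    ],
}

print(skeleton_as_labels(sample_spec))
```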

## Setup
The Keypoint Labeler captures keypoints each frame from each object in the scene that meets the following conditions:

* The object or its children are at least partially visible in the frame
  * The _Object Filter_ option on the Keypoint Labeler can be used to also include fully occluded or off-screen objects
* The root object has a `Labeling` component
* The object matches at least one entry in the Keypoint Template by either:
  * Containing an Animator with a [humanoid avatar](https://docs.unity3d.com/Manual/ConfiguringtheAvatar.html) whose rig matches a keypoint OR
  * Containing children with Joint Label components whose labels match keypoints

For a tutorial on setting up your project for keypoint labeling, see the [Human Pose Labeling and Randomization Tutorial](../HPTutorial/TUTORIAL.md).
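
The effect of the _Object Filter_ option on the visibility condition can be modelled roughly as follows. This Python sketch only restates the doc comments of the `KeypointObjectFilter` enum added in this commit; it is not the package's implementation, and the `passes_object_filter` function and its two boolean inputs are hypothetical. The other conditions (a `Labeling` component on the root and a match against the Keypoint Template) are assumed to be checked separately.

```python
from enum import Enum


class KeypointObjectFilter(Enum):
    """Python mirror of the C# enum; values are the Inspector names."""
    VISIBLE = "Visible objects"
    VISIBLE_AND_OCCLUDED = "Visible and occluded objects"
    ALL = "All objects"


def passes_object_filter(mode, partially_visible, has_keypoint_in_frame):
    """Decide whether an object passes the visibility part of the filter."""
    if mode is KeypointObjectFilter.VISIBLE:
        # Only objects that are at least partially visible in the frame.
        return partially_visible
    if mode is KeypointObjectFilter.VISIBLE_AND_OCCLUDED:
        # Visible objects, plus objects with keypoints inside the frame.
        return partially_visible or has_keypoint_in_frame
    # ALL: every labeled object with a matching skeleton is included.
    return True


# A fully occluded object whose keypoints still fall inside the frame.
for mode in KeypointObjectFilter:
    print(mode.value, passes_object_filter(mode, False, True))
```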

## Keypoint Template

Keypoint Templates are used to define the keypoints and skeletal connections captured by the Keypoint Labeler. The Keypoint Template takes advantage of Unity's humanoid animation rig, and allows the user to automatically associate template keypoints to animation rig joints. Additionally, the user can choose to ignore the rigged points, or add points not defined in the rig.

A [COCO](https://cocodataset.org/#home) Keypoint Template is included in the Perception package.

### Editor

The Keypoint Template editor allows the user to create or modify a Keypoint Template. The editor consists of the header information, the keypoint array, and the skeleton array.

<br/>_Header section of the keypoint template_

In the header section, a user can change the name of the template and supply textures that they would like to use for the keypoint visualization.

<br/>_Keypoint section of the keypoint template_

The keypoint section allows the user to create or edit keypoints and associate them with Unity animation rig points. Each keypoint record has 4 fields: Label (the name of the keypoint), Associate to Rig (a boolean value which, if true, automatically maps the keypoint to the GameObject defined by the rig), Rig Label (only needed if Associate to Rig is true, defines which rig component to associate with the keypoint), and Color (RGB color value of the keypoint in the visualization).

<br/>_Skeleton section of the keypoint template_

The skeleton section allows the user to create connections between joints, which together define the skeleton of a labeled object.

#### Animation Pose Label

This file maps timestamps in an animation to pose labels.
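
One way to picture a timestamp-to-pose mapping is a sorted lookup, sketched below in Python. The source does not show the file's layout, so everything here is an assumption: that entries are sorted by timestamp, that each timestamp marks the moment a pose begins, and that times before the first entry get a default label. The `pose_at` helper and the sample values are hypothetical.

```python
from bisect import bisect_right


def pose_at(timestamps, labels, t, default="unset"):
    """Return the label of the last entry whose timestamp is <= t."""
    # bisect_right gives the insertion point after any entries equal to t,
    # so the entry just before it is the pose in effect at time t.
    i = bisect_right(timestamps, t) - 1
    return labels[i] if i >= 0 else default


# Made-up entries: each timestamp is assumed to mark the start of a pose.
timestamps = [0.0, 0.4, 0.75]
labels = ["idle", "walk", "run"]

print(pose_at(timestamps, labels, 0.5))
```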
