Current commit

66c0e4f6

14 files changed, with 591 insertions and 89 deletions

- README.md (14 lines changed)
- TestProjects/PerceptionURP/Assets/Scenes/SampleScene.unity (29 lines changed)
- TestProjects/PerceptionURP/ProjectSettings/QualitySettings.asset (2 lines changed)
- com.unity.perception/Documentation~/GettingStarted.md (68 lines changed)
- com.unity.perception/Documentation~/GroundTruth-Labeling.md (30 lines changed)
- com.unity.perception/Documentation~/SetupSteps.md (35 lines changed)
- com.unity.perception/Documentation~/index.md (24 lines changed)
- TestProjects/PerceptionURP/Assets/ExampleScripts.meta (3 lines changed)
- com.unity.perception/Documentation~/PerceptionCamera.md (21 lines changed)
- com.unity.perception/Documentation~/SimulationManager.md (91 lines changed)
- com.unity.perception/Documentation~/images/PerceptionCamera.png (292 lines changed)
- TestProjects/PerceptionURP/Assets/ExampleScripts/CustomAnnotationAndMetricReporter.cs (68 lines changed)
- TestProjects/PerceptionURP/Assets/ExampleScripts/CustomAnnotationAndMetricReporter.cs.meta (3 lines changed)

_Note: This document is a work in progress_

You can add a Labeling component to individual GameModels within a scene, although it is good practice to create a prefab of a GameModel and apply the Labeling component to it.

The Labeling component contains properties that control the number of labels applied to the GameModel. “Classes” has a property named “size”, which sets how many labels are applied to a GameModel. The default is 0 (no label). Setting “size” to 1 exposes an “Element 0” parameter with an input field for a custom label, which can be text, numbers, or a combination of both.

You can add a Labeling component to individual GameObjects within a scene, although it is good practice to create a prefab of a GameObject and apply the Labeling component to it.

Multiple labels can be used by setting “size” to 2 or more. These additional Elements (labels) can be used for any purpose in development. For example, in SynthDet labels form a hierarchy where Element0 is the highest-level label, identifying a GameModel in a very general category. Subsequent elements become more focused, identifying the types and groups into which an object can be classified. The last Element is reserved for the specific name (or label) of the asset.

Multiple labels can be assigned to the same `Labeling`. When generating ground truth that requires a unique label per object, the first label in the `Labeling` that appears anywhere in the `LabelingConfiguration` is used.
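
The rule for picking a single label can be sketched as an engine-independent helper. This is a hypothetical illustration, not part of the Perception package: `LabelResolution.ResolveLabel` is an invented name, and exact, case-sensitive string matching is an assumption of the sketch.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper illustrating the label-resolution rule; not a Perception API.
public static class LabelResolution
{
    // Walks the object's labels in order and returns the first one that also
    // appears anywhere in the labeling configuration, or null if none do.
    // Matching is exact and case-sensitive (an assumption of this sketch).
    public static string ResolveLabel(IEnumerable<string> objectLabels, IEnumerable<string> configuredLabels)
    {
        var configured = new HashSet<string>(configuredLabels);
        return objectLabels.FirstOrDefault(label => configured.Contains(label));
    }
}
```

For an object labeled `ricekrispies`, `cereal`, `food` and a configuration containing `food` and `cereal`, the sketch returns `cereal`: order on the object decides, not order in the configuration.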

Semantic segmentation (and other metrics) require a labeling configuration file located here:

This file lists all labels currently used in the dataset and the RGB value associated with each. It can be used as is or created by the developer. When a semantic segmentation output is generated, the per-pixel RGB value can be used to identify the object for the algorithm.
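
Mapping a segmentation pixel back to its label can be sketched as a dictionary keyed by color. This is a hypothetical sketch, not the Perception implementation: the `SegmentationLookup` class and the idea of feeding it (label, R, G, B) tuples read from the configuration are assumptions. Because the configuration file is not validated, the sketch rejects two labels that share a color, since such pixels would be ambiguous.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: resolve semantic segmentation pixel colors to labels.
public static class SegmentationLookup
{
    // Builds a color -> label map. Dictionary.Add throws ArgumentException if
    // two labels share a color, surfacing an ambiguous configuration early.
    public static Dictionary<(byte, byte, byte), string> Build(
        IEnumerable<(string label, byte r, byte g, byte b)> entries)
    {
        var lookup = new Dictionary<(byte, byte, byte), string>();
        foreach (var (label, r, g, b) in entries)
            lookup.Add((r, g, b), label);
        return lookup;
    }

    // Returns the label for a pixel color, or null if the color is not in the map.
    public static string LabelForPixel(Dictionary<(byte, byte, byte), string> lookup, byte r, byte g, byte b)
        => lookup.TryGetValue((r, g, b), out var label) ? label : null;
}
```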

Note: the labeling configuration file is not validated and must be managed by the developer.

Many labelers require a `Labeling Configuration` asset.

This file specifies a list of all labels to be captured in the dataset for a labeler, along with extra information used by the various labelers.

Generally, algorithm testing and training requires a single label on an asset for proper identification (“chair”, “table”, “door”, “window”, etc.). In Unity SynthDet, a labeling hierarchy is used to identify assets at a higher level and/or more granularly.

Generally, algorithm testing and training requires a single label on an asset for proper identification, such as “chair”, “table”, or “door”. To maximize asset reuse, however, it is useful to give each object multiple labels in a hierarchy.

Example

An asset representing a box of Rice Krispies cereal is labeled as: food\cereal\kellogs\ricekrispies

“food” - type

“cereal” - subtype

“kellogs” - main descriptor

“ricekrispies” - sub descriptor

For example:

An asset representing a box of Rice Krispies cereal could be labeled as `food\cereal\kellogs\ricekrispies`

* “food” - type
* “cereal” - subtype
* “kellogs” - main descriptor
* “ricekrispies” - sub descriptor

If the goal of the algorithm is to identify all objects in a scene that are “food”, that label is available and can be used. Conversely, if the goal is to identify only Rice Krispies cereal within a scene, that label is also available. Depending on the goal of the algorithm, any mix of labels in the hierarchy can be used at the discretion of the developer.

If the goal of the algorithm is to identify all objects in a scene that are “food”, that label is available and can be used. Conversely, if the goal is to identify only Rice Krispies cereal within a scene, that label is also available. Depending on the goal of the algorithm, any mix of labels in the hierarchy can be used.
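
Selecting objects at a chosen level of such a hierarchy can be sketched as follows. The sketch assumes the hierarchy is written as a single backslash-separated string, as in the Rice Krispies example. In SynthDet the levels are separate Elements, and the same index comparison applies to them. `LabelHierarchy` is a hypothetical name.

```csharp
// Hypothetical helper for hierarchical labels such as @"food\cereal\kellogs\ricekrispies".
public static class LabelHierarchy
{
    // Splits the label into its levels: type, subtype, main descriptor, sub descriptor.
    public static string[] Levels(string hierarchicalLabel) => hierarchicalLabel.Split('\\');

    // True when the label has the given category at the given level. Level 0 with
    // "food" selects every food item; level 3 with "ricekrispies" selects one product.
    public static bool IsInCategory(string hierarchicalLabel, int level, string category)
    {
        var levels = Levels(hierarchicalLabel);
        return level >= 0 && level < levels.Length && levels[level] == category;
    }
}
```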

# Setup for local development

* Clone the [Perception](https://github.com/Unity-Technologies/com.unity.perception) repository
* Install and use the latest [2019.3 Unity Editor](https://unity.com/releases/2019-3)

## Setting up a Project

Below are two options for getting started with the Perception package. Option 1 is opening the existing test projects in the repository. Option 2 is creating a new Unity project and integrating the Perception package.

### Option 1: PerceptionHDRP & PerceptionURP Projects

The repository includes two projects for local development in the `TestProjects` folder, one set up for HDRP and the other for URP. You can open these with the Unity Editor you installed in the setup instructions.

<img src="images/TestProjects.PNG" align="middle"/>

### Option 2: Create a new Project

This option walks through creating a new project and then adding the Perception SDK package to it for development use.

# Installing the Perception package in your project

You can also set up a new or existing project to use Perception.

#### Create a new project

1. Create a new HDRP project or open an existing project
    1. A new HDRP project can be created using the HDRP template
2. Back in the Unity Editor, go to Window -> Package Manager
    1. Add the High Definition RP package, version 7.1.2 or later, from the packages list
    2. In the Package Manager window, find and click the ***+*** button in the upper left-hand corner of the window
    3. Select the ***add package from disk*** option
    4. Navigate to the com.unity.perception folder in your cloned repository and select the package.json file
3. Once you have a project with the Perception SDK installed, you can move on to the Getting Started walkthrough

1. Clone the [Perception](https://github.com/Unity-Technologies/com.unity.perception) repository
2. Install the latest [2019.3 Unity Editor](https://unity.com/releases/2019-3)
3. Create a new HDRP or URP project, or open an existing project
4. Open `Window` -> `Package Manager`
    1. In the Package Manager window, find and click the ***+*** button in the upper left-hand corner of the window
    2. Select the ***add package from disk*** option
    3. Navigate to the com.unity.perception folder in your cloned repository and select the package.json file

Once completed, you can move on to the getting started steps: click [here](Documentation~/GettingStarted.md) to start project setup.

Once completed, you can continue with the [getting started steps](GettingStarted.md).

# About the Perception SDK

com.unity.perception provides a toolkit for generating large-scale datasets for perception-based machine learning training and validation. It is focused on a handful of camera-based use cases for now and will ultimately expand to other forms of sensors and machine learning tasks.

<img src="images/banner2.PNG" align="middle"/>

# Technical details

## Requirements

# Unity Perception package (com.unity.perception)

The Perception package provides a toolkit for generating large-scale datasets for perception-based machine learning training and validation. It is focused on capturing ground truth for camera-based use cases for now and will ultimately expand to other forms of sensors and machine learning tasks.

This version of _Perception_ is compatible with Unity Editor 2019.3 and later.

> The Perception package is in active development. Its features and API are subject to significant change as development progresses.

[Installation instructions](SetupSteps.md)

[Setting up your first perception scene](GettingStarted.md)

|Feature|Description|
|---|---|
|Ground Truth|Captures semantic segmentation, bounding boxes, and other forms of ground truth.|
|Labeling|MonoBehaviour which marks an object and its descendants with a set of labels|
|Labeling Configuration|Asset which defines a taxonomy of labels used for ground truth generation|
|Perception Camera|Captures RGB images and ground truth on a Unity Camera|

|Feature|Description|
|---|---|
|[Labeling](GroundTruth-Labeling.md)|Component which marks a GameObject and its descendants with a set of labels|
|[Labeling Configuration](GroundTruth-Labeling.md#LabelingConfiguration)|Asset which defines a taxonomy of labels for ground truth generation|
|[Perception Camera](PerceptionCamera.md)|Captures RGB images and ground truth from a [Camera](https://docs.unity3d.com/Manual/class-Camera.html)|
|[SimulationManager](SimulationManager.md)|Ensures sensors are triggered at proper rates and accepts data for the JSON dataset|

fileFormatVersion: 2
guid: c9dacfa32295429db4916fd3ba0a3b40
timeCreated: 1591367506

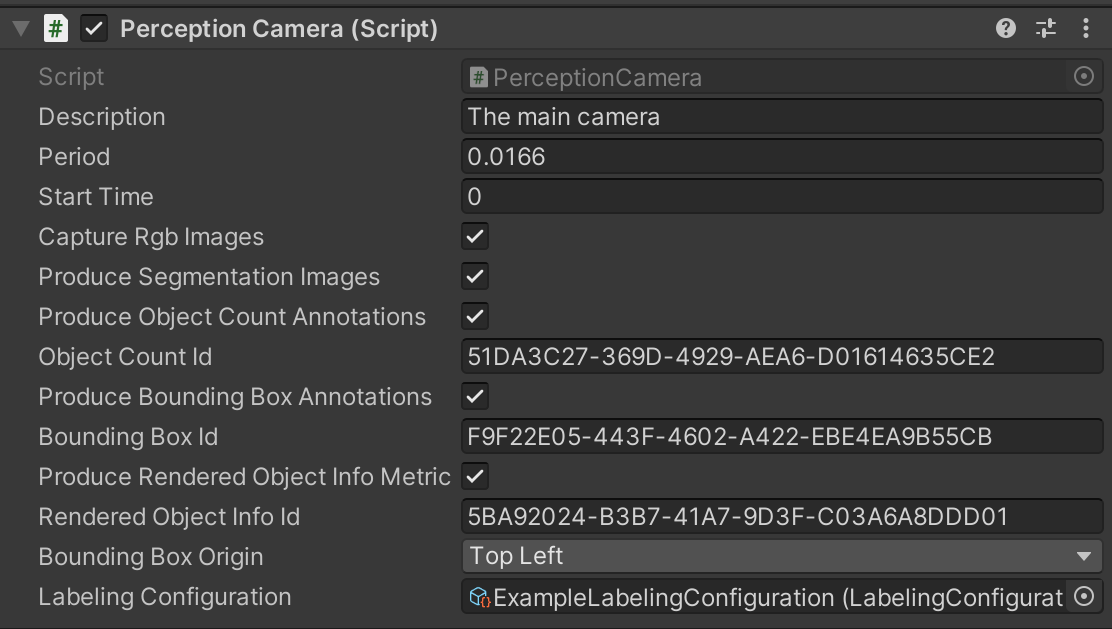

# The Perception Camera component

The Perception Camera component ensures that the attached [Camera](https://docs.unity3d.com/Manual/class-Camera.html) runs at deterministic rates and captures RGB images and other Camera-related ground truth to the [JSON dataset](Schema/Synthetic_Dataset_Schema.md) using [SimulationManager](SimulationManager.md). It supports HDRP and URP.

<img src="images/PerceptionCamera.png" align="middle"/>

## Properties

| Property | Function |
|--|--|
| Description | A description of the camera to be registered in the JSON dataset. |
| Period | The amount of simulation time, in seconds, between frames for this camera. For more on sensor scheduling, see [SimulationManager](SimulationManager.md). |
| Start Time | The simulation time at which to run the first frame. This time offsets the period, which is useful for running multiple cameras at the right times relative to each other. |
| Capture Rgb Images | When this is checked, RGB images are captured as PNG files in the dataset each frame. |
| Produce Segmentation Images | When this is checked at startup, semantic segmentation images are captured as PNG files in the dataset each frame. Pixel colors are derived from the [Labeling](GroundTruth-Labeling.md) components attached to the GameObjects and the Labeling Configuration specified on the Perception Camera. |
| Produce Object Count Annotations | When this is checked at startup, a metric reporting object counts for each label in the Labeling Configuration is added to the dataset each frame. |
| Object Count Id | The ID to use in the dataset for the Object Count metric. |
| Produce Bounding Box Annotations | When this is checked at startup, an annotation reporting the 2D bounding box of each labeled GameObject is added to the dataset each frame. |
| Bounding Box Id | The ID to use in the dataset for the Bounding Box annotation. |
| Produce Rendered Object Info Metric | When this is checked at startup, a metric reporting the visible pixels, instance ID, and resolved label of each object is added to the dataset each frame. Labels are resolved using the Labeling Configuration. |
| Rendered Object Info Id | The ID to use in the dataset for the Rendered Object Info metric. |
| Bounding Box Origin | Whether the X/Y values reported for bounding boxes are measured from the bottom-left or the top-left of the frame. |
| Labeling Configuration | The Labeling Configuration used to resolve each object to a single label, label ID, or semantic segmentation pixel value in the ground truth generated by this Perception Camera. |
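
Switching between the two Bounding Box Origin conventions only changes the Y value. The sketch below assumes that X/Y is the box corner nearest the chosen origin and that all values are in pixels. `BoundingBoxOrigin.FlipY` is a hypothetical helper, not part of the package.

```csharp
// Hypothetical helper: converts a bounding box's Y value between a top-left
// image origin and a bottom-left image origin. X is unchanged, and applying
// the function twice returns the original value.
public static class BoundingBoxOrigin
{
    public static float FlipY(float y, float boxHeight, float imageHeight) => imageHeight - y - boxHeight;
}
```

Consider a 20-pixel-tall box in a 100-pixel-tall image whose top edge is 10 pixels below the top of the image (y = 10 with a top-left origin). Its bottom edge is 70 pixels above the bottom of the image, so `FlipY(10, 20, 100)` returns 70.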

# SimulationManager

`SimulationManager` tracks egos, sensors, annotations, and metrics, combining them into a unified [JSON-based dataset](Schema/Synthetic_Dataset_Schema.md) on disk. It also controls the simulation time elapsed per frame to accommodate the active sensors.

## Sensor scheduling

While sensors are registered, `SimulationManager` ensures that frame timing is deterministic and that frames run at the appropriate simulation times to let each sensor run at its own rate.

Using [Time.captureDeltaTime](https://docs.unity3d.com/ScriptReference/Time-captureDeltaTime.html), it also decouples wall-clock time from simulation time, allowing the simulation to run as fast as possible.
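
The scheduling idea can be modeled outside Unity. On each frame, simulation time advances directly to the next time any sensor is due. Every frame is therefore used by at least one sensor, and the sequence of frame durations depends only on the sensors' periods and start times. This is a hypothetical sketch, not the `SimulationManager` source. `ScheduledSensor` and `Scheduler.FrameDeltas` are illustrative names. Time is kept in integer ticks (for example, milliseconds) so that repeated additions cannot drift the way floating-point seconds can.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of period-based sensor scheduling; these names are
// illustrative and are not the Perception package's API.
public sealed class ScheduledSensor
{
    public ScheduledSensor(long periodTicks, long startTicks)
    {
        if (periodTicks <= 0) throw new ArgumentOutOfRangeException(nameof(periodTicks));
        PeriodTicks = periodTicks;
        NextCaptureTicks = startTicks;
    }

    public long PeriodTicks { get; }
    public long NextCaptureTicks { get; private set; }

    public void Advance() => NextCaptureTicks += PeriodTicks;
}

public static class Scheduler
{
    // Returns the simulation-time step, in ticks, taken before each of the next
    // frameCount frames. Every frame lands exactly on at least one sensor's
    // capture time. Requires a non-empty sensor list.
    public static List<long> FrameDeltas(IReadOnlyList<ScheduledSensor> sensors, long currentTicks, int frameCount)
    {
        var deltas = new List<long>();
        for (int i = 0; i < frameCount; i++)
        {
            long next = sensors.Min(s => s.NextCaptureTicks);
            deltas.Add(next - currentTicks);
            currentTicks = next;

            // Every sensor due at this time captures on this frame.
            foreach (var sensor in sensors)
                if (sensor.NextCaptureTicks == next)
                    sensor.Advance();
        }
        return deltas;
    }
}
```

Suppose one sensor captures every 50 ticks starting at 0, and another captures every 20 ticks starting at 10. The first five deltas are 0, 10, 20, 20, 20. At tick 50, both sensors capture on the same frame. In a Unity script, each delta could be converted to seconds and assigned to `Time.captureDeltaTime` before the frame. That wiring is an assumption of this sketch.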

## Custom sensors

Custom sensors can be registered using `SimulationManager.RegisterSensor()`. The `period` passed in at registration time determines how often, in simulation time, frames are scheduled for the sensor. Each frame, the sensor implementation checks `ShouldCaptureThisFrame` on the returned `SensorHandle` to determine whether it is time to perform a capture. In each of those frames, it calls `SensorHandle.ReportCapture` to report the sensor's state to the dataset.
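
The per-frame flow of a custom sensor can be sketched without Unity. The interface below is a hypothetical stand-in that models only the two `SensorHandle` members named above. The real `ReportCapture` may take different parameters. `DepthSensorSketch`, `FakeSensorHandle`, and the file names are illustrative assumptions.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the SensorHandle members described above.
// The real SensorHandle.ReportCapture may take different parameters.
public interface ISensorHandleSketch
{
    bool ShouldCaptureThisFrame { get; }
    void ReportCapture(string capturePath);
}

// Hypothetical custom sensor that only captures on frames scheduled for it.
public sealed class DepthSensorSketch
{
    readonly ISensorHandleSketch handle;
    int captureCount;

    public DepthSensorSketch(ISensorHandleSketch handle) => this.handle = handle;

    // Call once per frame, as a MonoBehaviour would from Update().
    public void OnFrame()
    {
        if (!handle.ShouldCaptureThisFrame)
            return; // nothing to capture for this sensor on this frame

        // Hypothetical output file name; a real sensor would write its data here.
        string path = $"depth_{captureCount++}.png";
        handle.ReportCapture(path);
    }
}

// Test double that records every reported capture.
public sealed class FakeSensorHandle : ISensorHandleSketch
{
    public bool ShouldCaptureThisFrame { get; set; }
    public List<string> Reported { get; } = new List<string>();
    public void ReportCapture(string capturePath) => Reported.Add(capturePath);
}
```

Keeping the handle behind an interface lets the capture decision be exercised with a fake, as the test double shows, before it is wired to a real registered sensor.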

## Custom annotations and metrics

In addition to the common annotations and metrics produced by [PerceptionCamera](PerceptionCamera.md), scripts can produce their own via `SimulationManager`. Annotation and metric definitions must first be registered using `SimulationManager.RegisterAnnotationDefinition()` or `SimulationManager.RegisterMetricDefinition()`. These return `AnnotationDefinition` and `MetricDefinition` instances, which can then be used to report values at runtime.

Annotations and metrics are always associated with the frame they are reported in. They may also be associated with a specific sensor by using the `Report*` methods on `SensorHandle`.

### Example

<!-- If you change this, change it in PerceptionURP/Assets/ExampleScripts/CustomAnnotationAndMetricReporter.cs as well -->

```csharp
using System;
using UnityEngine;
using UnityEngine.Perception.GroundTruth;

[RequireComponent(typeof(PerceptionCamera))]
public class CustomAnnotationAndMetricReporter : MonoBehaviour
{
    public GameObject light;
    public GameObject target;

    MetricDefinition lightMetricDefinition;
    AnnotationDefinition boundingBoxAnnotationDefinition;

    public void Start()
    {
        //Metrics and annotations are registered up-front
        lightMetricDefinition = SimulationManager.RegisterMetricDefinition(
            "Light position",
            "The world-space position of the light",
            Guid.Parse("1F6BFF46-F884-4CC5-A878-DB987278FE35"));
        boundingBoxAnnotationDefinition = SimulationManager.RegisterAnnotationDefinition(
            "Target bounding box",
            "The position of the target in the camera's local space",
            id: Guid.Parse("C0B4A22C-0420-4D9F-BAFC-954B8F7B35A7"));
    }

    public void Update()
    {
        //Report the light's position by manually creating the json array string.
        var lightPos = light.transform.position;
        SimulationManager.ReportMetric(lightMetricDefinition,
            $@"[{{ ""x"": {lightPos.x}, ""y"": {lightPos.y}, ""z"": {lightPos.z} }}]");

        //compute the location of the object in the camera's local space.
        //InverseTransformPoint applies the camera's translation; multiplying the position
        //by worldToLocalMatrix directly would convert it to a Vector4 with w = 0 and drop it.
        Vector3 targetPos = transform.InverseTransformPoint(target.transform.position);

        //Report using the PerceptionCamera's SensorHandle if scheduled this frame
        var sensorHandle = GetComponent<PerceptionCamera>().SensorHandle;
        if (sensorHandle.ShouldCaptureThisFrame)
        {
            sensorHandle.ReportAnnotationValues(
                boundingBoxAnnotationDefinition,
                new[] { targetPos });
        }
    }
}

// Example metric that is added each frame in the dataset:
// {
//   "capture_id": null,
//   "annotation_id": null,
//   "sequence_id": "9768671e-acea-4c9e-a670-0f2dba5afe12",
//   "step": 1,
//   "metric_definition": "1f6bff46-f884-4cc5-a878-db987278fe35",
//   "values": [{ "x": 96.1856, "y": 192.676, "z": -193.8386 }]
// },

// Example annotation that is added to each capture in the dataset:
// {
//   "id": "33f5a3aa-3e5e-48f1-8339-6cbd64ed4562",
//   "annotation_definition": "c0b4a22c-0420-4d9f-bafc-954b8f7b35a7",
//   "values": [
//     [
//       -1.03097284,
//       0.07265166,
//       -6.318692
//     ]
//   ]
// }
```
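
The example above builds the metric's JSON with string interpolation, which formats floats using the current culture. On a machine whose culture uses a comma as the decimal separator, a value such as 96.1856 would be written as 96,1856, and the JSON would be invalid. A culture-independent version of that formatting step can be sketched as follows. `MetricJson.PositionArray` is a hypothetical helper name.

```csharp
using System.Globalization;

// Hypothetical helper: formats a position as the one-element JSON array used
// by the "Light position" metric, independent of the machine's culture.
public static class MetricJson
{
    public static string PositionArray(float x, float y, float z) =>
        string.Format(CultureInfo.InvariantCulture,
            "[{{ \"x\": {0}, \"y\": {1}, \"z\": {2} }}]", x, y, z);
}
```

Inside `Update()`, the call would then read `SimulationManager.ReportMetric(lightMetricDefinition, MetricJson.PositionArray(lightPos.x, lightPos.y, lightPos.z));`, using the same `ReportMetric` overload as the example.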

using System;
using UnityEngine;
using UnityEngine.Perception.GroundTruth;

[RequireComponent(typeof(PerceptionCamera))]
public class CustomAnnotationAndMetricReporter : MonoBehaviour
{
    public GameObject light;
    public GameObject target;

    MetricDefinition lightMetricDefinition;
    AnnotationDefinition boundingBoxAnnotationDefinition;

    public void Start()
    {
        //Metrics and annotations are registered up-front
        lightMetricDefinition = SimulationManager.RegisterMetricDefinition(
            "Light position",
            "The world-space position of the light",
            Guid.Parse("1F6BFF46-F884-4CC5-A878-DB987278FE35"));
        boundingBoxAnnotationDefinition = SimulationManager.RegisterAnnotationDefinition(
            "Target bounding box",
            "The position of the target in the camera's local space",
            id: Guid.Parse("C0B4A22C-0420-4D9F-BAFC-954B8F7B35A7"));
    }

    public void Update()
    {
        //Report the light's position by manually creating the json array string.
        var lightPos = light.transform.position;
        SimulationManager.ReportMetric(lightMetricDefinition,
            $@"[{{ ""x"": {lightPos.x}, ""y"": {lightPos.y}, ""z"": {lightPos.z} }}]");

        //compute the location of the object in the camera's local space.
        //InverseTransformPoint applies the camera's translation; multiplying the position
        //by worldToLocalMatrix directly would convert it to a Vector4 with w = 0 and drop it.
        Vector3 targetPos = transform.InverseTransformPoint(target.transform.position);

        //Report using the PerceptionCamera's SensorHandle if scheduled this frame
        var sensorHandle = GetComponent<PerceptionCamera>().SensorHandle;
        if (sensorHandle.ShouldCaptureThisFrame)
        {
            sensorHandle.ReportAnnotationValues(
                boundingBoxAnnotationDefinition,
                new[] { targetPos });
        }
    }
}

// Example metric that is added each frame in the dataset:
// {
//   "capture_id": null,
//   "annotation_id": null,
//   "sequence_id": "9768671e-acea-4c9e-a670-0f2dba5afe12",
//   "step": 1,
//   "metric_definition": "1f6bff46-f884-4cc5-a878-db987278fe35",
//   "values": [{ "x": 96.1856, "y": 192.676, "z": -193.8386 }]
// },

// Example annotation that is added to each capture in the dataset:
// {
//   "id": "33f5a3aa-3e5e-48f1-8339-6cbd64ed4562",
//   "annotation_definition": "c0b4a22c-0420-4d9f-bafc-954b8f7b35a7",
//   "values": [
//     [
//       -1.03097284,
//       0.07265166,
//       -6.318692
//     ]
//   ]
// }

fileFormatVersion: 2
guid: 7c51d9f2c5784bb4aee3fdf021966e14
timeCreated: 1591367532
