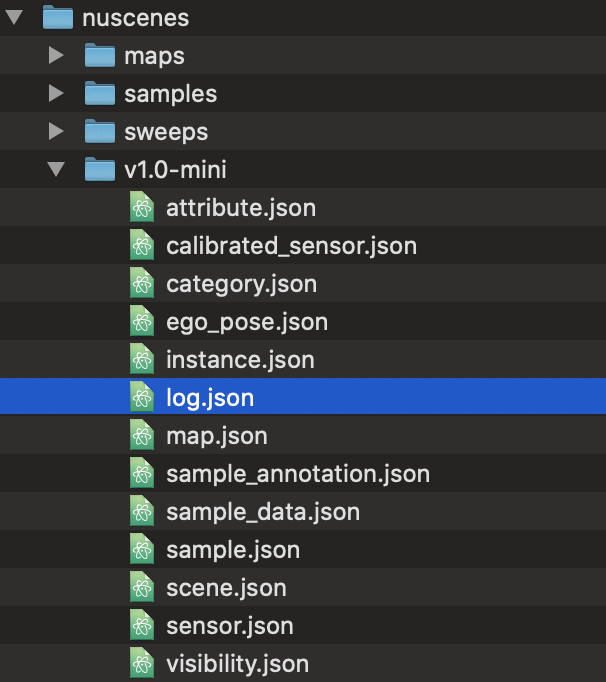

Current commit

35110fd1

33 files changed, including 4481 insertions and 28 deletions

| Changes | File |
| --- | --- |
| 2 | `.yamato/upm-ci-full.yml` |
| 54 | `com.unity.perception/Runtime/GroundTruth/PerceptionCamera.cs` |
| 2 | `com.unity.perception/Tests/Runtime/GroundTruthTests/PerceptionCameraIntegrationTests.cs` |
| 386 | `com.unity.perception/Documentation~/Schema/Synthetic_Dataset_Schema.md` |
| 61 | `com.unity.perception/Documentation~/Schema/captures_steps_timestamps.png` |
| 950 | `com.unity.perception/Documentation~/Schema/image_0.png` |
| 70 | `com.unity.perception/Documentation~/Schema/image_2.png` |
| 77 | `com.unity.perception/Documentation~/Schema/image_3.png` |
| 63 | `com.unity.perception/Documentation~/Schema/image_4.png` |
| 241 | `com.unity.perception/Documentation~/Schema/image_5.png` |
| 8 | `com.unity.perception/Documentation~/Schema/mock_data/calib000000.txt` |
| 65 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/annotation_definitions.json` |
| 41 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/captures_000.json` |
| 142 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/captures_001.json` |
| 9 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/egos.json` |
| 15 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/metric_definitions.json` |
| 40 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/metrics_000.json` |
| 17 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/Dataset/sensors.json` |
| 15 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/README.md` |
| 0 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/annotations/instance_segmantation_000.png` |
| 0 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/annotations/lidar_semantic_segmentation_000.pcd` |
| 188 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/annotations/sementic_segmantation_000.png` |
| 154 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/annotations/sementic_segmantation_001.png` |
| 962 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/captures/camera_000.png` |
| 933 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/captures/camera_001.png` |
| 0 | `com.unity.perception/Documentation~/Schema/mock_data/simrun/captures/lidar_000.pcd` |
| 14 | `com.unity.perception/Documentation~/Schema/mock_data/simrun_manifest.csv` |

# Synthetic Dataset Schema

Synthetic datasets generated by the Perception package are captured in JSON.
This document describes the schema used to store the data.
This schema provides a generic structure for simulation output which can be easily consumed to show statistics or train machine learning models.
Synthetic datasets are composed of sensor captures, annotations, and metrics, e.g. images and 2D bounding box labels.
This data comes in various forms and might be captured by different sensors and annotation mechanisms.
Multiple sensors may produce captures at different frequencies.
The dataset organizes all of the data into a single set of JSON files.

## Goals

* It should include captured sensor data and annotations in one well-defined format.
This allows us to maintain a contract between the Perception package and the dataset consumers (e.g. statistics and ML modeling).

* It should maintain relationships between captured data and annotations that were taken by the same sensor at the same time.
It should also maintain relationships between consecutive captures for time-related perception tasks (e.g. object tracking).

* It should support streaming data, since the data will be created on the fly during the simulation from multiple processes or cloud instances.

* It should be able to easily support new types of sensors and annotations.

* It assumes the synthetic dataset is captured in a directory structure, but does not make assumptions about transmission or storage of the dataset on a particular database management system.

## Terminology

* **simulation**: one or more executions of a Unity player build, potentially with different parameterization.

* **capture**: a full rendering process of a Unity sensor which saved the rendered result to data files (e.g. PNG, [pcd](https://www.online-convert.com/file-format/pcd), etc.).

* **sequence**: a time-ordered series of captures generated by a simulation.

* **annotation**: data (e.g. bounding boxes or semantic segmentation) recorded to describe a particular capture at the same timestamp.
A capture might include multiple types of annotations.

* **step**: id for data-producing frames in the simulation.

* **ego**: a frame of reference for a collection of sensors (camera/LIDAR/radar) attached to it.
For example, for a robot with two cameras attached, the robot would be the ego containing the two sensors.

* **label**: a string token (e.g. car, human.adult, etc.) that represents a semantic type, or class.
One GameObject might have multiple labels used for different annotation purposes.
For more on adding labels to GameObjects, see [labeling](../GroundTruth-Labeling.md).

* **coordinate systems**: there are three coordinate systems used in the schema:

  * **global coordinate system**: coordinates with respect to the global origin in Unity.

  * **ego coordinate system**: coordinates with respect to an ego object.
  Typically, this refers to an object moving in the Unity scene.

  * **sensor coordinate system**: coordinates with respect to a sensor.
  This is useful for ML model training for a single sensor, and can be obtained by transforming from the global and ego coordinate systems.
  Raw values of object pose in the sensor coordinate system are rarely recorded in simulation.

## Design

The schema is based on the [nuScenes data format](#heading=h.ng38ehm32lgs).
The main difference between this schema and nuScenes is that we use **document based schema design** instead of **relational database schema design**.
This means that instead of requiring multiple id-based "joins" to explore the data, data is nested and sometimes duplicated for ease of consumption.

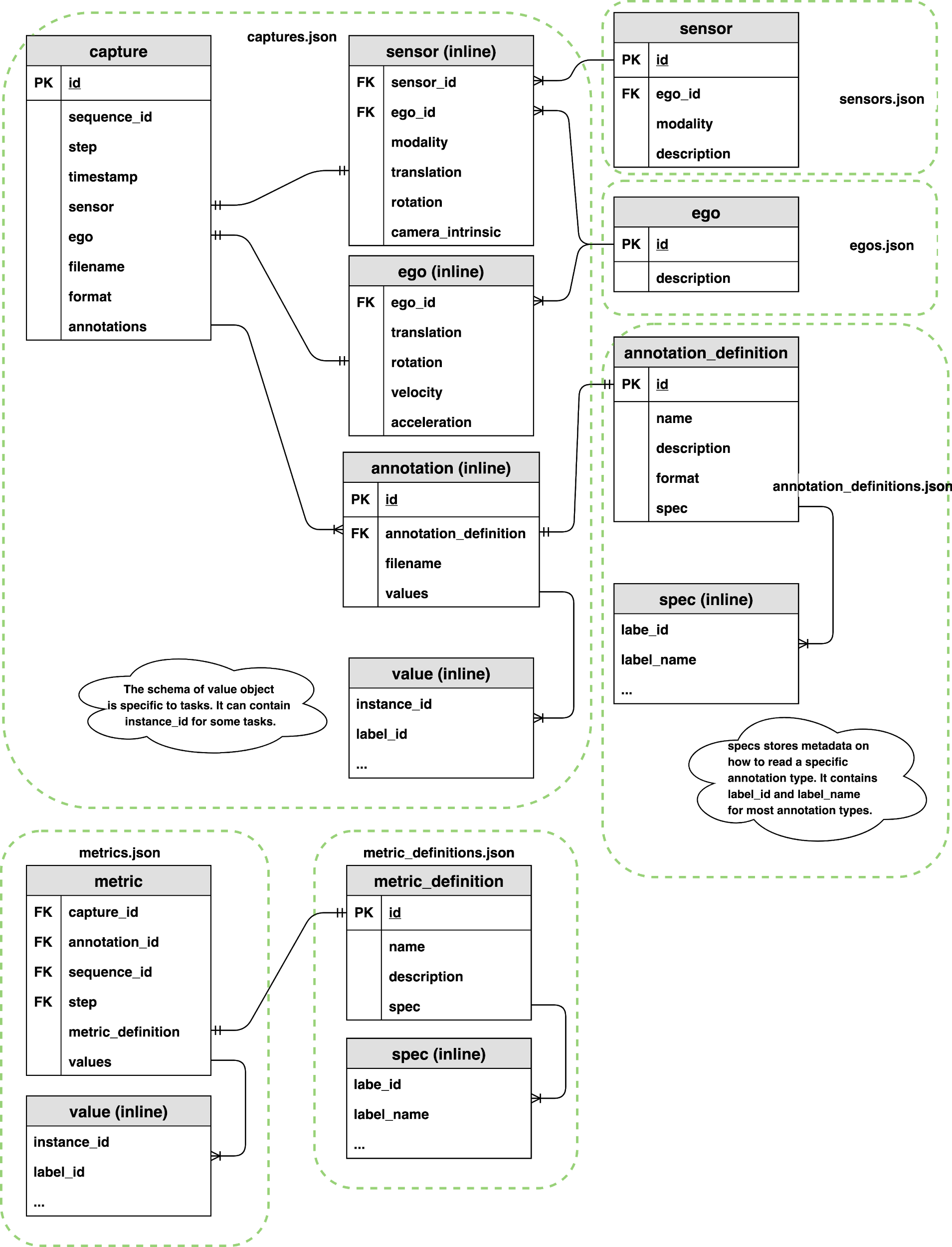

## Components

### capture

A capture record contains the relationship between a captured file, a collection of annotations, and extra metadata that describes the state of the sensor.

```
capture {
  id: <str> -- UUID of the capture.
  sequence_id: <str> -- UUID of the sequence.
  step: <int> -- The index of capture in the sequence. This field is used to order captures within a sequence.
  timestamp: <int> -- Timestamp in milliseconds since the sequence started.
  sensor: <obj> -- Attributes of the sensor. See below.
  ego: <obj> -- Ego pose of this sample. See below.
  filename: <str> -- A single file that stores sensor captured data. (e.g. camera_000.png, points_123.pcd, etc.)
  format: <str> -- The format of the sensor captured file. (e.g. png, pcd, etc.)
  annotations: [<obj>,...] [optional] -- List of the annotations in this capture. See below.
}
```
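
As a rough illustration of the record above, the following Python sketch checks a parsed capture for the non-optional keys. The helper name `missing_capture_keys` is hypothetical and not part of the Perception package; only the key names come from the schema.

```python
# Keys that the capture schema lists without an [optional] marker.
REQUIRED_CAPTURE_KEYS = {
    "id", "sequence_id", "step", "timestamp",
    "sensor", "ego", "filename", "format",
}


def missing_capture_keys(capture):
    """Return the required keys absent from a parsed capture record (hypothetical helper)."""
    return sorted(REQUIRED_CAPTURE_KEYS - capture.keys())


# A deliberately incomplete record, for illustration only.
partial_capture = {
    "id": "e8b44709-dddf-439d-94d2-975460924903",
    "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
    "step": 1,
    "timestamp": 1,
    "filename": "captures/camera_000.png",
    "format": "PNG",
}
missing = missing_capture_keys(partial_capture)
print(missing)
```

`annotations` is left out of the required set because the schema marks it as optional.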

#### sequence, step and timestamp

In some use cases, two consecutive captures might not be related in time during simulation.
For example, we might generate randomly placed objects in a scene for X steps of simulation.
In this case, sequence, step and timestamp are irrelevant for the captured data.
We can add a default value to the sequence, step and timestamp for these types of captures.

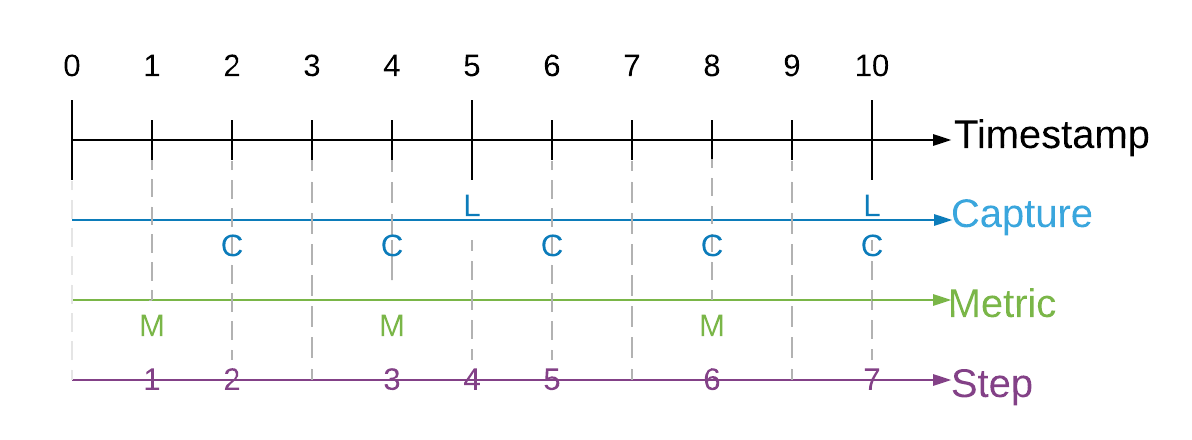

In cases where we need to maintain a time-ordered relationship between captures (e.g. a sequence of camera captures in a 10 second video) and [metrics](#heading=h.9mpbqqwxedti), we need to add a sequence, step and timestamp to the captures.
A sequence represents a collection of time-ordered captures and annotations.
Timestamps refer to the simulation wall clock in milliseconds since the sequence started.
Steps are integer values which increase when a capture or metric event is triggered.
We cannot use timestamps to synchronize between two different events because timestamps are floats and therefore make poor indices.
Instead, we use a "step" counter which makes it easy to associate metrics and captures that occur at the same time.
Below is an illustration of how captures, metrics, timestamps and steps are synchronized.

Since each sensor might trigger captures at different frequencies, a single timestamp might contain 0 to N captures, where N is the total number of sensors included in this scene.
If two sensors are captured at the same timestamp, they should share the same sequence, step and timestamp value.

Physical camera sensors require some time to finish exposure.
A physical LIDAR sensor requires some time to finish one 360° scan.
How do we define the timestamp of the sample in simulation?
Following the nuScenes sensor [synchronization](https://www.nuscenes.org/data-collection) strategy, we define a reference line from the ego origin along the ego's "forward" traveling direction.
The timestamp of the LIDAR scan is the time when the full rotation of the current LIDAR frame is achieved.
A full rotation is defined as the 360° sweep between two consecutive times passing the reference line.
The timestamp of the camera is the exposure trigger time in simulation.
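
To make the role of the step counter concrete, here is a minimal Python sketch that indexes captures and metrics by `(sequence_id, step)`. The function name `group_by_step` and the short ids are illustrative assumptions; the field names follow the schema.

```python
from collections import defaultdict


def group_by_step(captures, metrics):
    """Index captures and metrics by (sequence_id, step) (hypothetical helper)."""
    events = defaultdict(lambda: {"captures": [], "metrics": []})
    for capture in captures:
        key = (capture["sequence_id"], capture["step"])
        events[key]["captures"].append(capture)
    for metric in metrics:
        # Metrics without a step (sequence-level metrics) are not tied to one event.
        if metric.get("step") is not None:
            key = (metric["sequence_id"], metric["step"])
            events[key]["metrics"].append(metric)
    return dict(events)


# Illustrative records: one camera capture at step 1, camera + LIDAR at step 2.
captures = [
    {"id": "c0", "sequence_id": "s0", "step": 1, "timestamp": 1},
    {"id": "c1", "sequence_id": "s0", "step": 2, "timestamp": 2},
    {"id": "c2", "sequence_id": "s0", "step": 2, "timestamp": 2},
]
metrics = [
    {"capture_id": "c1", "annotation_id": None, "sequence_id": "s0", "step": 2},
]
events = group_by_step(captures, metrics)
print(sorted(events))
```

Using the integer step as the key avoids comparing timestamps for equality.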

#### capture.ego

An ego record stores the ego status data when a sample is created.
It includes translation, rotation, velocity and acceleration (optional) of the ego.
The pose is with respect to the **global coordinate system** of a Unity scene.

```
ego {
  ego_id: <str> -- Foreign key pointing to ego.id.
  translation: <float, float, float> -- Position in meters: (x, y, z) with respect to the global coordinate system.
  rotation: <float, float, float, float> -- Orientation as quaternion: w, x, y, z.
  velocity: <float, float, float> -- Velocity in meters per second as v_x, v_y, v_z.
  acceleration: <float, float, float> [optional] -- Acceleration in meters per second^2 as a_x, a_y, a_z.
}
```

#### capture.sensor

A sensor record contains attributes of the sensor at the time of the capture.
Different sensor modalities may contain additional keys (e.g. field of view FOV for camera, beam density for LIDAR).

```
sensor {
  sensor_id: <str> -- Foreign key pointing to sensor.id.
  ego_id: <str> -- Foreign key pointing to ego.id.
  modality: <str> {camera, lidar, radar, sonar,...} -- Sensor modality.
  translation: <float, float, float> -- Position in meters: (x, y, z) with respect to the ego coordinate system. This is typically fixed during the simulation, but we can allow small variation for domain randomization.
  rotation: <float, float, float, float> -- Orientation as quaternion: (w, x, y, z) with respect to ego coordinate system. This is typically fixed during the simulation, but we can allow small variation for domain randomization.
  camera_intrinsic: <3x3 float matrix> [optional] -- Intrinsic camera calibration. Empty for sensors that are not cameras.

  # add arbitrary optional key-value pairs for sensor attributes
}
```

reference: [camera_intrinsic](https://www.mathworks.com/help/vision/ug/camera-calibration.html#bu0ni74)
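
Because the sensor pose is stored relative to the ego and the ego pose relative to the global origin, a consumer may want the sensor pose in global coordinates. The sketch below composes the two poses in Python. It assumes unit quaternions in (w, x, y, z) order and the standard Hamilton product; the function names are hypothetical, and the schema itself does not prescribe this computation.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_rotate(q, v):
    """Rotate vector v by unit quaternion q via q * (0, v) * conj(q)."""
    w, x, y, z = q
    conjugate = (w, -x, -y, -z)
    _, rx, ry, rz = quat_multiply(quat_multiply(q, (0.0, *v)), conjugate)
    return (rx, ry, rz)


def sensor_pose_in_global(ego, sensor):
    """Compose capture.ego and capture.sensor poses (assumes unit quaternions)."""
    offset = quat_rotate(ego["rotation"], sensor["translation"])
    translation = tuple(e + o for e, o in zip(ego["translation"], offset))
    rotation = quat_multiply(ego["rotation"], sensor["rotation"])
    return translation, rotation


# Illustrative values: ego rotated 90 degrees about z, sensor 1 m along ego x.
half = math.sqrt(0.5)
ego = {"translation": (10.0, 0.0, 0.0), "rotation": (half, 0.0, 0.0, half)}
sensor = {"translation": (1.0, 0.0, 0.0), "rotation": (1.0, 0.0, 0.0, 0.0)}
global_translation, global_rotation = sensor_pose_in_global(ego, sensor)
print(global_translation, global_rotation)
```

Quaternions that are not normalized would need to be normalized first for this composition to represent a pure rotation.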

#### capture.annotation

An annotation record contains the ground truth for a sensor either inline or in a separate file.
A single capture may contain many annotations.

```
annotation {
  id: <str> -- UUID of the annotation.
  annotation_definition: <int> -- Foreign key which points to an annotation_definition.id. See below.
  filename: <str> [optional] -- Path to a single file that stores annotations. (e.g. semantic_000.png etc.)
  values: [<obj>,...] [optional] -- List of objects that store annotation data (e.g. polygon, 2d bounding box, 3d bounding box, etc). The data should be processed according to a given annotation_definition.id.
}
```

#### Example annotation files

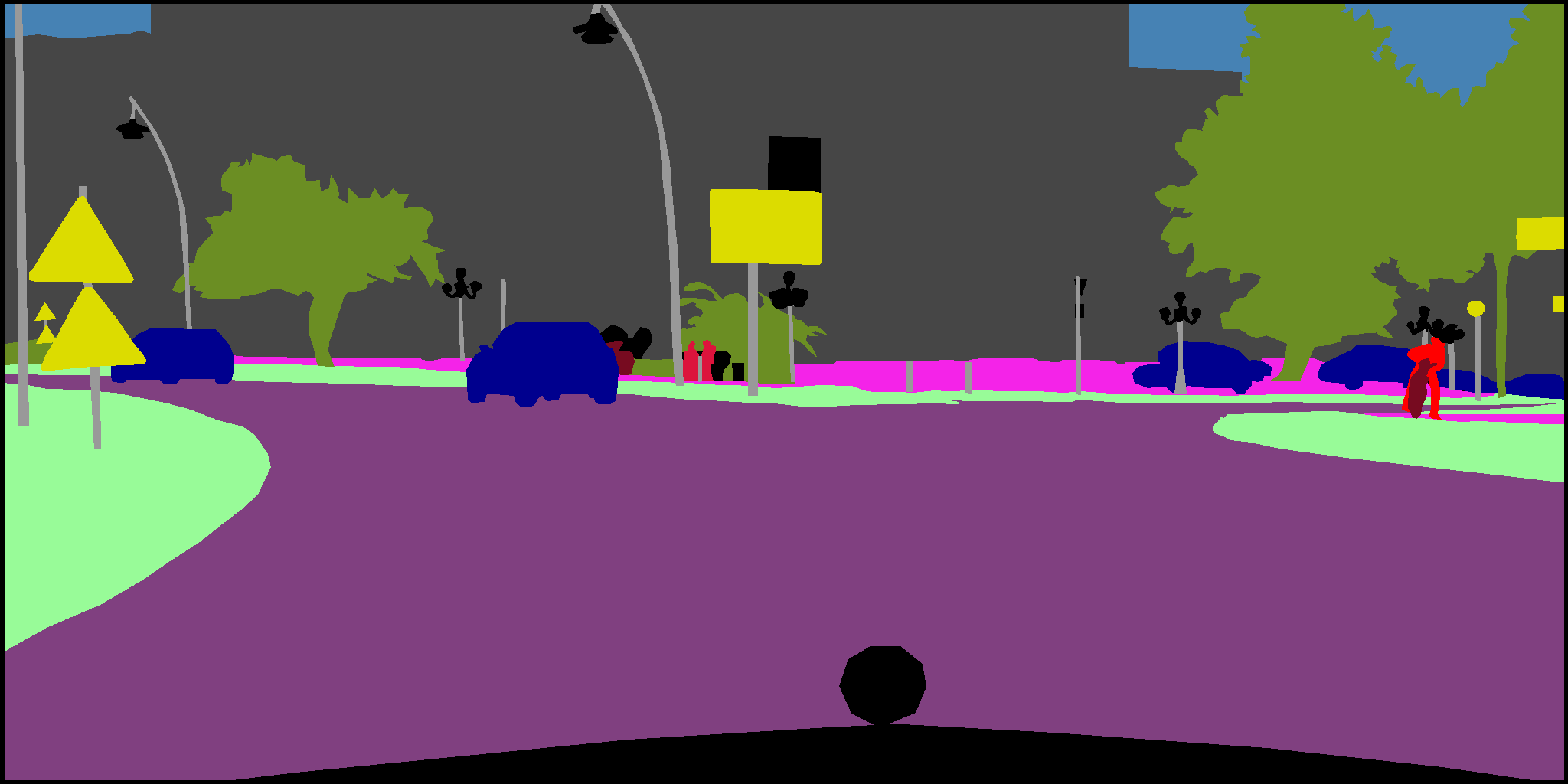

##### semantic segmentation - grayscale image

A grayscale PNG file that stores, at each pixel, the integer value of the labeled object (the label's pixel_value in the semantic segmentation [annotation spec](#annotation_definitions) reference table).
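
The mapping from pixel values back to labels can be sketched as follows. To stay dependency-free, the example assumes the grayscale PNG has already been decoded into a 2D list of integers; the function names and the tiny `pixels` array are illustrative only.

```python
from collections import Counter


def pixel_value_to_label(spec):
    """Build a pixel_value -> label_name lookup from an annotation_definition spec."""
    return {entry["pixel_value"]: entry["label_name"] for entry in spec}


def count_label_pixels(pixels, spec):
    """Count pixels per label in a decoded grayscale segmentation image."""
    lookup = pixel_value_to_label(spec)
    counts = Counter()
    for row in pixels:
        for value in row:
            # Values missing from the spec are grouped under "unknown".
            counts[lookup.get(value, "unknown")] += 1
    return dict(counts)


# Two spec entries and a 2x3 image, for illustration.
spec = [
    {"label_id": 8, "label_name": "road", "pixel_value": 0},
    {"label_id": 27, "label_name": "car", "pixel_value": 13},
]
pixels = [
    [0, 0, 13],
    [0, 13, 13],
]
label_counts = count_label_pixels(pixels, spec)
print(label_counts)
```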

#### capture.annotation.values

<!-- Not yet implemented annotations
##### instance segmentation - polygon

A json object that stores collections of polygons. Each polygon record maps a tuple of (instance, label) to a list of K pixel coordinates that form a polygon. This object can be directly stored in annotation.values

```
semantic_segmentation_polygon {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
  instance_id: <str> -- UUID of the instance.
  polygon: [<int, int>,...] -- List of points in pixel coordinates of the outer edge. Connecting these points in order should create a polygon that identifies the object.
}
```
-->

##### 2D bounding box

Each bounding box record maps a tuple of (instance, label) to a set of 4 variables (x, y, width, height) that draws a bounding box.
We follow the OpenCV 2D coordinate [system](https://github.com/vvvv/VL.OpenCV/wiki/Coordinate-system-conversions-between-OpenCV,-DirectX-and-vvvv#opencv) where the origin (x=0, y=0) is at the top left of the image.

```
bounding_box_2d {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
  instance_id: <str> -- UUID of the instance.
  x: <float> -- x coordinate of the upper left corner.
  y: <float> -- y coordinate of the upper left corner.
  width: <float> -- number of pixels in the x direction
  height: <float> -- number of pixels in the y direction
}
```
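
As a small worked example of the (x, y, width, height) convention, the Python sketch below converts a `bounding_box_2d` record to corner coordinates and computes the intersection over union of two boxes. The helper names are hypothetical, and the second box is made up for illustration.

```python
def to_corners(box):
    """Return (left, top, right, bottom) for a bounding_box_2d record."""
    return (box["x"], box["y"], box["x"] + box["width"], box["y"] + box["height"])


def iou(a, b):
    """Intersection over union of two bounding_box_2d records."""
    a_left, a_top, a_right, a_bottom = to_corners(a)
    b_left, b_top, b_right, b_bottom = to_corners(b)
    # With the origin at the top left, y grows downward, so bottom > top.
    overlap_w = max(0.0, min(a_right, b_right) - max(a_left, b_left))
    overlap_h = max(0.0, min(a_bottom, b_bottom) - max(a_top, b_top))
    intersection = overlap_w * overlap_h
    union = a["width"] * a["height"] + b["width"] * b["height"] - intersection
    return intersection / union if union > 0 else 0.0


car = {"label_id": 27, "label_name": "car", "x": 30, "y": 50, "width": 100, "height": 100}
shifted = {"label_id": 27, "label_name": "car", "x": 80, "y": 50, "width": 100, "height": 100}
print(to_corners(car), iou(car, shifted))
```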

<!-- Not yet implemented annotations

##### 3D bounding box

A json file that stores collections of 3D bounding boxes.
Each bounding box record maps a tuple of (instance, label) to translation, size and rotation that draws a 3D bounding box, as well as velocity and acceleration (optional) of the 3D bounding box.
All location data is given with respect to the **sensor coordinate system**.

```
bounding_box_3d {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
  instance_id: <str> -- UUID of the instance.
  translation: <float, float, float> -- 3d bounding box's center location in meters as center_x, center_y, center_z with respect to global coordinate system.
  size: <float, float, float> -- 3d bounding box size in meters as width, length, height.
  rotation: <float, float, float, float> -- 3d bounding box orientation as quaternion: w, x, y, z.
  velocity: <float, float, float> -- 3d bounding box velocity in meters per second as v_x, v_y, v_z.
  acceleration: <float, float, float> [optional] -- 3d bounding box acceleration in meters per second^2 as a_x, a_y, a_z.
}
```

#### instances (V2, WIP) TODO should we include this section?

Although we don't have a specific table that accounts for instances, it should be noted that instances should be checked against the following cases

* Consider cases for object tracking

* Consider cases not used for object tracking, so that instances do not need to be consistent across different captures/annotations.
How to support instance segmentation (maybe we need to use polygon instead of pixel color)

* Stored in values of annotation and metric values

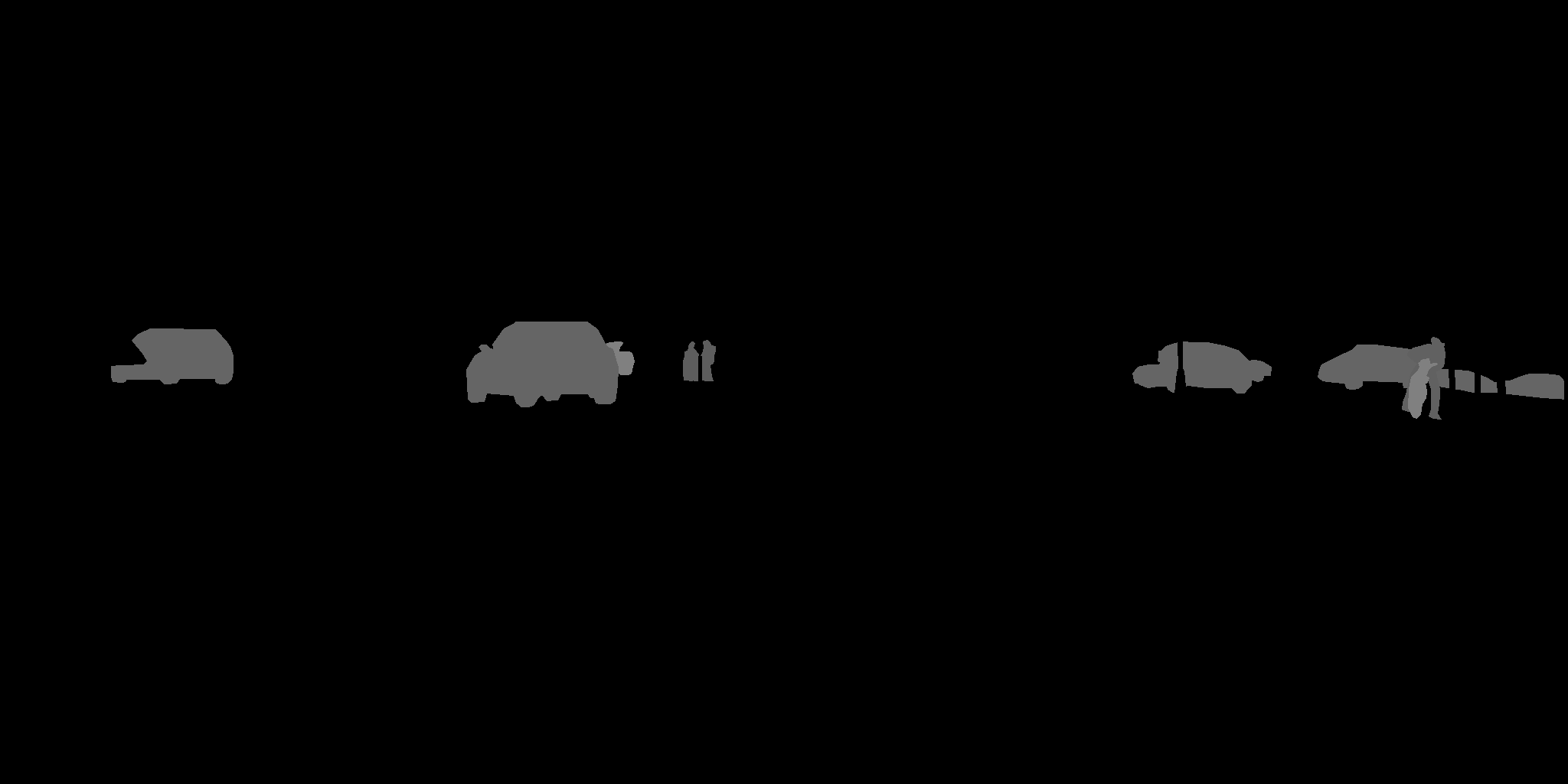

##### instance segmentation file - grayscale image (V2)

A grayscale PNG file that stores integer values of labeled instances at each pixel.

-->

### metrics

Metrics store extra metadata that can be used to describe a particular sequence, capture or annotation.
Metric records are stored as an arbitrary number (M) of key-value pairs. For a sequence metric, capture_id, annotation_id and step should be null.
For a capture metric, annotation_id can be null.
For an annotation metric, all four columns of sequence_id, capture_id, annotation_id and step are not null.

Metrics files might be generated in parallel from different simulation instances.

```
metric {
  capture_id: <str> -- Foreign key which points to capture.id.
  annotation_id: <str> -- Foreign key which points to annotation.id.
  sequence_id: <str> -- Foreign key which points to capture.sequence_id.
  step: <int> -- Foreign key which points to capture.step.
  metric_definition: <int> -- Foreign key which points to metric_definition.id
  values: [<obj>,...] -- List of all metric records stored as json objects.
}
```
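
The null-field rules above imply a simple way to tell which level a metric describes. The following Python sketch is one possible reading of those rules; `metric_level` is a hypothetical helper, and the ids are shortened for illustration.

```python
def metric_level(metric):
    """Classify a metric record as 'annotation', 'capture' or 'sequence'."""
    if metric.get("annotation_id") is not None:
        # Annotation metrics carry all of sequence_id, capture_id, annotation_id and step.
        return "annotation"
    if metric.get("capture_id") is not None:
        # Capture metrics may leave annotation_id null.
        return "capture"
    # Sequence metrics leave capture_id, annotation_id and step null.
    return "sequence"


sequence_metric = {"capture_id": None, "annotation_id": None, "sequence_id": "s0", "step": None}
capture_metric = {"capture_id": "c1", "annotation_id": None, "sequence_id": "s0", "step": 2}
annotation_metric = {"capture_id": "c1", "annotation_id": "a1", "sequence_id": "s0", "step": 2}
levels = [metric_level(m) for m in (sequence_metric, capture_metric, annotation_metric)]
print(levels)
```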

### definitions

Ego, sensor, annotation, and metric definition tables are static during the simulation.
These typically come from the definition of the simulation and are generated during the simulation.

#### egos.json

A json file containing a collection of egos. This file is an enumeration of all egos in this simulation.
A specific object with sensors attached to it is a commonly used ego in a driving simulation.

```
ego {
  id: <str> -- UUID of the ego.
  description: <str> [optional] -- Ego instance description.
}
```

#### sensors.json

A json file containing a collection of all sensors present in the simulation.
Each sensor is assigned a unique UUID. Each is associated with an ego and stores the UUID of the ego as a foreign key.

```
sensor {
  id: <str> -- UUID of the sensor.
  ego_id: <str> -- Foreign key pointing to ego.id.
  modality: <str> {camera, lidar, radar, sonar,...} -- Sensor modality.
  description: <str> [optional] -- Sensor instance description.
}
```

#### annotation_definitions.json

A json file containing a collection of annotation specifications (annotation_definition).
Each record describes a particular type of annotation and contains an annotation-specific specification describing how annotation data should be mapped back to labels or objects in the scene.

Typically, the `spec` key describes all label_id and label_name values used by the annotation.
Some special cases like semantic segmentation might assign additional values (e.g. pixel value) to record the mapping between label_id/label_name and pixel color in the annotated PNG files.

```
annotation_definition {
  id: <int> -- Integer identifier of the annotation definition.
  name: <str> -- Human readable annotation spec name (e.g. semantic_segmentation, instance_segmentation, etc.)
  description: <str> [optional] -- Description of this annotation specification.
  format: <str> -- The format of the annotation files. (e.g. png, json, etc.)
  spec: [<obj>...] -- Format-specific specification for the annotation values (ex. label-value mappings for semantic segmentation images)
}

# semantic segmentation
annotation_definition.spec {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
  pixel_value: <int> -- Grayscale pixel value
  color_pixel_value: <int, int, int> [optional] -- Color pixel value
}

# label enumeration spec, used for annotations like bounding box 2d. This might be a subset of all labels used in simulation.
annotation_definition.spec {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
}
```
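
One way a consumer might use the label enumeration spec is to check that inline annotation values only reference labels declared by their annotation_definition. The Python sketch below illustrates this under that assumption; `unknown_labels` is a hypothetical helper and the "tree" value is invented to trigger a mismatch.

```python
def unknown_labels(annotation, annotation_definitions):
    """Return annotation values whose (label_id, label_name) is not in the definition's spec."""
    definitions = {d["id"]: d for d in annotation_definitions}
    definition = definitions[annotation["annotation_definition"]]
    allowed = {(entry["label_id"], entry["label_name"]) for entry in definition["spec"]}
    # File-based annotations have no inline values, so treat null as an empty list.
    values = annotation.get("values") or []
    return [v for v in values if (v["label_id"], v["label_name"]) not in allowed]


annotation_definitions = [
    {
        "id": 4,
        "name": "2d bounding box",
        "format": "JSON",
        "spec": [
            {"label_id": 27, "label_name": "car"},
            {"label_id": 25, "label_name": "person"},
        ],
    }
]
annotation = {
    "id": "a1",
    "annotation_definition": 4,
    "values": [
        {"label_id": 27, "label_name": "car", "x": 30, "y": 50, "width": 100, "height": 100},
        {"label_id": 99, "label_name": "tree", "x": 0, "y": 0, "width": 5, "height": 5},
    ],
}
mismatches = unknown_labels(annotation, annotation_definitions)
print([m["label_name"] for m in mismatches])
```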

#### metric_definitions.json

A json file that stores a collection of metric specification records (metric_definition).
Each specification record describes a particular metric stored in [metrics](#metrics) values.
Each metric_definition record assigns a unique identifier to a collection of specification records, which is stored as a list of key-value pairs.
The design is very similar to [annotation_definitions](#annotation_definitions).

```
metric_definition {
  id: <int> -- Integer identifier of the metric definition.
  name: <str> -- Human readable metric spec name (e.g. object_count, average distance, etc.)
  description: <str> [optional] -- Description of this metric specification.
  spec: [<obj>...] -- Format-specific specification for the metric values
}

# label enumeration spec, used to enumerate all labels. For example, this can be used for object count metrics.
metric_definition.spec {
  label_id: <int> -- Integer identifier of the label
  label_name: <str> -- String identifier of the label
}
```

### schema versioning

* The schema uses [semantic versioning](https://semver.org/).

* Version info is placed at root level of the json file that holds a collection of objects (e.g. captures.json, metrics.json, annotation_definitions.json,... ). All json files in a dataset will share the same version.
It should change atomically across files if the version of the schema is updated.

* The version should only change when the Perception package changes (and even then, rarely).
Defining new metric_definitions or annotation_definitions will not change the schema version since it does not involve any schema changes.
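
The rule that all json files in a dataset share one version can be checked mechanically. The Python sketch below works on already-parsed documents keyed by file name; `dataset_version` is a hypothetical helper, and reading the files from disk is left out to keep the example self-contained.

```python
def dataset_version(documents):
    """Return the shared root-level "version", or raise if the files disagree."""
    versions = {name: doc.get("version") for name, doc in documents.items()}
    distinct = set(versions.values())
    if len(distinct) != 1 or None in distinct:
        raise ValueError(f"inconsistent schema versions: {versions}")
    return distinct.pop()


# Parsed root objects of three dataset files, reduced to the relevant keys.
documents = {
    "captures_000.json": {"version": "0.0.1", "captures": []},
    "egos.json": {"version": "0.0.1", "egos": []},
    "metric_definitions.json": {"version": "0.0.1", "metric_definitions": []},
}
shared_version = dataset_version(documents)
print(shared_version)
```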

## Example

A mockup of a synthetic dataset according to this schema can be found [here](com.unity.perception/Schema/mock_data/simrun/README.md). In this mockup, we have:

* 1 ego

* 2 sensors: 1 camera and 1 LIDAR

* 19 labels

* 3 captures, 2 metrics, 1 sequence, 2 steps

  * the first step includes 1 camera capture and 1 semantic segmentation annotation.

  * in the second step, two captures, 1 camera capture and 1 LIDAR capture, are triggered at the same time.
  For the camera, semantic segmentation, instance segmentation and 3d bounding box annotations are provided.
  For the LIDAR sensor, semantic segmentation annotation of point cloud is included.

  * one of the metric events is emitted for metrics at capture level. The other one is emitted at annotation level.

* 3 types of annotations: semantic segmentation, 3d bounding box and LIDAR semantic segmentation.

* 1 type of metric: object counts

P0: 7.070493000000e+02 0.000000000000e+00 6.040814000000e+02 0.000000000000e+00 0.000000000000e+00 7.070493000000e+02 1.805066000000e+02 0.000000000000e+00 0.000000000000e+00 0.000000000000e+00 1.000000000000e+00 0.000000000000e+00
P1: 7.070493000000e+02 0.000000000000e+00 6.040814000000e+02 -3.797842000000e+02 0.000000000000e+00 7.070493000000e+02 1.805066000000e+02 0.000000000000e+00 0.000000000000e+00 0.000000000000e+00 1.000000000000e+00 0.000000000000e+00
P2: 7.070493000000e+02 0.000000000000e+00 6.040814000000e+02 4.575831000000e+01 0.000000000000e+00 7.070493000000e+02 1.805066000000e+02 -3.454157000000e-01 0.000000000000e+00 0.000000000000e+00 1.000000000000e+00 4.981016000000e-03
P3: 7.070493000000e+02 0.000000000000e+00 6.040814000000e+02 -3.341081000000e+02 0.000000000000e+00 7.070493000000e+02 1.805066000000e+02 2.330660000000e+00 0.000000000000e+00 0.000000000000e+00 1.000000000000e+00 3.201153000000e-03
R0_rect: 9.999128000000e-01 1.009263000000e-02 -8.511932000000e-03 -1.012729000000e-02 9.999406000000e-01 -4.037671000000e-03 8.470675000000e-03 4.123522000000e-03 9.999556000000e-01
Tr_velo_to_cam: 6.927964000000e-03 -9.999722000000e-01 -2.757829000000e-03 -2.457729000000e-02 -1.162982000000e-03 2.749836000000e-03 -9.999955000000e-01 -6.127237000000e-02 9.999753000000e-01 6.931141000000e-03 -1.143899000000e-03 -3.321029000000e-01
Tr_imu_to_velo: 9.999976000000e-01 7.553071000000e-04 -2.035826000000e-03 -8.086759000000e-01 -7.854027000000e-04 9.998898000000e-01 -1.482298000000e-02 3.195559000000e-01 2.024406000000e-03 1.482454000000e-02 9.998881000000e-01 -7.997231000000e-01

{
  "version": "0.0.1",
  "annotation_definitions": [
    {
      "id": 1,
      "name": "semantic segmentation",
      "description": "pixel-wise semantic segmentation label",
      "format": "PNG",
      "spec": [
        {"label_id": 8, "label_name": "road", "pixel_value": 0},
        {"label_id": 9, "label_name": "sidewalk", "pixel_value": 1},
        {"label_id": 12, "label_name": "building", "pixel_value": 2},
        {"label_id": 13, "label_name": "wall", "pixel_value": 3},
        {"label_id": 14, "label_name": "fence", "pixel_value": 4},
        {"label_id": 18, "label_name": "pole", "pixel_value": 5},
        {"label_id": 20, "label_name": "traffic light", "pixel_value": 6},
        {"label_id": 21, "label_name": "traffic sign", "pixel_value": 7},
        {"label_id": 22, "label_name": "vegetation", "pixel_value": 8},
        {"label_id": 23, "label_name": "terrain", "pixel_value": 9},
        {"label_id": 24, "label_name": "sky", "pixel_value": 10},
        {"label_id": 25, "label_name": "person", "pixel_value": 11},
        {"label_id": 26, "label_name": "rider", "pixel_value": 12},
        {"label_id": 27, "label_name": "car", "pixel_value": 13},
        {"label_id": 28, "label_name": "truck", "pixel_value": 14},
        {"label_id": 29, "label_name": "bus", "pixel_value": 15},
        {"label_id": 32, "label_name": "train", "pixel_value": 16},
        {"label_id": 33, "label_name": "motorcycle", "pixel_value": 17},
        {"label_id": 34, "label_name": "bicycle", "pixel_value": 18}
      ]
    },
    {
      "id": 2,
      "name": "3d bounding box",
      "description": "3d bounding box annotation of object instances",
      "format": "JSON",
      "spec": [
        {"label_id": 27, "label_name": "car"},
        {"label_id": 34, "label_name": "bicycle"},
        {"label_id": 25, "label_name": "person"}
      ]
    },
    {
      "id": 3,
      "name": "lidar semantic segmention",
      "description": "3d point cloud semantic segmentation",
      "format": "PCD",
      "spec": [
        {"label_id": 27, "label_name": "car", "point_value": 0},
        {"label_id": 34, "label_name": "bicycle", "point_value": 1},
        {"label_id": 25, "label_name": "person", "point_value": 2}
      ]
    },
    {
      "id": 4,
      "name": "2d bounding box",
      "description": "2d bounding box annotation",
      "format": "JSON",
      "spec": [
        {"label_id": 27, "label_name": "car"},
        {"label_id": 34, "label_name": "bicycle"},
        {"label_id": 25, "label_name": "person"}
      ]
    }
  ]
}

{
  "version": "0.0.1",
  "captures": [
    {
      "id": "e8b44709-dddf-439d-94d2-975460924903",
      "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
      "step": 1,
      "timestamp": 1,
      "sensor": {
        "sensor_id": "b4f6a75e-12de-4b4c-9574-5b135cecac6f",
        "ego_id": "4f80234d-4342-420f-9187-07004613cd1f",
        "modality": "camera",
        "translation": [0.2, 1.1, 0.3],
        "rotation": [0.3, 0.2, 0.1, 0.5],
        "camera_intrinsic": [
          [0.1, 0, 0],
          [3.0, 0.1, 0],
          [0.5, 0.45, 1]
        ]
      },
      "ego": {
        "ego_id": 1,
        "translation": [0.02, 0.0, 0.0],
        "rotation": [0.1, 0.1, 0.3, 0.0],
        "velocity": [0.1, 0.1, 0.0],
        "acceleration": null
      },
      "filename": "captures/camera_000.png",
      "format": "PNG",
      "annotations": [
        {
          "id": "35cbdf6e-96e5-446e-852d-fe40be79ce77",
          "annotation_definition": 1,
          "filename": "annotations/semantic_segmentation_000.png",
          "values": null
        }
      ]
    }
  ]
}

{ |

|||

"version": "0.0.1", |

|||

"captures": [ |

|||

{ |

|||

"id": "4521949a-2a71-4c03-beb0-4f6362676639", |

|||

"sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b", |

|||

"step": 2, |

|||

"timestamp": 2, |

|||

"sensor": { |

|||

"sensor_id": 1, |

        "ego_id": 1,
        "modality": "camera",
        "translation": [0.2, 1.1, 0.3],
        "rotation": [0.3, 0.2, 0.1, 0.5],
        "camera_intrinsic": [
          [0.1, 0, 0],
          [3.0, 0.1, 0],
          [0.5, 0.45, 1]
        ]
      },
      "ego": {
        "ego_id": 1,
        "translation": [0.12, 0.1, 0.0],
        "rotation": [0.0, 0.15, 0.24, 0.0],
        "velocity": [0.0, 0.0, 0.0],
        "acceleration": null
      },
      "filename": "captures/camera_001.png",
      "format": "PNG",
      "annotations": [
        {
          "id": "a79ab4fb-acf3-47ad-8a6f-20af795e23e1",
          "annotation_definition": 1,
          "filename": "annotations/semantic_segmentation_001.png",
          "values": null
        },
        {
          "id": "36db01f8-e322-4c81-a650-bec89a7e6100",
          "annotation_definition": 2,
          "filename": null,
          "values": [
            {
              "instance_id": "85149ab1-3b75-443b-8540-773b31559a26",
              "label_id": 27,
              "label_name": "car",
              "translation": [24.0, 12.1, 0.0],
              "size": [2.0, 3.0, 1.0],
              "rotation": [0.0, 1.0, 2.0, 0.0],
              "velocity": [0.5, 0.0, 0.0],
              "acceleration": null
            },
            {
              "instance_id": "f2e56dad-9bfd-4930-9dca-bfe08672de3a",
              "label_id": 34,
              "label_name": "bicycle",
              "translation": [5.2, 7.9, 0.0],
              "size": [0.3, 0.5, 1.0],
              "rotation": [0.0, 1.0, 2.0, 0.0],
              "velocity": [0.0, 0.1, 0.0],
              "acceleration": null
            },
            {
              "instance_id": "a52dfb48-e5a4-4008-96b6-80da91caa777",
              "label_id": 25,
              "label_name": "person",
              "translation": [41.2, 1.5, 0.0],
              "size": [0.3, 0.3, 1.8],
              "rotation": [0.0, 1.0, 2.0, 0.0],
              "velocity": [0.05, 0.0, 0.0],
              "acceleration": null
            }
          ]
        },
        {
          "id": "36db01f8-e322-4c81-a650-bec89a7e6100",
          "annotation_definition": 4,
          "filename": null,
          "values": [
            {
              "instance_id": "85149ab1-3b75-443b-8540-773b31559a26",
              "label_id": 27,
              "label_name": "car",
              "x": 30,
              "y": 50,
              "width": 100,
              "height": 100
            },
            {
              "instance_id": "f2e56dad-9bfd-4930-9dca-bfe08672de3a",
              "label_id": 34,
              "label_name": "bicycle",
              "x": 120,
              "y": 331,
              "width": 50,
              "height": 20
            },
            {
              "instance_id": "a52dfb48-e5a4-4008-96b6-80da91caa777",
              "label_id": 25,
              "label_name": "person",
              "x": 432,
              "y": 683,
              "width": 10,
              "height": 20
            }
          ]
        }
      ]
    },
    {
      "id": "4b35a47a-3f63-4af3-b0e8-e68cb384ad75",
      "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
      "step": 2,
      "timestamp": 2,
      "sensor": {
        "sensor_id": 2,
        "ego_id": 1,
        "modality": "lidar",
        "translation": [0.0, 0.0, 0.0],
        "rotation": [0.0, 0.0, 0.0, 0.0],
        "camera_intrinsic": null
      },
      "ego": {
        "ego_id": 1,
        "translation": [0.12, 0.1, 0.0],
        "rotation": [0.0, 0.15, 0.24, 0.0],
        "velocity": [0.0, 0.0, 0.0],
        "acceleration": null
      },
      "filename": "captures/lidar_000.pcd",
      "format": "PCD",
      "annotations": [
        {
          "id": "3b7b2af7-4d9f-4f1d-a9f5-32365c5896c8",
          "annotation_definition": 3,
          "filename": "annotations/lidar_semantic_segmentation_000.pcd"
        }
      ]
    }
  ]
}
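
As a rough illustration of how a consumer might read the capture records above, the following Python sketch pulls the 2D boxes out of one capture. It assumes that `annotation_definition` 4 denotes 2D bounding boxes and that `x`/`y` give the top-left corner in pixels; neither is confirmed by this diff. `boxes_2d` is a hypothetical helper, and the embedded JSON is a trimmed copy of the mock record.

```python
import json

# Trimmed copy of the camera capture from the mock data (not the full record).
CAPTURE_JSON = """
{
  "filename": "captures/camera_001.png",
  "annotations": [
    {"annotation_definition": 1,
     "filename": "annotations/semantic_segmentation_001.png",
     "values": null},
    {"annotation_definition": 4,
     "filename": null,
     "values": [
       {"label_id": 27, "label_name": "car", "x": 30, "y": 50, "width": 100, "height": 100},
       {"label_id": 34, "label_name": "bicycle", "x": 120, "y": 331, "width": 50, "height": 20},
       {"label_id": 25, "label_name": "person", "x": 432, "y": 683, "width": 10, "height": 20}
     ]}
  ]
}
"""


def boxes_2d(capture, definition_id=4):
    """Return (label_name, x_min, y_min, x_max, y_max) for each 2D box.

    Assumption: definition_id 4 is the 2D bounding box definition and
    (x, y) is the top-left corner of the box.
    """
    boxes = []
    for annotation in capture["annotations"]:
        if annotation["annotation_definition"] != definition_id:
            continue
        # File-based annotations carry "values": null, so fall back to [].
        for value in annotation["values"] or []:
            boxes.append((
                value["label_name"],
                value["x"],
                value["y"],
                value["x"] + value["width"],
                value["y"] + value["height"],
            ))
    return boxes


capture = json.loads(CAPTURE_JSON)
for box in boxes_2d(capture):
    print(box)
```

Annotations stored as files (for example the semantic segmentation PNG) have `"values": null`, which is why the helper guards against `None` before iterating.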

{
  "version": "0.0.1",
  "egos": [
    {
      "id": "4f80234d-4342-420f-9187-07004613cd1f",
      "description": "the main car driving in simulation"
    }
  ]
}

{
  "version": "0.0.1",
  "metric_definitions": [
    {
      "id": 1,
      "name": "object count",
      "description": "count number of objects observed",
      "spec": [
        {"label_id": 27, "label_name": "car"},
        {"label_id": 34, "label_name": "bicycle"},
        {"label_id": 25, "label_name": "person"}
      ]
    }
  ]
}

{
  "version": "0.0.1",
  "metrics": [
    {
      "capture_id": "e8b44709-dddf-439d-94d2-975460924903",
      "annotation_id": null,
      "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
      "step": 1,
      "metric_definition": 1,
      "values": [
        {"label_id": 27, "label_name": "car", "count": 5},
        {"label_id": 34, "label_name": "bicycle", "count": 1},
        {"label_id": 25, "label_name": "person", "count": 7}
      ]
    },
    {
      "capture_id": "4b35a47a-3f63-4af3-b0e8-e68cb384ad75",
      "annotation_id": "35cbdf6e-96e5-446e-852d-fe40be79ce77",
      "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
      "step": 1,
      "metric_definition": 1,
      "values": [
        {"label_id": 27, "label_name": "car", "count": 3},
        {"label_id": 25, "label_name": "person", "count": 2}
      ]
    },
    {
      "capture_id": "3d09bbce-7f7b-4d9c-8c8a-2f75158e0c8e",
      "annotation_id": null,
      "sequence_id": "e96b97cd-8130-4ab4-a105-1b911a6d912b",
      "step": 1,
      "metric_definition": 1,
      "values": [
        {"label_id": 27, "label_name": "car", "count": 1},
        {"label_id": 34, "label_name": "bicycle", "count": 2},
        {"label_id": 25, "label_name": "person", "count": 2}
      ]
    }
  ]
}
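
To show how the metric records above could be consumed, here is a minimal Python sketch that sums the "object count" values per label and tallies records by level. It assumes that a null `annotation_id` marks a capture-level metric and a non-null one marks an annotation-level metric; that reading is an interpretation of the mock data, not something the schema states here. `summarize` is a hypothetical helper, and the embedded JSON keeps only the fields the sketch needs.

```python
import json
from collections import Counter

# Trimmed copy of the three metric records from the mock data.
METRICS_JSON = """
{
  "version": "0.0.1",
  "metrics": [
    {"annotation_id": null, "metric_definition": 1, "values": [
      {"label_id": 27, "label_name": "car", "count": 5},
      {"label_id": 34, "label_name": "bicycle", "count": 1},
      {"label_id": 25, "label_name": "person", "count": 7}]},
    {"annotation_id": "35cbdf6e-96e5-446e-852d-fe40be79ce77", "metric_definition": 1, "values": [
      {"label_id": 27, "label_name": "car", "count": 3},
      {"label_id": 25, "label_name": "person", "count": 2}]},
    {"annotation_id": null, "metric_definition": 1, "values": [
      {"label_id": 27, "label_name": "car", "count": 1},
      {"label_id": 34, "label_name": "bicycle", "count": 2},
      {"label_id": 25, "label_name": "person", "count": 2}]}
  ]
}
"""


def summarize(metrics_doc, metric_definition=1):
    """Return (per-label totals, record count per level) for one metric definition."""
    totals = Counter()
    levels = Counter()
    for metric in metrics_doc["metrics"]:
        if metric["metric_definition"] != metric_definition:
            continue
        # Assumption: no annotation_id means the metric describes the whole capture.
        level = "capture" if metric["annotation_id"] is None else "annotation"
        levels[level] += 1
        for value in metric["values"]:
            totals[value["label_name"]] += value["count"]
    return totals, levels


totals, levels = summarize(json.loads(METRICS_JSON))
print(dict(totals))
print(dict(levels))
```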

{
  "version": "0.0.1",
  "sensors": [
    {
      "id": "b4f6a75e-12de-4b4c-9574-5b135cecac6f",
      "ego_id": "4f80234d-4342-420f-9187-07004613cd1f",
      "modality": "camera",
      "description": "Point Grey Flea 2 (FL2-14S3M-C)"
    },
    {
      "id": "6fb1a823-5b83-4a79-b566-fe4435ec1942",
      "ego_id": "4f80234d-4342-420f-9187-07004613cd1f",
      "modality": "lidar",
      "description": "Velodyne HDL-64E"
    }
  ]
}
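
The sensor records refer to their ego by `ego_id`, so the two tables can be joined on that key. The Python sketch below shows one way to do this, under the assumption that every `ego_id` in the sensor list matches an `id` in the ego list, as it does in this mock. `sensors_by_ego` is a hypothetical helper name.

```python
import json

# Copies of the ego and sensor tables from the mock data.
EGOS_JSON = """
{"version": "0.0.1",
 "egos": [{"id": "4f80234d-4342-420f-9187-07004613cd1f",
           "description": "the main car driving in simulation"}]}
"""

SENSORS_JSON = """
{"version": "0.0.1",
 "sensors": [
   {"id": "b4f6a75e-12de-4b4c-9574-5b135cecac6f",
    "ego_id": "4f80234d-4342-420f-9187-07004613cd1f",
    "modality": "camera",
    "description": "Point Grey Flea 2 (FL2-14S3M-C)"},
   {"id": "6fb1a823-5b83-4a79-b566-fe4435ec1942",
    "ego_id": "4f80234d-4342-420f-9187-07004613cd1f",
    "modality": "lidar",
    "description": "Velodyne HDL-64E"}]}
"""


def sensors_by_ego(egos_doc, sensors_doc):
    """Map each ego id to the modalities of the sensors attached to it."""
    grouped = {ego["id"]: [] for ego in egos_doc["egos"]}
    for sensor in sensors_doc["sensors"]:
        # A KeyError here would mean a sensor points at an ego that is not listed.
        grouped[sensor["ego_id"]].append(sensor["modality"])
    return grouped


grouped = sensors_by_ego(json.loads(EGOS_JSON), json.loads(SENSORS_JSON))
for ego_id, modalities in grouped.items():
    print(ego_id, modalities)
```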

Mockup of Synthetic Dataset

This is a mock dataset created according to this schema [design](https://docs.google.com/document/d/1lKPm06z09uX9gZIbmBUMO6WKlIGXiv3hgXb_taPOnU0).

Included in this mockup:

- 1 ego car
- 2 sensors: 1 camera and 1 LIDAR
- 19 labels
- 3 captures, 2 metrics, 1 sequence, 2 steps
  - The first step includes 1 camera capture and 1 semantic segmentation annotation.
  - Two captures, 1 camera capture and 1 LIDAR capture, are triggered at the same time. For the camera, semantic segmentation, instance segmentation, and 3D bounding box annotations are provided. For the LIDAR sensor, a semantic segmentation annotation of the point cloud is included.
  - One metric event is emitted at the capture level. The other is emitted at the annotation level.
- 4 types of annotations: semantic segmentation, 2D bounding box, 3D bounding box, and LIDAR semantic segmentation
- 1 type of metric: object count

"run_execution_id","app_param_id","instance_id","attempt_id","file_name","download_uri"
"simrun","18cWX0n","1","1","Annotations/lidar_semantic_segmentation_000.pcd","https://mock.url/Annotations/lidar_semantic_segmentation_000.pcd"
"simrun","18cWX0n","1","1","Annotations/sementic_segmantation_000.png","https://mock.url/Annotations/sementic_segmantation_000.png"
"simrun","18cWX0n","1","1","Annotations/sementic_segmantation_001.png","https://mock.url/Annotations/sementic_segmantation_001.png"
"simrun","18cWX0n","1","1","Captures/camera_000.png","https://mock.url/Captures/camera_000.png"
"simrun","18cWX0n","1","1","Captures/camera_001.png","https://mock.url/Captures/camera_001.png"
"simrun","18cWX0n","1","1","Captures/lidar_000.pcd","https://mock.url/Captures/lidar_000.pcd"
"simrun","18cWX0n","1","1","Dataset/captures_000.json","https://mock.url/Dataset/captures_000.json"
"simrun","18cWX0n","1","1","Dataset/captures_001.json","https://mock.url/Dataset/captures_001.json"
"simrun","18cWX0n","1","1","Dataset/metrics_000.json","https://mock.url/Dataset/metrics_000.json"
"simrun","18cWX0n","1","1","Dataset/annotation_definitions.json","https://mock.url/Dataset/annotation_definitions.json"
"simrun","18cWX0n","1","1","Dataset/metric_definitions.json","https://mock.url/Dataset/metric_definitions.json"
"simrun","18cWX0n","1","1","Dataset/egos.json","https://mock.url/Dataset/egos.json"
"simrun","18cWX0n","1","1","Dataset/sensors.json","https://mock.url/Dataset/sensors.json"
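
The manifest rows above pair each `file_name` with a `download_uri`. As a hedged sketch of how such a manifest might be read, the Python below parses a few of those rows with the standard `csv` module and groups the files by their top-level folder. `files_by_folder` is a hypothetical helper, and only three of the mock rows are embedded to keep the example short.

```python
import csv
import io
from collections import defaultdict

# Header plus three rows copied from the mock manifest.
MANIFEST_CSV = '''"run_execution_id","app_param_id","instance_id","attempt_id","file_name","download_uri"
"simrun","18cWX0n","1","1","Captures/camera_000.png","https://mock.url/Captures/camera_000.png"
"simrun","18cWX0n","1","1","Captures/lidar_000.pcd","https://mock.url/Captures/lidar_000.pcd"
"simrun","18cWX0n","1","1","Dataset/egos.json","https://mock.url/Dataset/egos.json"
'''


def files_by_folder(manifest_text):
    """Group (file name, download URI) pairs by the first path component."""
    grouped = defaultdict(list)
    for row in csv.DictReader(io.StringIO(manifest_text)):
        # "Captures/camera_000.png" -> folder "Captures", name "camera_000.png"
        folder, _, name = row["file_name"].partition("/")
        grouped[folder].append((name, row["download_uri"]))
    return dict(grouped)


grouped = files_by_folder(MANIFEST_CSV)
for folder, files in grouped.items():
    print(folder, [name for name, _ in files])
```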
