# The Perception Camera Component

The Perception Camera component ensures that the [Camera](https://docs.unity3d.com/Manual/class-Camera.html) runs at deterministic rates. It also ensures that the Camera uses [DatasetCapture](DatasetCapture.md) to capture RGB and other Camera-related ground truth in the [JSON dataset](Schema/Synthetic_Dataset_Schema.md). You can use the Perception Camera component on the High Definition Render Pipeline (HDRP) or the Universal Render Pipeline (URP).

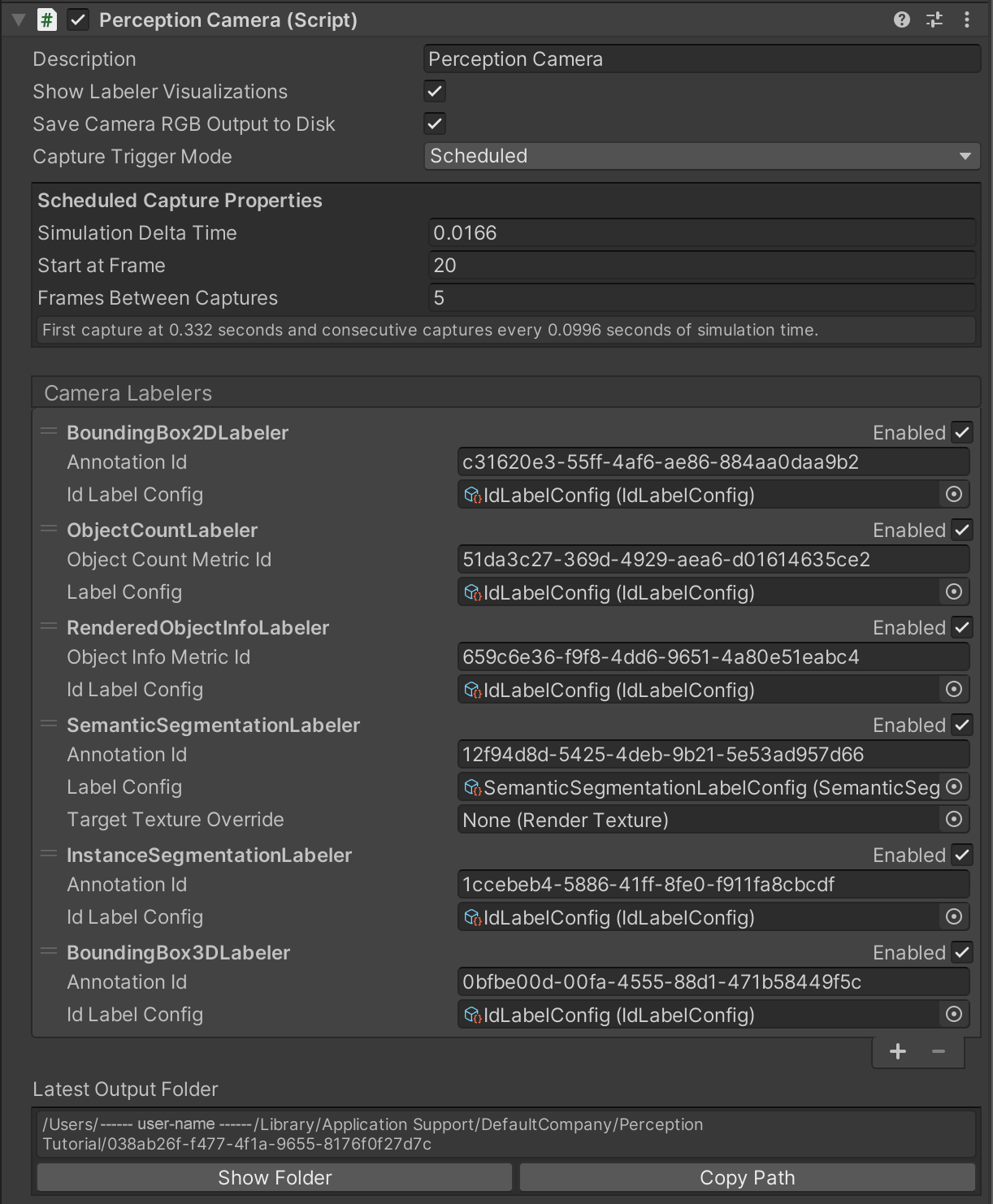

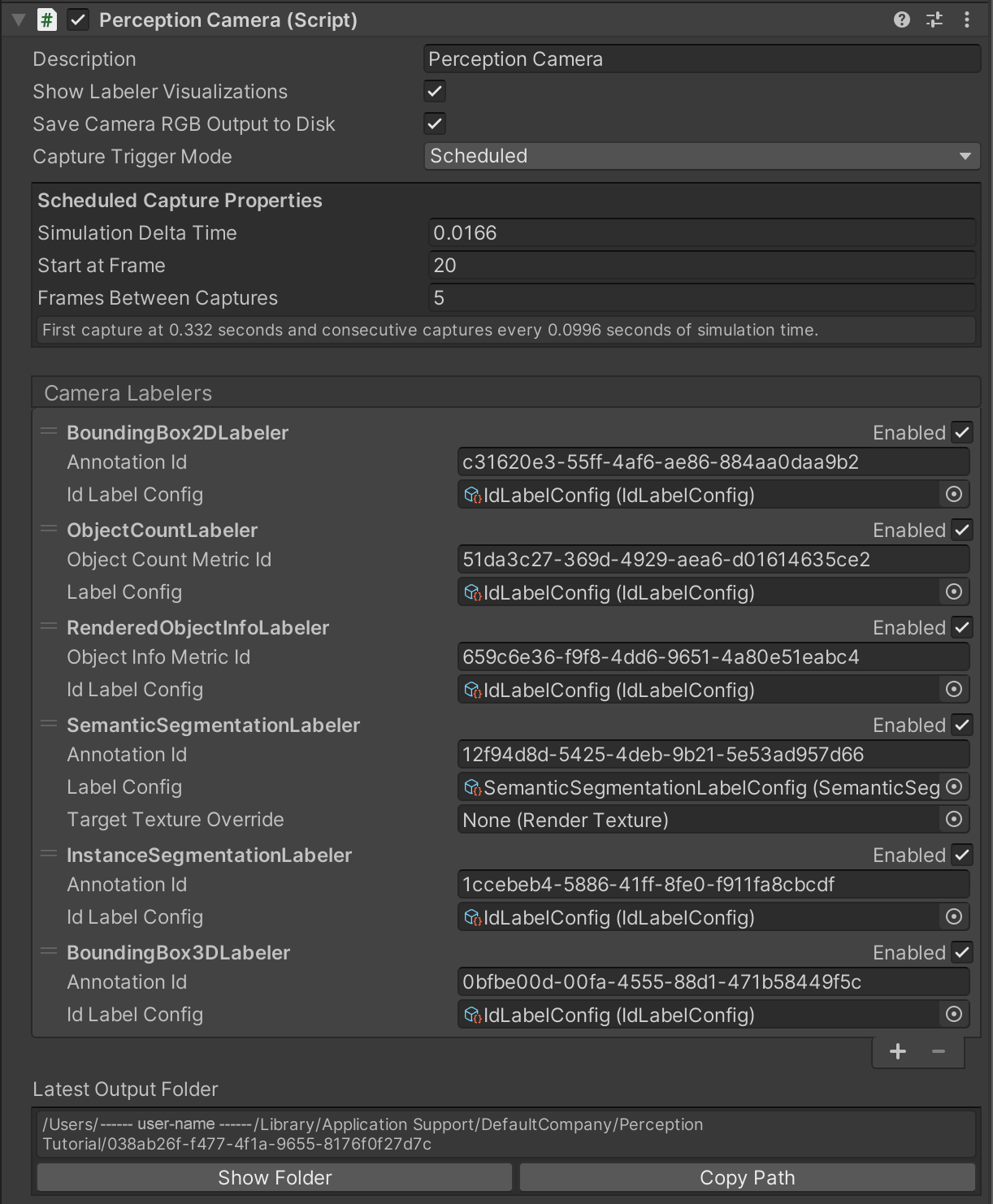

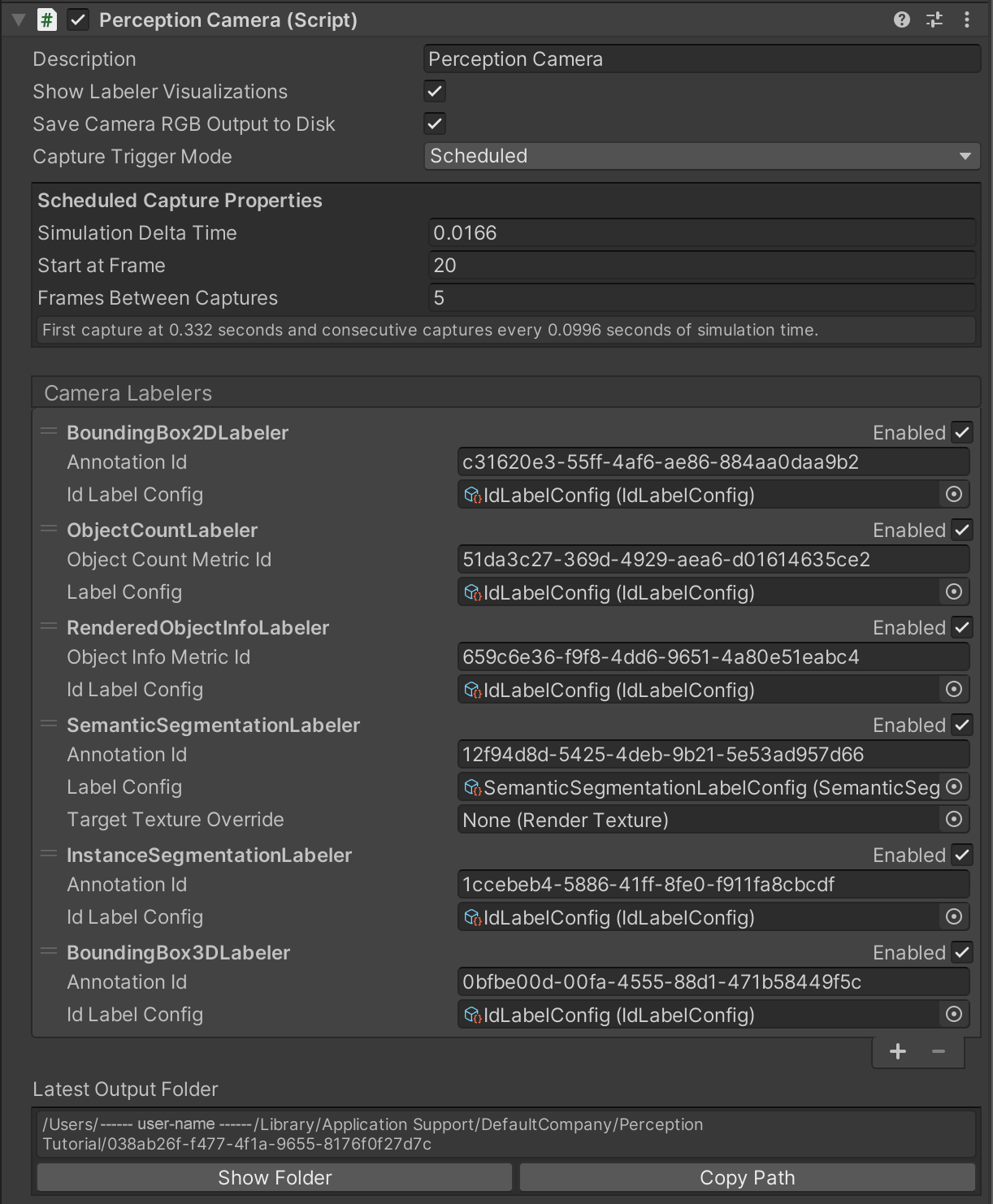

_The Inspector view of the Perception Camera component_

## Properties

| Property: | Function: |
|--|--|
| Description | A description of the Camera to be registered in the JSON dataset. |
| Show Visualizations | Display real-time visualizations for the labelers that are currently active on this Camera. |
| Capture RGB Images | When you enable this property, Unity captures RGB images as PNG files in the dataset each time the Camera captures. |
| Capture Trigger Mode | The method of triggering captures for this Camera. In `Scheduled` mode, captures happen automatically based on a start frame and frame delta time. In `Manual` mode, you trigger captures yourself by calling the `RequestCapture` method of `PerceptionCamera`. |
| Camera Labelers | A list of labelers that generate data derived from this Camera. |

### Properties for Scheduled Capture Mode

| Property: | Function: |
|--|--|
| Simulation Delta Time | The simulation frame time (in seconds) for this Camera. For example, 0.0166 corresponds to roughly 60 frames per second. Unity uses this value as `Time.captureDeltaTime`, which causes a fixed number of frames to be generated for each second of elapsed simulation time regardless of the capabilities of the underlying hardware. For more information on sensor scheduling, see [DatasetCapture](DatasetCapture.md). |
| First Capture Frame | The frame number at which this Camera starts capturing. |
| Frames Between Captures | The number of frames to simulate and render between the Camera's scheduled captures. Setting this to 0 makes the Camera capture every frame. |
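
The scheduling arithmetic these properties describe can be sketched in Python. This is an illustration under stated assumptions, not the Perception package's scheduler: it assumes a capture on `First Capture Frame` and then one capture every `Frames Between Captures + 1` frames, and that simulated time advances by exactly `Simulation Delta Time` per frame. The function names are hypothetical.

```python
def scheduled_capture_frames(first_capture_frame, frames_between_captures, total_frames):
    """Return the frame numbers (below total_frames) on which a scheduled camera captures.

    Assumption: one capture on first_capture_frame, then one every
    frames_between_captures + 1 frames, so 0 means "capture every frame".
    """
    if frames_between_captures < 0:
        raise ValueError("frames_between_captures must be >= 0")
    step = frames_between_captures + 1
    return list(range(first_capture_frame, total_frames, step))


def simulated_time(frame, simulation_delta_time):
    """Simulated seconds elapsed after `frame` frames with a fixed delta time."""
    return frame * simulation_delta_time


print(scheduled_capture_frames(first_capture_frame=2, frames_between_captures=3, total_frames=15))
# [2, 6, 10, 14]
print(round(1 / 0.0166, 2))  # frames per simulated second for a 0.0166 s delta: 60.24
```

Because the delta time is fixed, the capture schedule depends only on frame counts, not on how fast the hardware renders.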

### Properties for Manual Capture Mode

| Property: | Function: |
|--|--|
| Affect Simulation Timing | Have this Camera affect simulation timing (similar to a scheduled Camera) by requesting a specific frame delta time. Enabling this option lets you set the `Simulation Delta Time` property described above. |

## Camera labelers

Camera labelers capture data related to the Camera in the JSON dataset. You can use this data to train models and to compute dataset statistics. The Perception package provides several Camera labelers, and you can derive from the `CameraLabeler` class to define more labelers.

### Semantic Segmentation Labeler

_Example semantic segmentation image from a modified SynthDet project_

The SemanticSegmentationLabeler generates a 2D RGB image with the attached Camera. Unity draws objects in the color you associate with the label in the SemanticSegmentationLabelingConfiguration. If Unity can't find a label for an object, it draws it in black.
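
If you post-process semantic segmentation images outside Unity, you can recover per-label pixel counts by mapping each color back to its label. A minimal sketch, assuming the image has already been decoded into rows of RGB tuples and that `LABEL_COLORS` (hypothetical) mirrors the colors in your label configuration:

```python
from collections import Counter

# Hypothetical label-to-color mapping; in practice the colors come from the
# label configuration assigned to the SemanticSegmentationLabeler.
LABEL_COLORS = {
    "car": (255, 0, 0),
    "road": (0, 0, 255),
}
BLACK = (0, 0, 0)  # Objects without a resolved label are drawn black.


def pixels_per_label(image, label_colors=LABEL_COLORS):
    """Count pixels per label in a segmentation image given as rows of RGB tuples."""
    color_to_label = {color: label for label, color in label_colors.items()}
    counts = Counter()
    for row in image:
        for pixel in row:
            if pixel == BLACK:
                counts["<unlabeled>"] += 1
            else:
                counts[color_to_label.get(pixel, "<unknown color>")] += 1
    return dict(counts)


image = [
    [(255, 0, 0), (255, 0, 0), (0, 0, 0)],
    [(0, 0, 255), (0, 0, 255), (0, 0, 255)],
]
print(pixels_per_label(image))  # {'car': 2, '<unlabeled>': 1, 'road': 3}
```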

### Instance Segmentation Labeler

The instance segmentation labeler generates a 2D RGB image with the attached Camera. Unity draws each instance of a labeled object with a unique color.
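
Because each instance gets a unique color, counting distinct colors gives the number of visible labeled instances, and the pixels per color give each instance's visible area. A minimal sketch, assuming a decoded image of RGB tuples and assuming that pixels not belonging to a labeled instance are black (check this against your own output):

```python
from collections import Counter

BACKGROUND = (0, 0, 0)  # Assumption: pixels without a labeled instance are black.


def instance_pixel_counts(image):
    """Map each unique non-background color (one per instance) to its pixel count."""
    counts = Counter(pixel for row in image for pixel in row if pixel != BACKGROUND)
    return dict(counts)


image = [
    [(10, 0, 0), (10, 0, 0), (0, 0, 0)],
    [(0, 20, 0), (10, 0, 0), (0, 0, 0)],
]
counts = instance_pixel_counts(image)
print(len(counts), counts)  # 2 {(10, 0, 0): 3, (0, 20, 0): 1}
```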

### Bounding Box 2D Labeler

_Example bounding box visualization from SynthDet generated by the `SynthDet_Statistics` Jupyter notebook_

The BoundingBox2DLabeler produces 2D bounding boxes for each visible object with a label you define in the IdLabelConfig. Unity calculates bounding boxes from the rendered image, so each box covers only the visible portion of the object: occluded and out-of-frame portions are excluded.
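
The idea of a box fitted to the rendered image, rather than to the object's geometry, can be sketched against an instance segmentation image. This is an illustration, not the labeler's implementation; it assumes a decoded image of RGB tuples, row 0 at the top of the image, and an (x, y, width, height) box layout, all chosen here for the example:

```python
def bounding_box_2d(image, instance_color):
    """Tight pixel box (x, y, width, height) around the visible pixels of one instance.

    Only pixels that actually show the instance contribute, so occluded or
    out-of-frame parts of the object never enlarge the box. Returns None if
    the instance has no visible pixels.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if pixel == instance_color:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    x_min, y_min = min(xs), min(ys)
    return (x_min, y_min, max(xs) - x_min + 1, max(ys) - y_min + 1)


TARGET = (10, 0, 0)
OTHER = (0, 20, 0)  # e.g. an occluding object drawn over part of TARGET
image = [
    [OTHER, TARGET, TARGET, OTHER],
    [OTHER, TARGET, OTHER, OTHER],
    [OTHER, OTHER, OTHER, OTHER],
]
print(bounding_box_2d(image, TARGET))  # (1, 0, 2, 2)
```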

### Bounding Box 3D Ground Truth Labeler

The Bounding Box 3D Ground Truth Labeler produces 3D ground truth bounding boxes for each labeled game object in the scene. Unlike the 2D bounding boxes, 3D bounding boxes are calculated from the labeled meshes in the scene and all objects (independent of their occlusion state) are recorded.
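
For contrast with the 2D case, the following sketch fits a box to geometry instead of pixels, so hidden vertices still count. It is a simplification: it computes a box aligned with the coordinate axes from a hypothetical list of vertex positions, and does not reproduce the labeler's exact box parameterization (for example, any orientation it records).

```python
def axis_aligned_bounds(vertices):
    """Return (center, size) of the axis-aligned box enclosing 3D vertices.

    Every vertex counts, visible or not, because the box is computed from
    geometry rather than from the rendered image.
    """
    if not vertices:
        raise ValueError("need at least one vertex")
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    center = tuple((lo + hi) / 2 for lo, hi in zip(mins, maxs))
    size = tuple(hi - lo for lo, hi in zip(mins, maxs))
    return center, size


vertices = [(0, 0, 0), (2, 1, 0), (1, 3, 4)]
print(axis_aligned_bounds(vertices))  # ((1.0, 1.5, 2.0), (2, 3, 4))
```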

### Object Count Labeler

```json
{
  "label_id": 25,
  "label_name": "drink_whippingcream_lucerne",
  "count": 1
}
```

_Example object count for a single label_

The ObjectCountLabeler records object counts for each label you define in the IdLabelConfig. Unity only records objects that have at least one visible pixel in the Camera frame.
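
To get dataset-level statistics, you can sum these per-label counts across captures. A minimal sketch, assuming the records have already been pulled out of the captures files into one list per capture; that nesting and the `example_label` entry are hypothetical:

```python
import json
from collections import defaultdict

# Hypothetical input: one list of object-count records per capture,
# each record shaped like the example above.
captures_json = """
[
  [{"label_id": 25, "label_name": "drink_whippingcream_lucerne", "count": 1}],
  [{"label_id": 25, "label_name": "drink_whippingcream_lucerne", "count": 2},
   {"label_id": 24, "label_name": "example_label", "count": 3}]
]
"""


def total_counts(captures):
    """Sum the per-capture counts for each label name."""
    totals = defaultdict(int)
    for records in captures:
        for record in records:
            totals[record["label_name"]] += record["count"]
    return dict(totals)


print(total_counts(json.loads(captures_json)))
# {'drink_whippingcream_lucerne': 3, 'example_label': 3}
```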

### Rendered Object Info Labeler

```json
{
  "label_id": 24,
  "instance_id": 320,
  "visible_pixels": 28957
}
```

_Example rendered object info for a single object_

The RenderedObjectInfoLabeler records a list of all objects visible in the Camera image, including each object's instance ID, resolved label ID, and number of visible pixels. If Unity cannot resolve an object to a label in the IdLabelConfig, it does not record that object.
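
A common post-processing step is to drop objects that are barely visible. The sketch below filters records shaped like the example above by a hypothetical `min_visible_pixels` threshold and groups the surviving instance IDs by label ID; the records other than the first are invented for illustration:

```python
def instances_above_threshold(records, min_visible_pixels):
    """Return {label_id: [instance_id, ...]} for objects with enough visible pixels."""
    result = {}
    for record in records:
        if record["visible_pixels"] >= min_visible_pixels:
            result.setdefault(record["label_id"], []).append(record["instance_id"])
    return result


records = [
    {"label_id": 24, "instance_id": 320, "visible_pixels": 28957},
    {"label_id": 24, "instance_id": 321, "visible_pixels": 12},
    {"label_id": 25, "instance_id": 400, "visible_pixels": 500},
]
print(instances_above_threshold(records, min_visible_pixels=100))
# {24: [320], 25: [400]}
```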

### KeypointLabeler

The keypoint labeler captures keypoints of a labeled GameObject. The typical use of this labeler is capturing human pose estimation data. The labeler uses a [keypoint template](#KeypointTemplate), which defines the keypoints to capture for the model and the skeletal connections between those keypoints. The labeler records the positions of the keypoints in pixel coordinates and saves them to the captures JSON file.

```
keypoints {
  label_id:      -- Integer identifier of the label
  instance_id:   -- UUID of the instance
  template_guid: -- UUID of the keypoint template
  pose:          -- Pose ground truth information
  keypoints [    -- Array of keypoint data, one entry for each keypoint defined in the associated template file
    {
      index:     -- Index of the keypoint in the template
      x:         -- X pixel coordinate of the keypoint
      y:         -- Y pixel coordinate of the keypoint
      state:     -- 0: keypoint does not exist, 1: keypoint exists
    }, ...
  ]
}
```
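
The sketch below reads one keypoint annotation shaped like the schema above (as a parsed JSON object) and keeps the pixel coordinates of keypoints whose `state` is 1. The annotation values are hypothetical, and only the fields the function needs are included:

```python
def existing_keypoints(annotation):
    """Return {index: (x, y)} for keypoints whose state is 1 (keypoint exists)."""
    return {
        kp["index"]: (kp["x"], kp["y"])
        for kp in annotation["keypoints"]
        if kp["state"] == 1
    }


# Hypothetical annotation; only the fields used above are filled in.
annotation = {
    "label_id": 1,
    "keypoints": [
        {"index": 0, "x": 10.0, "y": 20.0, "state": 1},
        {"index": 1, "x": 0.0, "y": 0.0, "state": 0},
        {"index": 2, "x": 5.5, "y": 6.0, "state": 1},
    ],
}
print(existing_keypoints(annotation))  # {0: (10.0, 20.0), 2: (5.5, 6.0)}
```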

#### Keypoint Template

Keypoint templates define the keypoints and skeletal connections that the KeypointLabeler captures. The keypoint template takes advantage of Unity's humanoid animation rig and lets you automatically associate template keypoints with animation rig joints. You can also choose to ignore the rigged points, or add points that the rig does not define.

The Perception package includes a COCO keypoint template.

##### Editor

The keypoint template editor lets you create and modify keypoint templates. The editor consists of the header information, the keypoint array, and the skeleton array.

_Header section of the keypoint template_

In the header section, you can change the name of the template and supply the textures you want to use for the keypoint visualization.

_Keypoint section of the keypoint template_

The keypoint section lets you create and edit keypoints and associate them with Unity animation rig points. Each keypoint record has four fields:

* `Label`: the name of the keypoint.
* `Associate to Rig`: a boolean value which, if true, automatically maps the keypoint to the GameObject defined by the rig.
* `Rig Label`: the rig component to associate with the keypoint. Only needed if `Associate to Rig` is true.
* `Color`: the RGB color value of the keypoint in the visualization.

_Skeleton section of the keypoint template_

The skeleton section lets you create connections between joints, which defines the skeleton of a labeled object.

##### Format

```
annotation_definition.spec {
  template_id:     -- The UUID of the template
  template_name:   -- Human-readable name of the template
  key_points [     -- Array of joints defined in this template
    {
      label:       -- The label of the joint
      index:       -- The index of the joint
    }, ...
  ]
  skeleton [       -- Array of skeletal connections (which joints are connected to one another) defined in this template
    {
      joint1:      -- The first joint of the connection
      joint2:      -- The second joint of the connection
    }, ...
  ]
}
```
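
To draw or analyze a skeleton, it can help to translate `skeleton` entries into keypoint labels. A minimal sketch, assuming `joint1` and `joint2` hold keypoint `index` values (the format above does not state this explicitly) and using a hypothetical three-keypoint template:

```python
def skeleton_label_pairs(spec):
    """Translate skeleton joint indices into (label, label) pairs."""
    label_by_index = {kp["index"]: kp["label"] for kp in spec["key_points"]}
    return [
        (label_by_index[bone["joint1"]], label_by_index[bone["joint2"]])
        for bone in spec["skeleton"]
    ]


# Hypothetical template spec following the format above.
spec = {
    "template_name": "example_face",
    "key_points": [
        {"label": "nose", "index": 0},
        {"label": "left_eye", "index": 1},
        {"label": "right_eye", "index": 2},
    ],
    "skeleton": [
        {"joint1": 0, "joint2": 1},
        {"joint1": 0, "joint2": 2},
    ],
}
print(skeleton_label_pairs(spec))  # [('nose', 'left_eye'), ('nose', 'right_eye')]
```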

#### Animation Pose Label

This file maps timestamps in an animation to pose labels.
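
One way to use such a mapping is to look up which pose label applies at a given animation time. The sketch assumes a hypothetical representation, sorted start timestamps paired with labels where each label applies until the next timestamp; the actual file's fields are not described here:

```python
import bisect


def pose_at(time, timestamps, labels):
    """Return the pose label active at `time`, or None before the first timestamp.

    Hypothetical format: timestamps are sorted ascending, and labels[i]
    applies from timestamps[i] until the next timestamp.
    """
    i = bisect.bisect_right(timestamps, time) - 1
    return labels[i] if i >= 0 else None


timestamps = [0.0, 0.5, 1.2]
labels = ["standing", "walking", "sitting"]
print(pose_at(0.7, timestamps, labels))  # walking
print(pose_at(2.0, timestamps, labels))  # sitting
```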

## Limitations

Ground truth is not compatible with all rendering features, especially those that modify the visibility or shape of objects in the frame.

When generating ground truth:

* Unity does not run vertex and geometry shaders.

* Unity ignores transparency and treats all geometry as opaque.

* Unity does not run post-processing effects, except built-in lens distortion in URP and HDRP.

If you discover more incompatibilities, please open an issue in the [Perception GitHub repository](https://github.com/Unity-Technologies/com.unity.perception/issues).