Current commit

9728cea0

10 files changed, with 260 insertions and 132 deletions

10 com.unity.perception/Documentation~/DatasetCapture.md

125 com.unity.perception/Documentation~/GettingStarted.md

55 com.unity.perception/Documentation~/PerceptionCamera.md

2 com.unity.perception/Documentation~/Randomization/Index.md

14 com.unity.perception/Documentation~/SetupSteps.md

8 com.unity.perception/Documentation~/TableOfContents.md

24 com.unity.perception/Documentation~/index.md

27 com.unity.perception/Documentation~/GroundTruthLabeling.md

102 com.unity.perception/Documentation~/images/SemanticSegmentationLabelConfig.png

25 com.unity.perception/Documentation~/GroundTruth-Labeling.md

|

|||

# Getting Started with Perception |

|||

# Getting started with Perception |

|||

This walkthrough will provide creating a new scene for generating perception datasets including segmentation data and image captures. |

|||

This walkthrough shows you how to create a new scene in order to generate perception datasets including segmentation data and image captures. |

|||

First, follow [this guide](SetupSteps.md) to install Perception in your project. |

|||

To install Perception in your project, follow the [Installing the Perception package in your project](SetupSteps.md) guide. |

|||

This step can be skipped for HDRP projects. |

|||

You can skip this step for HDRP projects. |

|||

1. Select your project's `ScriptableRenderer` asset and open the inspector window. In most projects it is located at `Assets/Settings/ForwardRenderer.asset`. |

|||

2. Click `Add Renderer Feature` and select `Ground Truth Renderer Feature` |

|||

1. Select your project's **ScriptableRenderer** asset and open the Inspector window. In most projects it is located at `Assets/Settings/ForwardRenderer.asset`. |

|||

2. Select **Add Renderer Feature** and **Ground Truth Renderer Feature**. |

|||

<img src="images/ScriptableRendererStep.png" align="middle"/> |

|||

|

|||

<br/>_ForwardRenderer_ |

|||

1. Create a new scene using File -> New Scene |

|||

2. `ctrl+s` to save the scene and give it a name |

|||

3. Select the Main Camera and reset the Position transform to 0 |

|||

4. In the Hierarchy window select the main camera |

|||

1. In the inspector panel of the main camera select Add Component |

|||

2. Add a **Perception Camera** component |

|||

1. Create a new scene using **File** > **New Scene** |

|||

2. Ctrl+S to save the scene and give it a name |

|||

3. Select the Main Camera and reset the **Position** transform to 0 |

|||

4. In the Hierarchy window select the **Main Camera** |

|||

1. In the inspector panel of the Main Camera select **Add Component** |

|||

2. Add a Perception Camera component |

|||

<img src="images/PerceptionCameraFinished.png" align="middle"/> |

|||

|

|||

<br/>_Perception Camera component_ |

|||

1. Create a cube by right-clicking in the Hierarchy window, select 3D Object -> Cube |

|||

2. Create 2 more cubes this way |

|||

1. Create a cube by right-clicking in the Hierarchy window and selecting **3D Object** > **Cube** |

|||

2. Create two more cubes this way |

|||

4. Position the Cubes in front of the main Camera |

|||

4. Position the cubes in front of the Main Camera |

|||

|

|||

|

|||

<br/>_Position of the cubes in front of the Main Camera_ |

|||

|

|||

5. On each cube, in the Inspector panel add a Labeling component |

|||

1. Select **Add (+)** |

|||

2. In the text field add the name of the object, for example Crate. Unity uses this label in the semantic segmentation images. |

|||

|

|||

|

|||

<br/>_A labeling component, for example "Crate"_ |

|||

|

|||

6. Create and set up an IdLabelConfig |

|||

1. In the Project panel select **Add (+)**, then **Perception** > **ID Label Config** |

|||

2. In the Assets folder, select the new **IdLabelConfig** |

|||

3. In the Inspector, select **Add to list (+)** three times |

|||

4. In the three label text fields, add the text from the Labeling script on the objects you created in the Scene (that is Crate, Cube and Box) |

|||

|

|||

|

|||

<br/>_IdLabelConfig with three labels_ |

|||

|

|||

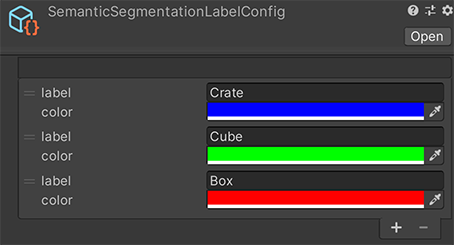

7. Create and set up a SemanticSegmentationLabelingConfiguration |

|||

|

|||

1. In the Project panel select **Add (+)**, then **Perception** > **Semantic Segmentation Label Config** |

|||

2. In the Assets folder, select the new SemanticSegmentationLabelingConfiguration |

|||

3. In the Inspector, select **Add to list (+)** three times |

|||

4. In the three label text fields, add the text from the Labeling script on the objects you created in the Scene (that is Crate, Cube and Box) |

|||

|

|||

|

|||

<br/>_SemanticSegmentationLabelConfig with three labels and three colors_ |

|||

<img src="images/CompletedScene.PNG" align="middle"/> |

|||

8. In the Hierarchy panel select the Main Camera |

|||

5. On each cube, from the inspector panel add a **Labeling** component |

|||

1. Click the **+** |

|||

2. In the text field add the name of the object i.e Crate. This will be the label used in the semantic segmentation images |

|||

9. Add the IdLabelConfig to the Perception Camera script |

|||

<img src="images/LabeledObject.PNG" align="middle"/> |

|||

1. In the Perception Camera script, find the following three Camera Labelers: BoundingBox2DLabeler, ObjectCountLabeler and RenderedObjectInfoLabeler. For each Camera Labeler, in the Id Label Config field (or Label Config field, for the ObjectCountLabeler Camera Labeler), click the circle button. |

|||

2. In the Select IdLabelConfig window, select the **IdLabelConfig** you created. |

|||

6. In the Project panel right click -> Perception -> Labeling Configuration |

|||

7. Select the new **ID Label Config** |

|||

1. Click the **+** |

|||

2. In the label text field add the same text that the Label script contains on the objects created in the scene (i.e Cube, Box, Crate) |

|||

|

|||

<img src="images/IDLabelingConfigurationFinished.PNG" align="middle"/> |

|||

10. Add the SemanticSegmentationLabelingConfiguration to the Perception Camera script |

|||

|

|||

1. In the Perception Camera script, find the SemanticSegmentationLabeler Camera Labeler. In its Label Config field, click the circle button. |

|||

2. In the Select SemanticSegmentationLabelConfig window, select the **SemanticSegmentationLabelConfig** you created. |

|||

8. Select the Main Camera in the Hierarchy panel |

|||

9. In the Perception Camera attach the ID Label Config created in previous step for each ID Label config |

|||

|

|||

<br/>_Perception Camera Labelers_ |

|||

<img src="images/MainCameraLabelConfig.PNG" align="middle"/> |

|||

1. Press play in the editor, allow the scene to run for a few seconds, and then exit playmode |

|||

2. In the console log you will see a Shutdown in Progress message that will show a file path to the location of the generated dataset. |

|||

1. In the Editor, press the play button, allow the scene to run for a few seconds, then exit Play mode. |

|||

2. In the console log you see a Shutdown in Progress message that shows a file path to the location of the generated dataset. |

|||

3. In the dataset folder you will find the following data: |

|||

3. You find the following data in the dataset folder: |

|||

<img src="images/rgb_2.png" align="middle"/> |

|||

|

|||

<br/>_RGB capture_ |

|||

_RGB image_ |

|||

|

|||

<br/>_Semantic segmentation image_ |

|||

<img src="images/segmentation_2.png" align="middle"/> |

|||

## Optional Step: realtime visualization of labelers |

|||

_Example semantic segmentation image_ |

|||

The Perception package comes with the ability to show realtime results of the labeler in the scene. To enable this capability: |

|||

## Optional Step: Realtime visualization of labelers |

|||

|

|||

<br/>_Example of Perception running with show visualizations enabled_ |

|||

The perception package now comes with the ability to show realtime results of the labeler in the scene. To enable this capability: |

|||

1. To use the visualizer, select the Main Camera, and in the Inspector pane, in the Perception Camera component, enable **Show Visualizations**. This enables the built-in labelers which includes segmentation data, 2D bounding boxes, pixel and object counts. |

|||

2. Enabling the visualizer creates new UI controls in the Editor's Game view. These controls allow you to control each of the individual visualizers. You can enable or disable individual visualizers. Some visualizers also include controls that let you change their output. |

|||

<img src="images/visualized.png" align="middle"/> |

|||

|

|||

_Example of perception running with show visualizations on_ |

|||

|

|||

<br/>_Visualization controls in action_ |

|||

1. To use the visualizer, verify that *Show Visualizations* is checked on in the Inspector pane. This turns on the built in labelers which includes segmentation data, 2D bounding boxes, pixel and object counts. |

|||

2. Turning on the visualizer creates new UI controls in the editor's game view. These controls allow you to atomically control each of the individual visualizers. Each individual can be turned on/off on their own. Some visualizers also include controls to change their output. |

|||

|

|||

<img src="images/controls.gif" align="middle"/> |

|||

|

|||

_Visualization controls in action_ |

|||

|

|||

***Important Note:*** The perception package takes advantage of asynchronous processing to ensure reasonable frame rates of a scene. A side effect of realtime visualization is that the labelers have to be applied to the capture in its actual frame, which will potentially adversely affect the scene's framerate. |

|||

**Important Note:** The Perception package uses asynchronous processing to ensure reasonable frame rates of a scene. A side effect of real-time visualization is that the labelers must be applied to the capture in its actual frame, which potentially adversely affects the Scene's framerate. |

|||

|

|||

* [Installation Instructions](SetupSteps.md) |

|||

* [Getting Started](GettingStarted.md) |

|||

* [Labeling](GroundTruth-Labeling.md) |

|||

* [Installation instructions](SetupSteps.md) |

|||

* [Getting started](GettingStarted.md) |

|||

* [Labeling](GroundTruthLabeling.md) |

|||

* [Dataset Capture](DatasetCapture.md) |

|||

* [Dataset capture](DatasetCapture.md) |

|||

* [Randomization](Randomization/Index.md) |

|||

|

|||

# Labeling |

|||

Many labelers require mapping the objects in the view to the values recorded in the dataset. As an example, Semantic Segmentation needs to determine the color to draw each object in the segmentation image. |

|||

|

|||

This mapping is accomplished for a GameObject by: |

|||

* Finding the nearest Labeling component attached to the object or its parents. |

|||

|

|||

* Finding the first label in the Labeling component that is present anywhere in the Labeler's Label Config. |

|||

|

|||

Unity uses the resolved Label Entry from the Label Config to produce the final output. |
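The resolution steps above can be sketched in plain Python. This is a minimal model for illustration only, not the Perception package's API: `Node`, `nearest_labeling`, and `resolve_label` are hypothetical names, and the Label Config is modeled as a dict that maps label strings to entries (here, colors).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical stand-in for a GameObject in a hierarchy."""
    name: str
    labels: Optional[list] = None   # None means "no Labeling component"
    parent: Optional["Node"] = None


def nearest_labeling(node):
    """Walk up from the node and return the first label list found."""
    current = node
    while current is not None:
        if current.labels is not None:
            return current.labels
        current = current.parent
    return None


def resolve_label(node, label_config):
    """Return (label, entry) for the first label of the nearest Labeling
    that appears in the config, or None if nothing matches."""
    labels = nearest_labeling(node)
    if labels is None:
        return None
    for label in labels:
        if label in label_config:
            return label, label_config[label]
    return None


# Assumed example config: label -> segmentation color
config = {"Crate": "red", "Box": "blue"}
shelf = Node("Shelf", labels=["Furniture", "Box"])
mesh = Node("Mesh", parent=shelf)                         # inherits Shelf's labels
crate = Node("Crate01", labels=["Crate"], parent=shelf)   # overrides its parent

print(resolve_label(mesh, config))
print(resolve_label(crate, config))
```

In this sketch, `mesh` has no labels of its own, so it falls back to its parent's list and resolves to the first entry the config knows about, while `crate` uses its own Labeling.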

|||

|

|||

## Labeling component |

|||

The Labeling component associates a list of string-based labels with a GameObject and its descendants. A Labeling component on a descendant overrides its parent's labels. |

|||

|

|||

## Label Config |

|||

Many labelers require require a Label Config asset. This asset specifies a list of all labels to be captured in the dataset along with extra information used by the various labelers. |

|||

|

|||

## Best practices |

|||

Generally algorithm testing and training requires a single label on an asset for proper identification such as “chair”, “table”, or “door". To maximize asset reuse, however, it is useful to give each object multiple labels in a hierarchy. |

|||

|

|||

For example, you could label an asset representing a box of Rice Krispies as `food\cereal\kellogs\ricekrispies` |

|||

|

|||

* “food”: type |

|||

* “cereal”: subtype |

|||

* “kellogs”: main descriptor |

|||

* “ricekrispies”: sub descriptor |

|||

|

|||

If the goal of the algorithm is to identify all objects in a Scene that are “food”, that label is available and can be used. Conversely if the goal is to identify only Rice Krispies cereal within a Scene that label is also available. Depending on the goal of the algorithm, you can use any mix of labels in the hierarchy. |
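As a rough illustration of how one hierarchical label can serve different goals, the sketch below assumes the path-style label is split on `\` into one label per level and that each algorithm supplies its own set of labels of interest. `split_hierarchy` and `first_match` are hypothetical helpers, not part of the Perception package.

```python
def split_hierarchy(path, sep="\\"):
    """Split a path-style label into one label per hierarchy level."""
    return [part for part in path.split(sep) if part]


def first_match(labels, wanted):
    """Return the first label that the given algorithm cares about."""
    for label in labels:
        if label in wanted:
            return label
    return None


labels = split_hierarchy(r"food\cereal\kellogs\ricekrispies")

# One asset, two different goals (both label sets are assumed examples).
print(first_match(labels, {"food", "furniture"}))
print(first_match(labels, {"ricekrispies", "cornflakes"}))
```

The same asset resolves to the broad label for a "find all food" task and to the specific label for a "find Rice Krispies" task, without relabeling the asset.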

|||

|

|||

# Labeling |

|||

Many labelers require mapping the objects in the view to the values recorded in the dataset. As an example, Semantic Segmentation needs to determine the color to draw each object in the segmentation image. |

|||

|

|||

This mapping is accomplished for a GameObject by: |

|||

* Finding the nearest Labeling component attached to the object or its ancestors. |

|||

* Find the first label in the Labeling which is present anywhere in the Labeler's Label Config. |

|||

* The resolved Label Entry from the Label Config is used to produce the final output. |

|||

|

|||

## Labeling component |

|||

The `Labeling` component associates a list of string-based labels with a GameObject and its descendants. A `Labeling` component on a descendant overrides its parent's labels. |

|||

|

|||

## Label Config |

|||

Many labelers require require a `Label Config` asset. This asset specifies a list of all labels to be captured in the dataset along with extra information used by the various labelers. |

|||

|

|||

## Best practices |

|||

Generally algorithm testing and training requires a single label on an asset for proper identification such as “chair”, “table”, or “door". To maximize asset reuse, however, it is useful to give each object multiple labels in a hierarchy. |

|||

|

|||

For example, an asset representing a box of Rice Krispies cereal could be labeled as `food\cereal\kellogs\ricekrispies` |

|||

|

|||

* “food” - type |

|||

* “cereal” - subtype |

|||

* “kellogs” - main descriptor |

|||

* “ricekrispies” - sub descriptor |

|||

|

|||

If the goal of the algorithm is to identify all objects in a scene that is “food” that label is available and can be used. Conversely if the goal is to identify only Rice Krispies cereal within a scene that label is also available. Depending on the goal of the algorithm any mix of labels in the hierarchy can be used. |

|||
