Current commit

e1da0870

597 files changed, including 39321 insertions and 5 deletions

- 23 README.md
- 7 tutorials/pose_estimation/.gitignore
- 67 tutorials/pose_estimation/Documentation/0_ros_setup.md
- 189 tutorials/pose_estimation/Documentation/1_set_up_the_scene.md
- 288 tutorials/pose_estimation/Documentation/2_set_up_the_data_collection_scene.md
- 151 tutorials/pose_estimation/Documentation/3_data_collection_model_training.md
- 249 tutorials/pose_estimation/Documentation/4_pick_and_place.md
- 29 tutorials/pose_estimation/Documentation/5_more_randomizers.md
- 1001 tutorials/pose_estimation/Documentation/Gifs/1_URDF_importer.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/1_import_prefabs.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/1_package_imports.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/1_robot_settings.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/1_rp_materials.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_aspect_ratio.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_light_randomizer.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_object_position_randomizer.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_robot_randomizer_settings.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_y_rotation_randomizer.gif
- 1001 tutorials/pose_estimation/Documentation/Gifs/2_y_rotation_randomizer_settings.gif
- 76 tutorials/pose_estimation/Documentation/Gifs/4_trajectory_field.png
- 1001 tutorials/pose_estimation/Documentation/Gifs/5_camera_randomizer.gif
- 223 tutorials/pose_estimation/Documentation/Images/0_GIT_installed.png
- 441 tutorials/pose_estimation/Documentation/Images/0_data_collection_environment.png
- 1001 tutorials/pose_estimation/Documentation/Images/0_json_environment.png
- 517 tutorials/pose_estimation/Documentation/Images/0_scene.png
- 569 tutorials/pose_estimation/Documentation/Images/1_URDF_importer.png
- 420 tutorials/pose_estimation/Documentation/Images/1_assets_preview.png
- 416 tutorials/pose_estimation/Documentation/Images/1_build_support.png
- 81 tutorials/pose_estimation/Documentation/Images/1_camera_settings.png
- 311 tutorials/pose_estimation/Documentation/Images/1_create_new_project.png
- 224 tutorials/pose_estimation/Documentation/Images/1_directional_light.png
- 1001 tutorials/pose_estimation/Documentation/Images/1_floor_settings.png
- 282 tutorials/pose_estimation/Documentation/Images/1_forward_renderer.png
- 347 tutorials/pose_estimation/Documentation/Images/1_forward_renderer_inspector.png
- 79 tutorials/pose_estimation/Documentation/Images/1_hierarchy.png
- 921 tutorials/pose_estimation/Documentation/Images/1_install_unity_version_1.png
- 677 tutorials/pose_estimation/Documentation/Images/1_install_unity_version_2.png
- 532 tutorials/pose_estimation/Documentation/Images/1_package_manager.png
- 1001 tutorials/pose_estimation/Documentation/Images/1_package_manager_complete.png
- 668 tutorials/pose_estimation/Documentation/Images/1_robot_settings.png
- 1001 tutorials/pose_estimation/Documentation/Images/1_scene_overview.png
- 238 tutorials/pose_estimation/Documentation/Images/2_Pose_Estimation_Data_Collection.png
- 78 tutorials/pose_estimation/Documentation/Images/2_RobotArmReachablePositionRandomizerSetting.png
- 1001 tutorials/pose_estimation/Documentation/Images/2_cube_label.png
- 527 tutorials/pose_estimation/Documentation/Images/2_domain_randomization.png
- 381 tutorials/pose_estimation/Documentation/Images/2_final_perception_script.png
- 275 tutorials/pose_estimation/Documentation/Images/2_fixed_length_scenario.png
- 285 tutorials/pose_estimation/Documentation/Images/2_light_randomizer_settings.png
- 649 tutorials/pose_estimation/Documentation/Images/2_multiple_objects.png
- 929 tutorials/pose_estimation/Documentation/Images/2_perception_camera.png
- 252 tutorials/pose_estimation/Documentation/Images/2_tags.png
- 287 tutorials/pose_estimation/Documentation/Images/2_tutorial_id_label_config.png
- 81 tutorials/pose_estimation/Documentation/Images/2_y_rotation_randomizer.png
- 230 tutorials/pose_estimation/Documentation/Images/3_data_logs.png
- 236 tutorials/pose_estimation/Documentation/Images/3_performance_model.png
- 348 tutorials/pose_estimation/Documentation/Images/3_publish_object.png
- 455 tutorials/pose_estimation/Documentation/Images/3_saved_data.png
- 162 tutorials/pose_estimation/Documentation/Images/3_tensorboard.png
- 332 tutorials/pose_estimation/Documentation/Images/4_Pose_Estimation_ROS.png
- 181 tutorials/pose_estimation/Documentation/Images/4_ROS_objects.png
- 499 tutorials/pose_estimation/Documentation/Images/4_docker_daemon.png
- 271 tutorials/pose_estimation/Documentation/Images/4_ros_connect.png
- 293 tutorials/pose_estimation/Documentation/Images/4_ros_settings.png
- 893 tutorials/pose_estimation/Documentation/Images/4_terminal.png
- 95 tutorials/pose_estimation/Documentation/Images/4_trajectory_field.png
- 100 tutorials/pose_estimation/Documentation/Images/5_uniform_pose_randomizer_settings.png
- 722 tutorials/pose_estimation/Documentation/Images/button_error.png
- 383 tutorials/pose_estimation/Documentation/Images/cube_environment.png
- 256 tutorials/pose_estimation/Documentation/Images/faq_base_mat.png
- 448 tutorials/pose_estimation/Documentation/Images/path_data.png
- 151 tutorials/pose_estimation/Documentation/Images/unity_motionplan.png
- 16 tutorials/pose_estimation/Documentation/install_unity.md
- 156 tutorials/pose_estimation/Documentation/quick_demo_full.md
- 54 tutorials/pose_estimation/Documentation/quick_demo_train.md
- 71 tutorials/pose_estimation/Documentation/troubleshooting.md
- 21 tutorials/pose_estimation/Model/.gitignore
- 50 tutorials/pose_estimation/Model/Dockerfile
- 68 tutorials/pose_estimation/Model/README.md
- 33 tutorials/pose_estimation/Model/config.yaml
- 157 tutorials/pose_estimation/Model/documentation/codebase_structure.md
- 274 tutorials/pose_estimation/Model/documentation/docs/docker_id_image.png
- 414 tutorials/pose_estimation/Model/documentation/docs/docker_settings.png
- 967 tutorials/pose_estimation/Model/documentation/docs/jupyter_notebook_local.png
- 976 tutorials/pose_estimation/Model/documentation/docs/kubeflow_details_pipeline.png
- 321 tutorials/pose_estimation/Model/documentation/docs/network.png
- 236 tutorials/pose_estimation/Model/documentation/docs/performance_model.png
- 339 tutorials/pose_estimation/Model/documentation/docs/successful_run_jupyter_notebook.png
- 162 tutorials/pose_estimation/Model/documentation/docs/tensorboard.png
- 100 tutorials/pose_estimation/Model/documentation/running_on_docker.md
- 22 tutorials/pose_estimation/Model/documentation/running_on_the_cloud.md
- 25 tutorials/pose_estimation/Model/environment-gpu.yml
- 23 tutorials/pose_estimation/Model/environment.yml

*.DS_Store
*.vsconfig
ROS/src/robotiq
ROS/src/universal_robot
ROS/src/ros_tcp_endpoint
ROS/src/moveit_msgs
ROS/src/ur3_moveit/models/

|

|||

# Pose-Estimation-Demo Tutorial: Part 0 |

|||

|

|||

This page provides steps on how to manually set up a catkin workspace for the Pose Estimation tutorial. |

|||

|

|||

1. Navigate to the `pose_estimation/` directory of this downloaded repository. This directory will be used as the ROS catkin workspace. |

|||

|

|||

2. Copy or download this directory to your ROS operating system if you are doing ROS operations on another machine, VM, or container.

|||

|

|||

3. If the following packages are not already installed on your ROS machine, run the following commands to install them: |

|||

|

|||

```bash
sudo apt-get update && sudo apt-get upgrade
sudo apt-get install python3-pip ros-noetic-robot-state-publisher ros-noetic-moveit ros-noetic-rosbridge-suite ros-noetic-joy ros-noetic-ros-control ros-noetic-ros-controllers ros-noetic-tf* ros-noetic-gazebo-ros-pkgs ros-noetic-joint-state-publisher
sudo pip3 install rospkg numpy jsonpickle scipy easydict torch==1.7.1+cu101 torchvision==0.8.2+cu101 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html
```

|||

|

|||

> Note: If you encounter errors installing PyTorch via the above `pip3` command, try the following instead:
> ```bash
> sudo pip3 install rospkg numpy jsonpickle scipy easydict torch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html
> ```

|||

|

|||

|

|||

Most of the ROS setup has been provided via the `ur3_moveit` package. This section will describe the provided files. |

|||

|

|||

4. If you have not already built and sourced the ROS workspace since importing the new ROS packages, navigate to your ROS workspace, and run:

```bash
catkin_make
source devel/setup.bash
```

|||

|

|||

Ensure there are no unexpected errors. |
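One quick way to confirm that the build step produced what the `source` command expects is to check for `devel/setup.bash` in the workspace. The sketch below assumes the default catkin layout; `workspace_is_built` is a hypothetical helper name, not part of the tutorial, and the check does not replace reading the `catkin_make` output for errors.

```bash
# Hypothetical helper: succeed if the given catkin workspace contains
# devel/setup.bash, the file sourced in the step above.
workspace_is_built() {
    [ -f "$1/devel/setup.bash" ]
}

# Example: check the current directory (assumed to be the workspace root).
if workspace_is_built "."; then
    echo "Workspace is built; run: source devel/setup.bash"
else
    echo "devel/setup.bash not found; run catkin_make first"
fi
```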

|||

|

|||

The ROS parameters will need to be set to your configuration in order to allow the server endpoint to fetch values for the TCP connection. |

|||

|

|||

5. Navigate to your ROS workspace (e.g. `~/catkin_ws`). Assign the ROS IP in the `params.yaml` file as follows: |

|||

|

|||

```bash
echo "ROS_IP: $(hostname -I)" > src/ur3_moveit/config/params.yaml
```

|||

|

|||

>Note: You can also manually assign this value by navigating to the `src/ur3_moveit/config/params.yaml` file and opening it for editing.
>```yaml
>ROS_IP: <your ROS IP>
>```
>e.g.
>```yaml
>ROS_IP: 10.0.0.250
>```
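
On a machine with more than one network interface (for example, a host that also runs Docker), `hostname -I` prints several space-separated addresses, and the `echo` command would write all of them into `params.yaml`. The following is a minimal sketch of one way to keep only the first address. `first_ip` is a hypothetical helper name, not part of the tutorial, and whether the first address is the one Unity can reach is an assumption to verify on your network.

```bash
# Hypothetical helper (not part of the tutorial): print the first
# whitespace-separated address from a string such as the output of `hostname -I`.
first_ip() {
    printf '%s\n' "$1" | awk '{ print $1; exit }'
}

# Example with a literal address list instead of a live `hostname -I` call:
first_ip "10.0.0.250 172.17.0.1 "

# On the ROS machine, from the workspace root, this could be used as:
# echo "ROS_IP: $(first_ip "$(hostname -I)")" > src/ur3_moveit/config/params.yaml
```

If the first address is not the right one, assigning the value manually in `params.yaml` remains the safer option.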

|||

|

|||

> Note: Learn more about the server endpoint and ROS parameters [here](https://github.com/Unity-Technologies/Unity-Robotics-Hub/blob/main/tutorials/ros_unity_integration/server_endpoint.md). |

|||

|

|||

This YAML file is loaded as a rosparam by the launch files provided for this tutorial; the relevant launch file has been copied below for reference. The same launch file also starts the `server_endpoint`, `pose_estimation`, and `mover` nodes.

```xml
<launch>
    <rosparam file="$(find ur3_moveit)/config/params.yaml" command="load"/>
    <include file="$(find ur3_moveit)/launch/demo.launch" />
    <node name="server_endpoint" pkg="ur3_moveit" type="server_endpoint.py" args="--wait" output="screen" respawn="true" />
    <node name="pose_estimation" pkg="ur3_moveit" type="pose_estimation_script.py" args="--wait" output="screen"/>
    <node name="mover" pkg="ur3_moveit" type="mover.py" args="--wait" output="screen" respawn="true" respawn_delay="2.0"/>
</launch>
```

|||

|

|||

>Note: The launch files for this project are available in the package's launch directory, i.e. `src/ur3_moveit/launch/`. |

|||

|

|||

The ROS workspace is now ready to accept commands! Return to [Part 4: Set up the Unity side](4_pick_and_place.md#step-3) to continue the tutorial. |

|||

|

|||

# Pose Estimation Demo: Part 1 |

|||

|

|||

In this first part of the tutorial, we will start by downloading and installing the Unity Editor. We will install our project's dependencies: the Perception, URDF, and TCP Connector packages. We will then use a set of provided prefabs to easily prepare a simulated environment containing a table, a cube, and a working robot arm. |

|||

|

|||

|

|||

**Table of Contents** |

|||

- [Requirements](#reqs) |

|||

- [Create a New Project](#step-1) |

|||

- [Download the Perception, the URDF and the TCP connector Packages](#step-2) |

|||

- [Set up the Ground Truth Render Feature](#step-3)
- [Set up the Scene](#step-4)

|||

|

|||

--- |

|||

|

|||

### <a name="reqs">Requirements</a> |

|||

|

|||

To follow this tutorial you need to **clone** this repository even if you want to create your Unity project from scratch. |

|||

|

|||

|

|||

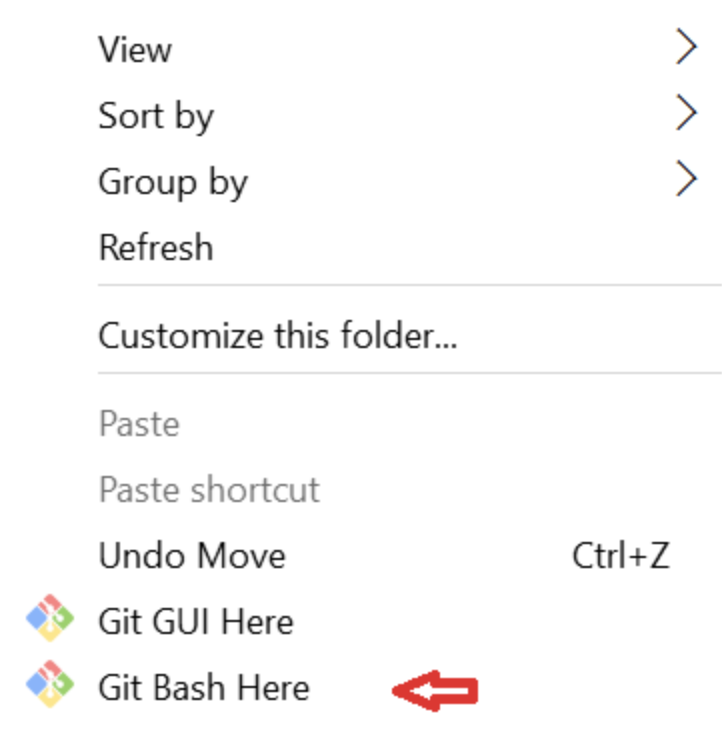

> Note For Windows users: You need to have a software enabling you to run bash files. One option is to download [GIT](https://git-scm.com/downloads). During installation of GIT, add GIT Bash to windows context menu by selecting its option. After installation, right click in your folder, and select [GIT Bash Here](Images/0_GIT_installed.png). |

|||

|

|||

1. Open a terminal and navigate to the directory where you want to clone the repository.
```bash
git clone https://github.com/Unity-Technologies/Unity-Robotics-Hub.git
```

|||

|

|||

Then navigate to the `Unity-Robotics-Hub/tutorials/pose_estimation` folder and run the provided script to generate the contents of the `universal_robot`, `moveit_msgs`, `ros_tcp_endpoint`, and `robotiq` folders.
```bash
cd Unity-Robotics-Hub/tutorials/pose_estimation
./submodule.sh
```
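
To confirm that the script populated the four folders, a check along the following lines can help. This is a sketch under assumptions: it takes the folders to live under `ROS/src/`, as the `.gitignore` entries in this commit suggest, and `missing_submodules` is a hypothetical helper name rather than something the repository provides.

```bash
# Hypothetical helper: print the name of each expected folder that is
# missing or empty under <root>/ROS/src (location assumed from .gitignore).
missing_submodules() {
    root="$1"
    for name in universal_robot moveit_msgs ros_tcp_endpoint robotiq; do
        dir="$root/ROS/src/$name"
        # A folder counts as populated if it contains at least one entry.
        if [ ! -d "$dir" ] || [ -z "$(ls -A "$dir")" ]; then
            printf '%s\n' "$name"
        fi
    done
}

# Example: run from the pose_estimation folder; no output means all four
# folders have content.
missing_submodules "."
```

If any names are printed, re-running `./submodule.sh` is the first thing to try.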

|||

|

|||

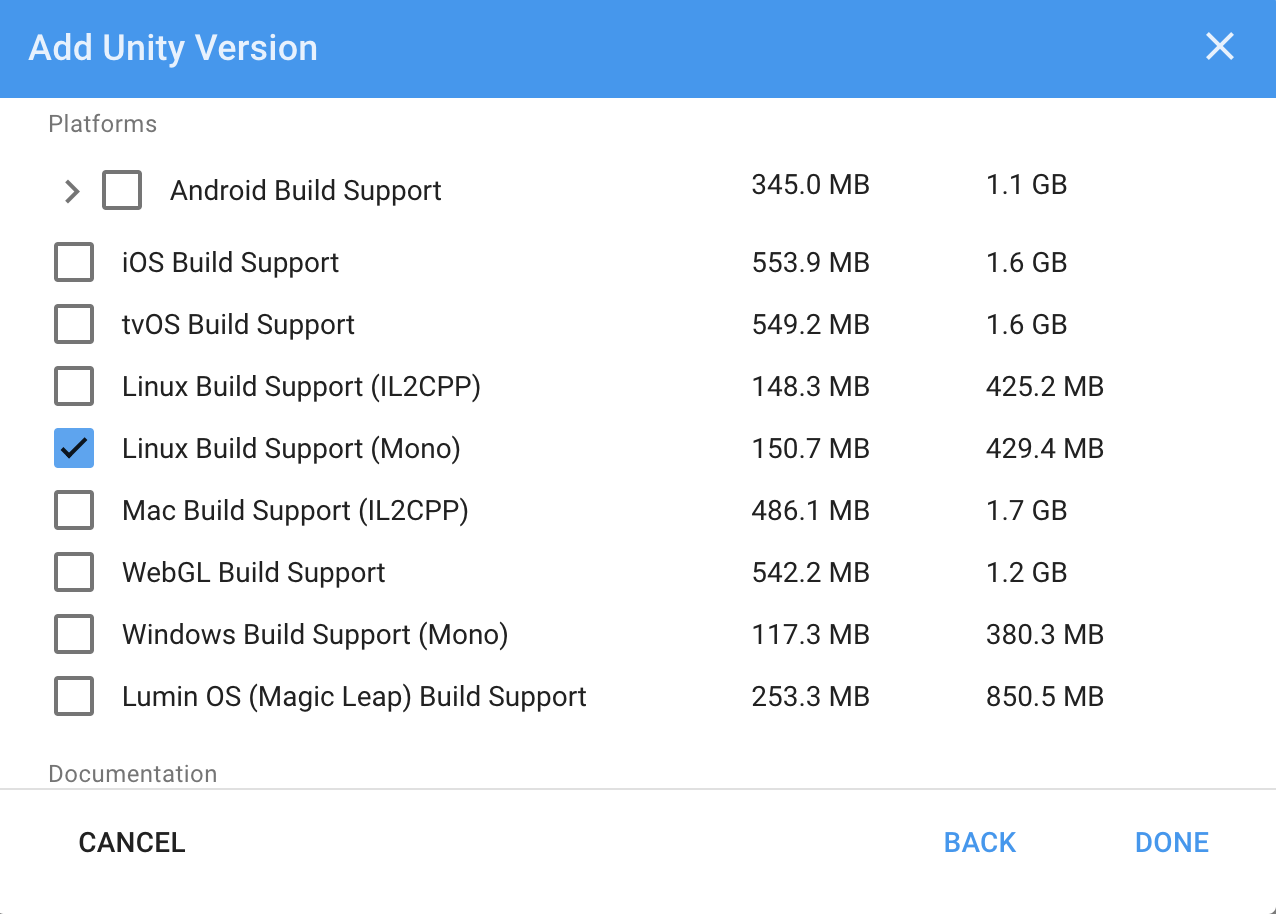

2. [Install Unity `2020.2.*`.](install_unity.md) |

|||

|

|||

|

|||

### <a name="step-1">Create a New Project</a> |

|||

When you first run Unity, you will be asked to open an existing project, or create a new one. |

|||

|

|||

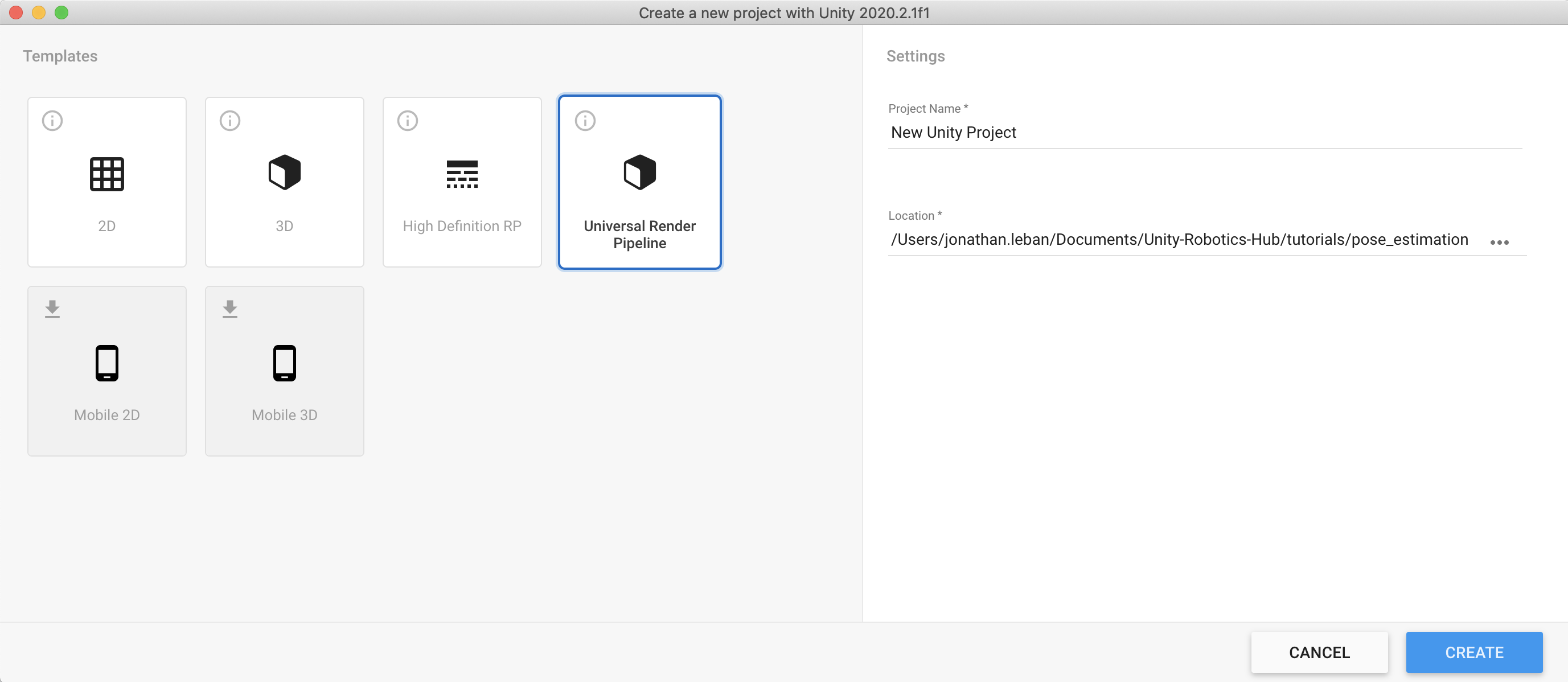

1. Open Unity and create a new project using the **Universal Render Pipeline**. Name your new project _**Pose Estimation Tutorial**_, and specify a desired location as shown below. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_create_new_project.png" align="center" width=950/> |

|||

</p> |

|||

|

|||

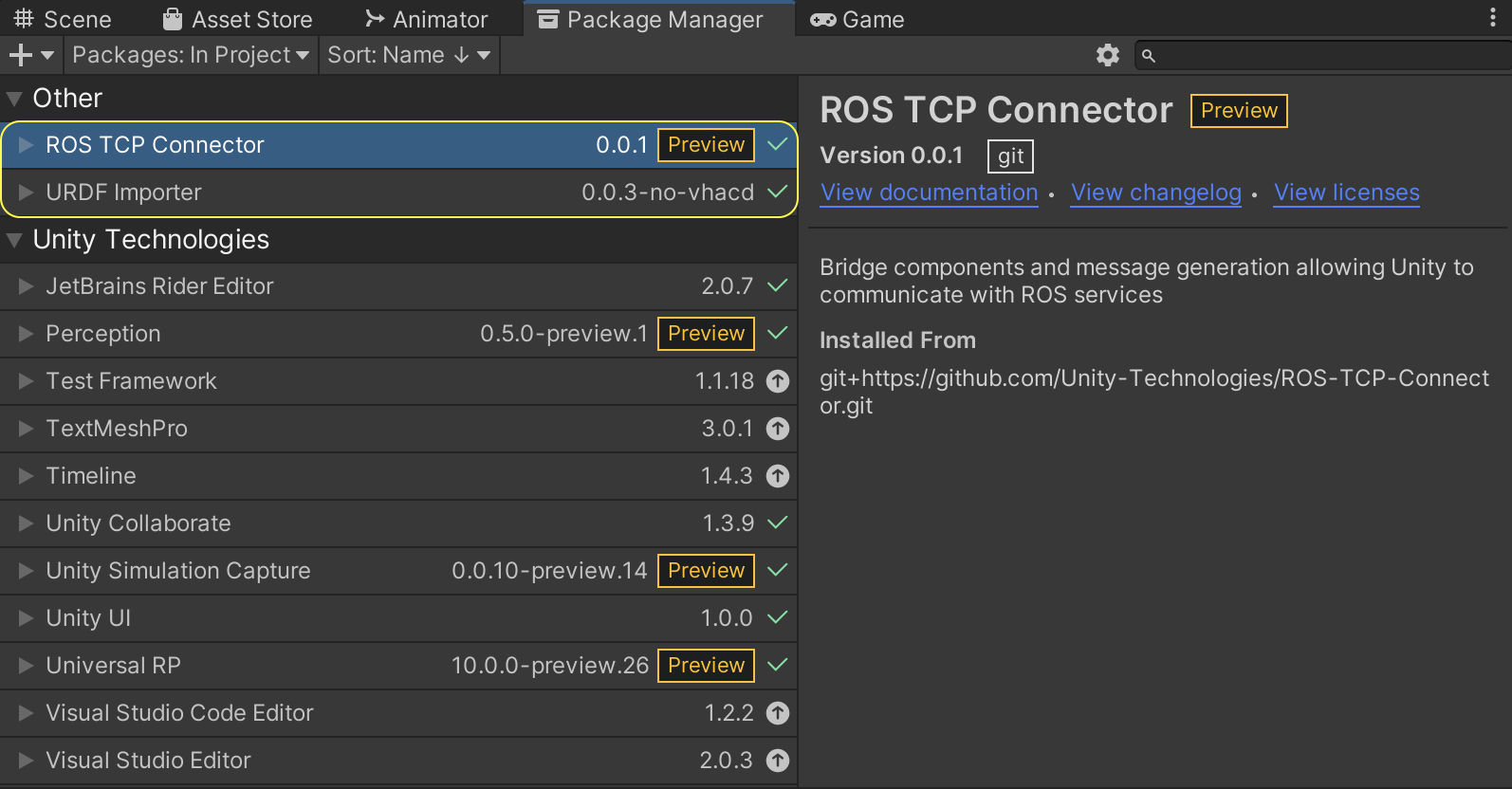

### <a name="step-2">Download the Perception, the URDF and the TCP connector Packages</a> |

|||

|

|||

Once your new project is created and loaded, you will be presented with the Unity Editor interface. From this point on, whenever we refer to the "editor", we mean the Unity Editor. |

|||

|

|||

#### How to install packages |

|||

We will need to download and install several packages. In general, packages can be installed in Unity with the following steps: |

|||

|

|||

- From the top menu bar, open _**Window**_ -> _**Package Manager**_. As the name suggests, the _**Package Manager**_ is where you can download new packages, update or remove existing ones, and access a variety of information and additional actions for each package. |

|||

|

|||

- Click on the _**+**_ sign at the top-left corner of the _**Package Manager**_ window and then choose the option _**Add package from git URL...**_. |

|||

|

|||

- Enter the package address and click _**Add**_. It will take some time for the manager to download and import the package. |

|||

|

|||

Installing the different packages may take some time (a few minutes).

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/1_package_imports.gif"/> |

|||

</p> |

|||

|

|||

|

|||

#### Install Dependencies |

|||

Install the following packages with the provided git URLs: |

|||

|

|||

1. [Perception package](https://github.com/Unity-Technologies/com.unity.perception) - `com.unity.perception@0.7.0-preview.1` |

|||

* This will help us collect training data for our machine learning model. |

|||

|

|||

2. [URDF Importer package](https://github.com/Unity-Technologies/URDF-Importer) - `https://github.com/Unity-Technologies/URDF-Importer.git#v0.1.2` |

|||

* This package will help us import a robot into our scene from a file in the [Unified Robot Description Format (URDF)](http://wiki.ros.org/urdf). |

|||

|

|||

3. [TCP Connector package](https://github.com/Unity-Technologies/ROS-TCP-Connector) - `https://github.com/Unity-Technologies/ROS-TCP-Connector.git#v0.1.2` |

|||

* This package will enable a connection between ROS and Unity. |

|||

|

|||

>Note: If you encounter a Package Manager issue, check the [Troubleshooting Guide](troubleshooting.md) for potential solutions. |

|||

|

|||

### <a name="step-3">Set up Ground Truth Render Feature</a> |

|||

|

|||

The Hierarchy, Scene View, Game View, Play/Pause/Step toolbar, Inspector, Project, and Console windows of the Unity Editor have been highlighted below for reference, based on the default layout. Custom Unity Editor layouts may vary slightly. A top menu bar option is available to re-open any of these windows: Window > General. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_scene_overview.png"/> |

|||

</p> |

|||

|

|||

|

|||

The Perception package relies on a "ground truth render feature" to save out labeled images as training data. You don't need to worry about the details, but follow the steps below to add this component:

|||

|

|||

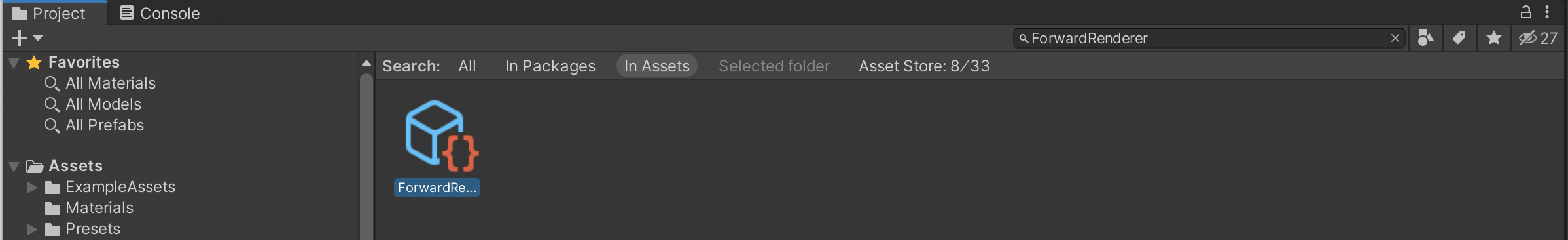

1. The _**Project**_ tab contains a search bar; use it to find the file named `ForwardRenderer`, and click on the file named `ForwardRenderer.asset` as shown below: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_forward_renderer.png"/> |

|||

</p> |

|||

|

|||

2. Click on the found file to select it. Then, from the _**Inspector**_ tab of the editor, click on the _**Add Renderer Feature**_ button, and select _**Ground Truth Renderer Feature**_ from the dropdown menu: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_forward_renderer_inspector.png" width=430 height=350/> |

|||

</p> |

|||

|

|||

|

|||

### <a name="step-4">Set up the Scene</a> |

|||

|

|||

#### The Scene |

|||

Simply put, in Unity a `Scene` contains any object that exists in the world. This world can be a game, or in this case, a data-collection-oriented simulation. Every new project contains a Scene named SampleScene, which is automatically opened when the project is created. This Scene comes with several objects and settings that we do not need, so let's create a new one.

|||

|

|||

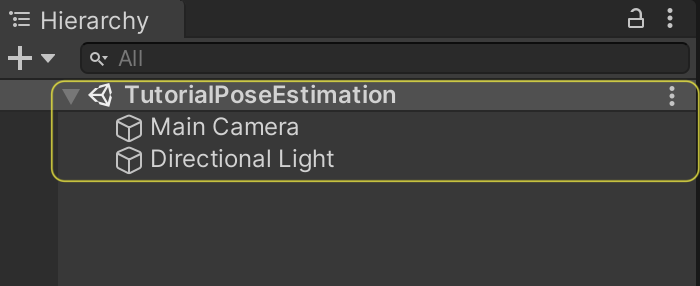

1. In the _**Project**_ tab, right-click on the `Assets > Scenes` folder and click _**Create -> Scene**_. Name this new Scene `TutorialPoseEstimation` and double-click on it to open it. |

|||

|

|||

The _**Hierarchy**_ tab of the editor displays all the Scenes currently loaded, and all the objects currently present in each loaded Scene, as shown below: |

|||

<p align="center"> |

|||

<img src="Images/1_hierarchy.png" width=500 height=200/> |

|||

</p> |

|||

|

|||

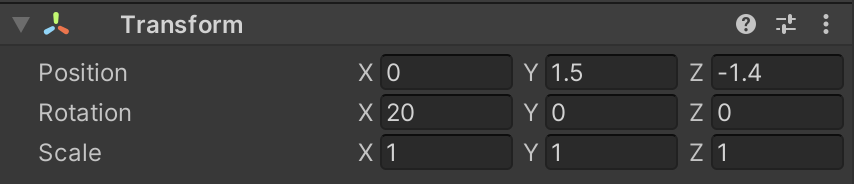

As seen above, the new Scene already contains a camera (`Main Camera`) and a light (`Directional Light`). We will now modify the camera's field of view and position to prepare it for the tutorial. |

|||

|

|||

2. Still in the _**Inspector**_ tab of the `Main Camera`, modify the camera's `Position` and `Rotation` to match the values shown below. This orients the camera so that it will have a good view of the objects we are about to add to the scene. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_camera_settings.png" height=117 width=500/> |

|||

</p> |

|||

|

|||

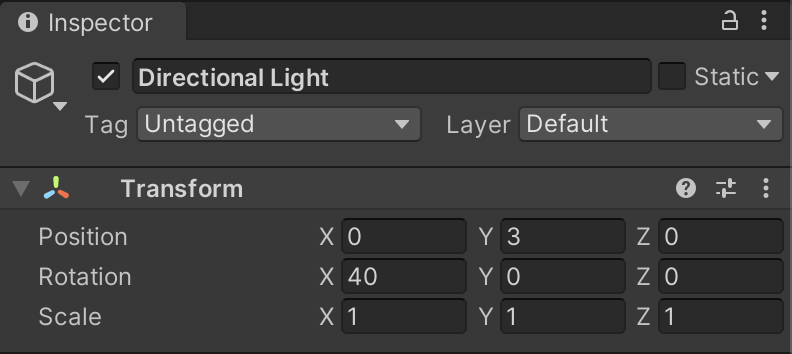

3. Click on `Directional Light` and in the _**Inspector**_ tab, modify the light's `Position` and `Rotation` to match the screenshot below. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_directional_light.png" height=217 width=500/> |

|||

</p> |

|||

|

|||

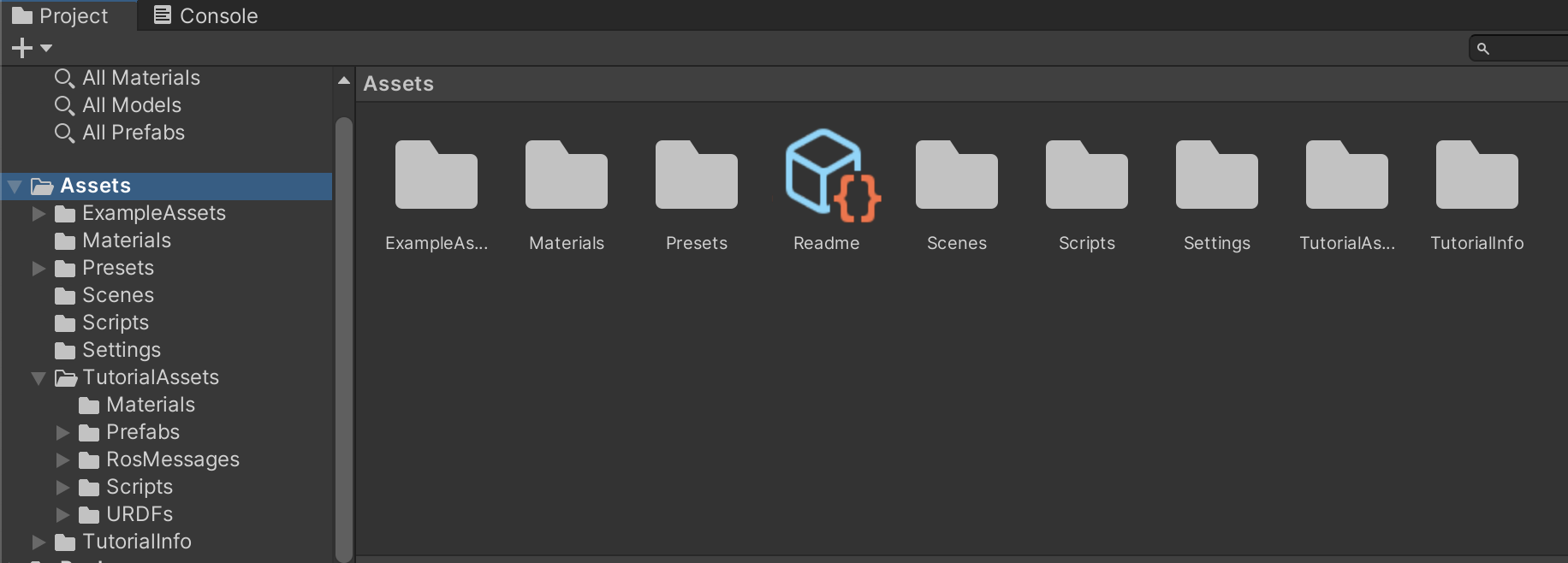

#### Adding Tutorial Files |

|||

Now it is time to add some more objects to our scene. Before doing so, we need to import some folders containing the required assets. |

|||

|

|||

4. Download [TutorialAssets.zip](https://github.com/Unity-Technologies/Unity-Robotics-Hub/releases/download/Pose-Estimation/TutorialAssets.zip), and unzip it. It should contain the following subfolders: `Materials`, `Prefabs`, `RosMessages`, `Scripts`, `URDFs`. |

|||

|

|||

5. Drag and Drop the `TutorialAssets` folder onto the `Assets` folder in the _**Project**_ tab. |

|||

|

|||

Your `Assets` folder should look like this:

|||

|

|||

<p align="center"> |

|||

<img src="Images/1_assets_preview.png"/> |

|||

</p> |

|||

|

|||

#### Using Prefabs |

|||

Unity’s [Prefab](https://docs.unity3d.com/Manual/Prefabs.html) system allows you to create, configure, and store a **GameObject** complete with all its components, property values, and child **GameObjects** as a reusable **Asset**. It is a convenient way to store complex objects. |

|||

|

|||

A prefab is just a file, and you can easily create an instance of the object in the scene from a prefab by dragging it into the _**Hierarchy panel**_. |

|||

|

|||

For your convenience, we have provided prefabs for most of the components of the scene (the cube, goal, table, and floor). |

|||

|

|||

6. In the _**Project**_ tab, go to `Assets > TutorialAssets > Prefabs > Part1` and drag and drop the `Cube` prefab inside the _**Hierarchy panel**_. |

|||

|

|||

7. Repeat the action with the `Goal`, `Table` and the `Floor`. |

|||

|

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/1_import_prefabs.gif"/> |

|||

</p> |

|||

|

|||

>Note: If you encounter an issue with the imported prefab materials, check the [Troubleshooting Guide](troubleshooting.md) for potential solutions. |

|||

|

|||

|

|||

#### Importing the Robot |

|||

Finally we will add the robot and the URDF files in order to import the UR3 Robot. |

|||

|

|||

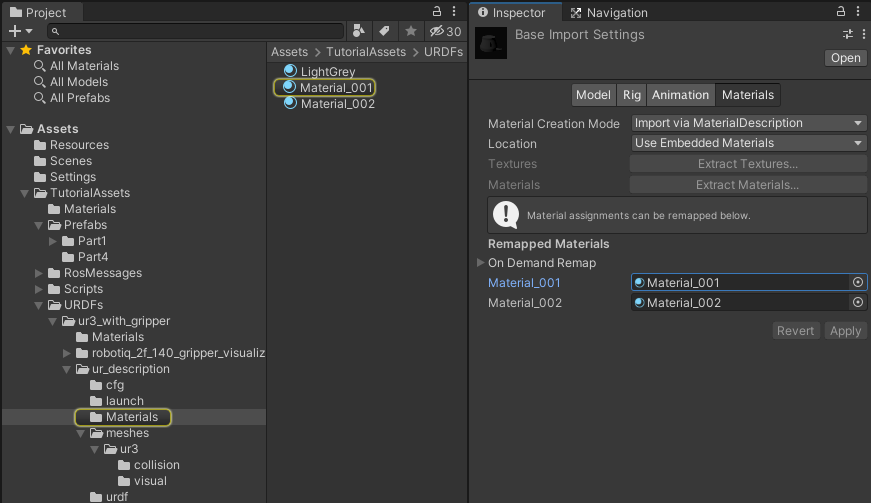

8. In the _**Project**_ tab, go to `Assets > TutorialAssets > URDFs > ur3_with_gripper` and right click on the `ur3_with_gripper.urdf` file and select `Import Robot From Selected URDF file`. A window will pop up, keep the default **Y Axis** type in the Import menu and the **Mesh Decomposer** to `VHACD`. Then, click Import URDF. These set of actions are showed in the following video. |

|||

|

|||

>Note: Unity uses a left-handed coordinate system in which the y-axis points up. However, many robotics packages use a right-handed coordinate system in which the z-axis or x-axis points up. For this reason, it is important to pay attention to the coordinate system when importing URDF files or interfacing with other robotics software.

>Note: The VHACD algorithm produces higher-quality convex hulls for collision detection than the default algorithm.

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/1_URDF_importer.gif" width=800 height=548/> |

|||

</p> |

|||

|

|||

>Note: If you encounter an issue with importing the robot, check the [Troubleshooting Guide](troubleshooting.md) for potential solutions. |

|||

|

|||

#### Setting up the Robot |

|||

|

|||

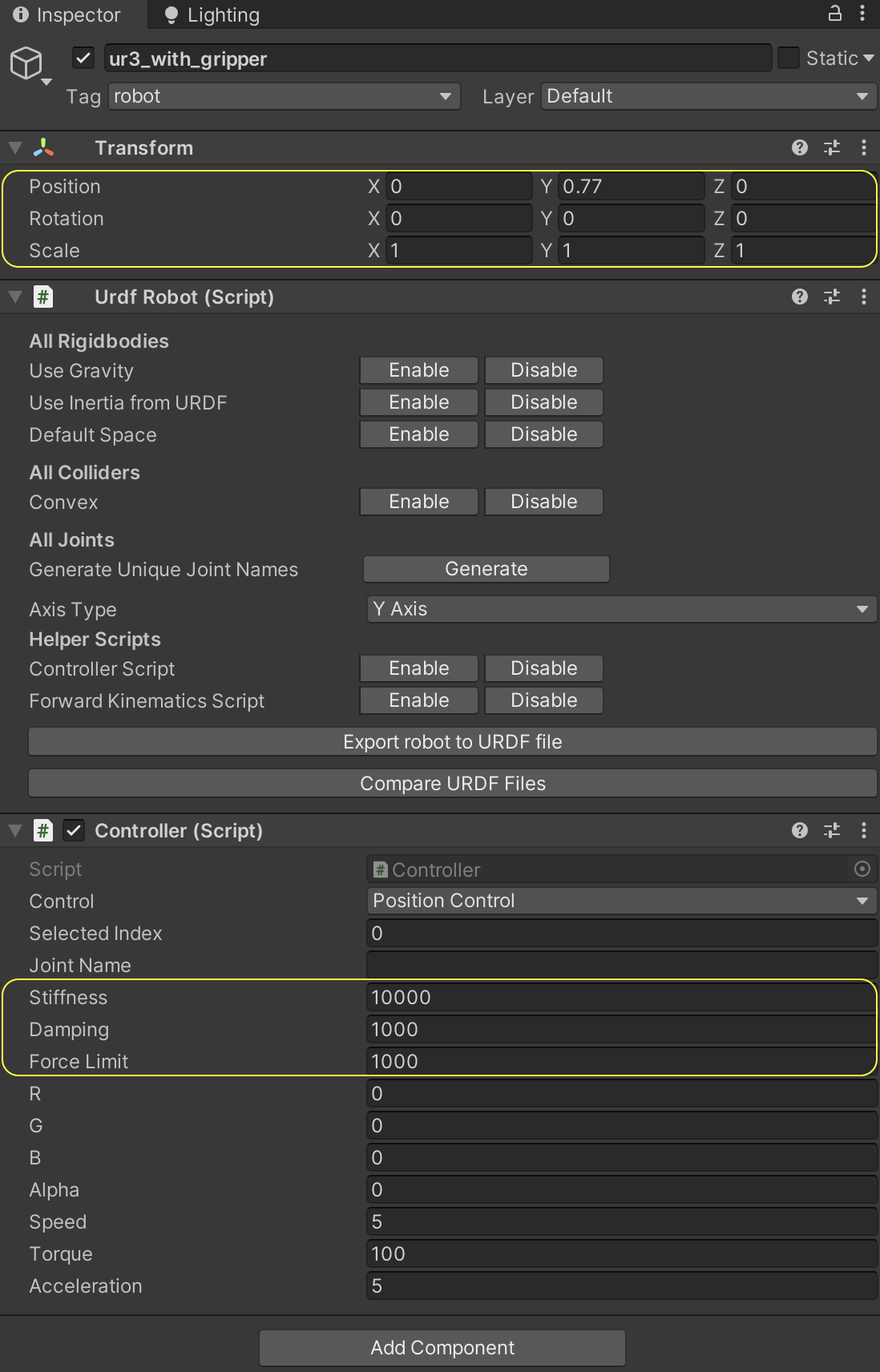

9. Select the `ur3_with_gripper` GameObject and in the _**Inspector**_ view, go to the `Controller` script and set the `Stiffness` to **10000**, the `Damping` to **1000** and the `Force Limit` to **1000**. These are physics properties that control how the robot moves. |

|||

|

|||

10. In the _**Hierarchy**_ tab, select the `ur3_with_gripper` GameObject and click on the arrow on the left, then click on the arrow on the left of `world`, then on `base_link`. In the `Articulation Body` component, toggle on `Immovable` for the `base_link`. This will fix the robot base to its current position.

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/1_robot_settings.gif" width=800 height=465/> |

|||

</p> |

|||

|

|||

|

|||

### Proceed to [Part 2](2_set_up_the_data_collection_scene.md). |

|||

|

|||

|

|||

|

|||

# Pose Estimation Demo: Part 2 |

|||

|

|||

In [Part 1](1_set_up_the_scene.md) of the tutorial, we learned: |

|||

* How to create a Unity Scene |

|||

* How to use the Package Manager to download and install Unity packages |

|||

* How to move and rotate objects in the scene |

|||

* How to instantiate Game Objects with Prefabs |

|||

* How to import a robot from a URDF file |

|||

|

|||

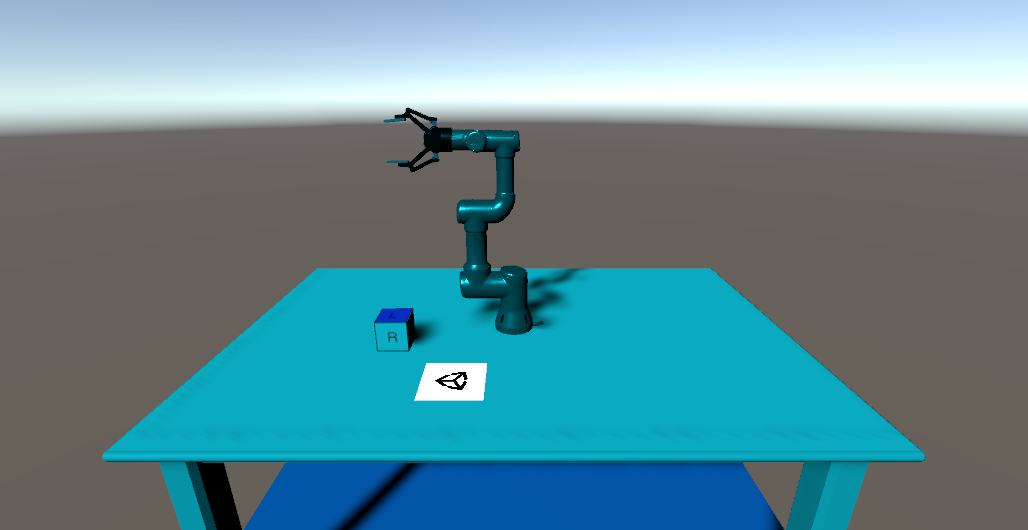

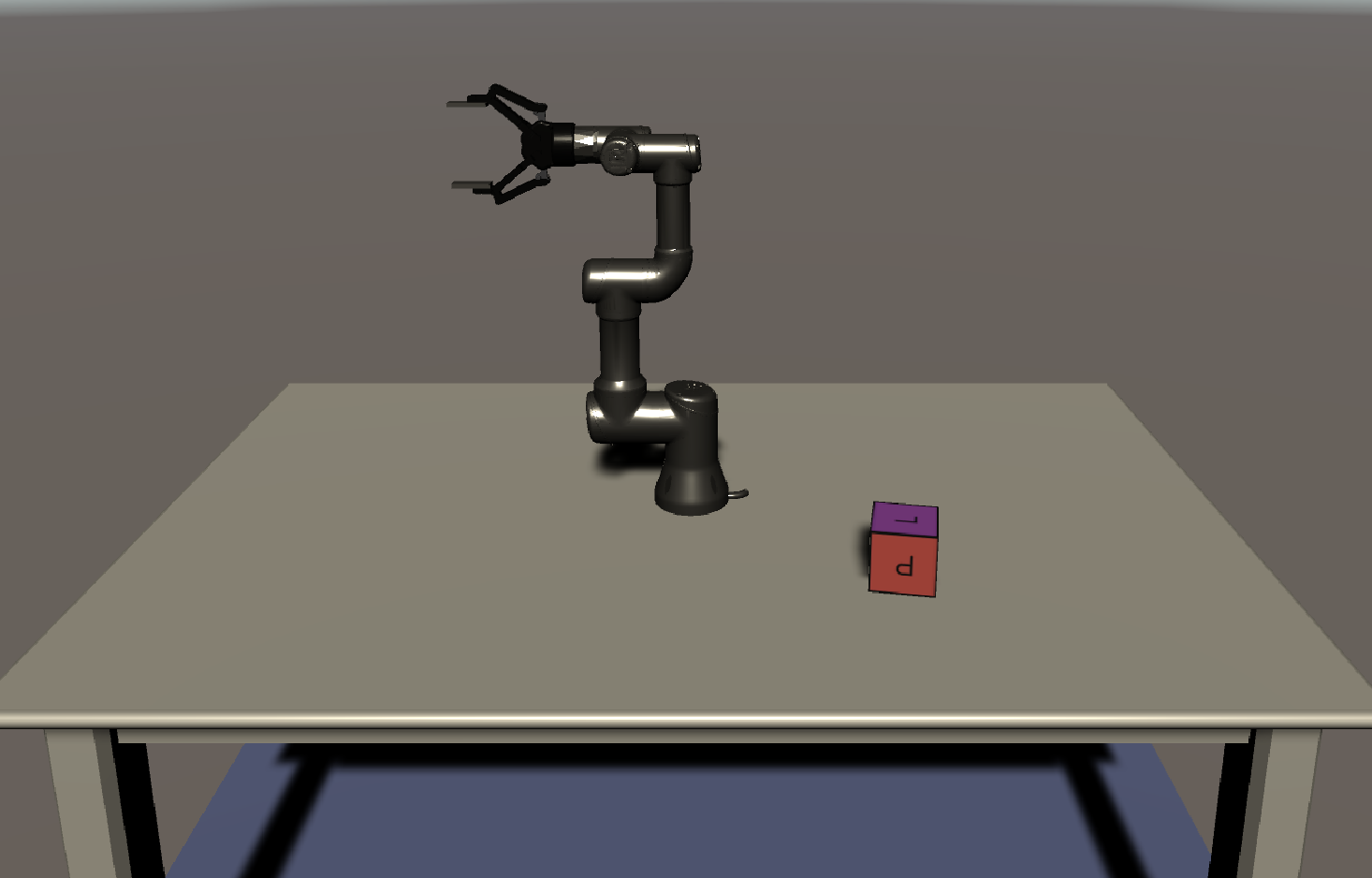

You should now have a table, a cube, a camera, and a robot arm in your scene. In this part we will prepare the scene for data collection with the Perception package. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_Pose_Estimation_Data_Collection.png" width="680" height="520"/> |

|||

</p> |

|||

|

|||

**Table of Contents** |

|||

- [Equipping the Camera for Data Collection](#step-1) |

|||

- [Equipping the Cube for Data Collection](#step-2) |

|||

- [Add and set up randomizers](#step-3) |

|||

|

|||

--- |

|||

|

|||

### <a name="step-1">Equipping the Camera for Data Collection</a> |

|||

|

|||

The Game view needs a fixed aspect ratio so that every captured image has the same size. This matters because the deep learning model is trained on these images, and during the pick-and-place task we will feed it a screenshot of the Game view. That screenshot must have the same resolution as the images collected for training.

|||

|

|||

1. Select the `Game` view and click the aspect ratio dropdown, which currently shows `Free Aspect`. Then click the **+** button (hovering over it shows the tooltip `Add new item`). Set the Width to `650` and the Height to `400`. The gif below shows these steps.

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_aspect_ratio.gif"/> |

|||

</p> |

|||

|

|||

We need to add a few components to our camera in order to equip it for synthetic data generation. |

|||

|

|||

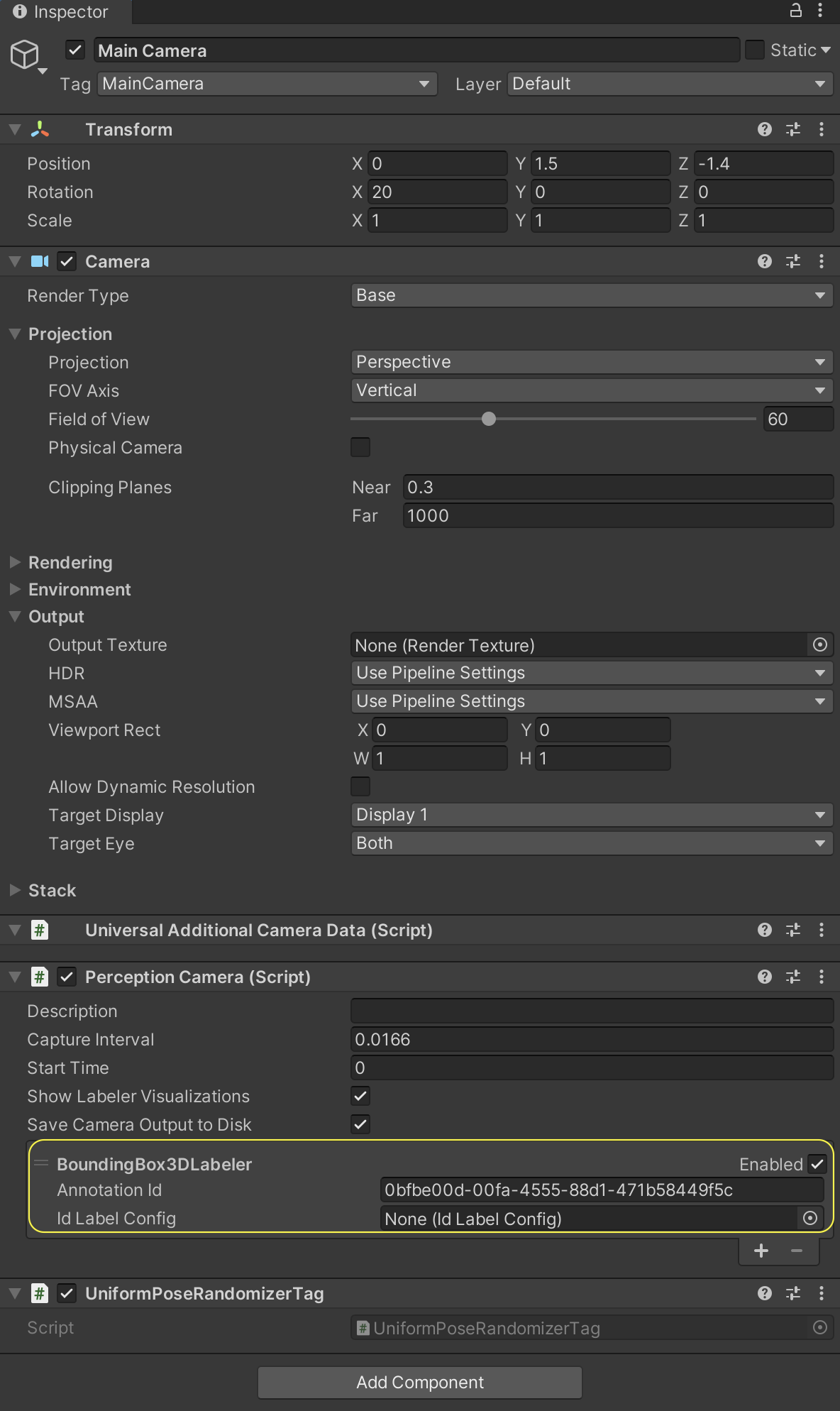

2. Select the `Main Camera` GameObject in the _**Hierarchy**_ tab and in the _**Inspector**_ tab, click on _**Add Component**_. |

|||

|

|||

3. Start typing `Perception Camera` in the search bar that appears, until the `Perception Camera` script is found, with a **#** icon to the left. |

|||

|

|||

4. Click on this script to add it as a component. Your camera is now a `Perception` camera. |

|||

|

|||

5. Go to `Edit > Project Settings > Editor` and uncheck `Asynchronous Shader Compilation`. |

|||

|

|||

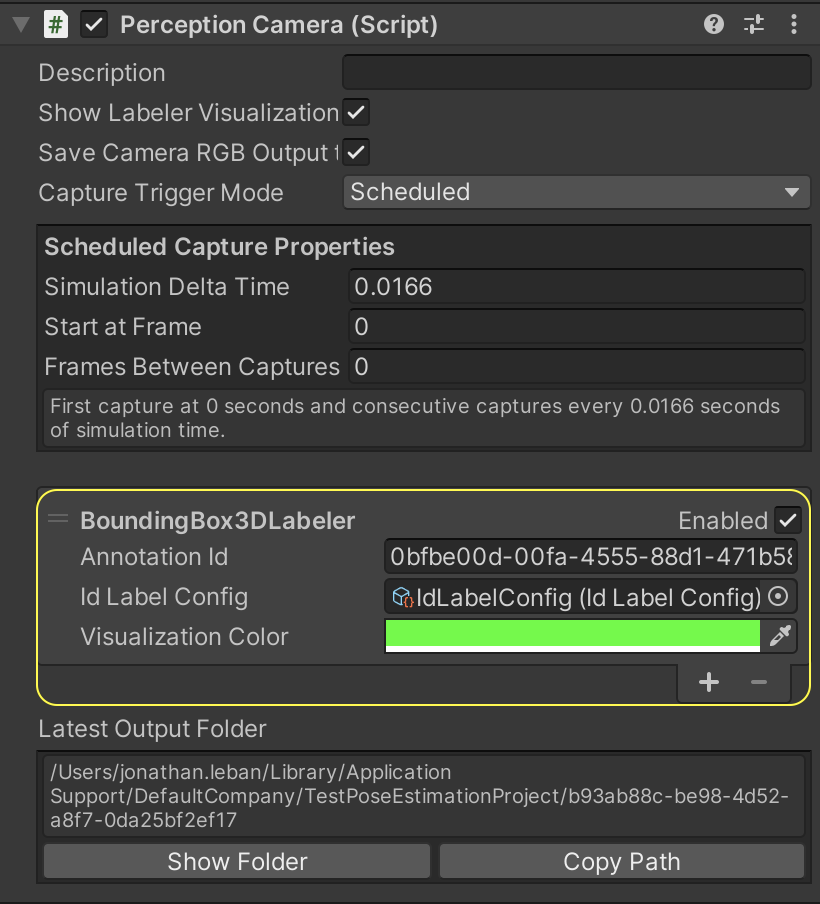

In the Inspector view for the Perception Camera component, the list of Camera Labelers is currently empty, as you can see with `List is Empty`. For each type of ground-truth you wish to generate alongside your captured frames, you will need to add a corresponding Camera Labeler to this list. In our project we want to extract the position and orientation of an object, so we will use the `BoudingBox3DLabeler`. |

|||

|

|||

There are several other types of labelers available, and you can even write your own. If you want more information on labelers, you can consult the [Perception package documentation](https://github.com/Unity-Technologies/com.unity.perception). |

|||

|

|||

6. In the _**Inspector**_ tab, in the `Perception Camera` script, click on the _**+**_ button at the bottom right corner of the `List is Empty` field, and select `BoundingBox3DLabeler`. Once applied, the labeler will highlight the edges of the labeled `GameObjects`. Don't worry, this highlighting won't show up in the image data we collect, it is just there to help us visualize the labeler. |

|||

|

|||

Once you add the labeler, the Inspector view of the Perception Camera component will look like this: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_perception_camera.png" width="500"/> |

|||

</p> |

|||

|

|||

|

|||

### <a name="step-2">Equipping the Cube for Data Collection</a> |

|||

|

|||

Our work above prepares us to collect RGB images from the camera and some associated 3D bounding box(es) for objects in our scene. However, we still need to specify _which_ objects we'd like to collect poses for. In this tutorial, we will only collect the pose of the cube, but you can add more objects if you'd like. |

|||

|

|||

You will notice that the `BoundingBox3DLabeler` component has a field named `Id Label Config`. The label configuration we link here will determine which object poses get saved in our dataset. |

|||

|

|||

1. In the _**Project**_ tab, right-click the `Assets` folder, then click `Create -> Perception -> Id Label Config`. |

|||

|

|||

This will create a new asset file named `IdLabelConfig` inside the `Assets` folder. |

|||

|

|||

Now that you have created your label configuration, you need to assign it to the labeler that you previously added to your `Perception Camera` component.

2. Select the `Main Camera` object from the _**Hierarchy**_ tab, and in the _**Inspector**_ tab, assign the newly created `IdLabelConfig` asset to the labeler's `Id Label Config` field. To do so, you can either drag and drop the asset into the field, or click on the small circular button next to the `Id Label Config` field, which brings up an asset selection window filtered to only show compatible assets.

|||

|

|||

The `Perception Camera` component will now look like the image below: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_final_perception_script.png" height=450/> |

|||

</p> |

|||

|

|||

Now we need to assign the same `IdLabelConfig` object to the cube, since it is the pose of the cube we wish to collect. |

|||

|

|||

3. Select the `Cube` GameObject and in the _**Inspector**_ tab, click on the _**Add Component**_ button. |

|||

|

|||

4. Start typing `Labeling` in the search bar that appears, until the `Labeling` script is found, with a **#** icon to the left and double click on it. |

|||

|

|||

5. Press the **Add New Label** button and change `New Label` to `cube_position`. Then, click on `Add to Label Config...` and below `Other Label Configs in Project` there should be `IdLabelConfig`. Click on `Add Label` and then press the red X to close the window. |

|||

|

|||

The _**Inspector**_ view of the `Cube` should look like the following: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_cube_label.png" height=650/> |

|||

</p> |

|||

|

|||

|

|||

### <a name="step-3">Add and set up randomizers</a>

|||

|

|||

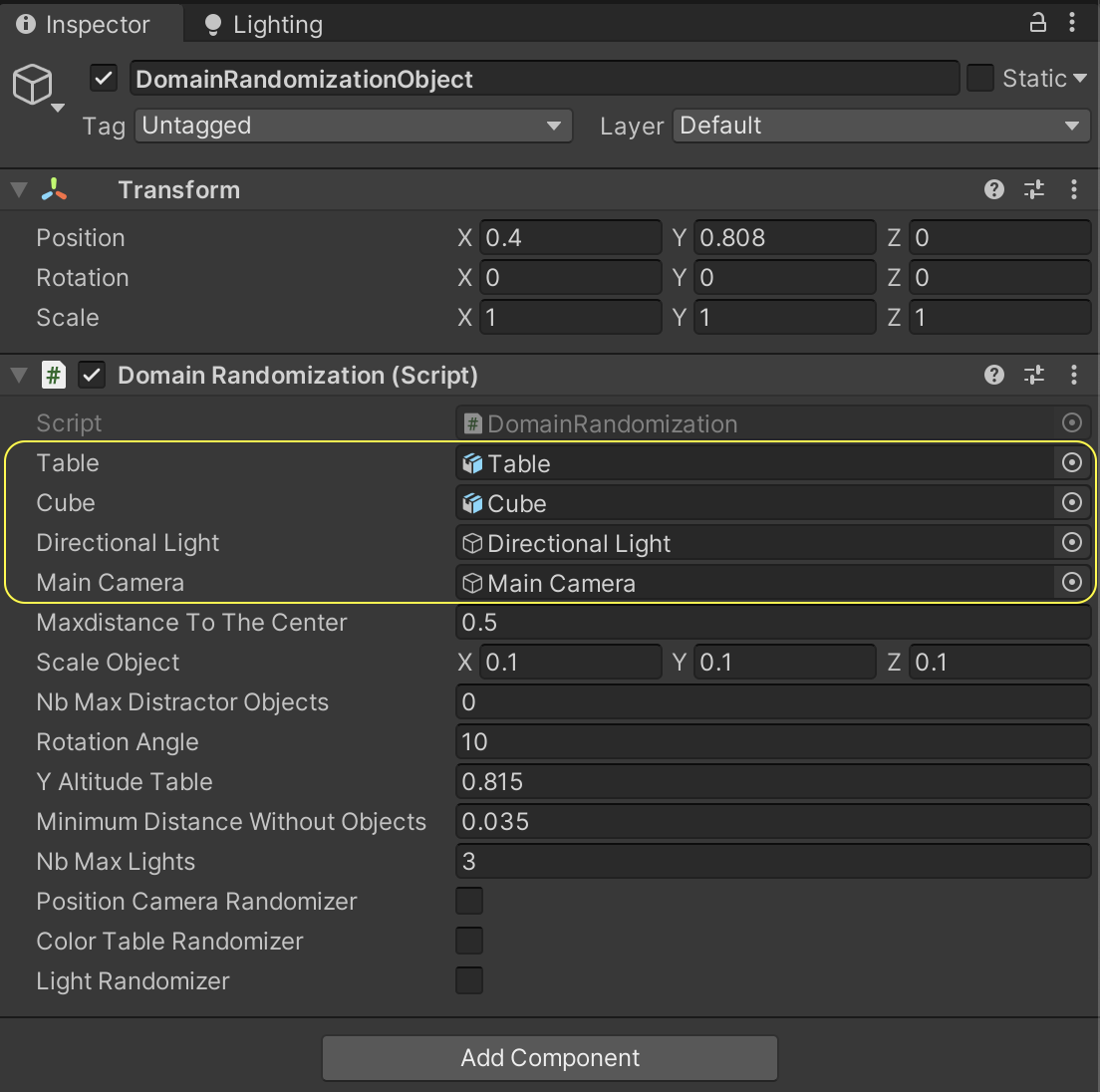

#### Domain Randomization |

|||

We will be collecting training data from a simulation, but most real perception use-cases occur in the real world. |

|||

To train a model to be robust enough to generalize to the real domain, we rely on a technique called [Domain Randomization](https://arxiv.org/pdf/1703.06907.pdf). Instead of training a model in a single, fixed environment, we _randomize_ aspects of the environment during training in order to introduce sufficient variation into the generated data. This forces the machine learning model to handle many small visual variations, making it more robust. |

|||

|

|||

In this tutorial, we will randomize the position and the orientation of the cube on the table, and also the color, intensity, and position of the light. Note that the Randomizers in the Perception package can be extended to many other aspects of the environment as well. |

|||

|

|||

|

|||

#### The Scenario |

|||

To start randomizing your simulation, you will first need to add a **Scenario** to your scene. Scenarios control the execution flow of your simulation by coordinating all Randomizer components added to them. If you want to know more about it, you can go see [this tutorial](https://github.com/Unity-Technologies/com.unity.perception/blob/master/com.unity.perception/Documentation~/Tutorial/Phase1.md#step-5-set-up-background-randomizers). There are several pre-built Randomizers provided by the Perception package, but they don't fit our specific problem. Fortunately, the Perception package also allows one to write [custom randomizers](https://github.com/Unity-Technologies/com.unity.perception/blob/master/com.unity.perception/Documentation~/Tutorial/Phase2.md), which we will do here. |

|||

|

|||

|

|||

1. In the _**Hierarchy**_, select the **+** and `Create Empty`. Rename this GameObject `Simulation Scenario`. |

|||

|

|||

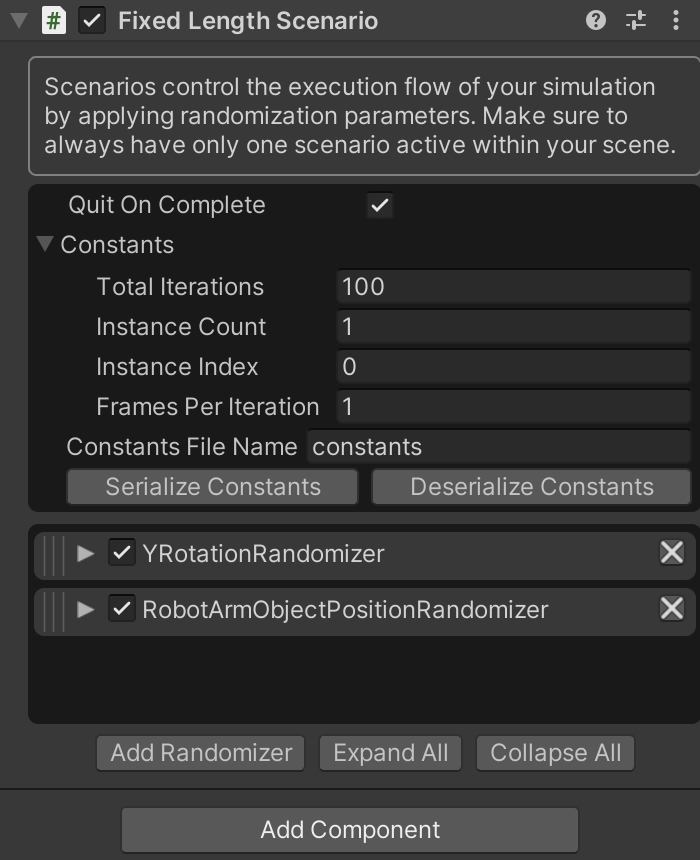

2. Select the `Simulation Scenario` GameObject and in the _**Inspector**_ tab, click on the _**Add Component**_ button. Start typing `Fixed Length Scenario` in the search bar that appears, until the `Fixed Length Scenario` script is found, with a **#** icon to the left. Then double click on it. |

|||

|

|||

Each Scenario executes a number of Iterations, and each Iteration carries on for a number of frames. These are timing elements you can leverage in order to customize your Scenarios and the timing of your randomizations. |

|||

|

|||

|

|||

#### Writing our Custom Object Rotation Randomizer |

|||

Each new Randomizer requires two C# scripts: a **Randomizer** and **RandomizerTag**. The **Randomizer** will go on the Scenario to orchestrate the randomization. The corresponding **RandomizerTag** is added to any GameObject(s) we want to _apply_ the randomization to. |

|||

|

|||

First, we will write a randomizer to randomly rotate the cube around its y-axis each iteration. |

|||

|

|||

3. In the _**Project**_ tab, right-click on the **Scripts** folder and select `Create -> C# Script`. Name your new script file `YRotationRandomizer`. |

|||

|

|||

4. Create another script and name it `YRotationRandomizerTag`. |

|||

|

|||

5. Double-click `YRotationRandomizer.cs` to open it in _**Visual Studio**_. |

|||

|

|||

Note that while _**Visual Studio**_ is the default option, you can choose any text editor of your choice. You can change this setting in _**Preferences -> External Tools -> External Script Editor**_. |

|||

|

|||

6. Remove the contents of the class and copy/paste the code below: |

|||

|

|||

```csharp
using System.Collections;
using System.Collections.Generic;
using System;
using UnityEngine;
using UnityEngine.Perception.Randomization.Parameters;
using UnityEngine.Perception.Randomization.Randomizers;

[Serializable]
[AddRandomizerMenu("Perception/Y Rotation Randomizer")]
public class YRotationRandomizer : InferenceRandomizer
{
    public FloatParameter random; // in range (0, 1)

    protected override void OnIterationStart()
    {
        OnCustomIteration();
    }

    public override void OnCustomIteration()
    {
        /* Runs at the start of every iteration. */

        IEnumerable<YRotationRandomizerTag> tags = tagManager.Query<YRotationRandomizerTag>();
        foreach (YRotationRandomizerTag tag in tags)
        {
            float yRotation = random.Sample() * 360.0f;

            // sets rotation
            tag.SetYRotation(yRotation);
        }
    }
}
```

|||

|

|||

The purpose of this piece of code is to rotate a set of objects randomly about their y-axes every iteration. In Unity, the y-axis points "up". |

|||

|

|||

>Note: If you look at the Console tab of the editor now, you will see an error regarding `YRotationRandomizerTag` not being found. This is to be expected, since we have not yet created this class; the error will go away once we create the class later. |

|||

|

|||

Let's go through the code above and understand each part: |

|||

* Near the top, you'll notice the line `[AddRandomizerMenu("Perception/Y Rotation Randomizer")]`. This sets the name shown in the UI when we add the Randomizer to the `Fixed Length Scenario`.
* The `YRotationRandomizer` class extends `InferenceRandomizer`, a class provided with this project that builds on `Randomizer`, the base class for all Randomizers that can be added to a Scenario. This base class provides many useful functions and properties that speed up the creation of new Randomizers.
* The `FloatParameter` field contains a seeded random number generator. We can set the range and the distribution of this value in the editor UI.
* The `OnIterationStart()` function is a life-cycle method on all `Randomizer`s. The Scenario calls it once at the start of every Iteration.
* The `OnCustomIteration()` function performs the actual randomization of the cube's rotation about its y-axis. Although its contents could live directly inside `OnIterationStart()`, we chose this architecture so that other scripts can call the rotation logic directly.
* The `tagManager` is an object available to every `Randomizer` that can help us find game objects tagged with a given `RandomizerTag`. In our case, we query the `tagManager` to gather references to all the objects with a `YRotationRandomizerTag` on them.
* We then loop through these `tags` to rotate each tagged object:
    * `random.Sample()` gives us a random float between 0 and 1, which we multiply by 360 to obtain an angle in degrees.
    * We then rotate the object using the `SetYRotation()` method on the tag, which we will write in a moment.
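The role of the seeded generator can be illustrated outside Unity. The Python sketch below is only an analogy for `FloatParameter` (it uses Python's `random.Random`, not the Perception sampler, and the function name is hypothetical): with the same seed, the sequence of sampled rotations is reproduced exactly, which is what makes a randomized dataset repeatable.

```python
import random


def sample_y_rotations(seed: int, count: int) -> list:
    """Draw `count` y-rotations in degrees from a generator seeded with `seed`."""
    rng = random.Random(seed)
    # rng.random() lies in [0, 1); scaling by 360 turns it into an angle in degrees.
    return [rng.random() * 360.0 for _ in range(count)]


first_run = sample_y_rotations(seed=42, count=3)
second_run = sample_y_rotations(seed=42, count=3)
other_seed = sample_y_rotations(seed=7, count=3)
```

Here `first_run` and `second_run` are identical, while a different seed gives a different sequence.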

|||

|

|||

7. Open `YRotationRandomizerTag.cs` and replace its contents with the code below: |

|||

|

|||

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.Perception.Randomization.Randomizers;

public class YRotationRandomizerTag : RandomizerTag
{
    private Vector3 originalRotation;

    private void Start()
    {
        originalRotation = transform.eulerAngles;
    }

    public void SetYRotation(float yRotation)
    {
        transform.eulerAngles = new Vector3(originalRotation.x, yRotation, originalRotation.z);
    }
}
```

|||

The `Start` method is automatically called once, at runtime, before the first frame. Here, we use the `Start` method to save this object's original rotation in a variable. When `SetYRotation` is called by the Randomizer every iteration, it updates the rotation around the y-axis, but keeps the x and z components of the rotation the same. |
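The Randomizer/RandomizerTag split described above can be sketched in plain Python. This is a simplified model under stated assumptions, not Unity code: `TagManagerSketch`, `YRotationTagSketch`, and `randomize_y_rotation` are hypothetical names, and Euler angles are kept as a simple `(x, y, z)` tuple.

```python
import random


class YRotationTagSketch:
    """Marks an object as randomizable and remembers its original rotation."""

    def __init__(self, euler_angles):
        # Saved once, like the Start() method in the tag script.
        self.original_rotation = tuple(euler_angles)
        self.euler_angles = tuple(euler_angles)

    def set_y_rotation(self, y_rotation):
        # Only the y component changes; x and z keep their original values.
        x, _, z = self.original_rotation
        self.euler_angles = (x, y_rotation, z)


class TagManagerSketch:
    """Keeps track of tags so a randomizer can query them by type."""

    def __init__(self):
        self._tags = []

    def register(self, tag):
        self._tags.append(tag)

    def query(self, tag_type):
        return [tag for tag in self._tags if isinstance(tag, tag_type)]


def randomize_y_rotation(tag_manager, rng):
    """Give every tagged object a new random rotation about its y-axis."""
    for tag in tag_manager.query(YRotationTagSketch):
        tag.set_y_rotation(rng.random() * 360.0)


manager = TagManagerSketch()
cube_tag = YRotationTagSketch(euler_angles=(10.0, 0.0, 20.0))
manager.register(cube_tag)
randomize_y_rotation(manager, random.Random(0))
```

After the call, `cube_tag.euler_angles` still has `10.0` and `20.0` as its x and z components, and only the y component has been resampled.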

|||

|

|||

|

|||

#### Adding our Custom Object Rotation Randomizer |

|||

|

|||

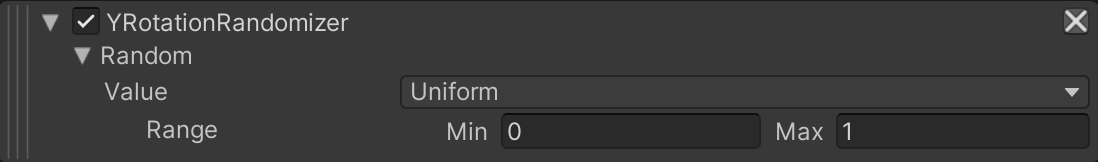

If you return to your list of Randomizers in the Inspector view of SimulationScenario, you can now add this new Randomizer. |

|||

|

|||

8. Add `YRotationRandomizer` to the list of Randomizers in SimulationScenario (see gif after the next action). You will notice that you can adjust the distribution here, as mentioned above. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_y_rotation_randomizer.png" height=100/> |

|||

</p> |

|||

|

|||

9. Select the `Cube` GameObject and in the _**Inspector**_ tab, add a `YRotationRandomizerTag` component. |

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_y_rotation_randomizer_settings.gif" height=550 width=1020/> |

|||

</p> |

|||

|

|||

|

|||

10. Run the simulation and inspect how the cube now switches between different orientations. You can pause the simulation and then use the step button (to the right of the pause button) to move the simulation one frame forward and clearly see the variation of the cube's y-rotation. You should see something similar to the following. |

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_y_rotation_randomizer.gif" height=411 width=800/> |

|||

</p> |

|||

|

|||

#### Randomizing Object Positions |

|||

|

|||

It is great that we can now rotate the cube, but we also want to move it around the table. However, not all positions on the table are valid - we also need it to be within the robot arm's reach. |

|||

|

|||

To save time, we have provided a pre-written custom randomizer to do this. |

|||

|

|||

11. Select the `Simulation Scenario` GameObject, and do the following: |

|||

* In the _**Inspector**_ tab, on the `Fixed Length Scenario` component, click `Add Randomizer` and start typing `RobotArmObjectPositionRandomizer`. |

|||

* Set the `MinRobotReachability` to `0.2` and the `MaxRobotReachability` to `0.4`. |

|||

* Under `Plane`, click on the circle and start typing `ObjectPlacementPlane` and then double click on the GameObject that appears. |

|||

* Under `base`, you need to drag and drop the base (`ur3_with_gripper/world/base_link/base`) of the robot. |

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_robot_randomizer_settings.gif" height=508 /> |

|||

</p> |

|||

|

|||

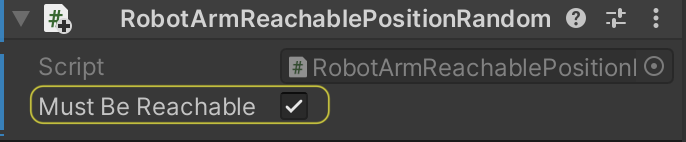

12. Now we need to add the RandomizerTag to the cube. |

|||

* Select the `Cube` GameObject and in the _**Inspector**_ tab, click on the _**Add Component**_ button. Start typing `RobotArmObjectPositionRandomizerTag` in the search bar that appears, until the `RobotArmObjectPositionRandomizerTag` script is found, with a **#** icon to the left. Then double click on it. |

|||

* Click on the arrow on the left of the `script` icon, and check the property `Must Be Reachable`. |

|||

|

|||

The `RobotArmObjectPositionRandomizerTag` component should look like the following: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_RobotArmReachablePositionRandomizerSetting.png" height=150/> |

|||

</p> |

|||

|

|||

If you press play, you should now see the cube and goal moving around the robot with the cube rotating at each frame. |

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_object_position_randomizer.gif" height=388 width=800/> |

|||

</p> |
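The provided randomizer's source is not reproduced in this tutorial, so the following Python sketch only illustrates one plausible way to satisfy a reach constraint. It assumes, purely for illustration, that "reachability" means the horizontal distance from the robot base on the table plane; the function name and parameters are hypothetical.

```python
import math
import random


def sample_reachable_position(base_xz, min_reach, max_reach, rng):
    """Sample a point on the table plane at a distance in [min_reach, max_reach] from the base.

    Assumption: reach is measured as horizontal distance from the robot base.
    """
    angle = rng.uniform(0.0, 2.0 * math.pi)
    # Sampling the squared radius uniformly spreads points evenly over the ring's area.
    radius = math.sqrt(rng.uniform(min_reach ** 2, max_reach ** 2))
    return (
        base_xz[0] + radius * math.cos(angle),
        base_xz[1] + radius * math.sin(angle),
    )


rng = random.Random(1)
base = (0.0, 0.0)
samples = [sample_reachable_position(base, 0.2, 0.4, rng) for _ in range(100)]
distances = [math.hypot(x - base[0], z - base[1]) for x, z in samples]
```

With the values from step 11 (`0.2` and `0.4`), every sampled point lies in a ring around the base, never closer than 0.2 and never farther than 0.4.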

|||

|

|||

#### Light Randomizer |

|||

|

|||

Now we will add the light Randomizer. |

|||

|

|||

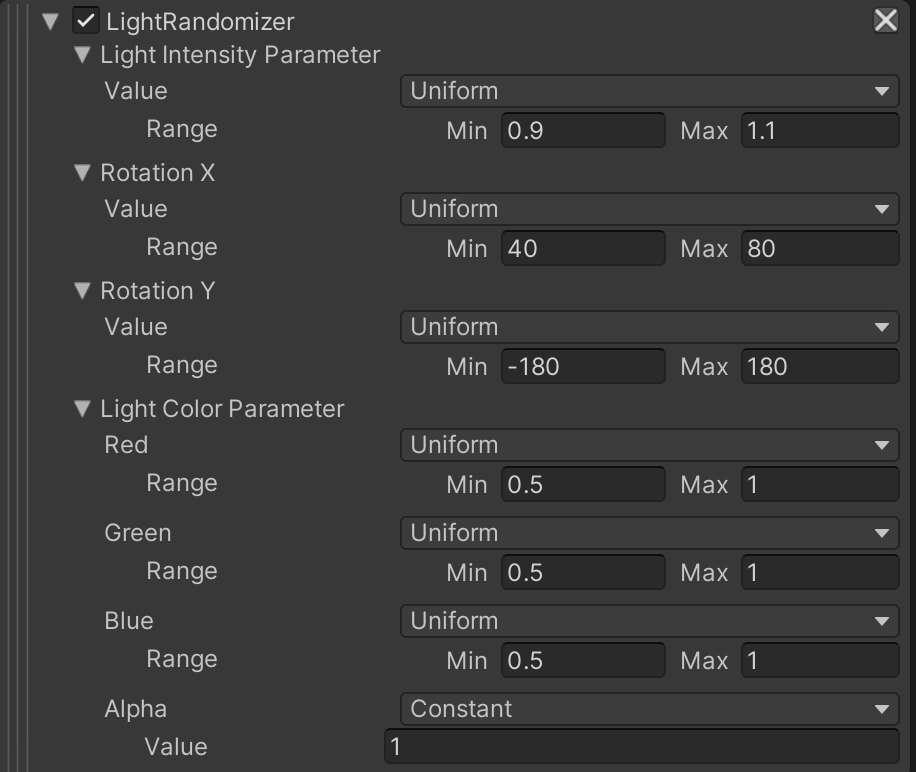

13. Select the `Simulation Scenario` GameObject and in the _**Inspector**_ tab, on the `Fixed Length Scenario` component, click on `Add Randomizer` and start typing `LightRandomizer`. |

|||

* For the `Light Intensity Parameter`, for the range parameter set the `Min` to `0.9` and the `Max` to `1.1`. |

|||

* For the range parameter of the `Rotation X`, set the `Min` to `40` and the `Max` to `80`. |

|||

* For the range parameter of the `Rotation Y`, set the `Min` to `-180` and the `Max` to `180`. |

|||

* For the range parameter of the `Red`, `Green` and `Blue` of the `color` parameter, set the `Min` to `0.5`. |

|||

|

|||

The Randomizer should now look like the following: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/2_light_randomizer_settings.png" height=500/> |

|||

</p> |

|||

|

|||

14. Now we need to add the RandomizerTag to the light. Select the `Directional Light` GameObject and in the _**Inspector**_ tab, click on the _**Add Component**_ button. Start typing `LightRandomizerTag` in the search bar that appears, until the `LightRandomizerTag` script is found, with a **#** icon to the left. Then double click on it. |

|||

|

|||

To view this script, you can click on the three dots at the end of the component and select `Edit Script`.

|||

This randomizer is a bit different from the previous ones as you can see by the line `[RequireComponent(typeof(Light))]` at line 7. This line makes it so that you can only add the `LightRandomizerTag` component to an object that already has a **Light** component attached. This way, the Randomizers that query for this tag can be confident that the found objects have a **Light** component. |

|||

|

|||

If you press play, you should see the color, direction, and intensity of the lighting change with each frame.

|||

|

|||

<p align="center"> |

|||

<img src="Gifs/2_light_randomizer.gif" height=600/> |

|||

</p> |
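As a rough illustration of what the light Randomizer does with the ranges entered in step 13, the Python sketch below draws one set of light settings uniformly from those ranges. The dictionary keys and function name are hypothetical, and the upper color bound of `1.0` is an assumption, since the step only sets the minimum.

```python
import random

# Ranges taken from step 13; the color maximum of 1.0 is an assumption.
LIGHT_RANGES = {
    "intensity": (0.9, 1.1),
    "rotation_x": (40.0, 80.0),
    "rotation_y": (-180.0, 180.0),
    "red": (0.5, 1.0),
    "green": (0.5, 1.0),
    "blue": (0.5, 1.0),
}


def sample_light_settings(rng):
    """Draw one value per light property, uniformly within its range."""
    return {
        name: rng.uniform(low, high)
        for name, (low, high) in LIGHT_RANGES.items()
    }


settings = sample_light_settings(random.Random(0))
```

Keeping the color channels at or above `0.5` means the light never becomes too dark or too saturated, so the cube stays visible in every sample.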

|||

|

|||

### Proceed to [Part 3](3_data_collection_model_training.md). |

|||

|

|||

### Go back to [Part 1](1_set_up_the_scene.md) |

|||

|

|||

# Pose Estimation Demo: Part 3 |

|||

|

|||

In [Part 1](1_set_up_the_scene.md) of the tutorial, we learned how to create our scene in the Unity editor. |

|||

|

|||

In [Part 2](2_set_up_the_data_collection_scene.md) of the tutorial, we learned: |

|||

* How to equip the camera for the data collection |

|||

* How to equip the cube for the data collection |

|||

* How to create your own randomizer |

|||

* How to add our custom randomizer |

|||

|

|||

In this part, we will be collecting a large dataset of RGB images of the scene, and the corresponding pose of the cube. We will then use this data to train a machine learning model to predict the cube's position and rotation from images taken by our camera. We will then be ready to use the trained model for our pick-and-place task in [Part 4](4_pick_and_place.md). |

|||

|

|||

Steps included in this part of the tutorial: |

|||

|

|||

**Table of Contents** |

|||

- [Collect the Training and Validation Data](#step-1) |

|||

- [Train the Deep Learning Model](#step-2) |

|||

- [Exercises for the Reader](#exercises-for-the-reader) |

|||

|

|||

--- |

|||

|

|||

## <a name="step-1">Collect the Training and Validation Data</a> |

|||

|

|||

Now it is time to collect the data: a set of images with the corresponding position and orientation of the cube relative to the camera. |

|||

|

|||

We need to collect one dataset for training and another for validation.

|||

|

|||

We have chosen a training dataset of 30,000 images and a validation dataset of 3,000 images. |

|||

|

|||

1. Select the `Simulation Scenario` GameObject and in the _**Inspector**_ tab, on the `Fixed Length Scenario` component, under `Constants`, set the `Total Iterations` to 30000.

|||

|

|||

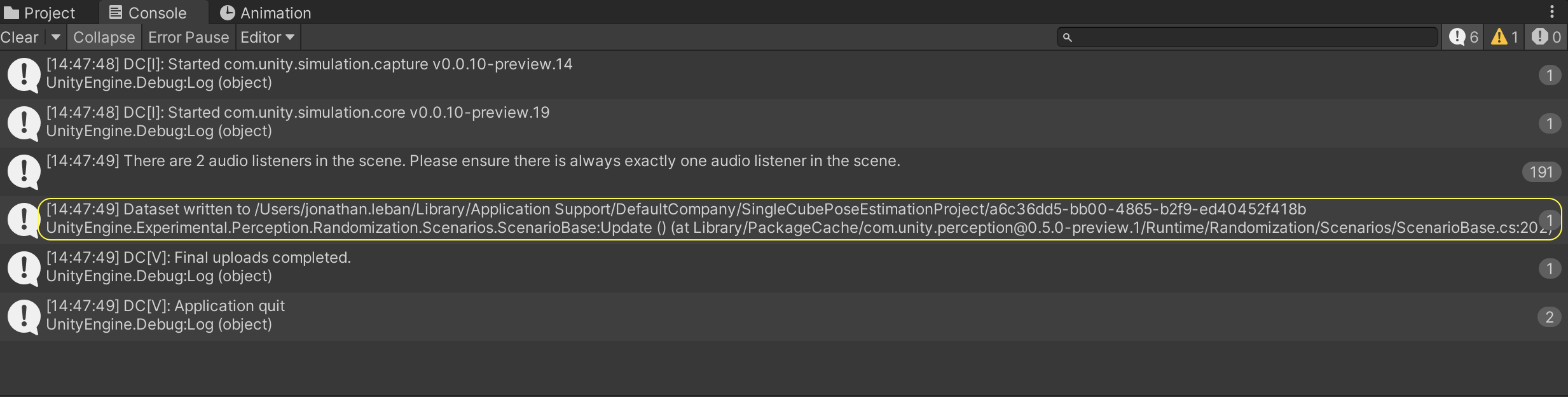

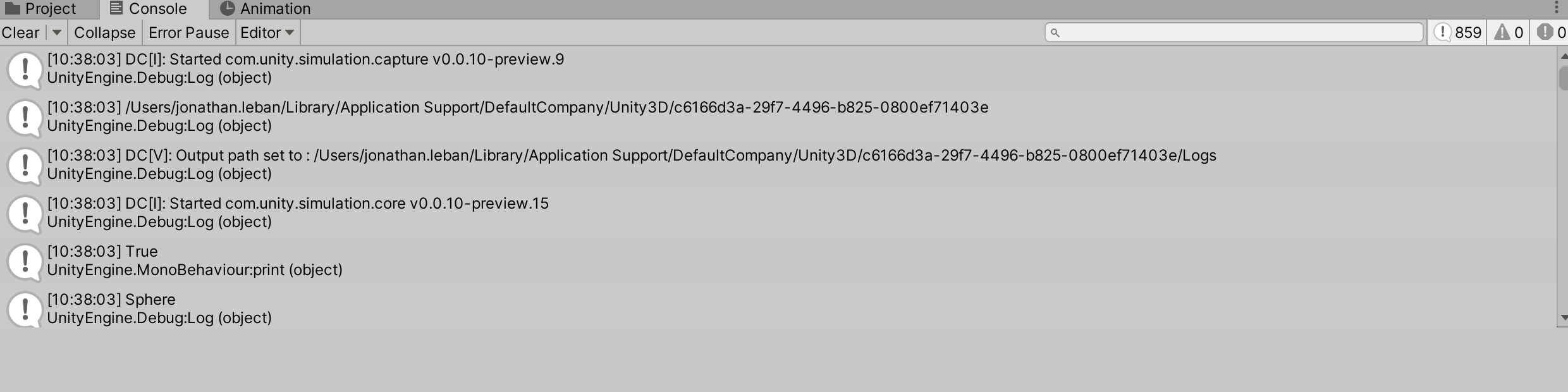

2. Press play and wait until the simulation is done. This should take a while (~10 min). Once it is done, go to the _**Console**_ tab, which is the tab to the right of the _**Project**_ tab.

|||

|

|||

You should see something similar to the following: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/3_saved_data.png"/> |

|||

</p> |

|||

|

|||

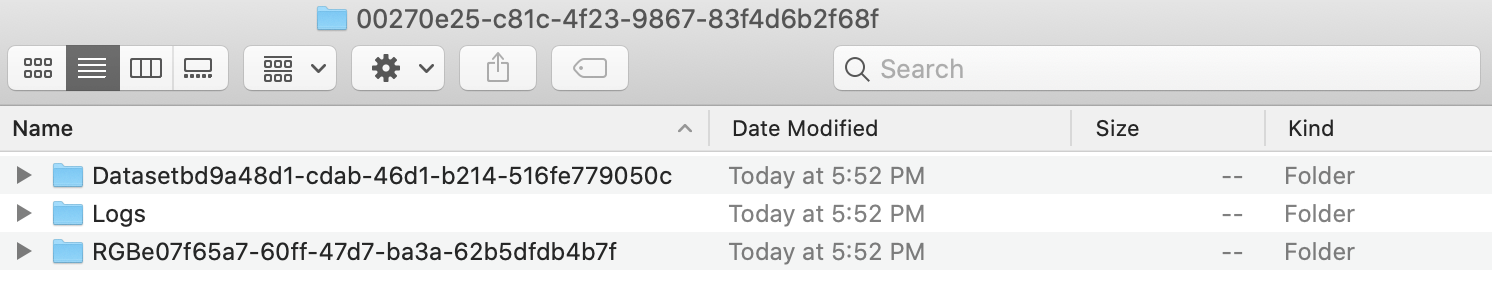

In my case the data is written to `/Users/jonathan.leban/Library/Application Support/DefaultCompany/SingleCubePoseEstimationProject` but for you the data path will be different. Go to that directory from your terminal. |

|||

|

|||

You should then see something similar to the following: |

|||

<p align="center"> |

|||

<img src="Images/3_data_logs.png"/> |

|||

</p> |

|||

|

|||

3. Change this folder's name to `UR3_single_cube_training`. |

|||

|

|||

4. Now we need to collect the validation dataset. Select the `Simulation Scenario` GameObject and in the _**Inspector**_ tab, on the `Fixed Length Scenario` component, under `Constants`, set the `Total Iterations` to 3000.

|||

|

|||

5. Press play and wait until the simulation is done. Once it is done go to the _**Console**_ tab and go to the directory where the data has been saved. |

|||

|

|||

6. Change the folder name where this latest data was saved to `UR3_single_cube_validation`. |

|||

|

|||

7. **(Optional)**: Move the `UR3_single_cube_training` and `UR3_single_cube_validation` folders to a directory of your choice. |
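Before training, it can be worth checking that each folder holds roughly the number of images you expect. The Python sketch below counts image files recursively; it assumes the captures are stored as `.png` files somewhere under each dataset folder and that one image is written per iteration, both of which you should verify against your own output. The function name is hypothetical.

```python
from pathlib import Path


def count_images(dataset_dir, suffix=".png"):
    """Count files ending in `suffix` anywhere under `dataset_dir`."""
    return sum(
        1 for path in Path(dataset_dir).rglob(f"*{suffix}") if path.is_file()
    )


def report(dataset_dir, expected):
    """Return a one-line summary comparing the found and expected image counts."""
    found = count_images(dataset_dir)
    status = "OK" if found == expected else "MISMATCH"
    return f"{dataset_dir}: found {found}, expected {expected} ({status})"
```

For example, `report("UR3_single_cube_training", 30000)` and `report("UR3_single_cube_validation", 3000)` summarize the two datasets collected above, provided the script is run from the directory that contains them.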

|||

|

|||

|

|||

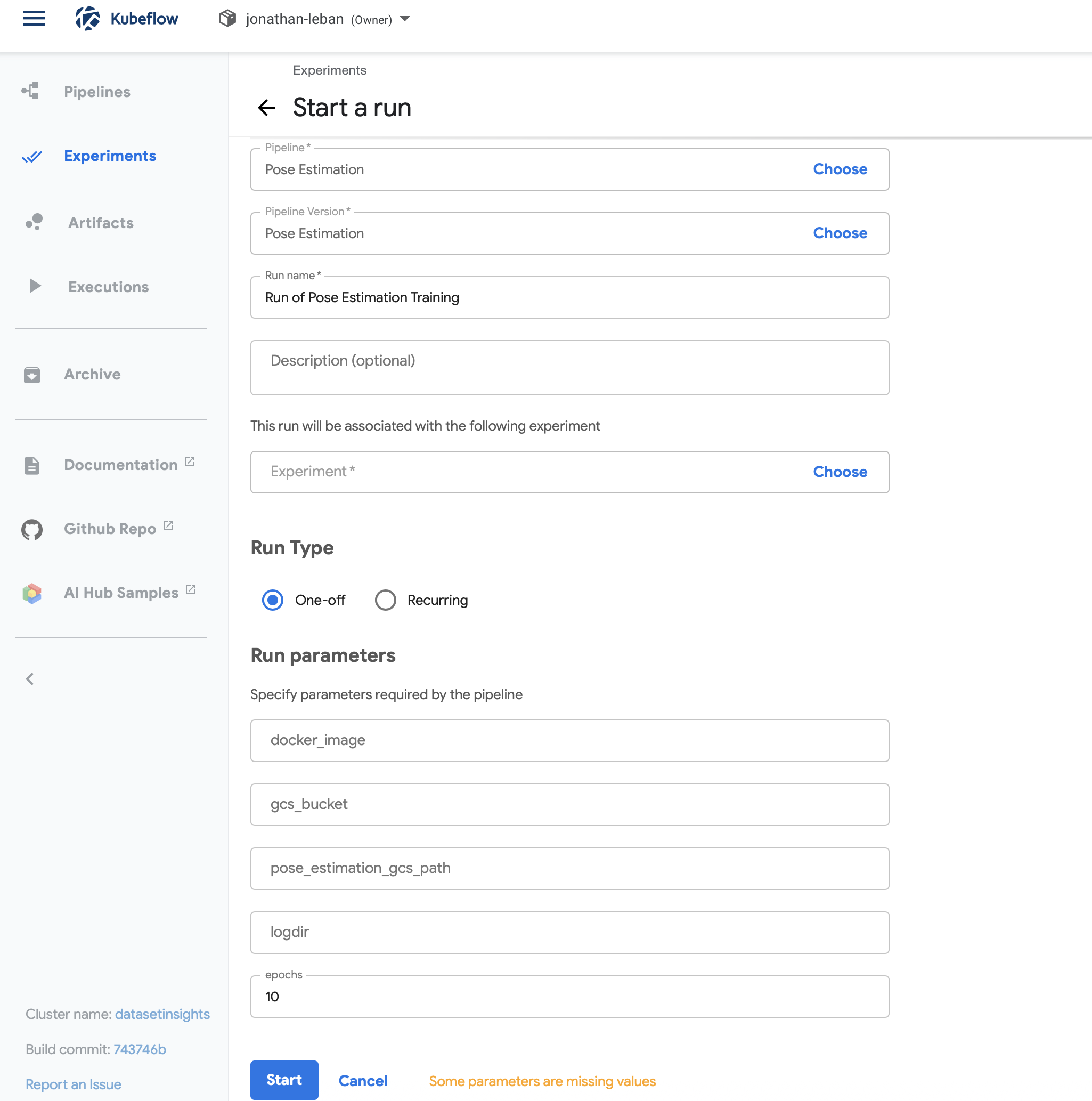

## <a name="step-2">Train the Deep Learning Model</a> |

|||

Now it's time to train our deep learning model! We've provided the model training code for you, but if you'd like to learn more about it - or make your own changes - you can dig into the details [here](../Model).

|||

|

|||

This step can take a long time if your computer doesn't have GPU support (~5 days on CPU). Even with a GPU, it can take around 10 hours. We have provided an already trained model as an alternative to waiting for training to complete. If you would like to use this provided model, you can proceed to [Part 4](4_pick_and_place.md).

|||

|

|||

1. Navigate to the `tutorials/pose_estimation/Model` directory. |

|||

|

|||

### Requirements |

|||

|

|||

We support two approaches for running the model: Docker (which can run anywhere) or locally with Conda. |

|||

|

|||

#### Option A: Using Docker |

|||

If you would like to run using Docker, you can follow the [Docker steps provided](../Model/documentation/running_on_docker.md) in the model documentation. |

|||

|

|||

|

|||

#### Option B: Using Conda |

|||

To run this project locally, you will need to install [Anaconda](https://docs.anaconda.com/anaconda/install/) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html). |

|||

|

|||

If running locally without Docker, we first need to create a conda virtual environment and install the dependencies for our machine learning model. If you only have access to CPUs, install the dependencies specified in the `environment.yml` file. If your development machine has GPU support, you can choose to use the `environment-gpu.yml` file instead. |

|||

|

|||

2. In a terminal window, enter the following command to create the environment. Replace `<env-name>` with an environment name of your choice, e.g. `pose-estimation`: |

|||

```bash
conda env create -n <env-name> -f environment.yml
```

|||

|

|||

Then, you need to activate the conda environment. |

|||

|

|||

3. Still in the same terminal window, enter the following command: |

|||

```bash
conda activate <env-name>
```

|||

|

|||

### Updating the Model Config |

|||

|

|||

At the top of the [cli.py](../Model/pose_estimation/cli.py) file in the model code, you can see the documentation for all supported commands. Since typing these in can be laborious, we use a [config.yaml](../Model/config.yaml) file to feed in all these arguments. You can still use the command line arguments if you want - they will override the config. |
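The "config file provides defaults, command line overrides them" behaviour can be sketched as follows. This is a generic illustration using `argparse`, not the logic of `cli.py`: the config is represented as a plain dictionary rather than a parsed YAML file, and the `epochs` key and `--epochs` flag are hypothetical examples, so check `cli.py` for the argument names it actually supports.

```python
import argparse


def resolve_settings(config, argv):
    """Merge config-file values with command-line overrides.

    Every config key becomes an optional flag; only flags that are
    actually passed replace the value from the config.
    """
    parser = argparse.ArgumentParser()
    for key, default in config.items():
        flag = "--" + key.replace("_", "-")
        # default=None lets us detect which flags were really given.
        parser.add_argument(flag, dest=key, type=type(default), default=None)
    args = parser.parse_args(argv)
    overrides = {k: v for k, v in vars(args).items() if v is not None}
    return {**config, **overrides}


# Hypothetical config values for illustration only.
config = {"data_root": "/path/to/data", "epochs": 10}
settings = resolve_settings(config, ["--epochs", "5"])
```

Here `settings["epochs"]` is `5` (from the command line) while `settings["data_root"]` keeps the value from the config.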

|||

|

|||

There are a few settings specific to your setup that you'll need to change. |

|||

|

|||

First, we need to specify the path to the folders where your training and validation data are saved: |

|||

|

|||

4. In the [config.yaml](../Model/config.yaml), under `system`, you need to set the argument `data_root` to the path of the directory containing your data folders. For example, since I put my data (`UR3_single_cube_training` and `UR3_single_cube_validation`) in a folder called `data` in Documents, I set the following:

|||

```yaml
data_root: /Users/jonathan.leban/Documents/data
```

|||

|

|||

Second, we need to modify the location where the model is going to be saved: |

|||

|

|||

5. In the [config.yaml](../Model/config.yaml), under `system`, you need to set the argument `log_dir_system` to the full path to the output folder where your model's results will be saved. For example, I created a new directory called `models` in my Documents, and then set the following: |

|||

```yaml
log_dir_system: /Users/jonathan.leban/Documents/models
```
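A wrong path in the config is an easy mistake to make, so a quick check before launching a long training run can save time. The Python sketch below verifies that the directory given as `data_root` contains the two dataset folders named earlier in this part; the function name is hypothetical and the check is independent of the training code.

```python
from pathlib import Path

# Folder names used earlier in this part of the tutorial.
EXPECTED_DATASETS = ("UR3_single_cube_training", "UR3_single_cube_validation")


def missing_datasets(data_root):
    """Return the expected dataset folders that are not present under `data_root`."""
    root = Path(data_root)
    return [name for name in EXPECTED_DATASETS if not (root / name).is_dir()]
```

An empty list means both folders were found; otherwise the returned names tell you which dataset still needs to be moved or renamed.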

|||

|

|||

### Training the model |

|||

Now it's time to train our deep learning model!

|||

|

|||

6. If you are not already in the `tutorials/pose_estimation/Model` directory, navigate there. |

|||

|

|||

7. Enter the following command to start training: |

|||

```bash
python -m pose_estimation.cli train
```

|||

|

|||

>Note (Optional): If you want to override certain training hyperparameters, you can do so with additional arguments on the above command. See the documentation at the top of [cli.py](../Model/pose_estimation/cli.py) for a full list of supported arguments. |

|||

|

|||

>Note: If the training process ends unexpectedly, check the [Troubleshooting Guide](troubleshooting.md) for potential solutions. |

|||

|

|||

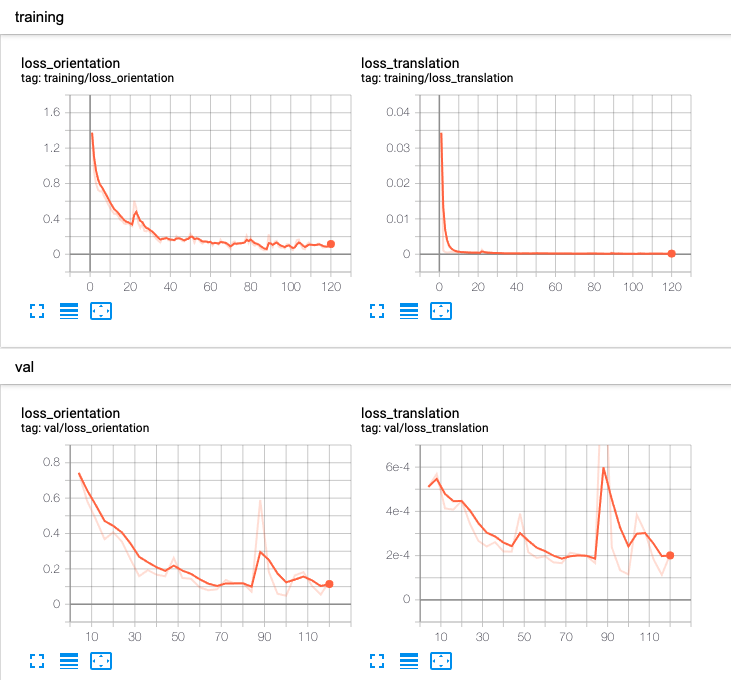

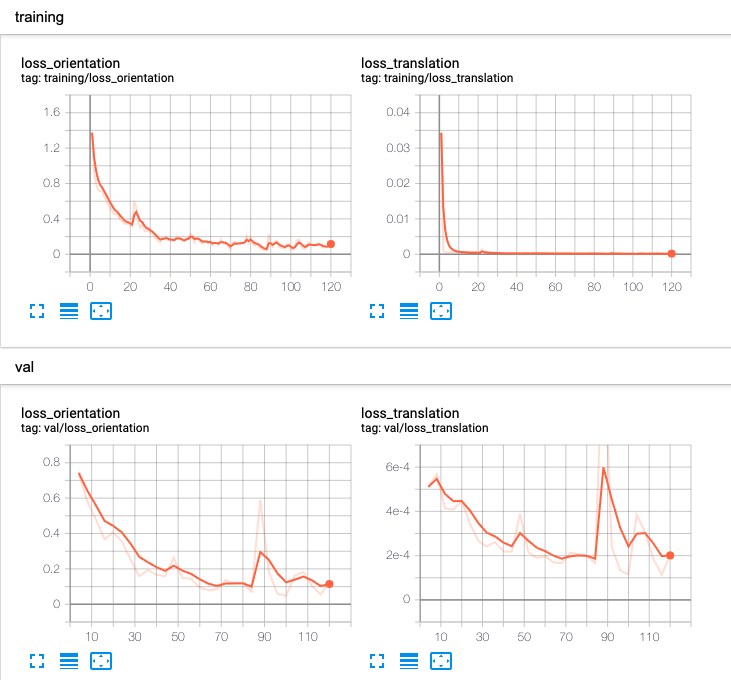

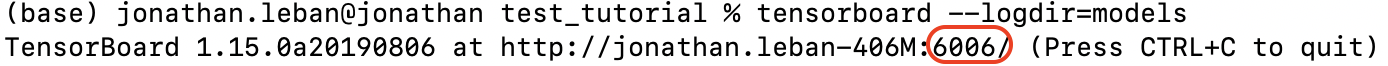

### Visualizing Training Results with Tensorboard |

|||

If you'd like to examine the results of your training run in more detail, see our guide on [viewing the Tensorboard logs](tensorboard.md). |

|||

|

|||

### Evaluating the Model |

|||

Once training has completed, we can also run our model on our validation dataset to measure its performance on data it has never seen before. |

|||

|

|||

However, first we need to specify a few settings in our config file. |

|||

|

|||

8. In [config.yaml](../Model/config.yaml), under `checkpoint`, you need to set the argument `log_dir_checkpoint` to the path where you have saved your newly trained model. |

|||

|

|||

9. If you are not already in the `tutorials/pose_estimation/Model` directory, navigate there. |

|||

|

|||

10. To start the evaluation run, enter the following command: |

|||

```bash
python -m pose_estimation.cli evaluate
```

|||

|

|||

>Note (Optional): To override additional settings on your evaluation run, you can tag on additional arguments to the command above. See the documentation in [cli.py](../Model/pose_estimation/cli.py) for more details. |

|||

|

|||

|

|||

### Exercises for the Reader |

|||

**Optional**: If you would like to learn more about randomizers and apply domain randomization to this scene more thoroughly, check out our further exercises for the reader [here](5_more_randomizers.md). |

|||

|

|||

### Proceed to [Part 4](4_pick_and_place.md). |

|||

|

|||


|||

|

|||

### Go back to [Part 2](2_set_up_the_data_collection_scene.md) |

|||

|

|||

# Pose Estimation Demo: Part 4 |

|||

|

|||

|

|||

In [Part 1](1_set_up_the_scene.md) of the tutorial, we learned how to create our scene in the Unity editor. In [Part 2](2_set_up_the_data_collection_scene.md), we set up the scene for data collection. |

|||

|

|||

In [Part 3](3_data_collection_model_training.md) we have learned: |

|||

* How to collect the data |

|||

* How to train the deep learning model |

|||

|

|||

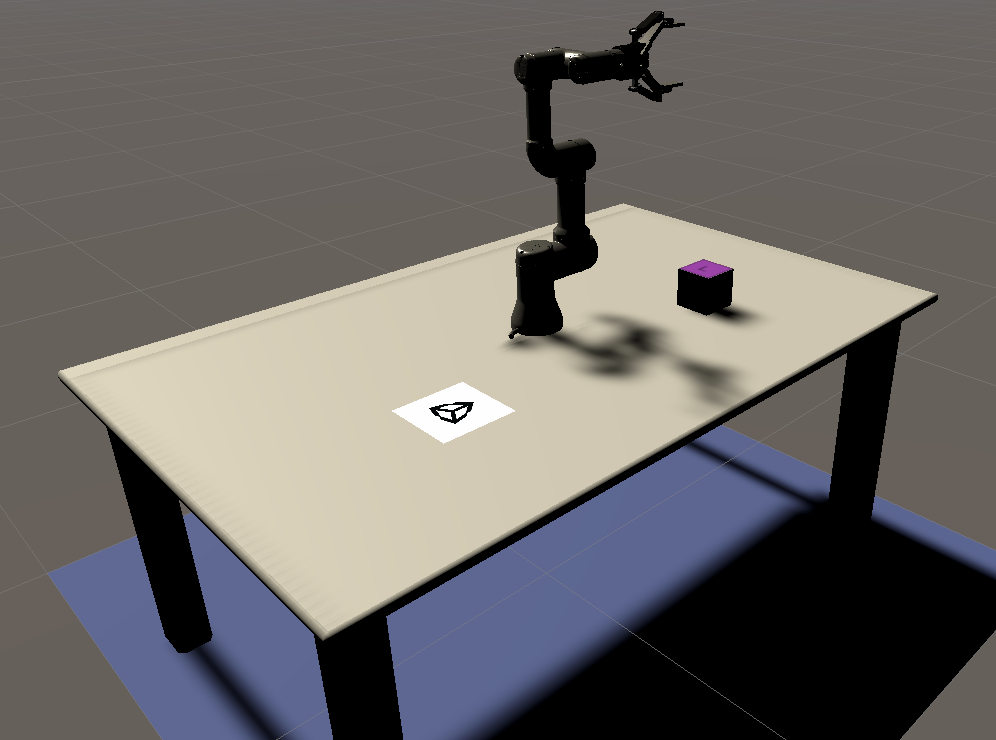

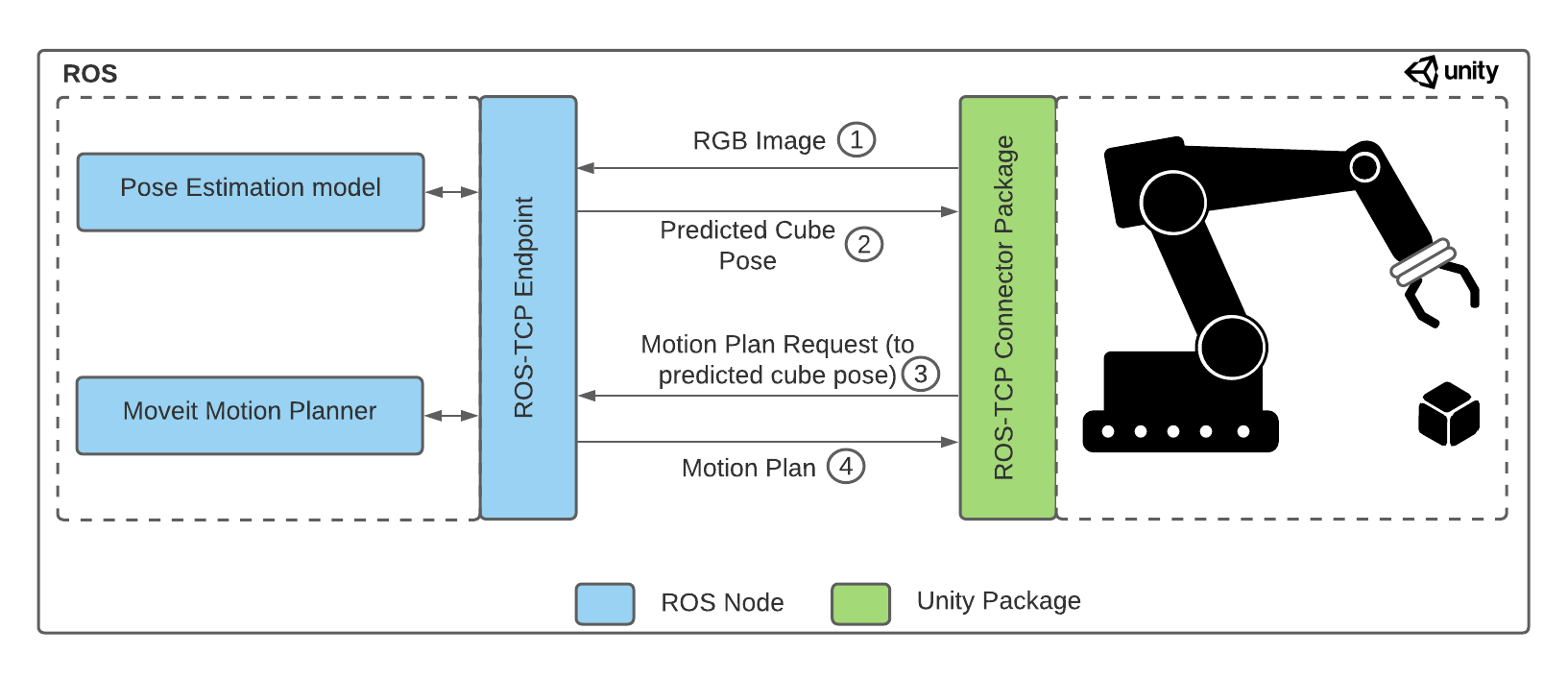

In this part, we will use our trained deep learning model to predict the pose of the cube, and pick it up with our robot arm. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/4_Pose_Estimation_ROS.png"/> |

|||

</p> |

|||

|

|||

**Table of Contents** |

|||

- [Setup](#setup) |

|||

- [Adding the Pose Estimation Model](#step-2) |

|||

- [Set up the ROS side](#step-3) |

|||

- [Set up the Unity side](#step-4) |

|||

- [Putting it together](#step-5) |

|||

|

|||

--- |

|||

|

|||

### <a name="setup">Set up</a> |

|||

If you have correctly followed parts 1 and 2, whether or not you choose to use the Unity project given by us or start it from scratch, you should have cloned the repository. |

|||

|

|||

|

|||

>Note: If you cloned the project and forgot to use `--recurse-submodules`, or if any submodule in this directory doesn't have content (e.g. moveit_msgs or ros_tcp_endpoint), you can fetch the Git submodules by running the following command, which first changes into the `pose_estimation` folder.
>```bash
>cd /PATH/TO/Unity-Robotics-Hub/tutorials/pose_estimation &&
>git submodule update --init --recursive
>```

|||

|

|||

Three package dependencies for this project, [Universal Robot](https://github.com/ros-industrial/universal_robot) for the UR3 arm configurations, [Robotiq](https://github.com/ros-industrial/robotiq) for the gripper, and [MoveIt Msgs](https://github.com/ros-planning/moveit_msgs) are large repositories. A bash script has been provided to run a sparse clone to only copy the files required for this tutorial, as well as the [ROS TCP Endpoint](https://github.com/Unity-Technologies/ROS-TCP-Endpoint/). |

|||

|

|||

1. Open a terminal and go to the directory of the `pose_estimation` folder. Then run: |

|||

```bash
./submodule.sh
```

|||

|

|||

In your `pose_estimation` folder, you should have a `ROS` folder. Inside that folder you should have a `src` folder and inside that one 5 folders: `moveit_msgs`, `robotiq`, `ros_tcp_endpoint`, `universal_robot` and `ur3_moveit`. |

|||

|

|||

### <a name="step-2">Adding the Pose Estimation Model</a> |

|||

|

|||

Here you have two options for the model: |

|||

|

|||

#### Option A: Use Our Pre-trained Model |

|||

|

|||

1. To save time, you may use the model we have trained. Download this [UR3_single_cube_model.tar](https://github.com/Unity-Technologies/Unity-Robotics-Hub/releases/download/Pose-Estimation/UR3_single_cube_model.tar) file, which contains the pre-trained model weights. |

|||

|

|||

#### Option B: Use Your Own Model |

|||

|

|||

2. You can also use the model you have trained in [Part 3](3_data_collection_model_training.md). However, be sure to rename your model `UR3_single_cube_model.tar` as the script that will call the model is expecting this name. |

|||

|

|||

#### Moving the Model to the ROS Folder |

|||

|

|||

3. Go inside the `ROS/src/ur3_moveit` folder and create a folder called `models`. Then copy your model file (.tar) into it.

|||

|

|||

### <a name="step-3">Set up the ROS side</a> |

|||

|

|||

>Note: This project has been developed with Python 3 and ROS Noetic. |

|||

|

|||

The provided ROS files require the following packages to be installed. The following section steps through configuring a Docker container as the ROS workspace for this tutorial. If you would like to manually set up your own ROS workspace with the provided files instead, follow the steps in [Part 0: ROS Setup](0_ros_setup.md) to do so. |

|||

|

|||

Building this Docker container will install the necessary packages for this tutorial. |

|||

|

|||

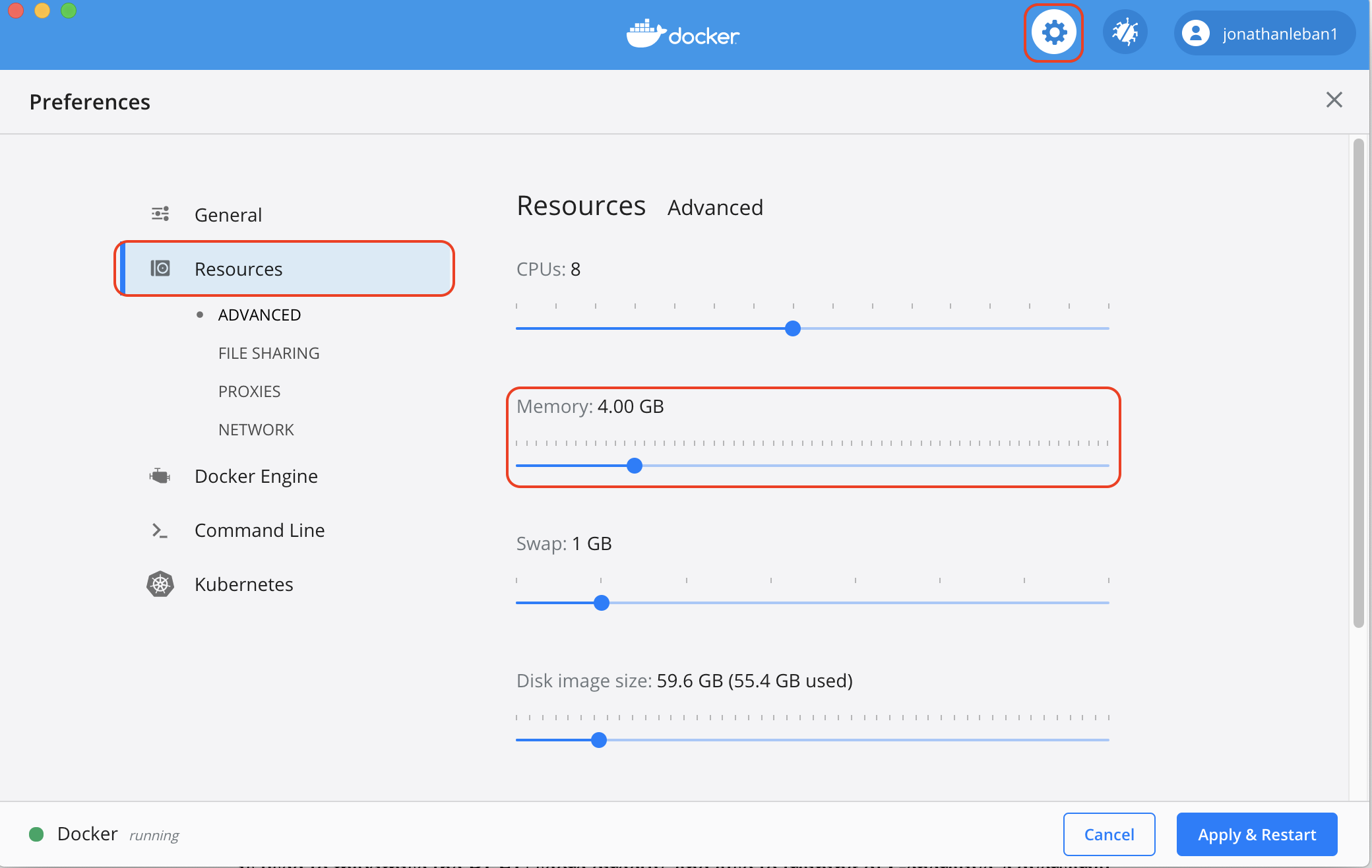

1. Install the [Docker Engine](https://docs.docker.com/engine/install/) if not already installed. Start the Docker daemon. To check if the Docker daemon is running, when you open you Docker application you should see something similar to the following (green dot on the bottom left corner with the word running at the foot of Docker): |

|||

|

|||

<p align="center"> |

|||

<img src="Images/4_docker_daemon.png" height=500/> |

|||

</p> |

|||

|

|||

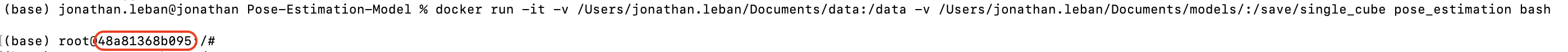

2. In the terminal, ensure the current location is at the root of the `pose_estimation` directory. Build the provided ROS Docker image as follows: |

|||

|

|||

```bash
docker build -t unity-robotics:pose-estimation -f docker/Dockerfile .
```

|||

|

|||

>Note: The provided Dockerfile uses the [ROS Noetic base Image](https://hub.docker.com/_/ros/). Building the image will install the necessary packages as well as copy the [provided ROS packages and submodules](../ROS/) to the container, predownload and cache the [VGG16 model](https://pytorch.org/docs/stable/torchvision/models.html#torchvision.models.vgg16), and build the catkin workspace. |

|||

|

|||

|

|||

3. Start the newly built Docker container: |

|||

|

|||

```bash
docker run -it --rm -p 10000:10000 -p 5005:5005 unity-robotics:pose-estimation /bin/bash
```

|||

|

|||

When the build in step 2 completes, it will print: `Successfully tagged unity-robotics:pose-estimation`. After the `docker run` command in step 3, the console should open into a bash shell at the ROS workspace root, e.g. `root@8d88ed579657:/catkin_ws#`.

|||

|

|||

>Note: If you encounter issues with Docker, check the [Troubleshooting Guide](troubleshooting.md) for potential solutions. |

|||

|

|||

4. Source your ROS workspace: |

|||

|

|||

```bash
source devel/setup.bash
```

|||

|

|||

The ROS workspace is now ready to accept commands! |

|||

|

|||

>Note: The Docker-related files (Dockerfile, bash scripts for setup) are located in `PATH-TO-pose_estimation/docker`. |

|||

|

|||

--- |

|||

|

|||

### <a name="step-4">Set up the Unity side</a> |

|||

|

|||

If your Pose Estimation Tutorial Unity project is not already open, select and open it from the Unity Hub. |

|||

|

|||

We will work on the same scene that was created in the [Part 1](1_set_up_the_scene.md) and [Part 2](2_set_up_the_data_collection_scene.md), so if you have not already, complete Parts 1 and 2 to set up the Unity project. |

|||

|

|||

#### Connecting with ROS |

|||

|

|||

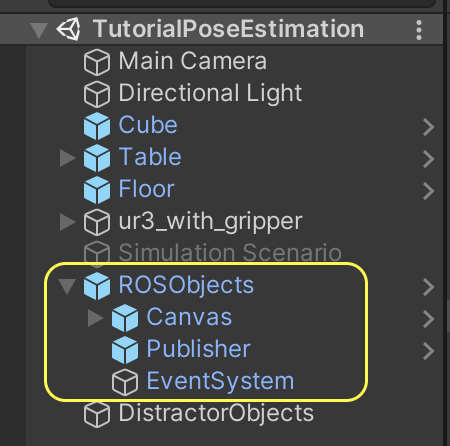

Prefabs have been provided for the UI elements and trajectory planner for convenience. These are grouped under the parent `ROSObjects` tag. |

|||

|

|||

1. In the Project tab, go to `Assets > TutorialAssets > Prefabs > Part4` and drag and drop the `ROSObjects` prefab inside the _**Hierarchy**_ panel. |

|||

|

|||

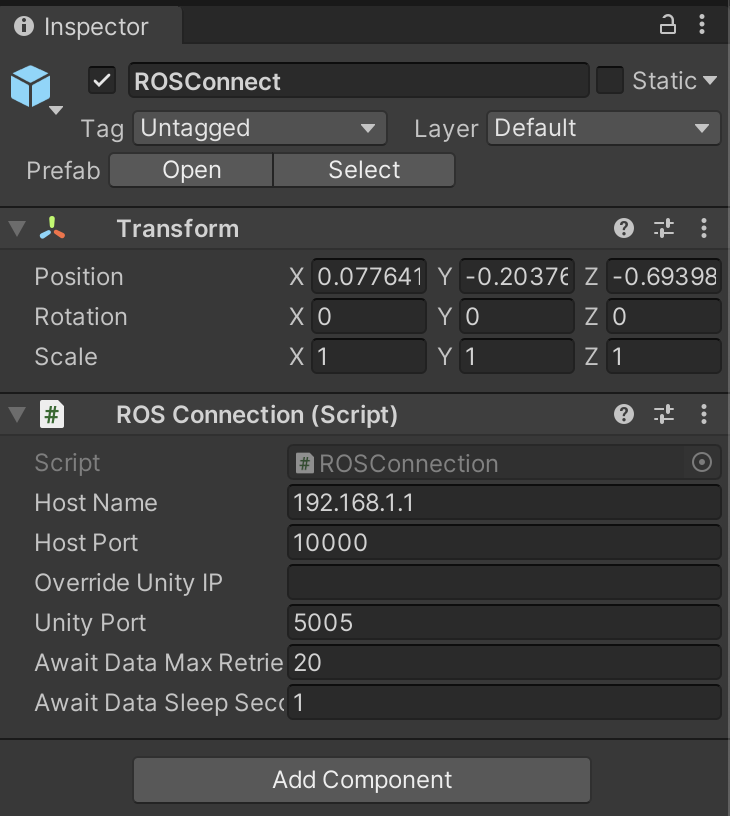

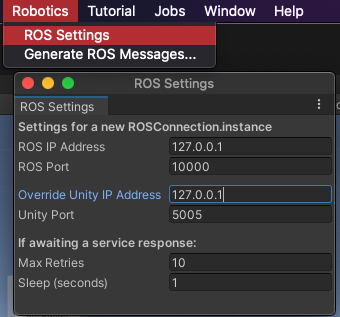

2. The ROS TCP connection needs to be created. In the top menu bar in the Unity Editor, select `Robotics -> ROS Settings`. Find the IP address of your ROS machine. |

|||

* If you are going to run ROS services with the Docker container introduced [above](#step-3), fill `ROS IP Address` and `Override Unity IP` with the loopback IP address `127.0.0.1`. If you will be running ROS services via a non-Dockerized setup, you will most likely want to have the `Override Unity IP` field blank, which will let the Unity IP be determined automatically. |

|||

|

|||

* If you are **not** going to run ROS services with the Docker container, e.g. a dedicated Linux machine or VM, open a terminal window in this ROS workspace. Set the ROS IP Address field as the output of the following command: |

|||

|

|||

```bash
hostname -I
```

|||

|

|||

3. Ensure that the ROS Port is set to `10000` and the Unity Port is set to `5005`. You can leave the Show HUD box unchecked. This HUD can be helpful for debugging message and service requests with ROS. You may turn this on if you encounter connection issues. |

|||

|

|||

<p align="center"> |

|||

<img src="Images/4_ros_settings.png" width="500"/> |

|||

</p> |
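If Unity cannot reach ROS, a quick way to narrow the problem down is to test whether anything is listening on the ROS port at all. The Python sketch below attempts a plain TCP connection; it assumes the Docker setup from above (`127.0.0.1`, port `10000`) for the example call, and the function name is hypothetical. A successful connection only shows that the port is open, not that the ROS endpoint behind it is working correctly.

```python
import socket


def can_connect(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    reachable = can_connect("127.0.0.1", 10000)
    print("ROS port reachable" if reachable else "Nothing listening on port 10000")
```

If this reports that nothing is listening, check that the Docker container is running with `-p 10000:10000` and that the ROS endpoint has been started inside it.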

|||

|

|||

Opening the ROS Settings has created a ROSConnectionPrefab in `Assets/Resources` with the user-input settings. When the static `ROSConnection.instance` is referenced in a script, if a `ROSConnection` instance is not already present, the prefab will be instantiated in the Unity scene, and the connection will begin. |

|||

|

|||

>Note: While using the ROS Settings menu is the suggested workflow, you may still manually create a GameObject with an attached ROSConnection component. |

|||

|

|||

The provided script `Assets/TutorialAssets/Scripts/TrajectoryPlanner.cs` contains the logic to invoke the motion planning services, as well as the logic to control the gripper and end effector tool. This has been adapted from the [Pick-and-Place tutorial](https://github.com/Unity-Technologies/Unity-Robotics-Hub/blob/main/tutorials/pick_and_place/3_pick_and_place.md). The component has been added to the ROSObjects/Publisher object. |

|||

|

|||

In this TrajectoryPlanner script, there are two functions that are defined, but not yet implemented. `InvokePoseEstimationService()` and `PoseEstimationCallback()` will create a [ROS Service](http://wiki.ros.org/Services) Request and handle the ROS Service Response, respectively. The following steps will provide the code and explanations for these functions.

|||

|

|||

4. Open the `TrajectoryPlanner.cs` script in an editor. Find the empty `InvokePoseEstimationService(byte[] imageData)` function definition, starting at line 165. Replace the empty function with the following: |

|||

|

|||

```csharp
private void InvokePoseEstimationService(byte[] imageData)
{
    uint imageHeight = (uint)renderTexture.height;
    uint imageWidth = (uint)renderTexture.width;

    RosMessageTypes.Sensor.Image rosImage = new RosMessageTypes.Sensor.Image(new RosMessageTypes.Std.Header(), imageWidth, imageHeight, "RGBA", isBigEndian, step, imageData);
    PoseEstimationServiceRequest poseServiceRequest = new PoseEstimationServiceRequest(rosImage);
    ros.SendServiceMessage<PoseEstimationServiceResponse>("pose_estimation_srv", poseServiceRequest, PoseEstimationCallback);
}
```

|||

|

|||

The `InvokePoseEstimationService` function will be called upon pressing the `Pose Estimation` button in the Unity Game view. It takes a screenshot of the scene as an input, and instantiates a new RGBA [sensor_msgs/Image](http://docs.ros.org/en/melodic/api/sensor_msgs/html/msg/Image.html) with the defined dimensions. Finally, this instantiates and sends a new Pose Estimation service request to ROS. |
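In a `sensor_msgs/Image` message, `step` is the length of one image row in bytes, so the data buffer must hold `step * height` bytes. The Python sketch below shows that arithmetic for an 8-bit RGBA image (4 bytes per pixel). It is an illustration of the message layout only; how `step` is computed in the tutorial's script is not shown in this section, and the function names are hypothetical.

```python
def rgba_row_step(width, bytes_per_channel=1):
    """Bytes per image row for an RGBA image (4 channels per pixel)."""
    return width * 4 * bytes_per_channel


def validate_image_buffer(width, height, data):
    """Check that `data` has exactly one full RGBA row per image row; return the step."""
    step = rgba_row_step(width)
    expected = step * height
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(data)}")
    return step


# A tiny 2x3 image: 2 pixels per row * 4 bytes = 8 bytes per row, 24 bytes in total.
step = validate_image_buffer(width=2, height=3, data=bytes(24))
```

A mismatch between the buffer length and `step * height` is a common reason for an image to arrive garbled on the receiving side.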

|||

|

|||

>Note: The C# scripts for the necessary ROS msg and srv files in this tutorial have been generated via the [ROS-TCP-Connector](https://github.com/Unity-Technologies/ROS-TCP-Connector) and provided in the project's `Assets/TutorialAssets/RosMessages/` directory. |

|||

|

|||

Next, the function that is called to manage the Pose Estimation service response needs to be implemented. |

|||

|

|||

5. Still in the TrajectoryPlanner script, find the empty `PoseEstimationCallback(PoseEstimationServiceResponse response)` function definition. Replace the empty function with the following: |

|||

|

|||

```csharp |

|||

void PoseEstimationCallback(PoseEstimationServiceResponse response) |

|||

{ |

|||

if (response != null) |

|||

{ |

|||

// The position output by the model is the position of the cube relative to the camera so we need to extract its global position |

|||

var estimatedPosition = Camera.main.transform.TransformPoint(response.estimated_pose.position.From<RUF>()); |

|||

var estimatedRotation = Camera.main.transform.rotation * response.estimated_pose.orientation.From<RUF>(); |

|||

|

|||

PublishJoints(estimatedPosition, estimatedRotation); |

|||

|

|||

EstimatedPos.text = estimatedPosition.ToString(); |

|||

EstimatedRot.text = estimatedRotation.eulerAngles.ToString(); |

|||

} |

|||

InitializeButton.interactable = true; |

|||

RandomizeButton.interactable = true; |

|||

} |

|||

``` |

|||

|

|||

This callback runs automatically when the Pose Estimation service response arrives. It converts the incoming pose into UnityEngine types, transforms it from the camera's frame into world space, and updates the UI elements accordingly. The estimated position and rotation are then sent to `PublishJoints`, which sends a formatted request to the MoveIt trajectory planning service. |
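The camera-to-world step in the callback can be sketched outside Unity. The Python example below is a minimal illustration, assuming the camera has unit scale (Unity's `TransformPoint` also applies scale, which is ignored here) and that quaternions are stored as `(x, y, z, w)` tuples. The function names are hypothetical and are not part of Unity or the ROS-TCP-Connector.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two (x, y, z, w) quaternions: apply b, then a."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )


def cross(u, v):
    """Cross product of two 3-vectors."""
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )


def rotate(q, v):
    """Rotate vector v by unit quaternion q = (x, y, z, w)."""
    u, w = q[:3], q[3]
    uv = cross(u, v)
    uuv = cross(u, uv)
    return tuple(v[i] + 2.0 * (w * uv[i] + uuv[i]) for i in range(3))


def camera_to_world(cam_pos, cam_rot, local_pos, local_rot):
    """Convert a camera-relative pose to a world pose (unit scale assumed)."""
    rotated = rotate(cam_rot, local_pos)
    world_pos = tuple(cam_pos[i] + rotated[i] for i in range(3))
    world_rot = quat_multiply(cam_rot, local_rot)
    return world_pos, world_rot


if __name__ == "__main__":
    # Camera at (1, 2, 3), turned 90 degrees about the Y axis.
    half = math.radians(90) / 2
    cam_rot = (0.0, math.sin(half), 0.0, math.cos(half))
    identity = (0.0, 0.0, 0.0, 1.0)
    pos, rot = camera_to_world((1.0, 2.0, 3.0), cam_rot, (0.0, 0.0, 1.0), identity)
    print(pos, rot)
```

In this example a point one unit in front of the camera ends up one unit along the world X axis from the camera position. This mirrors what `TransformPoint` and the quaternion product do in the callback under the unit-scale assumption.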

|||

|

|||

>Note: The incoming position and rotation are converted `From<RUF>`, i.e. Unity's coordinate space, in order to cleanly convert from a `geometry_msgs/Point` and `geometry_msgs/Quaternion` to `UnityEngine.Vector3` and `UnityEngine.Quaternion`, respectively. This is equivalent to creating a `new Vector3(response.estimated_pose.position.x, response.estimated_pose.position.y, response.estimated_pose.position.z)`, and so on. This functionality is provided via the [ROSGeometry](https://github.com/Unity-Technologies/ROS-TCP-Connector/blob/dev/ROSGeometry.md) component of the ROS-TCP-Connector package. |

|||

|

|||

>Note: The `Randomize Cube` button calls the `RandomizeCube()` method. This randomizes the position and orientation of the cube, the position of the goal, and the color, intensity, and position of the light. It does this by running the Randomizers defined in the `Fixed Length Scenario` of the `Simulation Scenario` GameObject. If you want to learn more about how we modified the Randomizers so that they could be called at inference time, check out the [InferenceRandomizer.cs](../PoseEstimationDemoProject/Assets/TutorialAssets/Scripts/InferenceRandomizer.cs) script. |

|||

|

|||

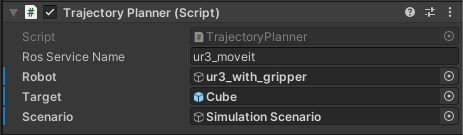

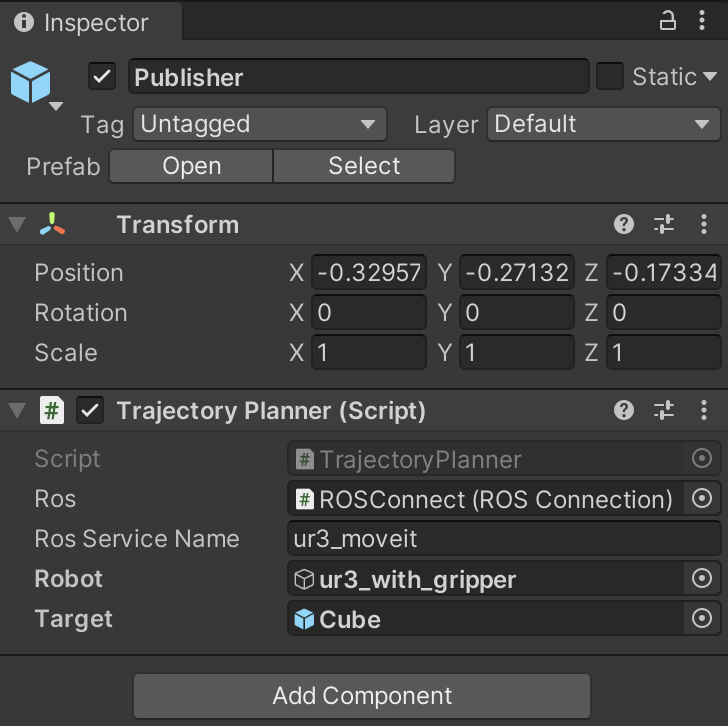

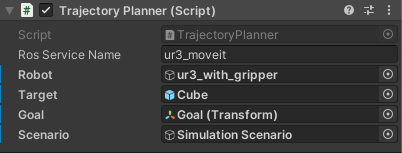

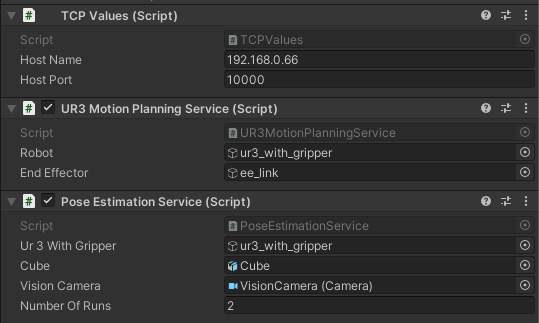

Note that the TrajectoryPlanner component exposes its member variables in the _**Inspector**_ window, and these need to be assigned. |

|||

|

|||

6. Return to Unity. Select the `ROSObjects/Publisher` GameObject. Assign the `ur3_with_gripper` GameObject to the `Robot` field. Drag and drop the `Cube` GameObject from the _**Hierarchy**_ onto the `Target` Inspector field. Drag and drop the `Goal` to the `Goal` field. Finally, assign the `Simulation Scenario` object to the `Scenario` field. You should see the following: |

|||

|

|||

<p align="center"> |

|||

<img src="Images/4_trajectory_field.png" width="500"/> |

|||

</p> |

|||

|

|||

#### Switching to Inference Mode |

|||

|

|||

7. On the `Simulation Scenario` GameObject, uncheck the `Fixed Length Scenario` component to disable it, as we are no longer in the Data Collection part. If you want to collect new data in the future, you can re-enable the `Fixed Length Scenario` component and uncheck the `ROSObjects` GameObject to disable it. |

|||

|

|||

8. On the `Main Camera` GameObject, uncheck the `Perception Camera` script component, since we do not need it anymore. |

|||

|

|||

Also note that the UI elements have been provided in `ROSObjects/Canvas`, including the Event System that Unity adds by default. In `ROSObjects/Canvas/ButtonPanel`, the OnClick callbacks have been pre-assigned in the prefab. These buttons set the robot to its upright default position, randomize the cube position and rotation, randomize the target, and call the Pose Estimation service. |

|||

|

|||

|

|||

### <a name="step-5">Putting it together</a> |

|||

|

|||

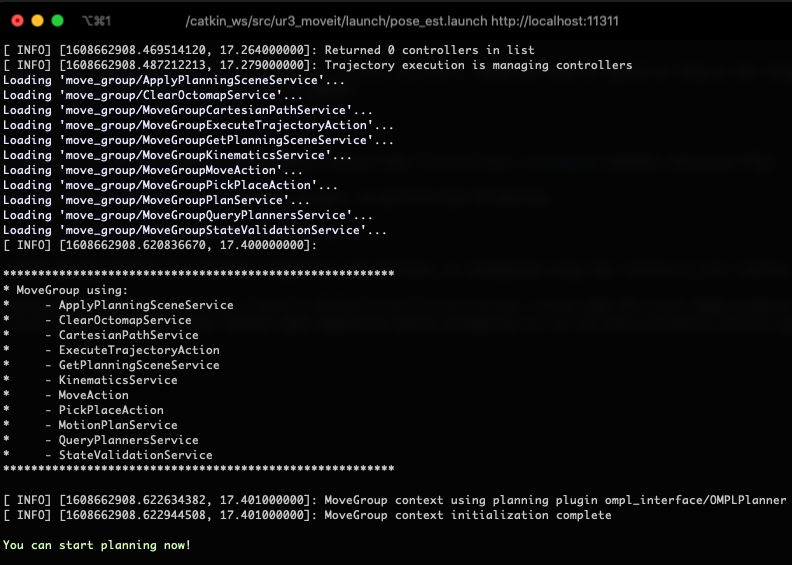

Next, run the following `roslaunch` command to start roscore, set the ROS parameters, start the server endpoint, start the Mover Service and Pose Estimation nodes, and launch MoveIt. |

|||

|

|||

1. In the terminal window of your ROS workspace opened in [Set up the ROS side](#step-3), run the provided launch file: |

|||

|

|||

```bash |

|||

roslaunch ur3_moveit pose_est.launch |

|||

``` |

|||

|

|||

--- |

|||

|

|||

This launch file also loads all relevant files and starts ROS nodes required for trajectory planning for the UR3 robot (`demo.launch`). The launch files for this project are available in the package's launch directory, i.e. `src/ur3_moveit/launch/`. |

|||

|

|||

This launch will print various messages to the console, including the set parameters and the nodes launched. The final message should confirm `You can start planning now!`. |

|||

|

|||

>Note: The launch file may throw errors regarding `[controller_spawner-5] process has died`. These are safe to ignore as long as the final message is `You can start planning now!`. This confirmation may take up to a minute to appear. |

|||

|

|||

<p align="center"><img src="Images/4_terminal.png" width="600"/></p> |

|||

|

|||

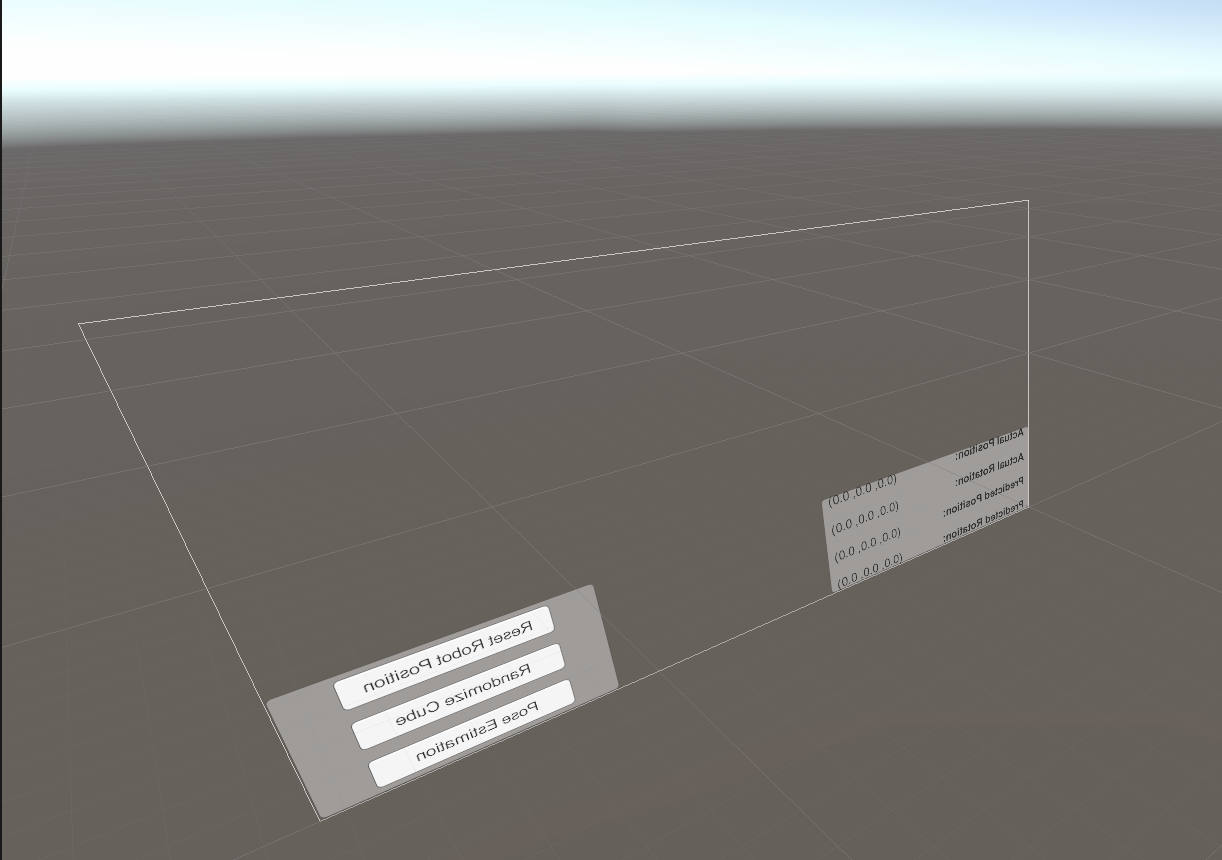

2. Return to Unity, and press Play. |

|||

|

|||