当前提交

9e450b65

共有 13 个文件被更改,包括 3646 次插入 和 7 次删除

-

2tutorials/pick_and_place/3_pick_and_place.md

-

13tutorials/pick_and_place/README.md

-

1tutorials/pick_and_place/ROS/src/niryo_moveit/CMakeLists.txt

-

8tutorials/pick_and_place/ROS/src/niryo_moveit/scripts/server_endpoint.py

-

347tutorials/pick_and_place/4_pick_and_place.md

-

5tutorials/pick_and_place/ROS/src/niryo_moveit/launch/part_4.launch

-

185tutorials/pick_and_place/ROS/src/niryo_moveit/scripts/sim_real_pnp.py

-

206tutorials/pick_and_place/Scripts/RealSimPickAndPlace.cs

-

1001tutorials/pick_and_place/img/4_button.png

-

701tutorials/pick_and_place/img/4_new_flow.png

-

183tutorials/pick_and_place/img/4_old_flow.png

-

1001tutorials/pick_and_place/img/4_script.png

|

|||

# Pick-and-Place Tutorial: Part 4 |

|||

|

|||

This part is going to be a little different than the previous tutorials in that it will utilize a real Niryo One robot. Although we assume you have completed the previous three parts ([Part 1](1_urdf.md), [Part 2](2_ros_tcp.md), [Part 3](3_pick_and_place.md)), we do not assume that you have access to a Niryo One outside of simulation. As such this tutorial should mostly be used as a reference for how to simulate the behaviour of a robot operating in real life. |

|||

|

|||

**Table of Contents** |

|||

|

|||

- [Niryo One Information](#niryo-one-information) |

|||

- [RobotMoveGoal Parameters](#robotmovegoal-parameters) |

|||

- [Differences From Part 3](#differences-from-part-3) |

|||

- [The ROS Side](#the-ros-side) |

|||

- [The Unity Side](#the-unity-side) |

|||

- [Key Differences](#key-differences) |

|||

- [Setting Up The Unity Scene](#setting-up-the-unity-scene) |

|||

- [Setting Up Niryo One](#setting-up-niryo-one) |

|||

- [Update Niryo One URDFs](#update-niryo-one-urdfs) |

|||

- [Add niryo_moveit Package](#add-niryo_moveit-package) |

|||

- [Execution](#execution) |

|||

|

|||

|

|||

# Niryo One Information |

|||

|

|||

The best source of information for the Niryo One is the [User Manual](https://niryo.com/docs/niryo-one/user-manual/complete-user-manual/). It contains a lot of general information about the Niryo One, such as how to connect it to a network, how to log in to it, and how to use the Niryo One Studio desktop application. |

|||

|

|||

> NOTE: The Niryo One will need to be connected to the same network as the machine running the Unity Editor. |

|||

|

|||

For this tutorial we will be using the full [Niryo One ROS stack](https://niryo.com/docs/niryo-one/developer-tutorials/get-started-with-the-niryo-one-ros-stack/) running on the Niryo One robot. |

|||

|

|||

From the Niryo One ROS documentation: |

|||

> The commander package exposes an action server named “niryo_one/commander/robot_action”, with a “RobotMoveCommand” message. This is probably what you’re looking for if you want to send direct command to the robot using ROS. |

|||

|

|||

Using the `RobotMoveCommand` action server we can write an [action client](http://wiki.ros.org/actionlib_tutorials/Tutorials/Writing%20a%20Simple%20Action%20Client%20%28Python%29) that sends `RobotMoveGoal` commands to the Niryo One for execution. |

|||

|

|||

> If you are unfamiliar with ROS actions you can read more about them [here](http://wiki.ros.org/actionlib). |

|||

|

|||

> Commands can also be executed on the Niryo One by publishing `RobotMoveActionGoal` messages directly to the `niryo_one/commander/robot_action/goal` topic. However, the default behavior of the action server allows only one action to be executed at a time, so any new actions received by the action server will replace any currently executing actions. |

|||

|

|||

## RobotMoveGoal Parameters |

|||

|

|||

The `goal` section in the `niryo_one_msgs/action/RobotMove.action` file shows a single field, `RobotMoveCommand`. Then looking at the `RobotMoveCommand` message we see that it is used as a catchall for executing a variety of commands on the robot. |

|||

|

|||

### RobotMoveGoal.RobotMoveCommand |

|||

|

|||

Since we are only interested in executing trajectory and gripper commands we will only focus on the following parameters: |

|||

|

|||

- `int32 cmd_type` |

|||

- Which type of robot command to execute, trajectory or tool command |

|||

- `niryo_one_msgs/TrajectoryPlan Trajectory` |

|||

- The trajectory to be executed |

|||

- `niryo_one_msgs/ToolCommand tool_cmd` |

|||

- The end effector command to be executed |

|||

|

|||

Since the `cmd_type` variable is used to determine which command in the `RobotMoveCommand` message is to be executed, we will need to find the appropriate values to execute trajectory or tool commands. |

|||

|

|||

We can find this information in the `niryo_one_ros/niryo_one_commander/src/niryo_one_commander/command_type.py` file: |

|||

|

|||

```python |

|||

JOINTS = 1 |

|||

POSE = 2 |

|||

POSITION = 3 |

|||

RPY = 4 |

|||

SHIFT_POSE = 5 |

|||

TOOL = 6 |

|||

EXECUTE_TRAJ = 7 |

|||

POSE_QUAT = 8 |

|||

SAVED_POSITION = 9 |

|||

SAVED_TRAJECTORY = 10 |

|||

``` |

|||

|

|||

We can see that the `cmd_type` variable in `RobotMoveCommand` will need to be `7` to execute a trajectory and `6` to execute a tool command. |

|||

|

|||

### RobotMoveGoal.RobotMoveCommand.ToolCommand |

|||

|

|||

To execute an end effector command on the Niryo One, a `ToolCommand` object is required. Much like `RobotMoveCommand`, the `ToolCommand` message type is a catch all for gripper commands as the Niryo One supports a variety of [grippers](https://niryo.com/niryo-one-accessories/). |

|||

|

|||

We are only interested in opening and closing the standard gripper so we will only use the following parameters: |

|||

|

|||

- `uint8 tool_id` |

|||

- Which end effector is being used |

|||

- `uint8 cmd_type` |

|||

- Which command to execute. Ex. open or close |

|||

- `uint16 gripper_close_speed` |

|||

- How fast should the gripper close |

|||

- `uint16 gripper_open_speed` |

|||

- How fast should the gripper open |

|||

|

|||

The `tool_id`s are stored in `niryo_one_ros/niryo_one_bringup/config/v2/niryo_one_motors.yaml` |

|||

|

|||

```yaml |

|||

dxl_authorized_motors: # here include all Dynamixel tools that can possibly be attached to Niryo One |

|||

- 2 # -> id of Axis 4 |

|||

- 3 # -> id of Axis 5 |

|||

- 6 # -> id of Axis 6 |

|||

- 11 # id of Gripper 1 |

|||

- 12 # id of Gripper 2 |

|||

- 13 # id of Gripper 3 |

|||

- 31 # if of Vacuum Pump 1 |

|||

``` |

|||

|

|||

We are using the standard gripper which has an id of 11. |

|||

|

|||

Just like the `cmd_type` parameter in `RobotMoveCommand` we will need to look at `niryo_one_ros/niryo_one_tools/config/end_effectors.yaml` to find the appropriate values to use. |

|||

|

|||

```yaml |

|||

command_list: |

|||

# Gripper |

|||

open_gripper: 1 |

|||

close_gripper: 2 |

|||

# Vacuump pump |

|||

pull_air_vacuum_pump: 10 |

|||

push_air_vacuum_pump: 11 |

|||

# Tools controlled by digital I/Os |

|||

setup_digital_io: 20 |

|||

activate_digital_io: 21 |

|||

deactivate_digital_io: 22 |

|||

|

|||

``` |

|||

|

|||

From here we see that the `RobotMoveGoal.RobotMoveCommand.ToolCommand.cmd_type` variable will need to be `2` to close the gripper and `1` to open it. |

|||

|

|||

|

|||

# Differences From Part 3 |

|||

|

|||

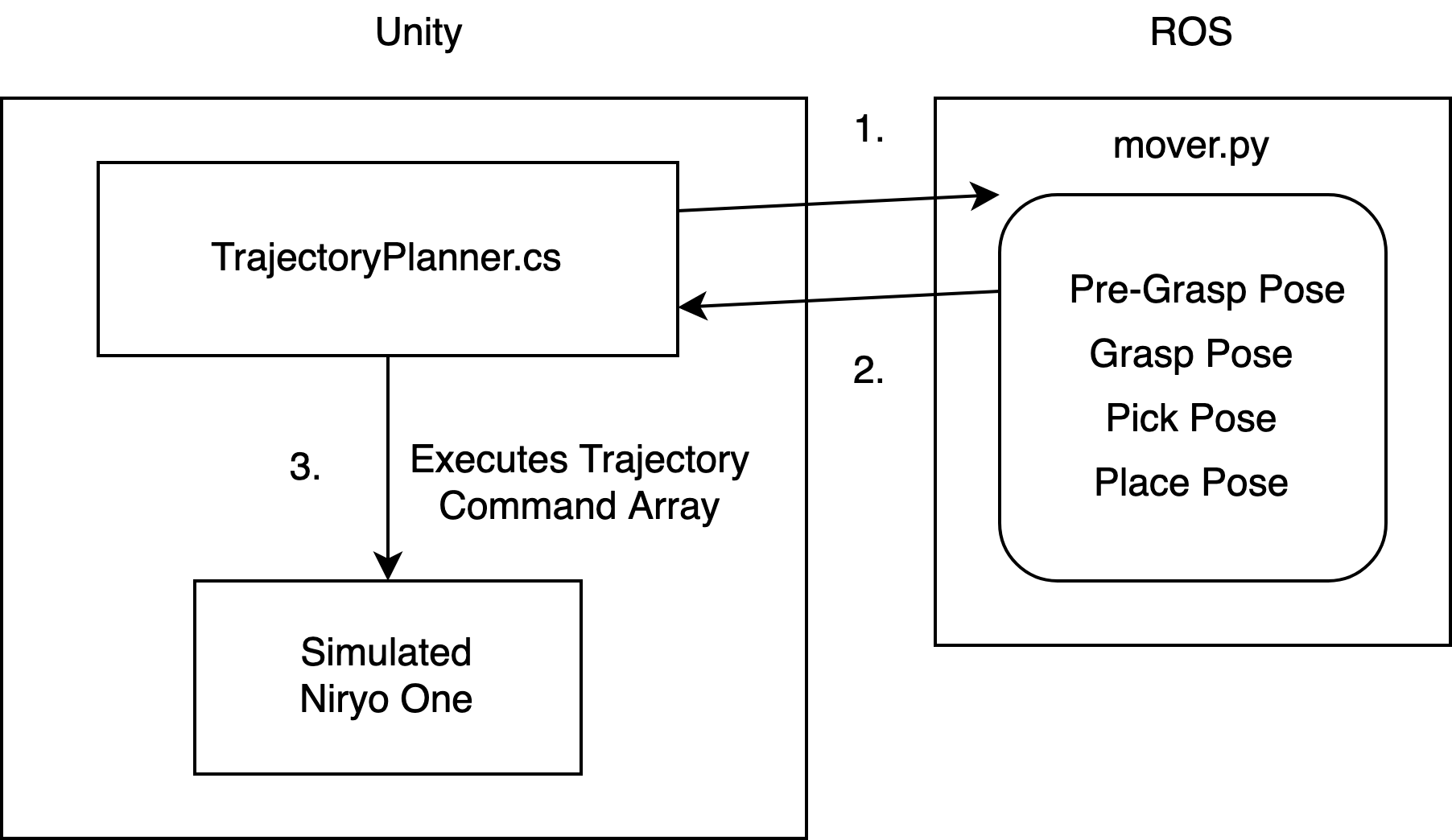

**Part 3 - Purely Simulated - Flow:** |

|||

|

|||

|

|||

|

|||

1. In part 3, we had `TrajectoryPlanner` send a MoverServiceRequest message on the `niryo_moveit` topic. The message contained the robot pose, target cube coordinates, and target destination coordinates |

|||

1. `mover.py` received the message, calculated the four trajectories, added them to an array, and sent back the array in a MoverServiceResponse message to `TrajectoryPlanner`. |

|||

1. `TrajectoryPlanner` then executed the trajectories one at a time on the simulated Niryo One. |

|||

|

|||

**Part 4 - Simulated and Real - Flow:** |

|||

|

|||

|

|||

|

|||

1. Now, `RealSimPickAndPlace.cs` will publish robot pose, target cube coordinates, and target destination coordinates to `sim_real_pnp` topic. |

|||

1. `sim_and_real_pnp.py` will recieve the message, plan the trajectories, and send the action goals one at a time to the `RobotMove` action server. |

|||

|

|||

|

|||

1. **Simultaneously** |

|||

1. The `RobotMove` action server will publish the goal messages along to the `robot_action/goal` topic. |

|||

1. The `RobotMove` action server will execute the trajectory on Niryo One. |

|||

|

|||

1. The simulated Niryo One, `RealSimPickAndPlace.cs`, subscriber will read from `robot_action/goal`, and execute the same commands. |

|||

|

|||

**Note the changes between Part 3 and Part 4::** |

|||

|

|||

- The `MoverService`, `mover.py`, has been replaced with a ROS Subscriber in `sim_and_real_pnp.py` but retains the trajectory-planning capability. |

|||

- An action server, RobotMove, has been introduced to schedule and execute trajectories. |

|||

- Gripper command execution was previously hard-coded in `TrajectoryPlanner.cs` in part 3 but is now being managed by the `RobotMove` action server. |

|||

- Extra poses have been added to make execution of the individual poses more apparent on the physical robot. |

|||

|

|||

|

|||

# The ROS Side |

|||

There are two lines that have not been previously used in `server_endpoint.py` that we will now be using. |

|||

|

|||

- The following line will be used by the simulated Niryo One in our Unity scene to read from the `robot_action/goal` topic and execute the same trajectories as the real Niryo One. |

|||

```python |

|||

'niryo_one/commander/robot_action/goal': RosSubscriber('niryo_one/commander/robot_action/goal', RobotMoveActionGoal, tcp_server), |

|||

``` |

|||

|

|||

- We will be reusing the `MoverServiceRequest` message type to publish the target and target destination poses to ROS from Unity. |

|||

```python |

|||

'sim_real_pnp': RosPublisher('sim_real_pnp', MoverServiceRequest) |

|||

``` |

|||

|

|||

We will also make use of the `sim_and_real_pnp.py` script. It is very similar to `mover.py` used in the previous tutorial with some minor differences. |

|||

|

|||

- Instead of a ROS Service this node is a ROS Subscriber that uses a ROS ActionClient to send goals. |

|||

|

|||

- Two functions have been added to handle the creation of the RobotMoveActionGoal messages. |

|||

|

|||

- To send a trajectory command: |

|||

|

|||

```python |

|||

def send_trajectory_goal(client, trajectory): |

|||

|

|||

# Build the goal |

|||

goal = RobotMoveGoal() |

|||

goal.cmd.Trajectory = trajectory |

|||

goal.cmd.cmd_type = TRAJECTORY_COMMAND_ID |

|||

|

|||

client.send_goal(goal) |

|||

client.wait_for_result() |

|||

|

|||

return |

|||

``` |

|||

|

|||

- To send a gripper command: |

|||

|

|||

```python |

|||

def send_tool_goal(client, gripper_command): |

|||

tool_command = ToolCommand() |

|||

tool_command.tool_id = TOOL_ID |

|||

tool_command.cmd_type = gripper_command |

|||

tool_command.gripper_open_speed = GRIPPER_SPEED |

|||

tool_command.gripper_close_speed = GRIPPER_SPEED |

|||

|

|||

goal = RobotMoveGoal() |

|||

goal.cmd.tool_cmd = tool_command |

|||

goal.cmd.cmd_type = TOOL_COMMAND_ID |

|||

|

|||

client.send_goal(goal) |

|||

client.wait_for_result() |

|||

|

|||

return |

|||

``` |

|||

|

|||

- The `pick_and_place` function has been updated to call the two previous functions instead of appending the trajectory or gripper command to a list. |

|||

|

|||

- Example from `mover.py` |

|||

|

|||

```python |

|||

previous_ending_joint_angles = grasp_pose.joint_trajectory.points[-1].positions |

|||

response.trajectories.append(grasp_pose) |

|||

``` |

|||

|

|||

- Updated code in `sim_real_pnp.py` |

|||

|

|||

```python |

|||

previous_ending_joint_angles = grasp_pose.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, grasp_pose) |

|||

``` |

|||

|

|||

# The Unity Side |

|||

Using the same scene from [Part 3](3_pick_and_place.md), we are going to use a new script, `RealSimPickAndPlace.cs`, that mirrors a lot of the functionality of the `TrajectoryPlanner.cs` script. |

|||

|

|||

|

|||

## Key Differences |

|||

Instead of calling a service and waiting for the response to execute a robot command, we will subscribe to the `niryo_one/commander/robot_action/goal` topic and execute each command we receive. |

|||

|

|||

- A subscriber is created on Start to read the robot command messages and execute them as they are received. |

|||

|

|||

```csharp |

|||

void Start() |

|||

{ |

|||

ros.Subscribe<RobotMoveActionGoal>(rosRobotCommandsTopicName, ExecuteRobotCommands); |

|||

} |

|||

``` |

|||

|

|||

- The subscriber callback mimics the robot commander code in the Niryo One ROS stack and determines whether a trajectory or tool command was issued. |

|||

|

|||

```csharp |

|||

void ExecuteRobotCommands(RobotMoveActionGoal robotAction) |

|||

{ |

|||

if (robotAction.goal.cmd.cmd_type == TRAJECTORY_COMMAND_EXECUTION) |

|||

{ |

|||

StartCoroutine(ExecuteTrajectories(robotAction.goal.cmd.Trajectory.trajectory)); |

|||

} |

|||

else if (robotAction.goal.cmd.cmd_type == TOOL_COMMAND_EXECUTION) |

|||

{ |

|||

if (robotAction.goal.cmd.tool_cmd.cmd_type == OPEN_GRIPPER) |

|||

{ |

|||

Debug.Log("Open Tool Command"); |

|||

OpenGripper(); |

|||

} |

|||

else if (robotAction.goal.cmd.tool_cmd.cmd_type == CLOSE_GRIPPER) |

|||

{ |

|||

Debug.Log("Close Tool Command"); |

|||

CloseGripper(); |

|||

} |

|||

} |

|||

} |

|||

``` |

|||

|

|||

- The ExecuteTrajectories function has been updated to accept a single RobotTrajectory object and execute the robot poses one at a time: |

|||

|

|||

```csharp |

|||

private IEnumerator ExecuteTrajectories(RobotTrajectory trajectories) |

|||

{ |

|||

// For every robot pose in trajectory plan |

|||

foreach (var point in trajectories.joint_trajectory.points) |

|||

{ |

|||

var jointPositions = point.positions; |

|||

float[] result = jointPositions.Select(r=> (float)r * Mathf.Rad2Deg).ToArray(); |

|||

|

|||

// Set the joint values for every joint |

|||

for (int joint = 0; joint < jointArticulationBodies.Length; joint++) |

|||

{ |

|||

var joint1XDrive = jointArticulationBodies[joint].xDrive; |

|||

joint1XDrive.target = result[joint]; |

|||

jointArticulationBodies[joint].xDrive = joint1XDrive; |

|||

} |

|||

// Wait for robot to achieve pose for all joint assignments |

|||

yield return new WaitForSeconds(JOINT_ASSIGNMENT_WAIT); |

|||

} |

|||

} |

|||

``` |

|||

|

|||

|

|||

- The `PublishJoints()` function's only change is that the message is published to a topic instead of being sent as a ServiceMessage. |

|||

|

|||

`ros.Send(rosJointPublishTopicName, request);` |

|||

|

|||

|

|||

## Setting Up The Unity Scene |

|||

1. In Unity, Select Robotics -> ROS Settings from the top menu bar and update the ROS IP Address to that of the Niryo One. |

|||

|

|||

1. Select the Publisher GameObject and add the `RealSimPickAndPlace` script as a component. |

|||

|

|||

1. Note that the RealSimPickAndPlace component shows its member variables in the Inspector window, which need to be assigned. |

|||

|

|||

Once again, drag and drop the `Target` and `TargetPlacement` objects onto the Target and Target Placement Inspector fields, respectively. Assign the `niryo_one` robot to the Niryo One field. |

|||

|

|||

|

|||

|

|||

1. Select the previously made Button object in Canvas/Button, and scroll to see the Button component. Under the `OnClick()` header, click the dropdown where it is currently assigned to the SourceDestinationPublisher.Publish(). Replace this call with RealSimPickAndPlace > `PublishJoints()`. |

|||

|

|||

|

|||

|

|||

1. The Unity side is now ready to communicate with ROS on the Niryo One! |

|||

|

|||

|

|||

# Setting Up Niryo One |

|||

|

|||

> An easy way to find the Niryo One's IP address is to connect to it using the Niryo One Studio application |

|||

|

|||

## Update Niryo One URDFs |

|||

> The world-space origin of the Niryo One is defined in its URDF file. The provided Niryo One URDF used in the previous tutorials has already been updated to reflect the position of the simulated Niryo One situated on top of the table. In order to have accurate trajectory planning the URDF files on the real Niryo One will need to be updated to reflect the simulated robot's position. |

|||

|

|||

- The two files that will need to be updated are `niryo_one.urdf.xacro` and `without_mesh_niryo_one.urdf.xacro` located in the `/home/niryo/catkin_ws/src/niryo_one_description/urdf/v2` directory. |

|||

- Look for the joint named `joint_world` and update the `origin`'s `xyz` to `0 0 0.63` to reflect that the simulated Niryo is placed at `0.63` on the Z axis. |

|||

|

|||

```xml |

|||

<joint name="joint_world" type="fixed"> |

|||

<parent link="world" /> |

|||

<child link="base_link" /> |

|||

<origin xyz="0 0 0.63" rpy="0 0 0" /> |

|||

</joint> |

|||

``` |

|||

|

|||

## Add niryo_moveit Package |

|||

The Niryo One ROS stack is already installed on the robot and only the `niryo_moveit` package will need to be added. |

|||

|

|||

1. Update the `niryo_moveit/config/params.yml` file's `ROS_IP` parameter to match that of the Niryo One. |

|||

|

|||

1. Copy the `niryo_moveit` package to the `catkin_ws` directory on the Niryo One's catkin workspace at `/home/niryo/catkin_ws` and run the `catkin_make` command. |

|||

|

|||

> Using the SCP command to transfer the `niryo_moveit` package might look something like, `scp -r ~/PATH/TO/niryo_moveit niryo@NIRYO_IP_ADDRESS:/home/niryo/catkin_ws/src` |

|||

|

|||

# Execution |

|||

1. Use the `part_4.launch` file to setup the ROS params and start the `server_endpoint` and `sim_real_pnp` scripts. |

|||

- `roslaunch niryo_moveit part_4.launch` |

|||

|

|||

1. Return to the Unity Editor and press Play. Press the UI Button to send the joint configurations to ROS on the Niryo One, and watch the robot arm move simultaneously in simulation and real life! |

|||

|

|||

|

|||

<img src="img/4_pick_and_place.gif" width="400"/> |

|||

|

|||

<launch> |

|||

<rosparam file="$(find niryo_moveit)/config/params.yaml" command="load"/> |

|||

<node name="server_endpoint" pkg="niryo_moveit" type="server_endpoint.py" args="--wait" output="screen" respawn="true" /> |

|||

<node name="sim_real_pnp" pkg="niryo_moveit" type="sim_real_pnp.py" args="--wait" output="screen"/> |

|||

</launch> |

|||

|

|||

#!/usr/bin/env python |

|||

|

|||

from __future__ import print_function |

|||

|

|||

import rospy |

|||

|

|||

import time |

|||

import sys |

|||

import copy |

|||

import math |

|||

import moveit_commander |

|||

import actionlib |

|||

|

|||

import moveit_msgs.msg |

|||

|

|||

from niryo_one_msgs.msg import RobotMoveAction, ToolCommand, TrajectoryPlan |

|||

from niryo_one_msgs.msg import RobotMoveGoal |

|||

|

|||

from moveit_msgs.msg import Constraints, JointConstraint, PositionConstraint, OrientationConstraint, BoundingVolume |

|||

from sensor_msgs.msg import JointState |

|||

from moveit_msgs.msg import RobotState |

|||

import geometry_msgs.msg |

|||

from geometry_msgs.msg import Quaternion, Pose |

|||

from std_msgs.msg import String |

|||

from moveit_commander.conversions import pose_to_list |

|||

|

|||

from niryo_moveit.srv import MoverServiceRequest |

|||

|

|||

joint_names = ['joint_1', 'joint_2', 'joint_3', 'joint_4', 'joint_5', 'joint_6'] |

|||

|

|||

# Gripper parameters |

|||

OPEN_COMMAND = 1 |

|||

CLOSE_COMMAND = 2 |

|||

GRIPPER_SPEED = 100 |

|||

|

|||

# IDs used to determine which values in a RobotMoveGoal message to execute |

|||

TOOL_ID = 11 |

|||

TOOL_COMMAND_ID = 6 |

|||

TRAJECTORY_COMMAND_ID = 7 |

|||

|

|||

PICK_PLACE_HEIGHT_OFFSET = 0.065 |

|||

|

|||

# Between Melodic and Noetic, the return type of plan() changed. moveit_commander has no __version__ variable, so checking the python version as a proxy |

|||

if sys.version_info >= (3, 0): |

|||

def planCompat(plan): |

|||

return plan[1] |

|||

else: |

|||

def planCompat(plan): |

|||

return plan |

|||

|

|||

""" |

|||

Given the start angles of the robot, plan a trajectory that ends at the destination pose. |

|||

""" |

|||

def plan_trajectory(move_group, destination_pose, start_joint_angles): |

|||

current_joint_state = JointState() |

|||

current_joint_state.name = joint_names |

|||

current_joint_state.position = start_joint_angles |

|||

|

|||

moveit_robot_state = RobotState() |

|||

moveit_robot_state.joint_state = current_joint_state |

|||

move_group.set_start_state(moveit_robot_state) |

|||

|

|||

move_group.set_pose_target(destination_pose) |

|||

|

|||

if not move_group.plan(): |

|||

exception_str = """ |

|||

Trajectory could not be planned for a destination of {}\n with starting joint angles {}. |

|||

Please make sure target and destination are reachable by the robot. |

|||

""".format(destination_pose, start_joint_angles) |

|||

raise Exception(exception_str) |

|||

|

|||

trajectory_plan = TrajectoryPlan() |

|||

trajectory_plan.trajectory = planCompat(move_group.plan()) |

|||

|

|||

return trajectory_plan |

|||

|

|||

|

|||

def send_trajectory_goal(client, trajectory): |

|||

|

|||

# Build the goal |

|||

goal = RobotMoveGoal() |

|||

goal.cmd.Trajectory = trajectory |

|||

goal.cmd.cmd_type = TRAJECTORY_COMMAND_ID |

|||

|

|||

client.send_goal(goal) |

|||

client.wait_for_result() |

|||

|

|||

return |

|||

|

|||

def send_tool_goal(client, gripper_command): |

|||

tool_command = ToolCommand() |

|||

tool_command.tool_id = TOOL_ID |

|||

tool_command.cmd_type = gripper_command |

|||

tool_command.gripper_open_speed = GRIPPER_SPEED |

|||

tool_command.gripper_close_speed = GRIPPER_SPEED |

|||

|

|||

goal = RobotMoveGoal() |

|||

goal.cmd.tool_cmd = tool_command |

|||

goal.cmd.cmd_type = TOOL_COMMAND_ID |

|||

|

|||

client.send_goal(goal) |

|||

client.wait_for_result() |

|||

|

|||

return |

|||

|

|||

|

|||

""" |

|||

Creates a pick and place plan using the four states below. |

|||

|

|||

1. Pre Grasp - position gripper directly above target object |

|||

2. Grasp - lower gripper so that fingers are on either side of object |

|||

3. Pick Up - raise gripper back to the pre grasp position |

|||

4. Place - move gripper to desired placement position |

|||

|

|||

Gripper behaviour is handled outside of this trajectory planning. |

|||

- Gripper close occurs after 'grasp' position has been achieved |

|||

- Gripper open occurs after 'place' position has been achieved |

|||

|

|||

https://github.com/ros-planning/moveit/blob/master/moveit_commander/src/moveit_commander/move_group.py |

|||

""" |

|||

def plan_pick_and_place(req): |

|||

print("Starting planning....") |

|||

client = actionlib.SimpleActionClient('niryo_one/commander/robot_action', RobotMoveAction) |

|||

client.wait_for_server() |

|||

|

|||

group_name = "arm" |

|||

move_group = moveit_commander.MoveGroupCommander(group_name) |

|||

|

|||

current_robot_joint_configuration = [ |

|||

math.radians(req.joints_input.joint_00), |

|||

math.radians(req.joints_input.joint_01), |

|||

math.radians(req.joints_input.joint_02), |

|||

math.radians(req.joints_input.joint_03), |

|||

math.radians(req.joints_input.joint_04), |

|||

math.radians(req.joints_input.joint_05), |

|||

] |

|||

|

|||

# Pre grasp - position gripper directly above target object |

|||

pre_grasp_pose = plan_trajectory(move_group, req.pick_pose, current_robot_joint_configuration) |

|||

|

|||

previous_ending_joint_angles = pre_grasp_pose.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, pre_grasp_pose) |

|||

|

|||

# Grasp - lower gripper so that fingers are on either side of object |

|||

pick_pose = copy.deepcopy(req.pick_pose) |

|||

pick_pose.position.z -= PICK_PLACE_HEIGHT_OFFSET # Static value coming from Unity, TODO: pass along with request |

|||

grasp_pose = plan_trajectory(move_group, pick_pose, previous_ending_joint_angles) |

|||

|

|||

previous_ending_joint_angles = grasp_pose.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, grasp_pose) |

|||

send_tool_goal(client, CLOSE_COMMAND) |

|||

|

|||

# Pick Up - raise gripper back to the pre grasp position |

|||

pick_up_pose = plan_trajectory(move_group, req.pick_pose, previous_ending_joint_angles) |

|||

previous_ending_joint_angles = pick_up_pose.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, pick_up_pose) |

|||

|

|||

# Place - move gripper to desired placement position |

|||

pre_place_pose = plan_trajectory(move_group, req.place_pose, previous_ending_joint_angles) |

|||

previous_ending_joint_angles = pre_place_pose.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, pre_place_pose) |

|||

|

|||

# Gently Place object |

|||

place_pose = copy.deepcopy(req.place_pose) |

|||

place_pose.position.z -= PICK_PLACE_HEIGHT_OFFSET # Static value coming from Unity, TODO: pass along with request |

|||

place_traj = plan_trajectory(move_group, place_pose, previous_ending_joint_angles) |

|||

previous_ending_joint_angles = place_traj.trajectory.joint_trajectory.points[-1].positions |

|||

send_trajectory_goal(client, place_traj) |

|||

send_tool_goal(client, OPEN_COMMAND) |

|||

|

|||

# Reset robot at pre place pose pose |

|||

post_place_traj = plan_trajectory(move_group, req.place_pose, previous_ending_joint_angles) |

|||

send_trajectory_goal(client, post_place_traj) |

|||

|

|||

print("Finished executing action.") |

|||

|

|||

def listener(): |

|||

rospy.init_node('sim_real_pnp', anonymous=True) |

|||

|

|||

rospy.Subscriber("sim_real_pnp", MoverServiceRequest, plan_pick_and_place) |

|||

|

|||

rospy.spin() |

|||

|

|||

if __name__ == '__main__': |

|||

listener() |

|||

|

|||

using System.Collections; |

|||

using System.Linq; |

|||

using ROSGeometry; |

|||

using RosMessageTypes.Moveit; |

|||

using RosMessageTypes.NiryoMoveit; |

|||

using RosMessageTypes.NiryoOne; |

|||

using UnityEngine; |

|||

using Pose = RosMessageTypes.Geometry.Pose; |

|||

using RosQuaternion = RosMessageTypes.Geometry.Quaternion; |

|||

|

|||

public class RealSimPickAndPlace : MonoBehaviour |

|||

{ |

|||

private const int OPEN_GRIPPER = 1; |

|||

private const int CLOSE_GRIPPER = 2; |

|||

private const int TOOL_COMMAND_EXECUTION = 6; |

|||

private const int TRAJECTORY_COMMAND_EXECUTION = 7; |

|||

|

|||

private ROSConnection ros; |

|||

private const int NUM_ROBOT_JOINTS = 6; |

|||

|

|||

// Hardcoded variables

|

|||

private const float JOINT_ASSIGNMENT_WAIT = 0.038f; |

|||

private readonly Vector3 PICK_POSE_OFFSET = Vector3.up * 0.15f; |

|||

|

|||

// Assures that the gripper is always positioned above the target cube before grasping.

|

|||

private readonly Quaternion pickOrientation = Quaternion.Euler(90, 90, 0); |

|||

|

|||

// Variables required for ROS communication

|

|||

public string rosJointPublishTopicName = "sim_real_pnp"; |

|||

public string rosRobotCommandsTopicName = "niryo_one/commander/robot_action/goal"; |

|||

|

|||

public GameObject niryoOne; |

|||

public GameObject target; |

|||

public GameObject targetPlacement; |

|||

|

|||

// Articulation Bodies

|

|||

private ArticulationBody[] jointArticulationBodies; |

|||

private ArticulationBody leftGripperJoint; |

|||

private ArticulationBody rightGripperJoint; |

|||

|

|||

private Transform leftGripperGameObject; |

|||

private Transform rightGripperGameObject; |

|||

|

|||

/// <summary>

|

|||

/// Close the gripper

|

|||

/// </summary>

|

|||

private void CloseGripper() |

|||

{ |

|||

var leftDrive = leftGripperJoint.xDrive; |

|||

var rightDrive = rightGripperJoint.xDrive; |

|||

|

|||

leftDrive.target = 0.01f; |

|||

rightDrive.target = -0.01f; |

|||

|

|||

leftGripperJoint.xDrive = leftDrive; |

|||

rightGripperJoint.xDrive = rightDrive; |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// Open the gripper

|

|||

/// </summary>

|

|||

private void OpenGripper() |

|||

{ |

|||

var leftDrive = leftGripperJoint.xDrive; |

|||

var rightDrive = rightGripperJoint.xDrive; |

|||

|

|||

leftDrive.target = -0.01f; |

|||

rightDrive.target = 0.01f; |

|||

|

|||

leftGripperJoint.xDrive = leftDrive; |

|||

rightGripperJoint.xDrive = rightDrive; |

|||

} |

|||

|

|||

|

|||

/// <summary>

|

|||

/// Publish the robot's current joint configuration, the target object's

|

|||

/// position and rotation, and the target placement for the target object's

|

|||

/// position and rotation.

|

|||

///

|

|||

/// Includes conversion from Unity coordinates to ROS coordinates, Forward Left Up

|

|||

/// </summary>

|

|||

public void PublishJoints() |

|||

{ |

|||

MoverServiceRequest request = new MoverServiceRequest |

|||

{ |

|||

joints_input = |

|||

{ |

|||

joint_00 = jointArticulationBodies[0].xDrive.target, |

|||

joint_01 = jointArticulationBodies[1].xDrive.target, |

|||

joint_02 = jointArticulationBodies[2].xDrive.target, |

|||

joint_03 = jointArticulationBodies[3].xDrive.target, |

|||

joint_04 = jointArticulationBodies[4].xDrive.target, |

|||

joint_05 = jointArticulationBodies[5].xDrive.target |

|||

}, |

|||

pick_pose = new Pose |

|||

{ |

|||

position = (target.transform.position + PICK_POSE_OFFSET).To<FLU>(), |

|||

// The hardcoded x/z angles assure that the gripper is always positioned above the target cube before grasping.

|

|||

orientation = Quaternion.Euler(90, target.transform.eulerAngles.y, 0).To<FLU>() |

|||

}, |

|||

place_pose = new Pose |

|||

{ |

|||

position = (targetPlacement.transform.position + PICK_POSE_OFFSET).To<FLU>(), |

|||

orientation = pickOrientation.To<FLU>() |

|||

} |

|||

}; |

|||

|

|||

ros.Send(rosJointPublishTopicName, request); |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// Find all robot joints in Awake() and add them to the jointArticulationBodies array.

|

|||

/// Find left and right finger joints and assign them to their respective articulation body objects.

|

|||

/// </summary>

|

|||

void Awake() |

|||

{ |

|||

jointArticulationBodies = new ArticulationBody[NUM_ROBOT_JOINTS]; |

|||

string shoulder_link = "world/base_link/shoulder_link"; |

|||

jointArticulationBodies[0] = niryoOne.transform.Find(shoulder_link).GetComponent<ArticulationBody>(); |

|||

|

|||

string armLink = shoulder_link + "/arm_link"; |

|||

jointArticulationBodies[1] = niryoOne.transform.Find(armLink).GetComponent<ArticulationBody>(); |

|||

|

|||

string elbowLink = armLink + "/elbow_link"; |

|||

jointArticulationBodies[2] = niryoOne.transform.Find(elbowLink).GetComponent<ArticulationBody>(); |

|||

|

|||

string forearmLink = elbowLink + "/forearm_link"; |

|||

jointArticulationBodies[3] = niryoOne.transform.Find(forearmLink).GetComponent<ArticulationBody>(); |

|||

|

|||

string wristLink = forearmLink + "/wrist_link"; |

|||

jointArticulationBodies[4] = niryoOne.transform.Find(wristLink).GetComponent<ArticulationBody>(); |

|||

|

|||

string handLink = wristLink + "/hand_link"; |

|||

jointArticulationBodies[5] = niryoOne.transform.Find(handLink).GetComponent<ArticulationBody>(); |

|||

|

|||

// Find left and right fingers

|

|||

string rightGripper = handLink + "/tool_link/gripper_base/servo_head/control_rod_right/right_gripper"; |

|||

string leftGripper = handLink + "/tool_link/gripper_base/servo_head/control_rod_left/left_gripper"; |

|||

|

|||

leftGripperGameObject = niryoOne.transform.Find(leftGripper); |

|||

rightGripperGameObject = niryoOne.transform.Find(rightGripper); |

|||

|

|||

rightGripperJoint = rightGripperGameObject.GetComponent<ArticulationBody>(); |

|||

leftGripperJoint = leftGripperGameObject.GetComponent<ArticulationBody>(); |

|||

} |

|||

|

|||

void Start() |

|||

{ |

|||

ros.Subscribe<RobotMoveActionGoal>(rosRobotCommandsTopicName, ExecuteRobotCommands); |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// Execute robot commands receved from ROS Subscriber.

|

|||

/// Gripper commands will be executed immeditately wihle trajectories will be

|

|||

/// executed in a coroutine.

|

|||

/// </summary>

|

|||

/// <param name="robotAction"> RobotMoveActionGoal of trajectory or gripper commands</param>

|

|||

void ExecuteRobotCommands(RobotMoveActionGoal robotAction) |

|||

{ |

|||

if (robotAction.goal.cmd.cmd_type == TRAJECTORY_COMMAND_EXECUTION) |

|||

{ |

|||

StartCoroutine(ExecuteTrajectories(robotAction.goal.cmd.Trajectory.trajectory)); |

|||

} |

|||

else if (robotAction.goal.cmd.cmd_type == TOOL_COMMAND_EXECUTION) |

|||

{ |

|||

if (robotAction.goal.cmd.tool_cmd.cmd_type == OPEN_GRIPPER) |

|||

{ |

|||

Debug.Log("Open Tool Command"); |

|||

OpenGripper(); |

|||

} |

|||

else if (robotAction.goal.cmd.tool_cmd.cmd_type == CLOSE_GRIPPER) |

|||

{ |

|||

Debug.Log("Close Tool Command"); |

|||

CloseGripper(); |

|||

} |

|||

} |

|||

} |

|||

|

|||

/// <summary>

|

|||

/// Execute trajectories from RobotMoveActionGoal topic.

|

|||

///

|

|||

/// Execution will iterate through every robot pose in the trajectory pose

|

|||

/// array while updating the joint values on the simulated robot.

|

|||

///

|

|||

/// </summary>

|

|||

/// <param name="trajectories"> The array of poses for the robot to execute</param>

|

|||

private IEnumerator ExecuteTrajectories(RobotTrajectory trajectories) |

|||

{ |

|||

// For every robot pose in trajectory plan

|

|||

foreach (var point in trajectories.joint_trajectory.points) |

|||

{ |

|||

var jointPositions = point.positions; |

|||

float[] result = jointPositions.Select(r=> (float)r * Mathf.Rad2Deg).ToArray(); |

|||

|

|||

// Set the joint values for every joint

|

|||

for (int joint = 0; joint < jointArticulationBodies.Length; joint++) |

|||

{ |

|||

var joint1XDrive = jointArticulationBodies[joint].xDrive; |

|||

joint1XDrive.target = result[joint]; |

|||

jointArticulationBodies[joint].xDrive = joint1XDrive; |

|||

} |

|||

// Wait for robot to achieve pose for all joint assignments

|

|||

yield return new WaitForSeconds(JOINT_ASSIGNMENT_WAIT); |

|||

} |

|||

} |

|||

} |

|||

1001

tutorials/pick_and_place/img/4_button.png

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

1001

tutorials/pick_and_place/img/4_script.png

文件差异内容过多而无法显示

查看文件

文件差异内容过多而无法显示

查看文件

部分文件因为文件数量过多而无法显示

撰写

预览

正在加载...

取消

保存

Reference in new issue